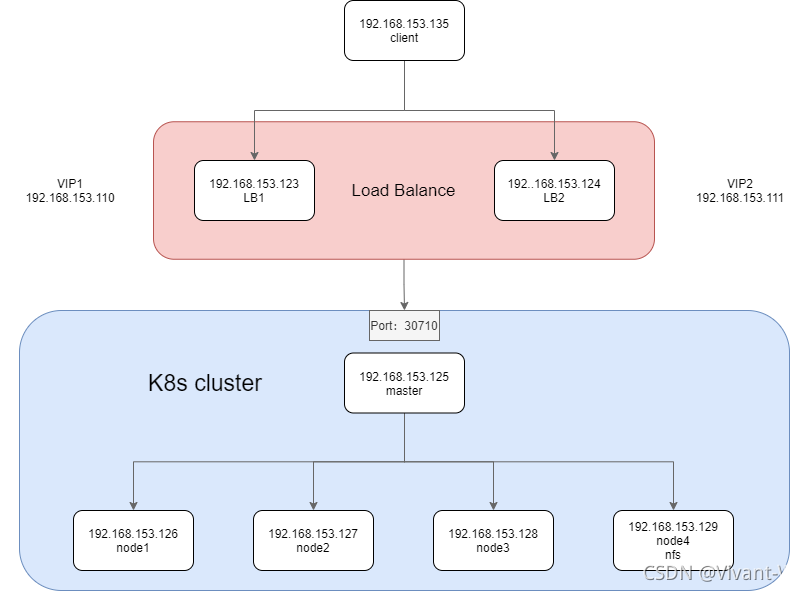

Planning

| IP | Role |
|---|---|
| 192.168.153.110 | VIP1 |
| 192.168.153.111 | VIP2 |
| 192.168.153.123 | LB1 |
| 192.168.153.124 | LB2 |
| 192.168.153.125 | master |
| 192.168.153.126 | node1 |
| 192.168.153.127 | node2 |
| 192.168.153.128 | node3 |
| 192.168.153.129 | node4, NFS server |
| 192.168.153.135 | client |

Planning diagram

Building the k8s cluster

Ansible configuration

vim /etc/ansible/hosts

[k8s]

192.168.153.126

192.168.153.127

192.168.153.125

192.168.153.128

192.168.153.129

[master]

192.168.153.125

[node]

192.168.153.126

192.168.153.127

192.168.153.128

192.168.153.129

Passwordless SSH

Run on the master node:

ssh-keygen

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.153.125

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.153.126

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.153.127

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.153.128

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.153.129
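The five `ssh-copy-id` calls above can be collapsed into a loop. A minimal sketch, assuming bash on the master; the host list is copied from the planning table, and `DRY_RUN` is a hypothetical switch added only so the loop can be previewed without contacting the hosts.

```bash
#!/usr/bin/env bash
# Hosts from the planning table (master + node1..node4).
K8S_HOSTS=(192.168.153.125 192.168.153.126 192.168.153.127 192.168.153.128 192.168.153.129)

# copy_keys: push the master's public key to every host in K8S_HOSTS.
# DRY_RUN (hypothetical, this sketch only): when non-empty, print the commands instead.
copy_keys() {
  local ip
  for ip in "${K8S_HOSTS[@]}"; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo "ssh-copy-id -i $HOME/.ssh/id_rsa.pub root@$ip"
    else
      ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$ip"
    fi
  done
}
```

After `ssh-keygen`, run `DRY_RUN=1 copy_keys` to check the list, then `copy_keys` to copy the key.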

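Set hostnames

Set a hostname on the master and on each node; every hostname must be unique. For example, on the master:

vim /etc/hostname

master

(Use node1 to node4 on the other machines, as in the planning table. On CentOS 7, `hostnamectl set-hostname master` writes the same file and applies the name without a reboot.)

Add the name mappings to /etc/hosts with a script:

vim hosts.sh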

cat >> /etc/hosts <<EOF

192.168.153.125 master

192.168.153.126 node1

192.168.153.127 node2

192.168.153.128 node3

192.168.153.129 node4

EOF
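Running `hosts.sh` twice appends the block twice, because `cat >>` does not check what is already in /etc/hosts. A minimal idempotent variant, assuming bash and awk; `add_host` and `add_all_hosts` are hypothetical helper names, and the target file is a parameter so the logic can be tried on a copy first.

```bash
#!/usr/bin/env bash
# add_host FILE IP NAME: append "IP NAME" to FILE unless a line already maps IP to NAME.
add_host() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 when a line has IP as its first field and NAME as one of the later fields.
  if ! awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit (found ? 0 : 1) }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# add_all_hosts [FILE]: the same entries as hosts.sh; FILE defaults to /etc/hosts.
add_all_hosts() {
  local file=${1:-/etc/hosts}
  add_host "$file" 192.168.153.125 master
  add_host "$file" 192.168.153.126 node1
  add_host "$file" 192.168.153.127 node2
  add_host "$file" 192.168.153.128 node3
  add_host "$file" 192.168.153.129 node4
}
```

Calling `add_all_hosts` any number of times leaves exactly one line per host.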

Let iptables see bridged traffic

Write the following script:

vim bri-change.sh

# Load the br_netfilter module

modprobe br_netfilter

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system

Run the script on every k8s host:

ansible k8s -m script -a './bri-change.sh'

Check the result:

ansible k8s -m shell -a 'cat /etc/sysctl.d/k8s.conf'

192.168.153.128 | CHANGED | rc=0 >>

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

192.168.153.126 | CHANGED | rc=0 >>

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

192.168.153.129 | CHANGED | rc=0 >>

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

192.168.153.125 | CHANGED | rc=0 >>

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

192.168.153.127 | CHANGED | rc=0 >>

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
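Reading /etc/sysctl.d/k8s.conf only proves the file was written, not that the kernel values are live. A minimal sketch that checks the live values instead, assuming bash; `check_bridge_sysctl` is a hypothetical helper, and the /proc/sys root is a parameter so the logic can be tried against a fake directory.

```bash
#!/usr/bin/env bash
# check_bridge_sysctl [ROOT]: verify both bridge-netfilter settings are 1.
# ROOT defaults to /proc/sys; these keys only exist once br_netfilter is loaded.
check_bridge_sysctl() {
  local root=${1:-/proc/sys} key val rc=0
  for key in net/bridge/bridge-nf-call-iptables net/bridge/bridge-nf-call-ip6tables; do
    if [ ! -r "$root/$key" ]; then
      echo "missing: $key (is br_netfilter loaded?)"
      rc=1
      continue
    fi
    val=$(cat "$root/$key")
    if [ "$val" != "1" ]; then
      echo "wrong: $key=$val"
      rc=1
    fi
  done
  return "$rc"
}
```

Across the cluster, `ansible k8s -m shell -a 'sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables'` gives the same information.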

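Install Docker (on every k8s host)

Install the required system tools:

yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Docker CE repository from the Aliyun mirror in mainland China:

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install Docker:

yum install -y docker-ce docker-ce-cli containerd.io

Start Docker and enable it at boot:

systemctl enable --now docker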

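Configure Docker registry mirrors. Overwrite daemon.json with valid JSON, then restart Docker so the setting takes effect:

mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": [
    "https://registry.docker-cn.com",
    "http://hub-mirror.c.163.com",
    "https://docker.mirrors.ustc.edu.cn"
  ]
}
EOF
systemctl restart docker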

Install kubeadm, kubelet and kubectl

Save the following as k8s-repo.sh:

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
enabled=1
EOF

# Set SELinux to permissive mode (effectively disabling it)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

sudo yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0
sudo systemctl enable --now kubelet

Then run it on every k8s host:

ansible k8s -m script -a './k8s-repo.sh'

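Initialize the master

Run on the master (192.168.153.125):

kubeadm init \
  --apiserver-advertise-address=192.168.153.125 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.18.0 \
  --service-cidr=10.1.0.0/16 \
  --pod-network-cidr=10.244.0.0/16

Set --apiserver-advertise-address to the master's own IP address. The pod network 10.244.0.0/16 matches the default network in flannel's kube-flannel.yml.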

Start using the cluster (set up kubectl for the current user):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Save the join command that kubeadm init prints at the end; the nodes need it to join:

kubeadm join 192.168.153.125:6443 --token z8weg1.pz9jz431d9gfndmu \

--discovery-token-ca-cert-hash sha256:b7ba775a440aa595a1c5e3691291d8e2325d535c7397681cbc436e850ea94974
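The bootstrap token in this command expires (after 24 hours by default), so nodes added later need a fresh one. `kubeadm token create --print-join-command` prints a complete join command; the sketch below, assuming bash on the master, saves it to a file. `save_join_cmd` and the default file name `join.sh` are hypothetical.

```bash
#!/usr/bin/env bash
# save_join_cmd [FILE]: create a new bootstrap token and save the full
# "kubeadm join ..." command to FILE (default ./join.sh).
save_join_cmd() {
  local file=${1:-./join.sh} cmd
  cmd=$(kubeadm token create --print-join-command) || return 1
  # Sanity check: refuse to save anything that is not a join command.
  case $cmd in
    "kubeadm join "*) ;;
    *) echo "unexpected output: $cmd" >&2; return 1 ;;
  esac
  printf '%s\n' "$cmd" > "$file"
}
```

Copy the saved file to the new node and run it there, e.g. `sh join.sh`.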

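Deploy the CNI network (flannel). The sed command swaps the flannel image in the manifest for the lizhenliang/flannel:v0.12.0-amd64 copy on Docker Hub.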

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.12.0-amd64#g" kube-flannel.yml

kubectl apply -f kube-flannel.yml

Wait a moment for the pods to come up, then check the node status:

kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready master 34m v1.18.0

Join the nodes

Run the join command saved above on each node:

kubeadm join 192.168.153.125:6443 --token z8weg1.pz9jz431d9gfndmu \

--discovery-token-ca-cert-hash sha256:b7ba775a440aa595a1c5e3691291d8e2325d535c7397681cbc436e850ea94974

On the master, check that the nodes have joined:

kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready master 42m v1.18.0

node1 Ready <none> 68s v1.18.0

node2 Ready <none> 66s v1.18.0

node3 Ready <none> 64s v1.18.0

node4 Ready <none> 62s v1.18.0
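Eyeballing `kubectl get node` is fine for five nodes; in a script, a check that fails unless every node reports `Ready` is handier. A minimal sketch assuming bash and awk; `all_nodes_ready` is a hypothetical helper that reads the table on stdin.

```bash
#!/usr/bin/env bash
# all_nodes_ready: read `kubectl get node` output on stdin, print every node whose
# STATUS column is not exactly "Ready", and return non-zero if there was any.
# The first line (the header) is skipped.
all_nodes_ready() {
  awk 'NR > 1 && $2 != "Ready" { print "not ready: " $1; bad = 1 }
       END { exit (bad ? 1 : 0) }'
}
```

Usage: `kubectl get node | all_nodes_ready`. To block until the nodes are ready instead, `kubectl wait --for=condition=Ready node --all --timeout=300s` does the waiting on the API side.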

Set up the NFS server (192.168.153.129)

Install the packages the NFS server needs:

yum install -y rpcbind nfs-utils

Create the shared directory and the exports file:

mkdir /nfs_data
echo "/nfs_data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

Start the NFS services:

systemctl enable rpcbind
systemctl enable nfs-server
systemctl start rpcbind
systemctl start nfs-server
exportfs -r

Check that the export is active:

exportfs

/nfs_data <world>

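Test NFS from a client

Install nfs-utils on every k8s node that will mount NFS (in practice, every node that may run the nginx pods):

yum install -y nfs-utils

Check that the server is exporting the directory:

showmount -e 192.168.153.129

Export list for 192.168.153.129:
/nfs_data *

Mount it:

mkdir /mnt/nfsmount
mount -t nfs 192.168.153.129:/nfs_data /mnt/nfsmount

Check that the mount works. On the client:

echo "hello nfs server" > /mnt/nfsmount/test.txt

On the NFS server:

cat /nfs_data/test.txt

hello nfs server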
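The echo/cat test above can be scripted so that it compares the contents automatically and removes its test file afterwards. A minimal sketch assuming bash; `nfs_roundtrip` is a hypothetical helper, and reading the server side over ssh relies on the passwordless SSH configured earlier.

```bash
#!/usr/bin/env bash
# nfs_roundtrip CLIENT_DIR SERVER_DIR [SSH_TARGET]
# Write a unique token through the client mount, read it back from the export
# (over ssh when SSH_TARGET is given, otherwise locally), compare, then clean up.
nfs_roundtrip() {
  local client_dir=$1 server_dir=$2 target=${3:-}
  local name token got
  name="nfs-test-$$-$RANDOM.txt"
  token="hello nfs server $(date +%s)"
  printf '%s\n' "$token" > "$client_dir/$name" || return 1
  if [ -n "$target" ]; then
    got=$(ssh "$target" cat "$server_dir/$name")
  else
    got=$(cat "$server_dir/$name")
  fi
  rm -f "$client_dir/$name"
  if [ "$got" = "$token" ]; then
    echo "ok"
  else
    echo "mismatch: got '$got'" >&2
    return 1
  fi
}
```

On a client: `nfs_roundtrip /mnt/nfsmount /nfs_data root@192.168.153.129`. Passing the same local directory twice exercises the logic without NFS.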

Deploy the nginx service

Method: Helm

Expose the service through a NodePort and store the nginx data on the NFS server.

Install Helm

wget https://get.helm.sh/helm-v3.3.1-linux-amd64.tar.gz

tar -zxvf helm-v3.3.1-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/

Check the Helm version:

helm version

version.BuildInfo{Version:"v3.3.1", GitCommit:"249e5215cde0c3fa72e27eb7a30e8d55c9696144", GitTreeState:"clean", GoVersion:"go1.14.7"}

Helm chart layout

tree

.
├── Chart.yaml
├── README.md
├── templates
│   ├── configmap1.yaml
│   ├── configmap2.yaml
│   ├── deployment.yaml
│   ├── _helpers.tpl
│   ├── NOTES.txt
│   ├── pvc.yaml
│   ├── pv.yaml
│   └── service.yaml
└── values.yaml

Helm chart contents

Chart.yaml

apiVersion: v2

name: nginx

description: A Helm chart for Kubernetes

# A chart can be either an 'application' or a 'library' chart.

#

# Application charts are a collection of templates that can be packaged into versioned archives

# to be deployed.

#

# Library charts provide useful utilities or functions for the chart developer. They're included as

# a dependency of application charts to inject those utilities and functions into the rendering

# pipeline. Library charts do not define any templates and therefore cannot be deployed.

type: application

# This is the chart version. This version number should be incremented each time you make changes

# to the chart and its templates, including the app version.

# Versions are expected to follow Semantic Versioning (https://semver.org/)

version: 0.1.0

# This is the version number of the application being deployed. This version number should be

# incremented each time you make changes to the application. Versions are not expected to

# follow Semantic Versioning. They should reflect the version the application is using.

appVersion: latest

deployment.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: {{ .Release.Name }}
  namespace: {{ .Release.Namespace }}
  labels:
    {{- include "nginx.labels" . | nindent 4 }}
    dce.daocloud.io/component: {{ include "nginx.fullname" . }}
    dce.daocloud.io/app: {{ include "nginx.fullname" . }}
spec:
  selector:
    matchLabels:
      {{- include "nginx.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      name: {{ .Release.Name }}
      labels:
        {{- include "nginx.selectorLabels" . | nindent 8 }}
    spec:
      volumes:
        - name: {{ .Values.configmap1.name }}
          configMap:
            name: {{ .Values.configmap1.name }}
            defaultMode: 420
        - name: {{ .Values.configmap2.name }}
          configMap:
            name: {{ .Values.configmap2.name }}
            items:
              - key: nginx.conf
                path: nginx.conf
            defaultMode: 420
        - name: {{ .Values.nfs.name }}
          persistentVolumeClaim:
            claimName: {{ .Release.Name }}-pvc
      containers:
        - name: {{ .Release.Name }}
          image: "{{ .Values.image.repository }}:{{ .Chart.AppVersion }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          volumeMounts:
            - name: {{ .Values.configmap1.name }}
              mountPath: {{ .Values.volumemount.configmap1.path }}
            - name: {{ .Values.configmap2.name }}
              mountPath: {{ .Values.volumemount.configmap2.path }}
              subPath: {{ .Values.volumemount.configmap2.subPath }}
            - name: {{ .Values.nfs.name }}
              mountPath: {{ .Values.nfs.mountpath }}
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
      {{- with .Values.affinity }}
      affinity:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.tolerations }}
      tolerations:
        {{- toYaml . | nindent 8 }}
      {{- end }}

values.yaml

# Default values for nginx.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

image:
  repository: nginx
  pullPolicy: IfNotPresent
  # No tag here: deployment.yaml takes the image tag from appVersion in Chart.yaml.

imagePullSecrets: []
nameOverride: ""
fullnameOverride: ""

service:
  type: NodePort

resources:
  # We usually recommend not to specify default resources and to leave this as a conscious
  # choice for the user. This also increases chances charts run on environments with little
  # resources, such as Minikube. If you do want to specify resources, uncomment the following
  # lines, adjust them as necessary, and remove the curly braces after 'resources:'.
  limits:
    cpu: 100m
    memory: 128Mi
  requests:
    cpu: 100m
    memory: 128Mi

tolerations: []

affinity: {}

# Capacity of the PV and PVC
storage: 10Gi
# PV access mode
accessModes: ReadWriteOnce

nfs:
  name: nginx-data
  # NFS protocol version (passed to the PV as the nfsvers mount option)
  version: 4.1
  # Path inside the container where the volume is mounted
  mountpath: /usr/share/nginx/html
  # Existing exported path on the NFS server
  path: /nfs_data
  # NFS server address
  server: 192.168.153.129
  pv:
    # Reclaim policy
    persistentVolumeReclaimPolicy: Retain

configmap1:
  # Volume name for conf.d (lowercase letters, digits and '-' only)
  name: nginx-conf-d

configmap2:
  # Volume name for nginx.conf (lowercase letters, digits and '-' only)
  name: nginx-conf
  # Path of nginx.conf
  path: /etc/nginx/nginx.conf

volumemount:
  # Mount path of the conf.d volume
  configmap1:
    path: /etc/nginx/conf.d
  # Mount path of the nginx.conf volume
  configmap2:
    path: /etc/nginx/nginx.conf
    subPath: nginx.conf

service.yaml

apiVersion: v1
kind: Service
metadata:
  name: {{ .Release.Name }}
  labels:
    {{- include "nginx.labels" . | nindent 4 }}
    dce.daocloud.io/component: {{ include "nginx.fullname" . }}
    dce.daocloud.io/app: {{ include "nginx.fullname" . }}
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
      name: http
  selector:
    {{- include "nginx.selectorLabels" . | nindent 4 }}
  type: NodePort

configmap1.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: {{ .Values.configmap1.name }}
data:
  # Each key becomes a file under /etc/nginx/conf.d; the name must end in .conf
  # to be picked up by "include /etc/nginx/conf.d/*.conf" in nginx.conf.
  default.conf: |
    server {
        listen 80;
        server_name localhost;
        charset utf-8;
        #access_log /var/log/nginx/log/host.access.log main;

        location / {
            root /usr/share/nginx/html;
            index index.html index.htm;
        }

        #error_page 404 /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root /usr/share/nginx/html;
        }
    }

configmap2.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: {{ .Values.configmap2.name }}
data:
  nginx.conf: |
    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log;
    pid /run/nginx.pid;

    # Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
    include /usr/share/nginx/modules/*.conf;

    events {
        worker_connections 1024;
    }

    http {
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';

        access_log /var/log/nginx/access.log main;

        sendfile on;
        # tcp_nopush on;
        # tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 4096;

        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        # Load modular configuration files from the /etc/nginx/conf.d directory.
        # See http://nginx.org/en/docs/ngx_core_module.html#include
        # for more information.
        include /etc/nginx/conf.d/*.conf;

        server {
            listen 80;
            listen [::]:80;
            server_name _;

            location / {
                root /usr/share/nginx/html;
                index index.html index.htm;
            }

            # Load configuration files for the default server block.
            include /etc/nginx/default.d/*.conf;

            error_page 404 /404.html;
            location = /favicon.ico {
                log_not_found off;
            }
            location = /404.html {
            }

            error_page 500 502 503 504 /50x.html;
            location = /50x.html {
            }
        }
    }

pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .Values.nfs.name }}-pv
spec:
  capacity:
    storage: {{ .Values.storage }}
  accessModes:
    - {{ .Values.accessModes }}
  storageClassName: nfs
  persistentVolumeReclaimPolicy: {{ .Values.nfs.pv.persistentVolumeReclaimPolicy }}
  mountOptions:
    - hard
    - nfsvers={{ .Values.nfs.version }}
  nfs:
    path: {{ .Values.nfs.path }}
    server: {{ .Values.nfs.server }}

pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: {{ .Release.Name }}-pvc
spec:
  accessModes:
    - {{ .Values.accessModes }}
  storageClassName: nfs
  volumeMode: Filesystem
  resources:
    requests:
      storage: {{ .Values.storage }}
  volumeName: {{ .Values.nfs.name }}-pv
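Because these templates are indentation-sensitive YAML, it pays to render the chart locally before installing it. `helm lint .` and `helm template nginx .` are standard Helm 3 commands; the hypothetical helper below, assuming bash and awk, summarizes which object kinds a rendered manifest contains so you can confirm every template produced output.

```bash
#!/usr/bin/env bash
# manifest_kinds: read a rendered multi-document manifest on stdin and print
# "COUNT KIND" for each top-level kind (lines starting with "kind:"), sorted by kind.
manifest_kinds() {
  awk '/^kind:/ { print $2 }' | sort | uniq -c | awk '{ print $1, $2 }'
}
```

For this chart, `helm lint . && helm template nginx . | manifest_kinds` should list 2 ConfigMap, 1 Deployment, 1 PersistentVolume, 1 PersistentVolumeClaim and 1 Service.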

Install nginx

helm install nginx .

Check the result:

kubectl get po -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6848cfd5b8-gdttn 1/1 Running 0 12h 10.244.2.4 node2 <none> <none>

nginx-6848cfd5b8-mmj2s 1/1 Running 0 12h 10.244.1.5 node1 <none> <none>

kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 37h

nginx NodePort 10.1.147.111 <none> 80:31982/TCP 12h
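The Service does not pin a `nodePort`, so Kubernetes assigned one (31982 in the output above), and the load-balancer upstream has to use that port. A minimal sketch for looking it up, assuming bash and kubectl on the master; `nginx_nodeport` is a hypothetical helper built on kubectl's JSONPath output.

```bash
#!/usr/bin/env bash
# nginx_nodeport [SERVICE]: print the NodePort assigned to the service's first port.
nginx_nodeport() {
  local svc=${1:-nginx} port
  port=$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  # Reject empty or non-numeric output (e.g. a ClusterIP-only service).
  case $port in
    ''|*[!0-9]*) echo "no nodePort for service $svc" >&2; return 1 ;;
  esac
  echo "$port"
}
```

Usage: `curl "http://192.168.153.126:$(nginx_nodeport)/"`. Alternatively, setting `nodePort:` under the port in service.yaml (inside the NodePort range, 30000-32767 by default) keeps the port stable across reinstalls, so the LB configuration never has to change.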

Access the service through any node's IP and the NodePort, e.g. http://192.168.153.126:31982/.

Dual VIPs with keepalived

Deploy keepalived

Install keepalived

Install keepalived on both load balancers (192.168.153.123 and 192.168.153.124):

yum install keepalived -y

Check the version:

keepalived --version

Keepalived v1.3.5 (03/19,2017), git commit v1.3.5-6-g6fa32f2

Configure keepalived (/etc/keepalived/keepalived.conf)

LB1 (192.168.153.123): MASTER for VIP1, BACKUP for VIP2

! Configuration File for keepalived

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    # vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_script chk_nginx {
    script "/etc/keepalived/nginx_check.sh"
    interval 2
    weight -20
}

vrrp_instance VI_1 {
    state MASTER
    interface ens192
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        192.168.153.110
    }
}

vrrp_instance VI_2 {
    state BACKUP
    interface ens192
    virtual_router_id 52
    priority 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        192.168.153.111
    }
}

LB2 (192.168.153.124): BACKUP for VIP1, MASTER for VIP2

! Configuration File for keepalived

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    # vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

vrrp_script chk_nginx {
    script "/etc/keepalived/nginx_check.sh"
    interval 2
    weight -20
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens192
    virtual_router_id 51
    priority 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        192.168.153.110
    }
}

vrrp_instance VI_2 {
    state MASTER
    interface ens192
    virtual_router_id 52
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        192.168.153.111
    }
}
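With a negative `weight`, a failing `chk_nginx` (non-zero exit) lowers the node's VRRP priority by that amount. The priorities in these two configs show why `weight -20` on its own never moves a VIP: the MASTER drops from 100 to 80, which is still above the BACKUP's 50. In this setup, failover really comes from the check script stopping keepalived (or from the whole LB going down); a weight larger than the gap, such as -60, would also work. A small arithmetic sketch, assuming bash; the helper names are hypothetical, only the negative-weight case is modeled, and equal priorities (resolved by IP address) are not.

```bash
#!/usr/bin/env bash
# effective_priority BASE WEIGHT CHECK_FAILED(0|1): priority after the track script runs.
# Only negative weights are modeled: a failed check subtracts |WEIGHT|.
effective_priority() {
  local base=$1 weight=$2 failed=$3
  if [ "$failed" -eq 1 ] && [ "$weight" -lt 0 ]; then
    echo $((base + weight))
  else
    echo "$base"
  fi
}

# failover_happens MASTER_PRIO BACKUP_PRIO WEIGHT: prints "yes" if the backup's
# priority is higher than the master's once the master's check has failed.
failover_happens() {
  local m
  m=$(effective_priority "$1" "$3" 1)
  if [ "$2" -gt "$m" ]; then echo yes; else echo no; fi
}
```

`failover_happens 100 50 -20` prints `no`, while `failover_happens 100 50 -60` prints `yes`.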

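Write the nginx health-check script

keepalived runs this script periodically to check nginx. If nginx has stopped, the script tries to start it again; if that fails, it stops keepalived so the VIP moves to the backup LB.

The file name must match the script path in keepalived.conf exactly (nginx_check.sh, with an underscore):

vim nginx_check.sh

#!/bin/sh
# Count running nginx processes; 0 means nginx is down.
A=$(ps -C nginx --no-header | wc -l)
if [ "$A" -eq 0 ]; then
    # Try to start nginx once (adjust the path to where nginx is installed on the LB).
    /usr/sbin/nginx
    sleep 1
    A2=$(ps -C nginx --no-header | wc -l)
    if [ "$A2" -eq 0 ]; then
        # nginx could not be started: stop keepalived so the VIP fails over.
        systemctl stop keepalived
    fi
fi

Copy it into place and make it executable:

cp nginx_check.sh /etc/keepalived/
chmod +x /etc/keepalived/nginx_check.sh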

Start keepalived on both LBs:

[root@localhost ~]# systemctl start keepalived

[root@localhost ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Wed 2021-09-08 20:15:14 HKT; 1s ago

Process: 9234 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 9235 (keepalived)

CGroup: /system.slice/keepalived.service

├─9235 /usr/sbin/keepalived -D

├─9236 /usr/sbin/keepalived -D

└─9237 /usr/sbin/keepalived -D

Sep 08 20:15:14 localhost.localdomain Keepalived_vrrp[9237]: WARNING - default user 'keepalived_script' for script execution does not exist - please create.

Sep 08 20:15:14 localhost.localdomain Keepalived_vrrp[9237]: Unable to access script `/etc/keepalived/nginx_check.sh`

Sep 08 20:15:14 localhost.localdomain Keepalived_vrrp[9237]: Disabling track script chk_nginx since not found

Sep 08 20:15:14 localhost.localdomain Keepalived_vrrp[9237]: VRRP_Instance(VI_1) removing protocol VIPs.

Sep 08 20:15:14 localhost.localdomain Keepalived_vrrp[9237]: VRRP_Instance(VI_2) removing protocol VIPs.

Sep 08 20:15:14 localhost.localdomain Keepalived_vrrp[9237]: Using LinkWatch kernel netlink reflector...

Sep 08 20:15:14 localhost.localdomain Keepalived_vrrp[9237]: VRRP_Instance(VI_1) Entering BACKUP STATE

Sep 08 20:15:14 localhost.localdomain Keepalived_vrrp[9237]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Sep 08 20:15:14 localhost.localdomain Keepalived_healthcheckers[9236]: Opening file '/etc/keepalived/keepalived.conf'.

Sep 08 20:15:15 localhost.localdomain Keepalived_vrrp[9237]: VRRP_Instance(VI_2) Transition to MASTER STATE

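In the status output above, keepalived could not access /etc/keepalived/nginx_check.sh and therefore disabled the chk_nginx track script; the script must exist at exactly that path and be executable. The warning about the `keepalived_script` user is harmless (scripts then run as root) and can be silenced by adding `script_user root` to global_defs.

Check the IP addresses on both LB servers: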

LB1

ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a8:b0:b5 brd ff:ff:ff:ff:ff:ff

inet 192.168.153.123/24 brd 192.168.153.255 scope global noprefixroute ens192

valid_lft forever preferred_lft forever

inet 192.168.153.110/32 scope global ens192

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea8:b0b5/64 scope link

valid_lft forever preferred_lft forever

LB2

ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a8:42:11 brd ff:ff:ff:ff:ff:ff

inet 192.168.153.124/24 brd 192.168.153.255 scope global noprefixroute ens192

valid_lft forever preferred_lft forever

inet 192.168.153.111/32 scope global ens192

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea8:4211/64 scope link

valid_lft forever preferred_lft forever

Each VIP is held by its MASTER: VIP1 (192.168.153.110) on LB1 and VIP2 (192.168.153.111) on LB2.

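Deploy nginx on the load balancers

Install nginx on both LBs.

The original setup loads nginx's stream module here. Strictly, stream is only needed for layer-4 (TCP/UDP) load balancing in a stream {} block; the HTTP upstream/proxy_pass configuration below relies on http modules that nginx builds by default.

After installing, check the nginx version:

nginx -v

nginx version: nginx/1.19.9

Edit the nginx configuration (these blocks go inside the http block):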

upstream myweb {
    least_conn;
    server 192.168.153.125:30710;
    server 192.168.153.126:30710;
    server 192.168.153.127:30710;
    server 192.168.153.128:30710;
    server 192.168.153.129:30710;
}

server {
    listen 80;
    server_name localhost;

    #charset koi8-r;
    #access_log logs/host.access.log main;

    location / {
        proxy_pass http://myweb;
        root html;
        index index.html index.htm;
    }
}

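Add the upstream block, then add the proxy in the location block:

proxy_pass http://myweb;

The port in the server lines must be the NodePort of the nginx Service. The kubectl get svc output earlier shows 31982, while this configuration uses 30710, so use whichever port your Service was actually assigned.

After editing, test the configuration and reload nginx:

nginx -t && nginx -s reload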
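Since the upstream list has to follow both the node IPs and the NodePort, it can be generated instead of edited by hand. A minimal sketch assuming bash; `gen_upstream` is a hypothetical helper, and its output is meant to be pasted into (or included from) the http block of the LB's nginx configuration.

```bash
#!/usr/bin/env bash
# gen_upstream NAME PORT IP...: print an nginx upstream block that balances
# across IP:PORT for every given IP, using least_conn.
gen_upstream() {
  local name=$1 port=$2 ip
  shift 2
  printf 'upstream %s {\n    least_conn;\n' "$name"
  for ip in "$@"; do
    printf '    server %s:%s;\n' "$ip" "$port"
  done
  printf '}\n'
}
```

For example, `gen_upstream myweb 31982 192.168.153.12{5..9}` prints the block above with the port reported by `kubectl get svc`; run `nginx -t` before reloading.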

From the client, access port 80 on both VIPs:

# curl 192.168.153.110:80

hello world

# curl 192.168.153.111:80

hello world
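To test both VIPs in one go (and to rerun the same check during the failover test), a minimal sketch assuming bash and curl on the client; `check_vips` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# check_vips VIP...: request http://VIP/ from each address and report the result.
check_vips() {
  local vip rc=0 body
  for vip in "$@"; do
    # -f: treat HTTP errors as failures; -sS: no progress bar, but show errors;
    # --max-time: give up quickly on a VIP that nobody holds.
    if body=$(curl -fsS --max-time 3 "http://$vip/"); then
      echo "$vip OK: $body"
    else
      echo "$vip FAILED"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_vips 192.168.153.110 192.168.153.111`; it returns non-zero if either VIP does not answer.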

Simulate an LB1 failure

Stop keepalived on LB1:

systemctl stop keepalived

Check the IP addresses on LB2:

ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:a8:42:11 brd ff:ff:ff:ff:ff:ff

inet 192.168.153.124/24 brd 192.168.153.255 scope global noprefixroute ens192

valid_lft forever preferred_lft forever

inet 192.168.153.111/32 scope global ens192

valid_lft forever preferred_lft forever

inet 192.168.153.110/32 scope global ens192

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fea8:4211/64 scope link

valid_lft forever preferred_lft forever

VIP1 (192.168.153.110) has moved to LB2, which now holds both VIPs.

Troubleshooting

Broken k8s yum repository

Issue: yum could not use the k8s repository:

failure: repodata/repomd.xml from kubernetes: [Errno 256] No more mirrors to try.

https://mirrors.tuna.tsinghua.edu.cn/kubernetes/yum/repos/kubernetes-el7-/repodata/repomd.xml: [Errno 14] HTTPS Error 404 - Not Found

Public key for db7cb5cb0b3f6875f54d10f02e625573988e3e91fd4fc5eef0b1876bb18604ad-kubernetes-cni-0.8.7-0.x86_64.rpm is not installed

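Fix: switch to the Aliyun mirror. The 404 URL ends in kubernetes-el7- with no architecture, most likely because $basearch in the repo file was expanded to an empty string by the shell inside the unquoted here-document; the repo below spells out x86_64 instead.

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
enabled=1
EOF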

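Image pull failure

Issue: pulling an image failed (this happened during an attempt with Kubernetes v1.22.0, as the image list below shows):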

[ERROR ImagePull]: failed to pull image registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4: output: Error response from daemon: manifest for registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4 not found: manifest unknown: manifest unknown

Fix: pull the image from another registry and tag it with the name kubeadm expects:

docker pull coredns/coredns:1.8.4

docker tag coredns/coredns:1.8.4 registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4

docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.22.0 838d692cbe28 3 weeks ago 128MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.22.0 5344f96781f4 3 weeks ago 122MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.22.0 3db3d153007f 3 weeks ago 52.7MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.22.0 bbad1636b30d 3 weeks ago 104MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.5.0-0 004811815584 2 months ago 295MB

coredns/coredns 1.8.4 8d147537fb7d 3 months ago 47.6MB

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns v1.8.4 8d147537fb7d 3 months ago 47.6MB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.5 ed210e3e4a5b 5 months ago 683kB
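The pull-and-retag fix can be wrapped so that it works from image names rather than a copied image ID. A minimal sketch assuming bash and the Docker CLI; `pull_and_retag` is a hypothetical helper, and the example names are the ones from this error.

```bash
#!/usr/bin/env bash
# pull_and_retag SOURCE TARGET: pull SOURCE from a reachable registry and tag it
# as TARGET, the name kubeadm asks for, so the image is already present locally.
pull_and_retag() {
  local src=$1 dst=$2
  docker pull "$src" || return 1
  docker tag "$src" "$dst"
}
```

Usage: `pull_and_retag coredns/coredns:1.8.4 registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4`, then rerun kubeadm.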

Master NotReady

Issue: the CNI network is not ready

kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 18m v1.18.0

Diagnosis:

- Check the pod status:

kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-6l6g7 0/1 Pending 0 19m

coredns-7ff77c879f-fxjbm 0/1 Pending 0 19m

- Check the logs:

kubectl logs -n kube-system -p coredns-7ff77c879f-6l6g7

There is no log output: the pods are Pending, so no container has started yet.

- Check /var/log/messages:

tail /var/log/messages

Sep 2 22:18:28 master kubelet: W0902 22:18:28.358395 54235 cni.go:237] Unable to update cni config: no networks found in /etc/cni/net.d

Sep 2 22:18:29 master kubelet: E0902 22:18:29.880901 54235 kubelet.go:2187] Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

- Check the events:

kubectl get events -n kube-system

LAST SEEN TYPE REASON OBJECT MESSAGE

2m31s Warning FailedScheduling pod/coredns-7ff77c879f-6l6g7 0/1 nodes are available: 1 node(s) had taint {node.kubernetes.io/not-ready: }, that the pod didn't tolerate.

2m27s Warning FailedScheduling pod/coredns-7ff77c879f-fxjbm 0/1 nodes are available: 1 node(s) had taint {node.kubernetes.io/not-ready: }, that the pod didn't tolerate.

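Fix: deploy the CNI network (flannel, as in the setup steps above). Until a CNI config exists in /etc/cni/net.d, the node stays NotReady and keeps the node.kubernetes.io/not-ready taint, so the coredns pods cannot be scheduled.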

Pod stuck in ContainerCreating

kubectl get po

NAME READY STATUS RESTARTS AGE

nginx-869c7bb664-2fb98 1/1 Running 0 5m54s

nginx-869c7bb664-qlm56 0/1 ContainerCreating 0 5m54s

- Check the logs:

kubectl logs nginx-869c7bb664-qlm56

Error from server (BadRequest): container "nginx" in pod "nginx-869c7bb664-qlm56" is waiting to start: ContainerCreating

This does not show the root cause.

- Check the pod details:

kubectl describe po nginx-869c7bb664-qlm56

Warning FailedMount 2m8s kubelet, node3 MountVolume.SetUp failed for volume "nginx-data-pv" : mount failed: exit status 32

Fix: two of the mounted volumes had the same name.

Each volume needs a unique name, so the Helm chart had to be changed.

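Repeated NFS mount

mount.nfs: Stale file handle

This appears when the mount point still holds an earlier NFS mount whose server-side file handle is no longer valid (for example, after the exported directory was recreated).

Fix: force-unmount that directory, then mount it again:

umount -f /mnt/nfsmount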

wrong fs type, bad option, bad superblock on 192.168.153.129:/mnt/nfsmount

mount: wrong fs type, bad option, bad superblock on 192.168.153.129:/mnt/nfsmount,

missing codepage or helper program, or other error

(for several filesystems (e.g. nfs, cifs) you might

need a /sbin/mount.<type> helper program)

In some cases useful info is found in syslog - try

dmesg | tail or so.

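This error appeared during helm install nginx.

Issue: nfs-utils was not installed on the node, so the /sbin/mount.nfs helper the error asks for was missing.

Fix: install nfs-utils on every node: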

yum install nfs-utils -y