OCR cross-platform engineering: onnxruntime GPU C++ code

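There is little code online showing how to run an OCR model with onnxruntime on a GPU. After some digging, it turns out that all it takes is to initialize the model like this: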

    void DbNet::setNumThread(int numOfThread) {
        numThread = numOfThread;

        //===session options===
        // Sets the number of threads used to parallelize the execution within nodes
        // A value of 0 means ORT will pick a default
        //sessionOptions.SetIntraOpNumThreads(numThread);
        //set OMP_NUM_THREADS=16

        // Sets the number of threads used to parallelize the execution of the graph (across nodes)
        // If sequential execution is enabled this value is ignored
        // A value of 0 means ORT will pick a default
        sessionOptions.SetInterOpNumThreads(numThread);

        // Sets graph optimization level
        // ORT_DISABLE_ALL -> To disable all optimizations
        // ORT_ENABLE_BASIC -> To enable basic optimizations (such as redundant node removals)
        // ORT_ENABLE_EXTENDED -> To enable extended optimizations (includes level 1 + more complex optimizations like node fusions)
        // ORT_ENABLE_ALL -> To enable all possible optimizations
        sessionOptions.SetGraphOptimizationLevel(GraphOptimizationLevel::ORT_ENABLE_EXTENDED);
    }

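    void DbNet::initModel(const std::string &pathStr) {
        // Note: as written, the CUDA execution provider is appended only in the
        // non-Windows branch; the Windows branch creates a default (CPU) session.
    #ifdef _WIN32
        // ONNX Runtime expects a wide-character model path on Windows
        std::wstring dbPath = strToWstr(pathStr);
        session = new Ort::Session(env, dbPath.c_str(), sessionOptions);
    #else
        // Register the CUDA execution provider on device 0 before creating the session
        OrtSessionOptionsAppendExecutionProvider_CUDA(sessionOptions, 0);
        session = new Ort::Session(env, pathStr.c_str(), sessionOptions);
    #endif
        getInputName(session, inputName);
        getOutputName(session, outputName);
    }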

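    OrtSessionOptionsAppendExecutionProvider_CUDA(sessionOptions, 0);

This line runs the model on GPU 0; the second argument is the CUDA device ID.

    sessionOptions.SetInterOpNumThreads(numThread);

This line sets the number of inter-op threads, i.e. the threads used to execute independent graph nodes in parallel. As the comments in the code note, the value is ignored when sequential execution is enabled, and a value of 0 lets ORT pick a default.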

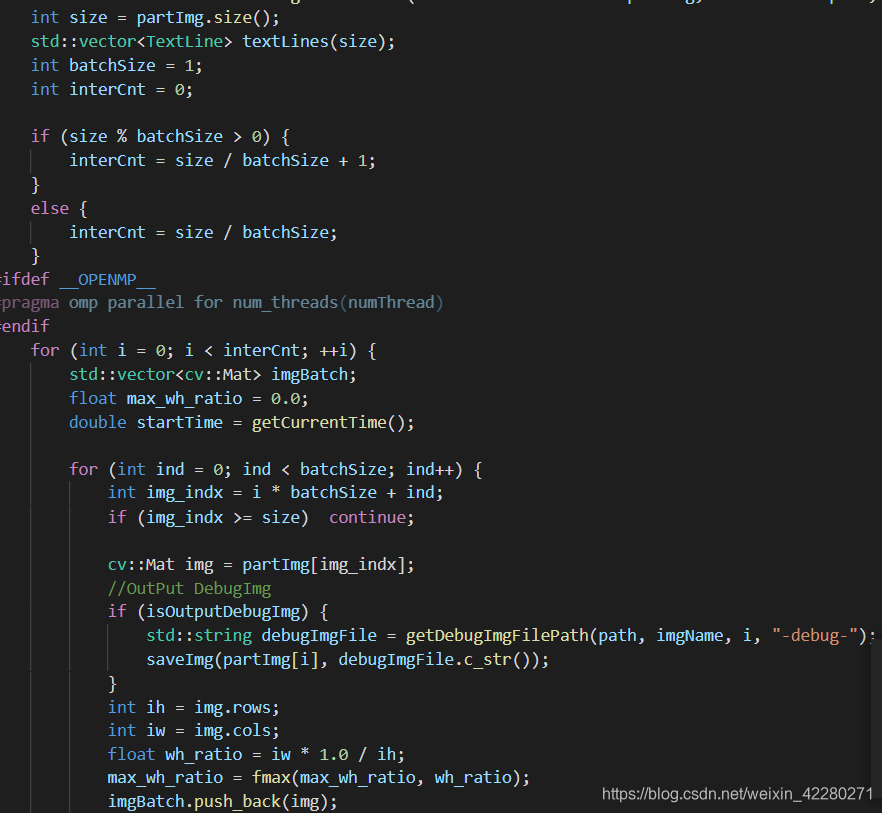

During CRNN recognition, prediction is run in batches.
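
The batching step itself is not shown in this post, so the following is only a minimal sketch of one common way to build a batch, not the project's actual code. It assumes each text-line crop has already been resized to a shared height, normalized, and stored in CHW order; `LineCrop`, `PackedBatch`, and `packBatch` are hypothetical names introduced for illustration. Narrower crops are right-padded to the width of the widest crop so that all of them fit into one contiguous NCHW buffer.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical container for one preprocessed text-line crop.
// Assumption: pixels are already resized to a shared height, normalized,
// and stored in CHW order (channel, then row, then column).
struct LineCrop {
    int channels;
    int height;
    int width;
    std::vector<float> chw;  // size must equal channels * height * width
};

// Hypothetical container for the packed batch.
struct PackedBatch {
    std::array<int64_t, 4> shape;  // {N, C, H, maxW}
    std::vector<float> data;       // contiguous NCHW buffer
};

// Packs crops of equal channel count and height into one NCHW buffer,
// right-padding every row with padValue up to the widest crop.
PackedBatch packBatch(const std::vector<LineCrop>& crops, float padValue = 0.0f) {
    if (crops.empty()) {
        throw std::invalid_argument("packBatch: no crops given");
    }
    const int c = crops[0].channels;
    const int h = crops[0].height;
    if (c <= 0 || h <= 0) {
        throw std::invalid_argument("packBatch: invalid crop dimensions");
    }

    // Validate every crop and find the widest one.
    int maxW = 0;
    for (const LineCrop& crop : crops) {
        if (crop.channels != c || crop.height != h || crop.width <= 0) {
            throw std::invalid_argument("packBatch: crops must share channels and height");
        }
        const std::size_t expected = static_cast<std::size_t>(c) * h * crop.width;
        if (crop.chw.size() != expected) {
            throw std::invalid_argument("packBatch: crop buffer size mismatch");
        }
        maxW = std::max(maxW, crop.width);
    }

    const std::size_t rowsPerCrop = static_cast<std::size_t>(c) * h;
    const std::size_t dstStride = static_cast<std::size_t>(maxW);

    PackedBatch batch;
    batch.shape = {static_cast<int64_t>(crops.size()), c, h, maxW};
    batch.data.assign(crops.size() * rowsPerCrop * dstStride, padValue);

    // Copy each source row to the start of its padded destination row.
    for (std::size_t n = 0; n < crops.size(); ++n) {
        const LineCrop& crop = crops[n];
        const std::size_t srcStride = static_cast<std::size_t>(crop.width);
        for (std::size_t row = 0; row < rowsPerCrop; ++row) {
            const float* src = crop.chw.data() + row * srcStride;
            float* dst = batch.data.data() + (n * rowsPerCrop + row) * dstStride;
            std::copy(src, src + srcStride, dst);
        }
    }
    return batch;
}
```

The resulting `data` and `shape` could then be wrapped with `Ort::Value::CreateTensor<float>` and passed to a single `session->Run` call. Padding changes what the model sees on the right edge of short lines, so the pad value, and whether to group crops of similar width into the same batch, are choices to validate against the model being used.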

The project files for all of this code can be downloaded from my resources.