系列文章目录

文章目录

前言

之前学习了CUDA C编程中动态并行技术, 这里进行总结,以加深理解,并希望能够对大家有所帮助。

一、动态并行概念

之前接触的CUDA C设备函数的调用都是在CPU端进行的,即设备函数的执行在CPU的控制下。自然而然,我们就会想到,设备函数内部可以调用设备函数本身吗?即像CPU上执行的递归函数一样呢,答案是可以,这就是我们要讲的动态并行技术。

CUDA的动态并行允许在GPU设备端直接创建和同步新的GPU内核。通过使用动态并行技术,可以让递归算法更加清晰易懂,也更加容易理解。

有了动态并行,就不用像CPU调用内核函数一样提前定义好网格和块的数量,可以推迟到运行时决定需要在GPU上创建多少个网格和块。简单来说,就是在设备函数中根据应用目的和当前设备函数中的变量来控制该设备函数调用的新的设备函数的块和网格的数量。

参考资料上也提到,动态并行可以动态利用GPU硬件调度器和加载平衡器,并进行调整以适应数据驱动或工作负载。(这里不太理解GPU硬件调度器和加载平衡器,所以动态并行的这个优势没有体会到)。

在GPU端创建工作的能力可以减少在主机和设备间传输执行控制和数据的需求。

二、嵌套执行

在动态并行中,内核执行分为:父母和孩子。父线程、父线程块或父网格启动一个新的网格- 子网格。注意,**子网格必须在父线程、父线程块或父网格完成之前完成。**只有在所有的子网格都完成后,父母才会完成。

设备线程中的网格启动,在线程块间是可见的。这意味着,线程可能与由该线程启动的或由相同线程块中的其他线程启动的子网格同步。**在线程块中,只有当所有线程创建的所有子网格完成之后,线程块的执行才会完成。**如果块中所有线程在所有的子网格完成前就退出了,那么在那些子网格上隐式同步会被触发。

当父母启动一个子网格,父线程块与孩子显示同步后,孩子才开始执行。

父网格和子网格共享相同的全局和常量内存存储, 但有不同的局部内存和共享内存。由于父母和孩子间的弱一致性(这里的弱一致性不太理解),父网格和子网格可以对全局内存并发存取。

有两个时刻,子网格和父线程见到的内存完全相同:子网格开始和子网格完成时。

三、动态并行的限制条件

动态并行只有在计算能力为3.5或更高的设备上才能被支持。

通过动态并行调用的内核不能在物理方面独立的设备上启动。

动态并行的最大嵌套深度限制为24(待验证),个人理解,这是由于内核受限于设备运行时系统需要的内存数量。

为了对每个嵌套层中父网格和子网格间进行同步管理,设备运行时要保留额外的内存。

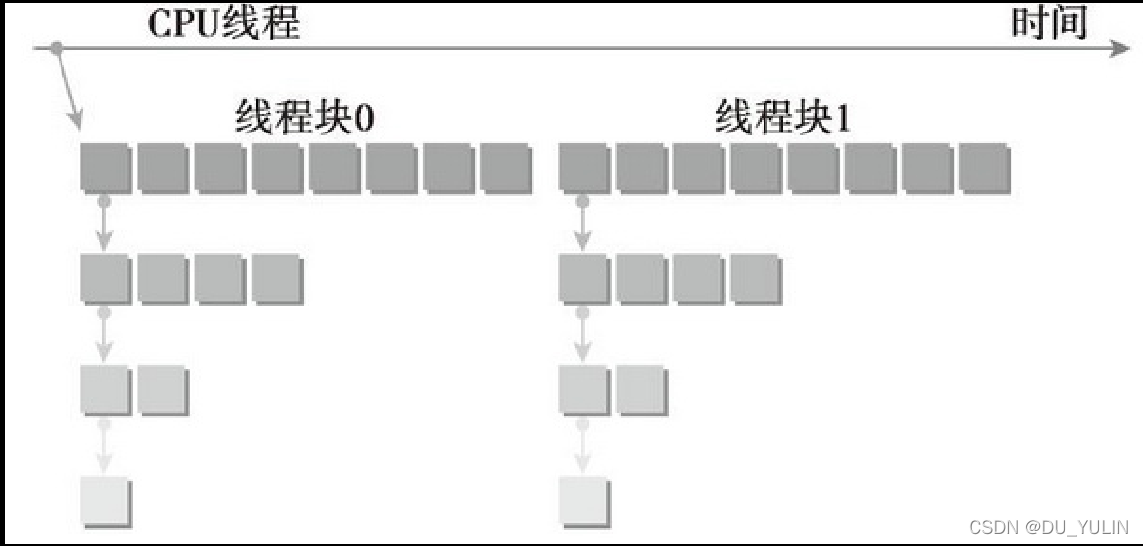

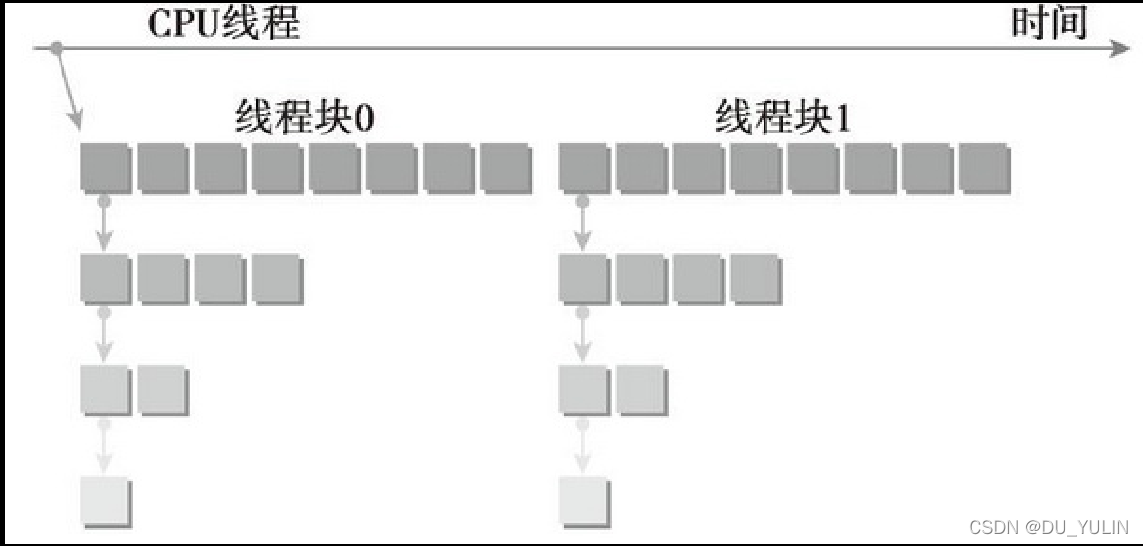

四、嵌套规约

规约就是我们理解的递归,这里介绍以下几种嵌套规约的实现方法:

4.1 基本方法(线程同步及线程块同步)

__global__ void gpuRecursiveReduce(int* g_idata, int* g_odata,

unsigned int isize)

{

unsigned int tid = threadIdx.x;

int* idata = g_idata + blockDim.x * blockIdx.x;

int* odata = &g_odata[blockIdx.x];

if (isize == 2 && tid == 0)

{

g_odata[blockIdx.x] = idata[0] + idata[1];

return;

}

int iStride = isize >> 1;

if (iStride > 1 && tid < iStride)

{

idata[tid] += idata[tid + iStride];

}

//sync at block level

__syncthreads();

//only the thread:tid=0 to generate child grids

if (tid == 0)

{

gpuRecursiveReduce << <1, iStride >> > (idata, odata, iStride);

//sync all child grids launched in this block

cudaDeviceSynchronize();

}

//sync at block level again

__syncthreads();

}

4.2 加速方法(去除线程同步以及线程块同步)

__global__ void gpuRecursiveReduceNosync(int* g_idata, int* g_odata,

__global__ void gpuRecursiveReduceNosync(int* g_idata, int* g_odata,

unsigned int isize)

{

unsigned int tid = threadIdx.x;

int* idata = g_idata + blockDim.x * blockIdx.x;

int* odata = &g_odata[blockIdx.x];

if (isize == 2 && tid == 0)

{

g_odata[blockIdx.x] = idata[0] + idata[1];

return;

}

int iStride = isize >> 1;

if (iStride > 1 && tid < iStride)

{

idata[tid] += idata[tid + iStride];

}

//only the thread:tid=0 to generate child grids

if (tid == 0)

{

gpuRecursiveReduceNosync << <1, iStride >> > (idata, odata, iStride);

}

}

4.3 进一步加速方法(待完善。。。)

__global__ void gpuRecursiveReduce2(int* g_idata, int* g_odata,

__global__ void gpuRecursiveReduce2(int* g_idata, int* g_odata,

unsigned int iStride, unsigned int iDim)

{

unsigned int tid = threadIdx.x;

int* idata = g_idata + iDim * blockIdx.x;

int* odata = &g_odata[blockIdx.x];

if (iStride == 1 && tid == 0)

{

g_odata[blockIdx.x] = idata[0] + idata[1];

return;

}

idata[tid] += idata[tid + iStride];

//only the thread:tid=0&block:blockId=0 to generate child grids

if (tid == 0 && blockIdx.x == 0)

{

gpuRecursiveReduce2 << <gridDim.x, iStride/2 >> > (idata, odata, iStride/2, iDim);

}

}

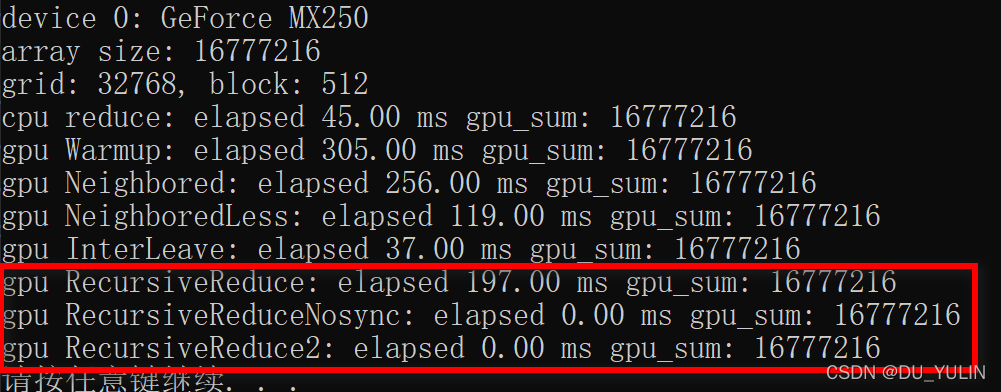

五、运行结果

总结

本人菜鸟一枚,感觉对动态并行编程还不是很通透,以后有更深的理解,再来更新分享吧。

参考资料

《CUDA C编程权威指南》

附录:完整代码

#include <stdio.h>

#include

#include <cuda.h>

#include <cuda_runtime.h>

#include <cuda_runtime_api.h>

#include <device_launch_parameters.h>

#include <device_functions.h>

#include “CudaUtils.h”

//cpu recursive reduce

int recursiveReduce(int* data, const int size)

{

if (size == 1)

{

return data[0];

}

const int stride = size / 2;

// in-place reduction

for (int i = 0; i < stride; i++)

{

data[i] += data[i + stride];

}

//call recursively

return recursiveReduce(data, stride);

}

//accumulate by neighbor elements of array

__global__ void reduceNeighbored(int* g_idata, int* g_odata, unsigned int n)

{

//set thread ID

unsigned int tid = threadIdx.x;

//convert global data pointer to the local pointer of this block

int* idata = g_idata + blockIdx.x * blockDim.x;

//boundary check

if (tid >= n)

return;

// in-place reduction in global memory

for (int stride = 1; stride < blockDim.x; stride *= 2)

{

if (tid % (2 * stride) == 0)

{

idata[tid] += idata[tid + stride];

}

//synchronize within block, wait all threads finish within block

__syncthreads();

}

//write result for this block to global mem

if (tid == 0)

g_odata[blockIdx.x] = idata[0];

}

//accumulate by neighbor elements of array

__global__ void reduceNeighboredLess(int* g_idata, int* g_odata, unsigned int n)

{

//set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = threadIdx.x + blockIdx.x * blockDim.x;

//convert global data pointer to the local pointer of this block

int* idata = g_idata + blockIdx.x * blockDim.x;

//boundary check

if (idx >= n)

return;

// in-place reduction in global memory

for (int stride = 1; stride < blockDim.x; stride *= 2)

{

int index= 2 * stride * tid;

if (index < blockDim.x)

idata[index] += idata[index + stride];

//synchronize within block, wait all threads finish within block

__syncthreads();

}

//write result for this block to global mem

if (tid == 0)

g_odata[blockIdx.x] = idata[0];

}

//accumulate by neighbor elements of array

__global__ void reduceInterLeave(int* g_idata, int* g_odata, unsigned int n)

{

//set thread ID

unsigned int tid = threadIdx.x;

//convert global data pointer to the local pointer of this block

int* idata = g_idata + blockIdx.x * blockDim.x;

//boundary check

if (tid >= n)

return;

// in-place reduction in global memory

for (int stride = blockDim.x / 2; stride > 0; stride >>= 1)

{

if (tid < stride)

idata[tid] += idata[tid + stride];

//synchronize within block, wait all threads finish within block

__syncthreads();

}

//write result for this block to global mem

if (tid == 0)

g_odata[blockIdx.x] = idata[0];

}

__global__ void gpuRecursiveReduce(int* g_idata, int* g_odata,

unsigned int isize)

{

unsigned int tid = threadIdx.x;

int* idata = g_idata + blockDim.x * blockIdx.x;

int* odata = &g_odata[blockIdx.x];

if (isize == 2 && tid == 0)

{

g_odata[blockIdx.x] = idata[0] + idata[1];

return;

}

int iStride = isize >> 1;

if (iStride > 1 && tid < iStride)

{

idata[tid] += idata[tid + iStride];

}

//sync at block level

__syncthreads();

//only the thread:tid=0 to generate child grids

if (tid == 0)

{

gpuRecursiveReduce << <1, iStride >> > (idata, odata, iStride);

//sync all child grids launched in this block

cudaDeviceSynchronize();

}

//sync at block level again

__syncthreads();

}

__global__ void gpuRecursiveReduceNosync(int* g_idata, int* g_odata,

unsigned int isize)

{

unsigned int tid = threadIdx.x;

int* idata = g_idata + blockDim.x * blockIdx.x;

int* odata = &g_odata[blockIdx.x];

if (isize == 2 && tid == 0)

{

g_odata[blockIdx.x] = idata[0] + idata[1];

return;

}

int iStride = isize >> 1;

if (iStride > 1 && tid < iStride)

{

idata[tid] += idata[tid + iStride];

}

//only the thread:tid=0 to generate child grids

if (tid == 0)

{

gpuRecursiveReduceNosync << <1, iStride >> > (idata, odata, iStride);

}

}

__global__ void gpuRecursiveReduce2(int* g_idata, int* g_odata,

unsigned int iStride, unsigned int iDim)

{

unsigned int tid = threadIdx.x;

int* idata = g_idata + iDim * blockIdx.x;

int* odata = &g_odata[blockIdx.x];

if (iStride == 1 && tid == 0)

{

g_odata[blockIdx.x] = idata[0] + idata[1];

return;

}

idata[tid] += idata[tid + iStride];

//only the thread:tid=0&block:blockId=0 to generate child grids

if (tid == 0 && blockIdx.x == 0)

{

gpuRecursiveReduce2 << <gridDim.x, iStride/2 >> > (idata, odata, iStride/2, iDim);

}

}

int main()

{

int nDevId = 0;

cudaDeviceProp stDeviceProp;

cudaGetDeviceProperties(&stDeviceProp, nDevId);

printf("device %d: %s\n", nDevId, stDeviceProp.name);

cudaSetDevice(nDevId);

bool bResult = false;

//initialization

int size = 1 << 24; //total number of elements to reduce

printf("array size: %d \n", size);

//execution configuration

int nBlockSize = 512;// initial block size

dim3 block(nBlockSize, 1);

dim3 grid((size + block.x - 1) / block.x, 1);

printf("grid: %d, block: %d\n", grid.x, block.x);

//allocate host memory

size_t bytes = size * sizeof(int);

int* h_idata = (int*)malloc(bytes);

int* h_odata = (int*)malloc(grid.x * sizeof(int));

int* tmp = (int*)malloc(bytes);

//initialize the array

for (int i = 0; i < size; i++)

{

h_idata[i] = 1/*i % 255*/;

}

memcpy(tmp, h_idata, bytes);

double dElaps;

int nGpuNum = 0;

//allocate device memory

int* d_idata = NULL;

int* d_odata = NULL;

cudaMalloc(&d_idata, bytes);

cudaMalloc(&d_odata, grid.x * sizeof(int));

//cpu reducation

CudaUtils::Time::Start();

int cpu_sum = recursiveReduce(tmp, size);

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

printf("cpu reduce: elapsed %.2f ms gpu_sum: %d\n",

dElaps, cpu_sum);

// kernel 0: warpup -- reduceNeighbored

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

reduceNeighbored << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

size_t gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu Warmup: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

// kernel 1: reduceNeighbored

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

reduceNeighbored << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu Neighbored: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

// kernel 2: reduceNeighboredLess - 减少线程束分化

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

reduceNeighboredLess << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu NeighboredLess: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

// kernel 3: reduceInterLeave - 减少线程束分化

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

reduceInterLeave << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu InterLeave: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

// kernel 4: gpuRecursiveReduce

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

gpuRecursiveReduce << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu RecursiveReduce: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

// kernel 5: gpuRecursiveReduceNosync

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

gpuRecursiveReduceNosync << <grid, block >> > (d_idata, d_odata, size);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu RecursiveReduceNosync: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

// kernel 6: gpuRecursiveReduce2

cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice);

cudaDeviceSynchronize();

CudaUtils::Time::Start();

gpuRecursiveReduce2 << <grid, block >> > (d_idata, d_odata, block.x, block.x);

cudaDeviceSynchronize();

CudaUtils::Time::End();

dElaps = CudaUtils::Time::Duration<CudaUtils::Time::MS>();

cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int), cudaMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++)

gpu_sum += h_odata[i];

printf("gpu RecursiveReduce2: elapsed %.2f ms gpu_sum: %lld\n",

dElaps, gpu_sum);

//free host memory

free(h_idata);

free(h_odata);

//free device memory

cudaFree(d_idata);

cudaFree(d_odata);

system("pause");

return 0;

}