Human pose keypoint algorithms (mmpose, openpose): TensorRT implementation

Introduction

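Human skeleton keypoint detection is the basis of many computer vision tasks, such as pose estimation, action recognition, human-computer interaction, virtual reality, smart homes and autonomous driving. This column provides two kinds of human pose keypoint algorithms: top-down mmpose and bottom-up openpose. TensorRT accelerates the whole project, including image preprocessing and postprocessing, so it can be plugged directly into keypoint-based applications such as fight detection or elderly monitoring. A top-down method detects the joints of only one person at a time, while a bottom-up method detects the joints of all people in an image at once. The code link is at the end of the article.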

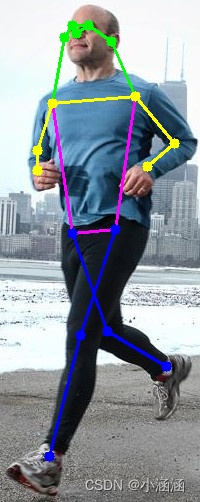

Results

openpose

mmpose

Shared-library wrapper for common TensorRT functions

#ifndef TRT_HPP

#define TRT_HPP

#include <string>

#include <vector>

#include <iostream>

#include <numeric>

#include <algorithm>

#include "opencv2/opencv.hpp"

#include "NvInfer.h"

class TrtLogger : public nvinfer1::ILogger {

void log(Severity severity, const char* msg) noexcept override

{

// print everything except verbose-level messages

if (severity != Severity::kVERBOSE)

std::cout << msg << std::endl;

}

};

struct TrtPluginParams {

// yolo-det layer

int yoloClassNum = 1;

int yolo3NetSize = 416; // 416 or 608

// upsample layer

float upsampleScale = 2;

};

class PluginFactory;

class Trt {

public:

Trt();

Trt(TrtPluginParams params);

~Trt();

bool BuildEngineWithOnnx(const std::string& onnxModel,

const std::string& engineFile,

const std::vector<std::string>& customOutput,

const std::vector<std::vector<float>>& calibratorData,

int maxBatchSize);

void CreateEngine(const std::string& engineFile);

void img_process(std::string file_list,nvinfer1::Dims3 input_dim,std::vector<std::vector<float>>& out_data);

void CreateEngine(

const std::string& onnxModel,

const std::string& engineFile,

const std::vector<std::string>& customOutput,

int maxBatchSize,

int mode,

const std::vector<std::vector<float>>& calibratorData);

// my test program

void test_img(const std::string& engineFile,const std::string img_path);

void Forward();

bool DeserializeEngine(const std::string& engineFile);

void ForwardAsync(const cudaStream_t& stream);

void DataTransfer(std::vector<float>& data, int bindIndex, bool isHostToDevice);

void DataTransferAsync(std::vector<float>& data, int bindIndex, bool isHostToDevice, cudaStream_t& stream);

void CopyFromHostToDevice(const std::vector<float>& input, int bindIndex);

void CopyFromDeviceToHost(std::vector<float>& output, int bindIndex);

void CopyFromHostToDevice(const std::vector<float>& input, int bindIndex,const cudaStream_t& stream);

void CopyFromDeviceToHost(std::vector<float>& output, int bindIndex,const cudaStream_t& stream);

void SetDevice(int device);

void InitEngine();

int GetDevice() const;

int GetMaxBatchSize() const;

void* GetBindingPtr(int bindIndex) const;

size_t GetBindingSize(int bindIndex) const;

nvinfer1::Dims GetBindingDims(int bindIndex) const;

nvinfer1::DataType GetBindingDataType(int bindIndex) const;

std::vector<std::string> mBindingName;

void BuildEngine(nvinfer1::IBuilder* builder,

nvinfer1::INetworkDefinition* network,

const std::vector<std::vector<float>>& calibratorData,

int maxBatchSize,

int mode);

void SaveEngine(const std::string& fileName);

TrtLogger mLogger;

// tensorrt run mode 0:fp32 1:fp16 2:int8

int mRunMode;

nvinfer1::ICudaEngine* mEngine = nullptr;

nvinfer1::IExecutionContext* mContext = nullptr;

PluginFactory* mPluginFactory;

nvinfer1::IRuntime* mRuntime = nullptr;

std::vector<void*> mBinding;

std::vector<size_t> mBindingSize;

std::vector<nvinfer1::Dims> mBindingDims;

std::vector<nvinfer1::DataType> mBindingDataType;

int mInputSize = 0;

// batch size

int mBatchSize;

};

#endif // TRT_HPP

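The raw TensorRT API is wrapped in the Trt class for convenience. The main functions are BuildEngineWithOnnx, which converts an ONNX model into a TensorRT engine; DeserializeEngine, which deserializes a saved TensorRT engine; Forward, which runs inference and produces the outputs; and CopyFromHostToDevice / CopyFromDeviceToHost, which move data between host and device memory.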
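GetBindingSize, used later in MallocExtraMemory, reports how large a binding buffer is. Its body is not shown here; presumably it multiplies the binding's dimensions together and by the size of its data type. A minimal sketch of that computation, with `DType` and `bindingBytes` as hypothetical stand-ins rather than the TensorRT API:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for nvinfer1::DataType.
enum class DType { kFLOAT, kHALF, kINT8, kINT32 };

// Bytes per element for each data type.
inline std::size_t elementSize(DType t) {
    switch (t) {
        case DType::kFLOAT: return 4;
        case DType::kHALF:  return 2;
        case DType::kINT8:  return 1;
        case DType::kINT32: return 4;
    }
    return 0;
}

// Bytes needed for one binding buffer: product of all dims times element size.
inline std::size_t bindingBytes(const std::vector<int>& dims, DType t) {
    std::size_t count = 1;
    for (int d : dims) count *= static_cast<std::size_t>(d);
    return count * elementSize(t);
}
```

For example, a 1 × 3 × 224 × 224 float input needs 602112 bytes, and a 1 × 18 × 56 × 56 half-precision output needs 112896 bytes.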

openpose TensorRT implementation

openpose initialization

The TensorRT implementation of openpose builds on tinytrt, the wrapper shown above.

//

// Created by zhangyong on 5/17/22.

//

#include "Trt.h"

#include "openpose.h"

#include "utils.h"

#include "resize.h"


using namespace std;

using namespace cv;

openpose::openpose(

const std::string& saveEngine

)

{

mNet = new Trt();

mNet->DeserializeEngine(saveEngine);

mNet->InitEngine();

MallocExtraMemory();

}

openpose::openpose(

const std::string& onnxModel,

const std::string& saveEngine,

const std::vector<std::string>& outputBlobName,

const std::vector<std::vector<float>>& calibratorData,

int maxBatchSize

)

{

mNet = new Trt();

mNet->BuildEngineWithOnnx(onnxModel,saveEngine,outputBlobName,calibratorData,maxBatchSize);

MallocExtraMemory();

}

void openpose::DoInference(std::vector<cv::Mat>& inputData,vector<float> &f1,vector<float> &f2) {

// device staging buffer for the raw 3-channel uchar frame; every frame must fit in 1024*1024*3 bytes

cudaFrame = safeCudaMalloc(1024 * 1024 * 3 * sizeof(uchar));

// floats (not bytes) that one image occupies in the NCHW input binding

size_t buff_size=c*w*h;

for (size_t i = 0; i < inputData.size(); ++i)

{

auto& mat = inputData[i];

img_step1_=mat.step[0];

img_h_=mat.rows;

cudaMemcpy(cudaFrame, mat.data, img_step1_ * img_h_, cudaMemcpyHostToDevice);

// resize + normalize on the GPU, writing into the i-th image slot of the input binding

myresizeAndNorm(cudaFrame, mfloatinput+i*buff_size, mat.cols, mat.rows, w,h,nullptr);

}

mNet->Forward();

// output 1: 18-channel confidence maps (cmap), output 2: 42-channel part affinity fields (paf), both 56x56

int out1_size=mBatchSize*18*56*56;

int out2_size=mBatchSize*42*56*56;

std::vector<float> net_output(out1_size);

std::vector<float> net_output2(out2_size);

mNet->CopyFromDeviceToHost(net_output,1);

mNet->CopyFromDeviceToHost(net_output2,2);

// hand the results to the caller without another copy

f1 = std::move(net_output);

f2 = std::move(net_output2);

// release the staging buffer allocated at the top of this call

cudaFree(cudaFrame);

cudaFrame = nullptr;

}

void openpose::MallocExtraMemory() {

mBatchSize = mNet->GetMaxBatchSize();

mpInputGpu = mNet->GetBindingPtr(0);

mfloatinput=(float*) mpInputGpu;

mInputDataType = mNet->GetBindingDataType(0);

nvinfer1::Dims inputDims = mNet->GetBindingDims(0);

mInputDims = nvinfer1::Dims3(inputDims.d[0],inputDims.d[1],inputDims.d[2]);

mInputSize = mNet->GetBindingSize(0);

// binding 0 is NCHW: d[1]=C, d[2]=H, d[3]=W

h=inputDims.d[2];

w=inputDims.d[3];

c=inputDims.d[1];

cmap_vector = mNet->GetBindingPtr(1);

nvinfer1::Dims cmapDims = mNet->GetBindingDims(1);

mout1Dims = nvinfer1::Dims3(cmapDims.d[0],cmapDims.d[1],cmapDims.d[2]);

mout1Size = mNet->GetBindingSize(1);

paf_vector = mNet->GetBindingPtr(2);

nvinfer1::Dims pafDims = mNet->GetBindingDims(2);

mout2Dims = nvinfer1::Dims3(pafDims.d[0],pafDims.d[1],pafDims.d[2]);

mout2Size = mNet->GetBindingSize(2);

}

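The openpose class relies mainly on DeserializeEngine, InitEngine and BuildEngineWithOnnx from the tinytrt wrapper: one constructor loads a serialized engine, the other builds an engine from an ONNX model, and both then call MallocExtraMemory to cache the binding pointers and shapes that DoInference uses.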
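DoInference writes each image into the batched input binding through a float pointer (mfloatinput), so the offset of image i must be counted in floats, not bytes, and the outputs are read back with the same NCHW layout. The helpers below are a hedged sketch of that arithmetic; `Nchw`, `perImageElems`, `imageOffset` and `nchwIndex` are hypothetical names, and the 1 × 3 × 224 × 224 input shape in the usage note is an assumption (only the 18 × 56 × 56 output size comes from DoInference).

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for a 4-D nvinfer1::Dims in NCHW order.
struct Nchw { int n, c, h, w; };

// Floats occupied by one image; multiply by i and add to a float* base, never a byte count.
inline std::size_t perImageElems(const Nchw& d) {
    return static_cast<std::size_t>(d.c) * d.h * d.w;
}

// Element offset of image i inside the batched buffer.
inline std::size_t imageOffset(const Nchw& d, std::size_t i) { return i * perImageElems(d); }

// Flat index of element (b, ch, y, x), e.g. into the f1 / f2 vectors DoInference fills.
inline std::size_t nchwIndex(const Nchw& d, std::size_t b, std::size_t ch,
                             std::size_t y, std::size_t x) {
    return ((b * d.c + ch) * d.h + y) * d.w + x;
}
```

With a 1 × 3 × 224 × 224 input, the third image starts 301056 floats into the buffer; in an 18 × 56 × 56 cmap, channel 1 starts at index 3136.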

openpose preprocessing

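openpose preprocessing is a plain resize plus scaling: each 3-channel uchar pixel is bilinearly resized to the network input size, divided by 255 and written out in planar CHW layout. It is accelerated with CUDA; the code is: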

#include <device_launch_parameters.h>

#include <stdio.h>

__forceinline__ __device__ float3 get(uchar3* src, int x, int y, int w, int h) {

if (x < 0 || x >= w || y < 0 || y >= h) return make_float3(0.5, 0.5, 0.5);

uchar3 temp = src[y*w + x];

return make_float3(float(temp.x) / 255., float(temp.y) / 255., float(temp.z) / 255.);

}

__global__ void resizeNormKernel(uchar3* src, float *dst, int dstW, int dstH, int srcW, int srcH,

float scaleX, float scaleY, float shiftX, float shiftY) {

int idx = blockIdx.x * blockDim.x + threadIdx.x;

const int x = idx % dstW;

const int y = idx / dstW;

if (x >= dstW || y >= dstH)

return;

float w = (x - shiftX + 0.5) * scaleX - 0.5;

float h = (y - shiftY + 0.5) * scaleY - 0.5;

int h_low = (int)floorf(h); // floor, not truncation: h is negative near the top edge when upscaling

int w_low = (int)floorf(w);

int h_high = h_low + 1;

int w_high = w_low + 1;

float lh = h - h_low;

float lw = w - w_low;

float hh = 1 - lh, hw = 1 - lw;

float w1 = hh * hw, w2 = hh * lw, w3 = lh * hw, w4 = lh * lw;

float3 v1 = get(src, w_low, h_low, srcW, srcH);

float3 v2 = get(src, w_high, h_low, srcW, srcH);

float3 v3 = get(src, w_low, h_high, srcW, srcH);

float3 v4 = get(src, w_high, h_high, srcW, srcH);

int stride = dstW * dstH;

dst[y*dstW + x] = w1 * v1.x + w2 * v2.x + w3 * v3.x + w4 * v4.x;

dst[stride + y * dstW + x] = w1 * v1.y + w2 * v2.y + w3 * v3.y + w4 * v4.y;

dst[stride * 2 + y * dstW + x] = w1 * v1.z + w2 * v2.z + w3 * v3.z + w4 * v4.z;

}

__global__ void myresizeNormKernel(uchar3* src, float *dst, int dstW, int dstH, int srcW, int srcH,

float scaleX, float scaleY, float shiftX, float shiftY) {

const int x = blockIdx.x * blockDim.x + threadIdx.x; // x index of the CUDA thread, i.e. the column; x and y are output (destination) coordinates, not input ones

const int y = blockIdx.y * blockDim.y + threadIdx.y;

if (x >= dstW || y >= dstH)

return;

float w = (x - shiftX + 0.5) * scaleX - 0.5;

float h = (y - shiftY + 0.5) * scaleY - 0.5;

int h_low = (int)floorf(h); // floor, not truncation: h is negative near the top edge when upscaling

int w_low = (int)floorf(w);

int h_high = h_low + 1;

int w_high = w_low + 1;

float lh = h - h_low;

float lw = w - w_low;

float hh = 1 - lh, hw = 1 - lw;

float w1 = hh * hw, w2 = hh * lw, w3 = lh * hw, w4 = lh * lw;

float3 v1 = get(src, w_low, h_low, srcW, srcH);

float3 v2 = get(src, w_high, h_low, srcW, srcH);

float3 v3 = get(src, w_low, h_high, srcW, srcH);

float3 v4 = get(src, w_high, h_high, srcW, srcH);

int stride = dstW * dstH;

dst[y*dstW + x] = w1 * v1.x + w2 * v2.x + w3 * v3.x + w4 * v4.x;

dst[stride + y * dstW + x] = w1 * v1.y + w2 * v2.y + w3 * v3.y + w4 * v4.y;

dst[stride * 2 + y * dstW + x] = w1 * v1.z + w2 * v2.z + w3 * v3.z + w4 * v4.z;

}

// w, h: source image size; in_w, in_h: network input (destination) size

int resizeAndNorm(void * p, float *d, int w, int h, int in_w, int in_h, cudaStream_t stream) {

float scaleX = (w*1.0f / in_w);

float scaleY = (h*1.0f / in_h);

float shiftX = 0.f, shiftY = 0.f;

const int n = in_w * in_h;

int blockSize = 1024;

const int gridSize = (n + blockSize - 1) / blockSize;

resizeNormKernel<<<gridSize, blockSize, 0, stream>>>((uchar3*)(p), d, in_w, in_h, w, h, scaleX, scaleY, shiftX, shiftY);

return 0;

}
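When debugging the kernels, a host-side reference makes it easy to check the GPU output. The sketch below reimplements the math of resizeNormKernel on the CPU: half-pixel mapping with shift fixed at 0 (as in resizeAndNorm), bilinear weights, division by 255, 0.5 for out-of-range taps, and HWC uint8 in, planar CHW float out. `resizeNormRef` and `fetch` are hypothetical names. It uses std::floor for the lower tap index, which differs from a plain (int) cast only for negative coordinates (upscaling near the top/left edge).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Read one HWC uint8 pixel as floats in [0,1]; out-of-range taps return 0.5 like get().
static void fetch(const std::vector<uint8_t>& src, int x, int y, int w, int h, float out[3]) {
    if (x < 0 || x >= w || y < 0 || y >= h) {
        out[0] = out[1] = out[2] = 0.5f;
        return;
    }
    const uint8_t* p = &src[(static_cast<std::size_t>(y) * w + x) * 3];
    for (int c = 0; c < 3; ++c) out[c] = p[c] / 255.0f;
}

// Bilinear resize srcW x srcH -> dstW x dstH, output planar CHW floats.
std::vector<float> resizeNormRef(const std::vector<uint8_t>& src, int srcW, int srcH,
                                 int dstW, int dstH) {
    const float scaleX = static_cast<float>(srcW) / dstW;
    const float scaleY = static_cast<float>(srcH) / dstH;
    const std::size_t stride = static_cast<std::size_t>(dstW) * dstH;
    std::vector<float> dst(3 * stride);
    for (int y = 0; y < dstH; ++y) {
        for (int x = 0; x < dstW; ++x) {
            // Same half-pixel-centre mapping as the kernel.
            const float sx = (x + 0.5f) * scaleX - 0.5f;
            const float sy = (y + 0.5f) * scaleY - 0.5f;
            const int x0 = static_cast<int>(std::floor(sx));
            const int y0 = static_cast<int>(std::floor(sy));
            const float lx = sx - x0, ly = sy - y0;
            const float wts[4] = {(1 - ly) * (1 - lx), (1 - ly) * lx, ly * (1 - lx), ly * lx};
            float v[4][3];
            fetch(src, x0, y0, srcW, srcH, v[0]);
            fetch(src, x0 + 1, y0, srcW, srcH, v[1]);
            fetch(src, x0, y0 + 1, srcW, srcH, v[2]);
            fetch(src, x0 + 1, y0 + 1, srcW, srcH, v[3]);
            for (int c = 0; c < 3; ++c) {
                float acc = 0.f;
                for (int k = 0; k < 4; ++k) acc += wts[k] * v[k][c];
                dst[c * stride + static_cast<std::size_t>(y) * dstW + x] = acc;
            }
        }
    }
    return dst;
}
```

Copying the GPU result back and comparing it element-wise with this reference (within a small tolerance) is a quick way to catch indexing or width/height mix-ups.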

openpose postprocessing

The openpose postprocessing code lives mainly in the parse folder.
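The parse folder itself is not shown here. As a hedged illustration of a typical first step in bottom-up parsing, the sketch below finds local maxima above a threshold in each confidence-map channel, for example the 18 × 56 × 56 cmap that DoInference copies back for one image. `Peak` and `findPeaks`, the threshold and the window size are all assumptions; a full parser would go on to score candidate limbs with the PAF output and group the peaks into people.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Peak { int channel; int y; int x; float score; };

// Hypothetical peak finder over a C x H x W confidence map stored flat (CHW).
// A cell is a peak if it reaches the threshold and no neighbour in the window is larger.
std::vector<Peak> findPeaks(const std::vector<float>& cmap, int C, int H, int W,
                            float threshold, int window = 5) {
    std::vector<Peak> peaks;
    const int r = window / 2;
    for (int c = 0; c < C; ++c) {
        const float* m = cmap.data() + static_cast<std::size_t>(c) * H * W;
        for (int y = 0; y < H; ++y) {
            for (int x = 0; x < W; ++x) {
                const float v = m[y * W + x];
                if (v < threshold) continue;
                bool isMax = true;
                for (int dy = -r; dy <= r && isMax; ++dy) {
                    for (int dx = -r; dx <= r; ++dx) {
                        const int yy = y + dy, xx = x + dx;
                        if (yy < 0 || yy >= H || xx < 0 || xx >= W) continue;
                        if (m[yy * W + xx] > v) { isMax = false; break; }
                    }
                }
                if (isMax) peaks.push_back({c, y, x, v});
            }
        }
    }
    return peaks;
}
```

Peaks are returned in channel order, so each entry's `channel` field directly gives the keypoint type.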

mmpose

mmpose initialization, preprocessing and postprocessing follow roughly the same flow as openpose, so they are not covered in detail here.
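For the top-down case, one common simplified decoding takes the argmax of each keypoint heatmap and maps it back into the person box that was cropped. The sketch below assumes the crop was stretched to the network input without padding or aspect-ratio correction, and it skips sub-pixel refinement. It is an illustration, not mmpose's exact postprocessing. `Box`, `Keypoint` and `decodeTopDown` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Box { float x, y, w, h; };        // person box in original-image pixels (hypothetical)
struct Keypoint { float x, y, score; };

// Simplified top-down decoding of K heatmaps of size H x W (flat, CHW):
// argmax per heatmap, then map the cell centre back into the box.
std::vector<Keypoint> decodeTopDown(const std::vector<float>& heatmaps,
                                    int K, int H, int W, const Box& box) {
    std::vector<Keypoint> kps(K);
    for (int k = 0; k < K; ++k) {
        const float* m = heatmaps.data() + static_cast<std::size_t>(k) * H * W;
        int best = 0;
        for (int i = 1; i < H * W; ++i)
            if (m[i] > m[best]) best = i;
        const int hx = best % W;
        const int hy = best / W;
        kps[k].x = box.x + (hx + 0.5f) * box.w / W;
        kps[k].y = box.y + (hy + 0.5f) * box.h / H;
        kps[k].score = m[best];
    }
    return kps;
}
```

Because each person is decoded against its own box, the detector's boxes must be kept alongside the pose network's outputs.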