#include "../common/common.h"

#include <cuda_runtime.h>

#include <stdio.h>

#include <stdlib.h> // atoi, rand, malloc, free, EXIT_SUCCESS

#include <string.h> // memcpy

/*

* This code implements the interleaved and neighbor-paired approaches to

* parallel reduction in CUDA. For this example, the sum operation is used. A

* variety of optimizations on parallel reduction aimed at reducing divergence

* are also demonstrated, such as unrolling.

*/

// Recursive Implementation of Interleaved Pair Approach

int recursiveReduce(int *data, int const size)

{

// terminate check

if (size == 1) return data[0];

// renew the stride

int const stride = size / 2;

// in-place reduction

for (int i = 0; i < stride; i++)

{

data[i] += data[i + stride];

}

// call recursively

return recursiveReduce(data, stride);

}

// Neighbored Pair Implementation with divergence

__global__ void reduceNeighbored (int *g_idata, int *g_odata, unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x;

// boundary check

if (idx >= n) return;

// in-place reduction in global memory

for (int stride = 1; stride < blockDim.x; stride *= 2)

{

if ((tid % (2 * stride)) == 0)

{

idata[tid] += idata[tid + stride];

}

// synchronize within threadblock

__syncthreads();

}

// write result for this block to global mem

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

// Neighbored Pair Implementation with less divergence

__global__ void reduceNeighboredLess (int *g_idata, int *g_odata,

unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x;

// boundary check

if(idx >= n) return;

// in-place reduction in global memory

for (int stride = 1; stride < blockDim.x; stride *= 2)

{

// convert tid into local array index

int index = 2 * stride * tid;

if (index < blockDim.x)

{

idata[index] += idata[index + stride];

}

// synchronize within threadblock

__syncthreads();

}

// write result for this block to global mem

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

// Interleaved Pair Implementation with less divergence

__global__ void reduceInterleaved (int *g_idata, int *g_odata, unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x;

// boundary check

if(idx >= n) return;

// in-place reduction in global memory

// the stride between paired elements starts at half the block size and is halved on every iteration

for (int stride = blockDim.x / 2; stride > 0; stride >>= 1)

{

if (tid < stride) // only the first `stride` threads of the block perform the addition

{

idata[tid] += idata[tid + stride];

}

__syncthreads();

}

// write result for this block to global mem

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

__global__ void reduceUnrolling2 (int *g_idata, int *g_odata, unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x * 2 + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x * 2;

// unrolling 2

// each thread first adds in one element from the neighboring data block

if (idx + blockDim.x < n) g_idata[idx] += g_idata[idx + blockDim.x];

__syncthreads();

// in-place reduction in global memory

for (int stride = blockDim.x / 2; stride > 0; stride >>= 1)

{

if (tid < stride)

{

idata[tid] += idata[tid + stride];

}

// synchronize within threadblock

__syncthreads();

}

// write result for this block to global mem

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

__global__ void reduceUnrolling4 (int *g_idata, int *g_odata, unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x * 4 + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x * 4;

// unrolling 4

if (idx + 3 * blockDim.x < n)

{

int a1 = g_idata[idx];

int a2 = g_idata[idx + blockDim.x];

int a3 = g_idata[idx + 2 * blockDim.x];

int a4 = g_idata[idx + 3 * blockDim.x];

g_idata[idx] = a1 + a2 + a3 + a4;

}

__syncthreads();

// in-place reduction in global memory

for (int stride = blockDim.x / 2; stride > 0; stride >>= 1)

{

if (tid < stride)

{

idata[tid] += idata[tid + stride];

}

// synchronize within threadblock

__syncthreads();

}

// write result for this block to global mem

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

__global__ void reduceUnrolling8 (int *g_idata, int *g_odata, unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x * 8 + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x * 8;

// unrolling 8

if (idx + 7 * blockDim.x < n)

{

int a1 = g_idata[idx];

int a2 = g_idata[idx + blockDim.x];

int a3 = g_idata[idx + 2 * blockDim.x];

int a4 = g_idata[idx + 3 * blockDim.x];

int b1 = g_idata[idx + 4 * blockDim.x];

int b2 = g_idata[idx + 5 * blockDim.x];

int b3 = g_idata[idx + 6 * blockDim.x];

int b4 = g_idata[idx + 7 * blockDim.x];

g_idata[idx] = a1 + a2 + a3 + a4 + b1 + b2 + b3 + b4;

}

__syncthreads();

// in-place reduction in global memory

for (int stride = blockDim.x / 2; stride > 0; stride >>= 1)

{

if (tid < stride)

{

idata[tid] += idata[tid + stride];

}

// synchronize within threadblock

__syncthreads();

}

// write result for this block to global mem

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

__global__ void reduceUnrollWarps8 (int *g_idata, int *g_odata, unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x * 8 + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x * 8;

// unrolling 8

if (idx + 7 * blockDim.x < n)

{

int a1 = g_idata[idx];

int a2 = g_idata[idx + blockDim.x];

int a3 = g_idata[idx + 2 * blockDim.x];

int a4 = g_idata[idx + 3 * blockDim.x];

int b1 = g_idata[idx + 4 * blockDim.x];

int b2 = g_idata[idx + 5 * blockDim.x];

int b3 = g_idata[idx + 6 * blockDim.x];

int b4 = g_idata[idx + 7 * blockDim.x];

g_idata[idx] = a1 + a2 + a3 + a4 + b1 + b2 + b3 + b4;

}

__syncthreads();

// in-place reduction in global memory

for (int stride = blockDim.x / 2; stride > 32; stride >>= 1)

{

if (tid < stride)

{

idata[tid] += idata[tid + stride];

}

// synchronize within threadblock

__syncthreads();

}

// unrolling warp

if (tid < 32)

{

volatile int *vmem = idata; // volatile forces every access to go to memory instead of reusing a value held in a register

vmem[tid] += vmem[tid + 32];

vmem[tid] += vmem[tid + 16];

vmem[tid] += vmem[tid + 8];

vmem[tid] += vmem[tid + 4];

vmem[tid] += vmem[tid + 2];

vmem[tid] += vmem[tid + 1];

}

// write result for this block to global mem

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

__global__ void reduceCompleteUnrollWarps8 (int *g_idata, int *g_odata,

unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x * 8 + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x * 8;

// unrolling 8

if (idx + 7 * blockDim.x < n)

{

int a1 = g_idata[idx];

int a2 = g_idata[idx + blockDim.x];

int a3 = g_idata[idx + 2 * blockDim.x];

int a4 = g_idata[idx + 3 * blockDim.x];

int b1 = g_idata[idx + 4 * blockDim.x];

int b2 = g_idata[idx + 5 * blockDim.x];

int b3 = g_idata[idx + 6 * blockDim.x];

int b4 = g_idata[idx + 7 * blockDim.x];

g_idata[idx] = a1 + a2 + a3 + a4 + b1 + b2 + b3 + b4;

}

__syncthreads();

// in-place reduction and complete unroll

if (blockDim.x >= 1024 && tid < 512) idata[tid] += idata[tid + 512];

__syncthreads();

if (blockDim.x >= 512 && tid < 256) idata[tid] += idata[tid + 256];

__syncthreads();

if (blockDim.x >= 256 && tid < 128) idata[tid] += idata[tid + 128];

__syncthreads();

if (blockDim.x >= 128 && tid < 64) idata[tid] += idata[tid + 64];

__syncthreads();

// unrolling warp

if (tid < 32)

{

volatile int *vsmem = idata;

vsmem[tid] += vsmem[tid + 32];

vsmem[tid] += vsmem[tid + 16];

vsmem[tid] += vsmem[tid + 8];

vsmem[tid] += vsmem[tid + 4];

vsmem[tid] += vsmem[tid + 2];

vsmem[tid] += vsmem[tid + 1];

}

// write result for this block to global mem

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

template <unsigned int iBlockSize>

__global__ void reduceCompleteUnroll(int *g_idata, int *g_odata,

unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x * 8 + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x * 8;

// unrolling 8

if (idx + 7 * blockDim.x < n)

{

int a1 = g_idata[idx];

int a2 = g_idata[idx + blockDim.x];

int a3 = g_idata[idx + 2 * blockDim.x];

int a4 = g_idata[idx + 3 * blockDim.x];

int b1 = g_idata[idx + 4 * blockDim.x];

int b2 = g_idata[idx + 5 * blockDim.x];

int b3 = g_idata[idx + 6 * blockDim.x];

int b4 = g_idata[idx + 7 * blockDim.x];

g_idata[idx] = a1 + a2 + a3 + a4 + b1 + b2 + b3 + b4;

}

__syncthreads();

// in-place reduction and complete unroll

if (iBlockSize >= 1024 && tid < 512) idata[tid] += idata[tid + 512];

__syncthreads();

if (iBlockSize >= 512 && tid < 256) idata[tid] += idata[tid + 256];

__syncthreads();

if (iBlockSize >= 256 && tid < 128) idata[tid] += idata[tid + 128];

__syncthreads();

if (iBlockSize >= 128 && tid < 64) idata[tid] += idata[tid + 64];

__syncthreads();

// unrolling warp

if (tid < 32)

{

volatile int *vsmem = idata;

vsmem[tid] += vsmem[tid + 32];

vsmem[tid] += vsmem[tid + 16];

vsmem[tid] += vsmem[tid + 8];

vsmem[tid] += vsmem[tid + 4];

vsmem[tid] += vsmem[tid + 2];

vsmem[tid] += vsmem[tid + 1];

}

// write result for this block to global mem

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

__global__ void reduceUnrollWarps (int *g_idata, int *g_odata, unsigned int n)

{

// set thread ID

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x * 2 + threadIdx.x;

// convert global data pointer to the local pointer of this block

int *idata = g_idata + blockIdx.x * blockDim.x * 2;

// unrolling 2

if (idx + blockDim.x < n) g_idata[idx] += g_idata[idx + blockDim.x];

__syncthreads();

// in-place reduction in global memory

for (int stride = blockDim.x / 2; stride > 32; stride >>= 1)

{

if (tid < stride)

{

idata[tid] += idata[tid + stride];

}

// synchronize within threadblock

__syncthreads();

}

// unrolling last warp

if (tid < 32)

{

volatile int *vsmem = idata;

vsmem[tid] += vsmem[tid + 32];

vsmem[tid] += vsmem[tid + 16];

vsmem[tid] += vsmem[tid + 8];

vsmem[tid] += vsmem[tid + 4];

vsmem[tid] += vsmem[tid + 2];

vsmem[tid] += vsmem[tid + 1];

}

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

int main(int argc, char **argv)

{

// set up device

int dev = 0;

cudaDeviceProp deviceProp;

CHECK(cudaGetDeviceProperties(&deviceProp, dev));

printf("%s starting reduction at ", argv[0]);

printf("device %d: %s ", dev, deviceProp.name);

CHECK(cudaSetDevice(dev));

bool bResult = false;

// initialization

int size = 1 << 24; // 16M elements

printf(" with array size %d ", size);

// execution configuration

int blocksize = 512; // initial block size

if(argc > 1)

{

blocksize = atoi(argv[1]); // block size from command line argument

}

dim3 block (blocksize, 1); // 1D block

dim3 grid ((size + block.x - 1) / block.x, 1); // 1D grid

printf("grid %d block %d\n", grid.x, block.x);

// allocate host memory

size_t bytes = size * sizeof(int);

int *h_idata = (int *) malloc(bytes);

int *h_odata = (int *) malloc(grid.x * sizeof(int));

int *tmp = (int *) malloc(bytes);

// initialize the array

for (int i = 0; i < size; i++)

{

// keep only the low byte so every value lies in [0, 255]

h_idata[i] = (int)( rand() & 0xFF );

}

memcpy (tmp, h_idata, bytes); // copy h_idata into tmp for the CPU reference run

double iStart, iElaps;

int gpu_sum = 0;

// allocate device memory

int *d_idata = NULL;

int *d_odata = NULL;

CHECK(cudaMalloc((void **) &d_idata, bytes));

CHECK(cudaMalloc((void **) &d_odata, grid.x * sizeof(int)));

// time the reduction on the CPU

iStart = seconds();

int cpu_sum = recursiveReduce (tmp, size);

iElaps = seconds() - iStart;

printf("cpu reduce elapsed %f sec cpu_sum: %d\n", iElaps, cpu_sum);

// kernel 1: reduceNeighbored

// neighbored-pair reduction

CHECK(cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice));

CHECK(cudaDeviceSynchronize());

iStart = seconds();

reduceNeighbored<<<grid, block>>>(d_idata, d_odata, size);

CHECK(cudaDeviceSynchronize());

iElaps = seconds() - iStart;

CHECK(cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int),

cudaMemcpyDeviceToHost)); // copy the per-block results back from the device

gpu_sum = 0;

for (int i = 0; i < grid.x; i++) gpu_sum += h_odata[i]; // finish with a serial sum on the host

printf("gpu Neighbored elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", iElaps, gpu_sum, grid.x, block.x);

// kernel 2: reduceNeighbored with less divergence

// improved neighbored-pair reduction

CHECK(cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice));

CHECK(cudaDeviceSynchronize());

iStart = seconds();

reduceNeighboredLess<<<grid, block>>>(d_idata, d_odata, size);

CHECK(cudaDeviceSynchronize());

iElaps = seconds() - iStart;

CHECK(cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int),

cudaMemcpyDeviceToHost));

gpu_sum = 0;

for (int i = 0; i < grid.x; i++) gpu_sum += h_odata[i];

printf("gpu NeighboredL elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", iElaps, gpu_sum, grid.x, block.x);

// kernel 3: reduceInterleaved

// interleaved-pair reduction kernel

CHECK(cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice));

CHECK(cudaDeviceSynchronize());

iStart = seconds();

reduceInterleaved<<<grid, block>>>(d_idata, d_odata, size);

CHECK(cudaDeviceSynchronize());

iElaps = seconds() - iStart;

CHECK(cudaMemcpy(h_odata, d_odata, grid.x * sizeof(int),

cudaMemcpyDeviceToHost));

gpu_sum = 0;

for (int i = 0; i < grid.x; i++) gpu_sum += h_odata[i];

printf("gpu Interleaved elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", iElaps, gpu_sum, grid.x, block.x);

// kernel 4: reduceUnrolling2

// unrolled reduction

CHECK(cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice));

CHECK(cudaDeviceSynchronize());

iStart = seconds();

reduceUnrolling2<<<grid.x / 2, block>>>(d_idata, d_odata, size);

CHECK(cudaDeviceSynchronize());

iElaps = seconds() - iStart;

CHECK(cudaMemcpy(h_odata, d_odata, grid.x / 2 * sizeof(int),

cudaMemcpyDeviceToHost));

gpu_sum = 0;

for (int i = 0; i < grid.x / 2; i++) gpu_sum += h_odata[i];

printf("gpu Unrolling2 elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", iElaps, gpu_sum, grid.x / 2, block.x);

// kernel 5: reduceUnrolling4

CHECK(cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice));

CHECK(cudaDeviceSynchronize());

iStart = seconds();

reduceUnrolling4<<<grid.x / 4, block>>>(d_idata, d_odata, size);

CHECK(cudaDeviceSynchronize());

iElaps = seconds() - iStart;

CHECK(cudaMemcpy(h_odata, d_odata, grid.x / 4 * sizeof(int),

cudaMemcpyDeviceToHost));

gpu_sum = 0;

for (int i = 0; i < grid.x / 4; i++) gpu_sum += h_odata[i];

printf("gpu Unrolling4 elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", iElaps, gpu_sum, grid.x / 4, block.x);

// kernel 6: reduceUnrolling8

CHECK(cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice));

CHECK(cudaDeviceSynchronize());

iStart = seconds();

reduceUnrolling8<<<grid.x / 8, block>>>(d_idata, d_odata, size);

CHECK(cudaDeviceSynchronize());

iElaps = seconds() - iStart;

CHECK(cudaMemcpy(h_odata, d_odata, grid.x / 8 * sizeof(int),

cudaMemcpyDeviceToHost));

gpu_sum = 0;

for (int i = 0; i < grid.x / 8; i++) gpu_sum += h_odata[i];

printf("gpu Unrolling8 elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", iElaps, gpu_sum, grid.x / 8, block.x);


// kernel 8: reduceUnrollWarps8

// reduction with the last warp unrolled

CHECK(cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice));

CHECK(cudaDeviceSynchronize());

iStart = seconds();

reduceUnrollWarps8<<<grid.x / 8, block>>>(d_idata, d_odata, size);

CHECK(cudaDeviceSynchronize());

iElaps = seconds() - iStart;

CHECK(cudaMemcpy(h_odata, d_odata, grid.x / 8 * sizeof(int),

cudaMemcpyDeviceToHost));

gpu_sum = 0;

for (int i = 0; i < grid.x / 8; i++) gpu_sum += h_odata[i];

printf("gpu UnrollWarp8 elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", iElaps, gpu_sum, grid.x / 8, block.x);

// kernel 9: reduceCompleteUnrollWarps8

// completely unrolled reduction

CHECK(cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice));

CHECK(cudaDeviceSynchronize());

iStart = seconds();

reduceCompleteUnrollWarps8<<<grid.x / 8, block>>>(d_idata, d_odata, size);

CHECK(cudaDeviceSynchronize());

iElaps = seconds() - iStart;

CHECK(cudaMemcpy(h_odata, d_odata, grid.x / 8 * sizeof(int),

cudaMemcpyDeviceToHost));

gpu_sum = 0;

for (int i = 0; i < grid.x / 8; i++) gpu_sum += h_odata[i];

printf("gpu Cmptnroll8 elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", iElaps, gpu_sum, grid.x / 8, block.x);

// kernel 10: reduceCompleteUnroll

// reduction using a template function

CHECK(cudaMemcpy(d_idata, h_idata, bytes, cudaMemcpyHostToDevice));

CHECK(cudaDeviceSynchronize());

iStart = seconds();

switch (blocksize)

{

case 1024:

reduceCompleteUnroll<1024><<<grid.x / 8, block>>>(d_idata, d_odata,

size);

break;

case 512:

reduceCompleteUnroll<512><<<grid.x / 8, block>>>(d_idata, d_odata,

size);

break;

case 256:

reduceCompleteUnroll<256><<<grid.x / 8, block>>>(d_idata, d_odata,

size);

break;

case 128:

reduceCompleteUnroll<128><<<grid.x / 8, block>>>(d_idata, d_odata,

size);

break;

case 64:

reduceCompleteUnroll<64><<<grid.x / 8, block>>>(d_idata, d_odata, size);

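break;

default:

// no instantiation for this block size, so no kernel is launched

printf("unsupported block size %d for reduceCompleteUnroll\n", blocksize);

break;

}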

CHECK(cudaDeviceSynchronize());

iElaps = seconds() - iStart;

CHECK(cudaMemcpy(h_odata, d_odata, grid.x / 8 * sizeof(int),

cudaMemcpyDeviceToHost));

gpu_sum = 0;

for (int i = 0; i < grid.x / 8; i++) gpu_sum += h_odata[i];

printf("gpu Cmptnroll elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", iElaps, gpu_sum, grid.x / 8, block.x);

// free host memory

free(h_idata);

free(h_odata);

free(tmp);

// free device memory

CHECK(cudaFree(d_idata));

CHECK(cudaFree(d_odata));

// reset device

CHECK(cudaDeviceReset());

// check the results

bResult = (gpu_sum == cpu_sum);

if(!bResult) printf("Test failed!\n");

return EXIT_SUCCESS;

}

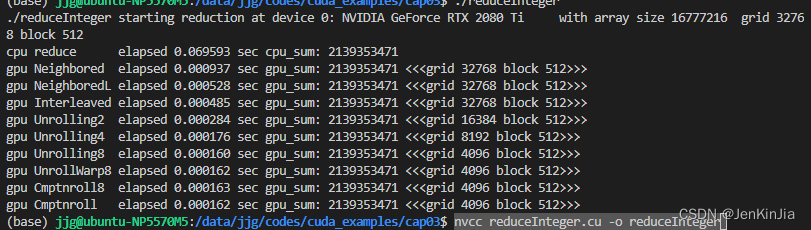

Compile:

nvcc reduceInteger.cu -o reduceInteger

Run: ./reduceInteger

Output:

?