Deploying heapster

Official heapster GitHub address:

Preparing the heapster image

On the ops host HDSS7-200.host.com:

[root@hdss7-200 ~]# cd /data/k8s-yaml/dashboard/

[root@hdss7-200 dashboard]# mkdir heapster

[root@hdss7-200 dashboard]# cd heapster/

[root@hdss7-200 heapster]# docker pull quay.io/bitnami/heapster:1.5.4

1.5.4: Pulling from bitnami/heapster

4018396ca1ba: Pull complete

0e4723f815c4: Pull complete

d8569f30adeb: Pull complete

Digest: sha256:6d891479611ca06a5502bc36e280802cbf9e0426ce4c008dd2919c2294ce0324

Status: Downloaded newer image for quay.io/bitnami/heapster:1.5.4

quay.io/bitnami/heapster:1.5.4

[root@hdss7-200 heapster]# docker images |grep heapster

quay.io/bitnami/heapster 1.5.4 c359b95ad38b 2 years ago 136MB

[root@hdss7-200 heapster]# docker tag c359b95ad38b harbor.od.com/public/heapster:v1.5.4

[root@hdss7-200 heapster]# docker push !$

docker push harbor.od.com/public/heapster:v1.5.4

The push refers to repository [harbor.od.com/public/heapster]

20d37d828804: Pushed

b9b192015e25: Pushed

b76dba5a0109: Pushed

v1.5.4: digest: sha256:bfb71b113c26faeeea27799b7575f19253ba49ccf064bac7b6137ae8a36f48a5 size: 952
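The pull, tag and push sequence above follows a fixed pattern: keep the image name, swap the registry for the private Harbor project, and prefix a numeric version with `v`. A minimal sketch of that mapping, assuming a hypothetical helper named `harbor_ref` and the `harbor.od.com/public` project used in this walkthrough:

```shell
# harbor_ref SOURCE_IMAGE [PROJECT]
# Prints the private-registry reference for a public image, e.g.
# quay.io/bitnami/heapster:1.5.4 -> harbor.od.com/public/heapster:v1.5.4
# harbor_ref is a hypothetical helper, not part of docker or Harbor.
harbor_ref() {
  local src="$1" project="${2:-harbor.od.com/public}"
  local name_tag="${src##*/}"   # strip registry and namespace
  local name tag
  case "$name_tag" in
    *:*) name="${name_tag%%:*}"; tag="${name_tag#*:}" ;;
    *)   name="$name_tag"; tag="latest" ;;   # untagged images default to latest
  esac
  case "$tag" in
    [0-9]*) tag="v$tag" ;;      # 1.5.4 -> v1.5.4; other tags are left alone
  esac
  printf '%s/%s:%s\n' "$project" "$name" "$tag"
}

# Usage (requires docker and a logged-in Harbor session):
#   src=quay.io/bitnami/heapster:1.5.4
#   docker pull "$src" && docker tag "$src" "$(harbor_ref "$src")" && docker push "$(harbor_ref "$src")"
```

Tagging by image reference rather than by image ID avoids copying the ID out of the `docker images` listing by hand.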

[root@hdss7-200 heapster]# vi rbac.yaml

[root@hdss7-200 heapster]# vi dp.yaml

[root@hdss7-200 heapster]# vi svc.yaml

[root@hdss7-200 heapster]# cat rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: heapster
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:heapster
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system

[root@hdss7-200 heapster]# cat dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: harbor.od.com/public/heapster:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /opt/bitnami/heapster/bin/heapster
        - --source=kubernetes:https://kubernetes.default

[root@hdss7-200 heapster]# cat svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster

[root@hdss7-200 heapster]#
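dp.yaml above uses `extensions/v1beta1`, which the v1.15.2 cluster in this walkthrough still serves. Kubernetes 1.16 and later no longer serve Deployments under that API group, and `apps/v1` additionally requires an explicit `spec.selector`. A sketch of the same Deployment for newer clusters, written with a heredoc; the output file name `dp-apps-v1.yaml` is an assumption:

```shell
# Write an apps/v1 variant of dp.yaml. Only apiVersion and spec.selector
# differ from the manifest above; dp-apps-v1.yaml is a hypothetical name.
cat > dp-apps-v1.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: heapster
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: harbor.od.com/public/heapster:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /opt/bitnami/heapster/bin/heapster
        - --source=kubernetes:https://kubernetes.default
EOF
```

The selector has to match the pod template labels; here it reuses `k8s-app: heapster`, the same label svc.yaml selects on.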

Create the resources on hdss7-21:

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/heapster/rbac.yaml

serviceaccount/heapster created

clusterrolebinding.rbac.authorization.k8s.io/heapster created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/heapster/dp.yaml

deployment.extensions/heapster created

[root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/heapster/svc.yaml

service/heapster created

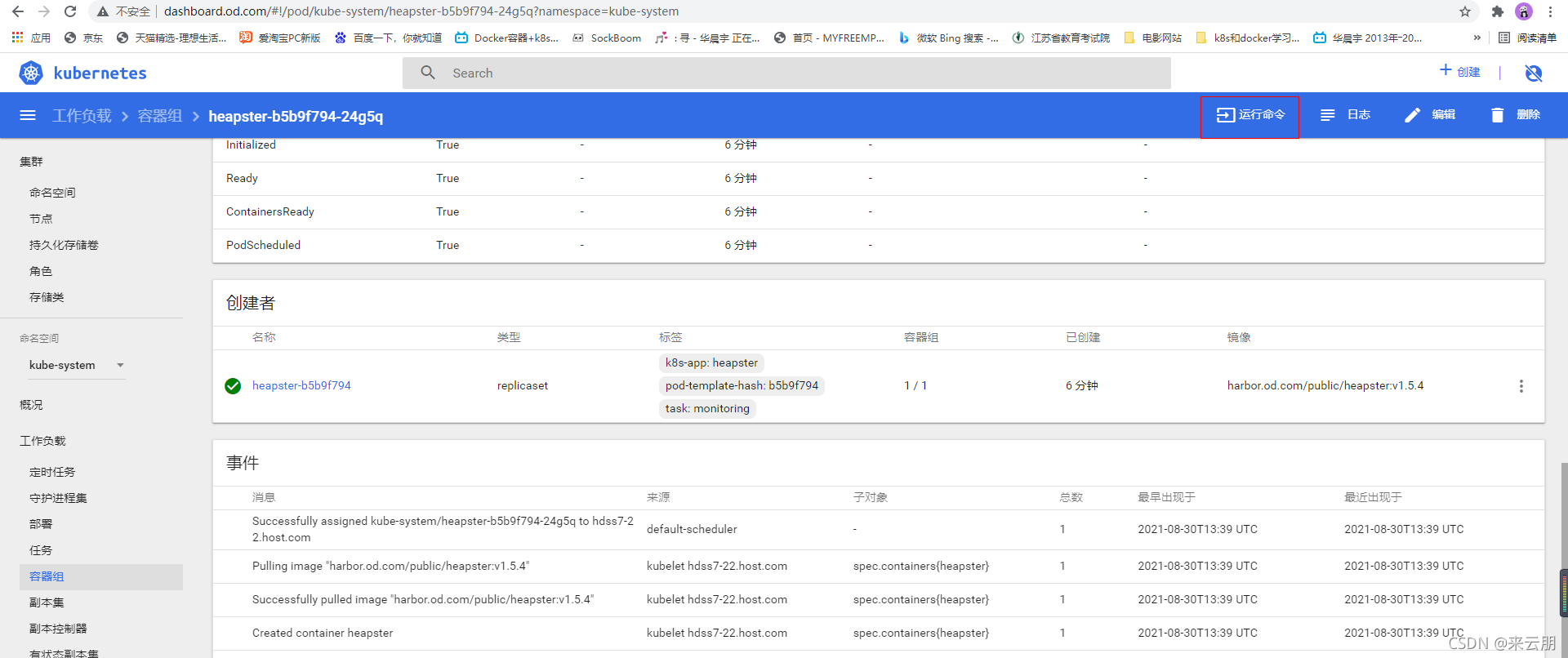

[root@hdss7-21 ~]# kubectl get po -nkube-system

NAME READY STATUS RESTARTS AGE

coredns-6b6c4f9648-ckjh7 1/1 Running 0 29h

heapster-b5b9f794-24g5q 1/1 Running 0 16s

kubernetes-dashboard-76dcdb4677-855kz 1/1 Running 0 24h

traefik-ingress-2jb8z 1/1 Running 0 26h

traefik-ingress-zh4k6 1/1 Running 0 26h

[root@hdss7-21 ~]#
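Instead of re-running `kubectl get po` until the pod shows Running, a script can wait for the rollout to finish. A small sketch built on `kubectl rollout status`; the function name and the 120-second default are assumptions:

```shell
# wait_for_heapster [TIMEOUT]
# Blocks until the heapster Deployment in kube-system has rolled out,
# or fails once TIMEOUT (default 120s, an arbitrary choice) has elapsed.
wait_for_heapster() {
  local timeout="${1:-120s}"
  kubectl rollout status deployment/heapster -n kube-system --timeout="$timeout"
}

# Usage: wait_for_heapster 60s && echo "heapster is up"
```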

Then check the result in the browser:

http://dashboard.od.com/#!/pod?namespace=kube-system

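Tips for smoothly upgrading a k8s cluster

This is not carried out here. The steps below outline the experiment in case you want to try it yourself.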

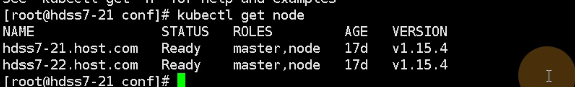

First check which version is currently running (v1.15.2 below):

[root@hdss7-21 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

hdss7-21.host.com Ready master,node 32h v1.15.2

hdss7-22.host.com Ready master,node 32h v1.15.2

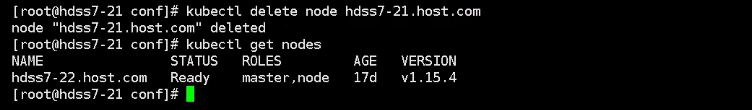

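Check which pods are running on hdss7-21, since that is the node being upgraded:

kubectl get pod -n kube-system -owide

Then remove the node from the cluster:

kubectl delete node hdss7-21.host.com

Checking again shows that all of its pods have moved to hdss7-22.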
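Deleting the node object outright is enough for this lab. A gentler variant, sketched here as an assumption rather than what the walkthrough does, first cordons the node and evicts its pods with `kubectl drain` before removing it. The function name is hypothetical:

```shell
# retire_node NODE
# Marks NODE unschedulable, evicts its pods (DaemonSet-managed pods are
# skipped), and only then removes the node object from the cluster.
retire_node() {
  local node="$1"
  kubectl cordon "$node" || return 1
  kubectl drain "$node" --ignore-daemonsets || return 1
  kubectl delete node "$node"
}

# Usage: retire_node hdss7-21.host.com
```

Pods that use emptyDir volumes make `drain` refuse unless an extra flag is passed. The name of that flag differs between kubectl versions, so it is left out here.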

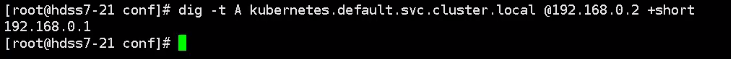

At this point you can test whether DNS resolution still works:

[root@hdss7-21 ~]# dig -t A kubernetes.default.svc.cluster.local @192.168.0.2 +short

192.168.0.1

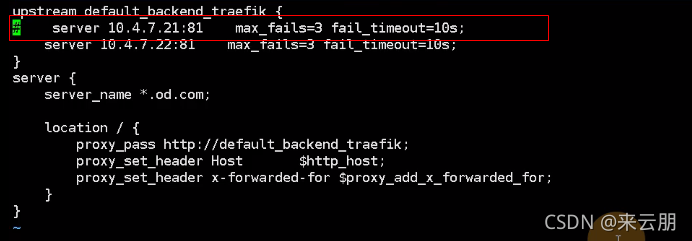

Then, on hdss7-11, take the node out of the nginx load balancing:

vi /etc/nginx/nginx.conf

Comment it out in od.com.conf as well for now:

[root@hdss7-11 ~]# vim /etc/nginx/conf.d/od.com.conf

Then reload nginx:

[root@hdss7-11 ~]# nginx -t

[root@hdss7-11 ~]# nginx -s reload

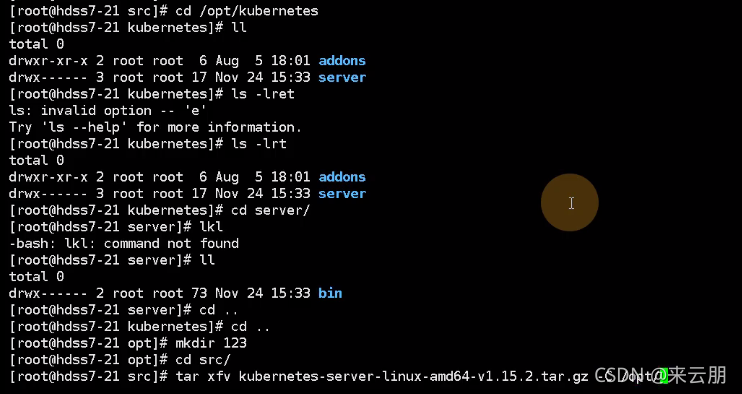

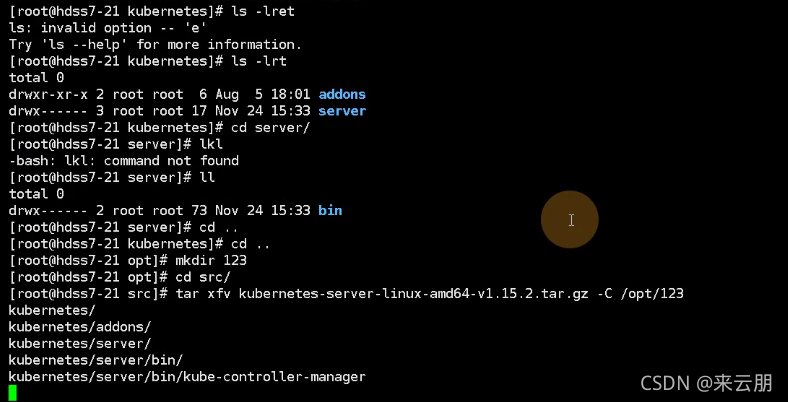

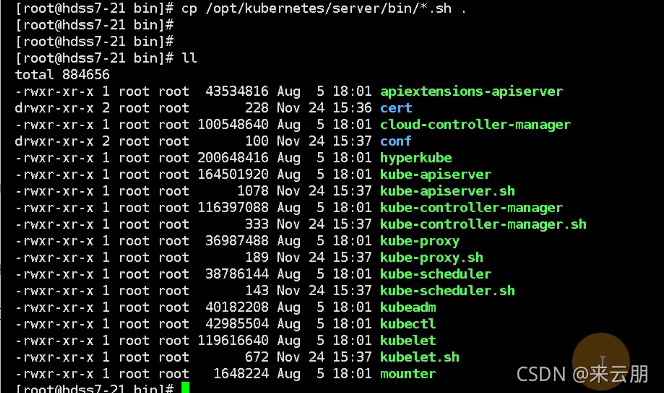

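Then perform the upgrade.

On hdss7-21:

cd /opt/src

Put the package for the target version here and extract it.

Create a directory,

then rename it.

Then work on the new version:

delete the content that is not needed.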

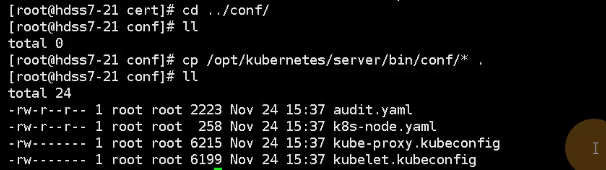

Then copy over all the certificates from the original version.

Then copy the .sh scripts over as well.

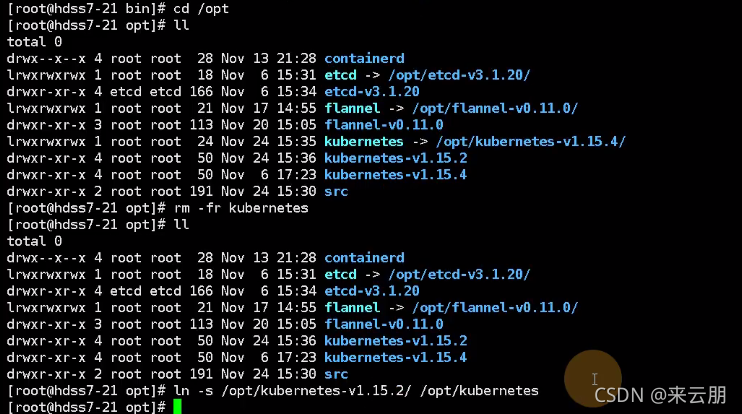

Delete the old symlink and create a new one.

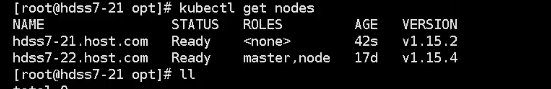

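Then restart the components. When you check again, the node has already rejoined the cluster.

At this point the original version can conveniently be ...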

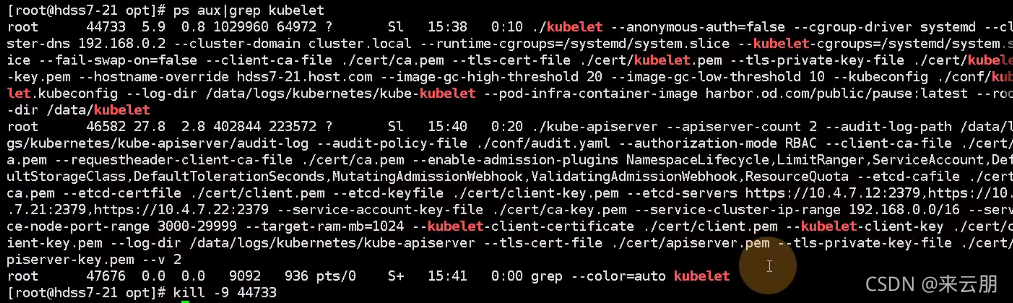

You can kill kubelet and let it restart.

Remember to also remove the two comment-outs on hdss7-11 afterwards.
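The copy-and-relink part of the upgrade can be sketched as a small function. The exact paths are only visible in the screenshots, so everything here is an assumption: the `server/bin/cert` and `server/bin/conf` layout, the startup scripts sitting next to the binaries, and the function name `switch_release`.

```shell
# switch_release OLD_DIR NEW_DIR LINK
# Copies certificates, configs and *.sh startup scripts from the old
# release into the new one, then repoints LINK at NEW_DIR.
# The server/bin/{cert,conf} layout is assumed, not taken from the text.
switch_release() {
  local old="$1" new="$2" link="$3" sub f
  mkdir -p "$new/server/bin" || return 1
  for sub in cert conf; do
    if [ -d "$old/server/bin/$sub" ]; then
      mkdir -p "$new/server/bin/$sub" || return 1
      cp -a "$old/server/bin/$sub/." "$new/server/bin/$sub/" || return 1
    fi
  done
  for f in "$old"/server/bin/*.sh; do
    if [ -e "$f" ]; then
      cp -a "$f" "$new/server/bin/" || return 1
    fi
  done
  # Delete the old symlink and recreate it, as described above.
  rm -f "$link" || return 1
  ln -s "$new" "$link"
}

# Usage (hypothetical paths):
#   switch_release /opt/kubernetes-v1.15.2 /opt/kubernetes-v1.15.4 /opt/kubernetes
```

Because the old release directory is left untouched, pointing the symlink back at it restores the previous binaries.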

Hands-on: k8s dashboard authentication methods in detail

[root@hdss7-200 certs]# pwd

/opt/certs

[root@hdss7-200 certs]# (umask 077; openssl genrsa -out dashboard.od.com.key 2048)

Generating RSA private key, 2048 bit long modulus

...............................+++

..+++

e is 65537 (0x10001)

[root@hdss7-200 certs]# openssl req -new -key dashboard.od.com.key -out dashboard.od.com.csr -subj "/CN=dashboard.od.com/C=CN/ST=BJ/L=Beijing/O=OldboyEdu/OU=ops"

[root@hdss7-200 certs]# ll

total 108

-rw-r--r-- 1 root root 1249 Aug 29 12:32 apiserver.csr

-rw-r--r-- 1 root root 566 Aug 29 12:32 apiserver-csr.json

-rw------- 1 root root 1679 Aug 29 12:32 apiserver-key.pem

-rw-r--r-- 1 root root 1598 Aug 29 12:32 apiserver.pem

-rw-r--r-- 1 root root 836 Aug 29 12:10 ca-config.json

-rw-r--r-- 1 root root 993 Aug 29 11:37 ca.csr

-rw-r--r-- 1 root root 328 Aug 29 11:36 ca-csr.json

-rw------- 1 root root 1679 Aug 29 11:37 ca-key.pem

-rw-r--r-- 1 root root 1346 Aug 29 11:37 ca.pem

-rw-r--r-- 1 root root 993 Aug 29 12:31 client.csr

-rw-r--r-- 1 root root 280 Aug 29 12:31 client-csr.json

-rw------- 1 root root 1679 Aug 29 12:31 client-key.pem

-rw-r--r-- 1 root root 1363 Aug 29 12:31 client.pem

-rw------- 1 root root 1679 Aug 30 22:51 dashboard.od.com.key

-rw-r--r-- 1 root root 1005 Aug 30 22:54 dashboard.od.com.csr

-rw-r--r-- 1 root root 1062 Aug 29 12:10 etcd-peer.csr

-rw-r--r-- 1 root root 363 Aug 29 12:10 etcd-peer-csr.json

-rw------- 1 root root 1679 Aug 29 12:10 etcd-peer-key.pem

-rw-r--r-- 1 root root 1428 Aug 29 12:10 etcd-peer.pem

-rw-r--r-- 1 root root 1115 Aug 29 13:03 kubelet.csr

-rw-r--r-- 1 root root 452 Aug 29 13:02 kubelet-csr.json

-rw------- 1 root root 1675 Aug 29 13:03 kubelet-key.pem

-rw-r--r-- 1 root root 1468 Aug 29 13:03 kubelet.pem

-rw-r--r-- 1 root root 1005 Aug 29 13:16 kube-proxy-client.csr

-rw------- 1 root root 1675 Aug 29 13:16 kube-proxy-client-key.pem

-rw-r--r-- 1 root root 1375 Aug 29 13:16 kube-proxy-client.pem

-rw-r--r-- 1 root root 267 Aug 29 13:15 kube-proxy-csr.json

[root@hdss7-200 certs]# openssl x509 -req -in dashboard.od.com.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out dashboard.od.com.crt -days 3650

Signature ok

subject=/CN=dashboard.od.com/C=CN/ST=BJ/L=Beijing/O=OldboyEdu/OU=ops

Getting CA Private Key

[root@hdss7-200 certs]# ll

total 116

-rw-r--r-- 1 root root 1249 Aug 29 12:32 apiserver.csr

-rw-r--r-- 1 root root 566 Aug 29 12:32 apiserver-csr.json

-rw------- 1 root root 1679 Aug 29 12:32 apiserver-key.pem

-rw-r--r-- 1 root root 1598 Aug 29 12:32 apiserver.pem

-rw-r--r-- 1 root root 836 Aug 29 12:10 ca-config.json

-rw-r--r-- 1 root root 993 Aug 29 11:37 ca.csr

-rw-r--r-- 1 root root 328 Aug 29 11:36 ca-csr.json

-rw------- 1 root root 1679 Aug 29 11:37 ca-key.pem

-rw-r--r-- 1 root root 1346 Aug 29 11:37 ca.pem

-rw-r--r-- 1 root root 17 Aug 30 22:57 ca.srl

-rw-r--r-- 1 root root 993 Aug 29 12:31 client.csr

-rw-r--r-- 1 root root 280 Aug 29 12:31 client-csr.json

-rw------- 1 root root 1679 Aug 29 12:31 client-key.pem

-rw-r--r-- 1 root root 1363 Aug 29 12:31 client.pem

-rw-r--r-- 1 root root 1196 Aug 30 22:57 dashboard.od.com.crt

-rw------- 1 root root 1679 Aug 30 22:51 dashboard.od.com.key

-rw-r--r-- 1 root root 1005 Aug 30 22:54 dashboard.od.com.csr

-rw-r--r-- 1 root root 1062 Aug 29 12:10 etcd-peer.csr

-rw-r--r-- 1 root root 363 Aug 29 12:10 etcd-peer-csr.json

-rw------- 1 root root 1679 Aug 29 12:10 etcd-peer-key.pem

-rw-r--r-- 1 root root 1428 Aug 29 12:10 etcd-peer.pem

-rw-r--r-- 1 root root 1115 Aug 29 13:03 kubelet.csr

-rw-r--r-- 1 root root 452 Aug 29 13:02 kubelet-csr.json

-rw------- 1 root root 1675 Aug 29 13:03 kubelet-key.pem

-rw-r--r-- 1 root root 1468 Aug 29 13:03 kubelet.pem

-rw-r--r-- 1 root root 1005 Aug 29 13:16 kube-proxy-client.csr

-rw------- 1 root root 1675 Aug 29 13:16 kube-proxy-client-key.pem

-rw-r--r-- 1 root root 1375 Aug 29 13:16 kube-proxy-client.pem

-rw-r--r-- 1 root root 267 Aug 29 13:15 kube-proxy-csr.json

[root@hdss7-200 certs]# cfssl-certinfo -cert dashboard.od.com.crt
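The certificate signed above identifies the host only through its CN. Current browsers match the host name against the subjectAltName extension instead, so a variant that adds a SAN may be needed. A sketch, assuming a hypothetical helper name and a scratch extension file called `san.ext`; `-extfile` is a standard `openssl x509` option:

```shell
# sign_with_san HOST
# Signs HOST.csr with the CA in the current directory, as above, and
# adds a subjectAltName for HOST. san.ext is a scratch file name chosen here.
sign_with_san() {
  local host="$1"
  printf 'subjectAltName = DNS:%s\n' "$host" > san.ext || return 1
  openssl x509 -req -in "$host.csr" \
    -CA ca.pem -CAkey ca-key.pem -CAcreateserial \
    -out "$host.crt" -days 3650 -extfile san.ext
}

# Usage (in /opt/certs): sign_with_san dashboard.od.com
```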

Then do the following on hdss7-11 and hdss7-12:

[root@hdss7-11 conf.d]# pwd

/etc/nginx/conf.d

[root@hdss7-11 conf.d]# vi dashboard.com.conf

[root@hdss7-11 conf.d]# cat dashboard.com.conf

server {
    listen       80;
    server_name  dashboard.od.com;

    rewrite ^(.*)$ https://${server_name}$1 permanent;
}
server {
    listen       443 ssl;
    server_name  dashboard.od.com;

    ssl_certificate "certs/dashboard.od.com.crt";
    ssl_certificate_key "certs/dashboard.od.com.key";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout  10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host       $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

[root@hdss7-11 conf.d]# cd ..

[root@hdss7-11 nginx]# mkdir certs

[root@hdss7-11 nginx]# cd certs/

[root@hdss7-11 certs]# scp hdss7-200:/opt/certs/dashboard.od.com.crt .

The authenticity of host 'hdss7-200 (10.4.7.200)' can't be established.

ECDSA key fingerprint is SHA256:US6HtfZm0h8tdhCJ10iguOgs4d8TanHM47U1bgFA/YU.

ECDSA key fingerprint is MD5:79:a1:9e:8a:6f:19:18:68:15:d7:d2:6e:d0:4a:22:3c.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'hdss7-200' (ECDSA) to the list of known hosts.

dashboard.od.com.crt 100% 1196 455.6KB/s 00:00

[root@hdss7-11 certs]# ll

总用量 4

-rw-r--r--. 1 root root 1196 8月 30 23:07 dashboard.od.com.crt

[root@hdss7-11 certs]# scp hdss7-200:/opt/certs/dashboard.od.com.key .

dashboard.od.com.key 100% 1679 876.9KB/s 00:00

[root@hdss7-11 certs]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@hdss7-11 certs]# nginx -s reload

[root@hdss7-11 certs]#
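Before reloading nginx it can be worth confirming that the copied certificate and key belong together. One common check for RSA keys compares the modulus of both files; the function name below is an assumption:

```shell
# cert_matches_key CERT KEY
# Succeeds when the RSA modulus of CERT equals that of KEY.
cert_matches_key() {
  local cert_mod key_mod
  cert_mod="$(openssl x509 -noout -modulus -in "$1")" || return 1
  key_mod="$(openssl rsa -noout -modulus -in "$2")" || return 1
  [ -n "$cert_mod" ] && [ "$cert_mod" = "$key_mod" ]
}

# Usage (in /etc/nginx/certs):
#   cert_matches_key dashboard.od.com.crt dashboard.od.com.key && nginx -t && nginx -s reload
```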

When you open the login page now, you have to choose "Advanced" in the browser to continue.

[root@hdss7-21 ~]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

coredns-token-hmhct kubernetes.io/service-account-token 3 31h

default-token-465c2 kubernetes.io/service-account-token 3 34h

heapster-token-847sh kubernetes.io/service-account-token 3 93m

kubernetes-dashboard-admin-token-lx67x kubernetes.io/service-account-token 3 25h

kubernetes-dashboard-key-holder Opaque 2 25h

traefik-ingress-controller-token-zn4hh kubernetes.io/service-account-token 3 28h

[root@hdss7-21 ~]#

You can log in using the method below:

[root@hdss7-21 ~]# kubectl describe secret kubernetes-dashboard-admin-token-lx67x -n kube-system
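`kubectl describe secret` prints the token together with the other fields of the secret. To get only the token for pasting into the dashboard login form, the secret can be read with a JSONPath query and base64-decoded. A sketch; the function name is an assumption:

```shell
# dashboard_token SECRET_NAME
# Prints the decoded service-account token stored in SECRET_NAME (kube-system).
dashboard_token() {
  kubectl get secret "$1" -n kube-system -o jsonpath='{.data.token}' | base64 -d
}

# Usage: dashboard_token kubernetes-dashboard-admin-token-lx67x
```

The decoding step is needed because `kubectl get` returns the field as stored, base64-encoded, whereas `describe` shows it already decoded.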

Hands-on: delivering dubbo services to the k8s cluster

First, repeat the setup on hdss7-12 so that it serves the dashboard as well:

[root@hdss7-12 ~]#

[root@hdss7-12 ~]# cd /etc/nginx/

[root@hdss7-12 nginx]# mkdir certs

[root@hdss7-12 nginx]# cd certs/

[root@hdss7-12 certs]# ll

total 0

[root@hdss7-12 certs]# scp hdss7-200:/opt/certs/od.com.pem .

root@hdss7-200's password:

scp: /opt/certs/od.com.pem: No such file or directory

[root@hdss7-12 certs]# scp hdss7-200:/opt/certs/dashboard.od.com.crt .

root@hdss7-200's password:

dashboard.od.com.crt 100% 1196 959.6KB/s 00:00

[root@hdss7-12 certs]# scp hdss7-200:/opt/certs/dashboard.od.com.key .

root@hdss7-200's password:

dashboard.od.com.key 100% 1679 1.6MB/s 00:00

[root@hdss7-12 certs]# cd ../conf.d/

[root@hdss7-12 conf.d]# vi dashboard.com.conf

[root@hdss7-12 conf.d]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@hdss7-12 conf.d]# nginx -s reload

[root@hdss7-12 conf.d]# cat dashboard.com.conf

server {
    listen       80;
    server_name  dashboard.od.com;

    rewrite ^(.*)$ https://${server_name}$1 permanent;
}
server {
    listen       443 ssl;
    server_name  dashboard.od.com;

    ssl_certificate "certs/dashboard.od.com.crt";
    ssl_certificate_key "certs/dashboard.od.com.key";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout  10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host       $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

[root@hdss7-12 conf.d]#

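A simple way to work with replica sets

You can see that port 8443 is balanced with the nq (never queue) scheduler, distributing requests across the two nodes: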

[root@hdss7-21 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.0.1:443 nq

-> 10.4.7.21:6443 Masq 1 1 0

-> 10.4.7.22:6443 Masq 1 0 0

TCP 192.168.0.2:53 nq

-> 172.7.21.4:53 Masq 1 0 0

TCP 192.168.0.2:9153 nq

-> 172.7.21.4:9153 Masq 1 0 0

TCP 192.168.37.169:443 nq

-> 172.7.22.4:8443 Masq 1 0 0

TCP 192.168.159.79:80 nq

-> 172.7.21.3:80 Masq 1 0 0

TCP 192.168.217.68:80 nq

-> 172.7.22.5:8082 Masq 1 0 0

TCP 192.168.225.63:80 nq

-> 172.7.21.5:80 Masq 1 0 0

-> 172.7.22.3:80 Masq 1 0 0

TCP 192.168.225.63:8080 nq

-> 172.7.21.5:8080 Masq 1 0 0

-> 172.7.22.3:8080 Masq 1 0 0

UDP 192.168.0.2:53 nq

-> 172.7.21.4:53 Masq 1 0 0

[root@hdss7-21 ~]#
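To see at a glance how many real servers sit behind each virtual service in the `ipvsadm -Ln` listing, the output can be summarised with awk. A sketch; the function name is an assumption:

```shell
# count_backends
# Reads `ipvsadm -Ln` output on stdin and prints one line per virtual
# service: protocol, address:port, and the number of real servers behind it.
count_backends() {
  awk '
    $1 == "TCP" || $1 == "UDP" {
      svc = $1 " " $2
      order[++n] = svc
      count[svc] = 0
      next
    }
    # "->" lines are real servers; the column header also starts with "->".
    $1 == "->" && $2 != "RemoteAddress:Port" && svc != "" { count[svc]++ }
    END { for (i = 1; i <= n; i++) print order[i], count[order[i]] }
  '
}

# Usage: ipvsadm -Ln | count_backends
```

A service with a count of 1 has a single pod behind it, so scaling its Deployment should raise the number shown here.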

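Delivering Dubbo microservices to k8s

What is Dubbo

· Dubbo is the core framework of Alibaba's SOA service governance solution. It supports 3,000,000,000+ requests per day across 2,000+ services and is widely used on the sites of Alibaba Group's member companies.

· Dubbo is a distributed service framework that aims to provide high-performance, transparent RPC remote service invocation together with an SOA service governance solution.

· Put simply, Dubbo is a service framework. Without distributed requirements there is no need for it; only a distributed system calls for a distributed service framework like Dubbo. At its core it is about service invocation: a distributed framework for remote service calls.

What Dubbo can do

· Transparent remote method invocation: remote methods are called just like local ones, with only simple configuration and no API intrusion.

· Soft load balancing and fault tolerance: on an internal network it can replace hardware load balancers such as F5, lowering cost and reducing single points of failure.

· Automatic service registration and discovery: provider addresses no longer need to be hard-coded. The registry looks up provider IP addresses by interface name, and providers can be added or removed smoothly.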

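Both consumers and providers have to register with the registry.

The consumer calls the provider over the RPC protocol.

The web tier may call different providers in the backend.

The diagram above is an ops architecture diagram.

Stateful services should be delivered outside the cluster wherever possible.

Stateless service: a service that holds no particular state. The server handles every request uniformly, and each request carries all the parameters the server needs (the server itself stores no request-related data; data kept in a database does not count).

Stateful service: the opposite. The server retains information from earlier requests and uses it to handle the current one, for example sessions.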