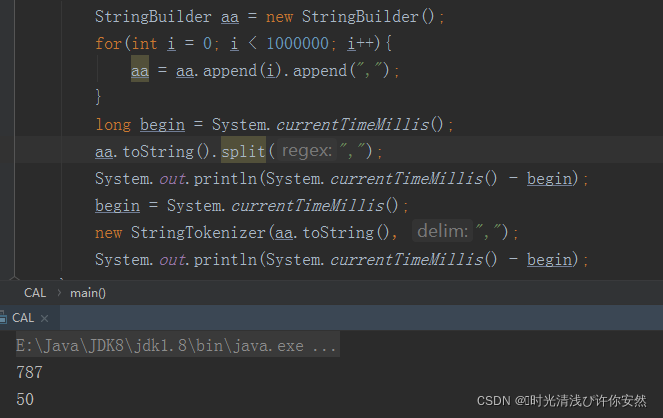

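1. Splitting strings with split

When the input is very large, StringTokenizer can be used instead of String.split.

In the test below, the two snippets differ in execution time by roughly 15x.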

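    // Requires java.util.StringTokenizer.
    // Build one large comma-separated string: "0,1,2,...,999999,"
    StringBuilder aa = new StringBuilder();
    for (int i = 0; i < 1000000; i++) {
        aa.append(i).append(",");
    }

    // Time String.split, which eagerly builds the full String[] result
    long begin = System.currentTimeMillis();
    aa.toString().split(",");
    System.out.println(System.currentTimeMillis() - begin);

    // Time StringTokenizer. Note: this measures only the constructor;
    // tokens are produced lazily, one per nextToken() call.
    begin = System.currentTimeMillis();
    new StringTokenizer(aa.toString(), ",");
    System.out.println(System.currentTimeMillis() - begin);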

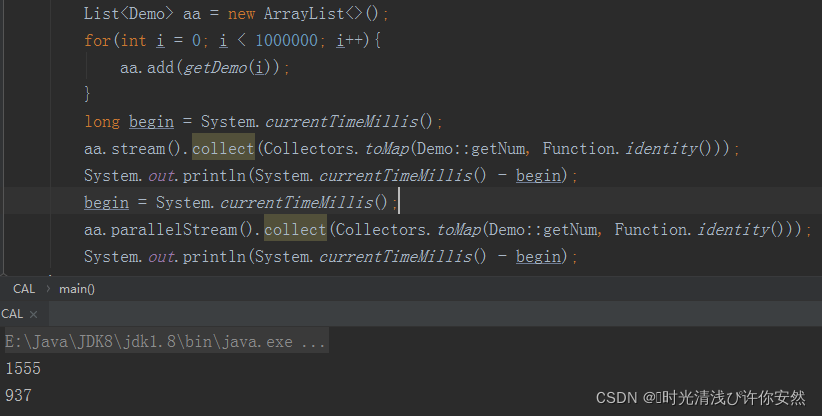
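The snippet above only constructs the StringTokenizer and never reads a token from it, so a comparison that actually walks every token may look different. The following is a minimal sketch of such a comparison, not a rigorous benchmark: the class name `SplitVsTokenizer` and its helper methods are hypothetical names introduced here for illustration, and single-shot timings like these are sensitive to JIT warm-up.

```java
import java.util.StringTokenizer;

// Hypothetical helper class, introduced only for this illustration.
class SplitVsTokenizer {

    // Builds "0,1,2,...,(n-1)," as one string.
    static String buildInput(int n) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < n; i++) {
            sb.append(i).append(',');
        }
        return sb.toString();
    }

    // Counts tokens using String.split (trailing empty strings are dropped).
    static int countWithSplit(String input) {
        return input.split(",").length;
    }

    // Counts tokens by consuming every token from a StringTokenizer.
    static int countWithTokenizer(String input) {
        StringTokenizer tokenizer = new StringTokenizer(input, ",");
        int count = 0;
        while (tokenizer.hasMoreTokens()) {
            tokenizer.nextToken();
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        String input = buildInput(1_000_000);

        long begin = System.nanoTime();
        int viaSplit = countWithSplit(input);
        long splitMillis = (System.nanoTime() - begin) / 1_000_000;

        begin = System.nanoTime();
        int viaTokenizer = countWithTokenizer(input);
        long tokenizerMillis = (System.nanoTime() - begin) / 1_000_000;

        System.out.println("split:           " + viaSplit + " tokens, " + splitMillis + " ms");
        System.out.println("StringTokenizer: " + viaTokenizer + " tokens, " + tokenizerMillis + " ms");
    }
}
```

Both methods should report the same token count for this input, which makes it easier to trust that the two timings cover the same amount of work.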

2. For a map that does not need ordering, use parallelStream

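    // Requires java.util.*, java.util.function.Function, java.util.stream.Collectors.
    // Demo and getDemo(int) are assumed to be defined elsewhere; Demo exposes getNum().
    List<Demo> aa = new ArrayList<>();
    for (int i = 0; i < 10000000; i++) {
        aa.add(getDemo(i));
    }

    // Time the sequential stream
    long begin = System.currentTimeMillis();
    aa.stream().collect(Collectors.toMap(Demo::getNum, Function.identity()));
    System.out.println(System.currentTimeMillis() - begin);

    // Time the parallel stream (keys must be unique, or toMap throws IllegalStateException)
    begin = System.currentTimeMillis();
    aa.parallelStream().collect(Collectors.toMap(Demo::getNum, Function.identity()));
    System.out.println(System.currentTimeMillis() - begin);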
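Since the original does not show `Demo` or `getDemo`, here is a self-contained sketch of the same comparison. The `Demo` class below is a hypothetical stand-in with a single `num` field, and `ParallelToMapSketch` and its methods are names made up for this example. It also tries `Collectors.toConcurrentMap`, a standard collector that lets parallel threads write into one shared concurrent map instead of merging per-thread maps; whether that is faster here is an assumption worth measuring rather than a given.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

// Hypothetical class, introduced only for this illustration.
class ParallelToMapSketch {

    // Hypothetical stand-in for the Demo class used in the text.
    static final class Demo {
        private final int num;
        Demo(int num) { this.num = num; }
        int getNum() { return num; }
    }

    // Creates n Demo objects with unique keys 0..n-1.
    static List<Demo> buildDemos(int n) {
        List<Demo> demos = new ArrayList<>(n);
        for (int i = 0; i < n; i++) {
            demos.add(new Demo(i));
        }
        return demos;
    }

    static Map<Integer, Demo> sequential(List<Demo> demos) {
        return demos.stream()
                .collect(Collectors.toMap(Demo::getNum, Function.identity()));
    }

    static Map<Integer, Demo> parallel(List<Demo> demos) {
        return demos.parallelStream()
                .collect(Collectors.toMap(Demo::getNum, Function.identity()));
    }

    // Variant that accumulates into a single shared ConcurrentMap.
    static Map<Integer, Demo> parallelConcurrent(List<Demo> demos) {
        return demos.parallelStream()
                .collect(Collectors.toConcurrentMap(Demo::getNum, Function.identity()));
    }

    public static void main(String[] args) {
        List<Demo> demos = buildDemos(1_000_000);

        long begin = System.nanoTime();
        sequential(demos);
        System.out.println("stream + toMap:                   " + (System.nanoTime() - begin) / 1_000_000 + " ms");

        begin = System.nanoTime();
        parallel(demos);
        System.out.println("parallelStream + toMap:           " + (System.nanoTime() - begin) / 1_000_000 + " ms");

        begin = System.nanoTime();
        parallelConcurrent(demos);
        System.out.println("parallelStream + toConcurrentMap: " + (System.nanoTime() - begin) / 1_000_000 + " ms");
    }
}
```

All three variants produce a map with the same keys, so the choice between them comes down to measured speed on the target machine and data size.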