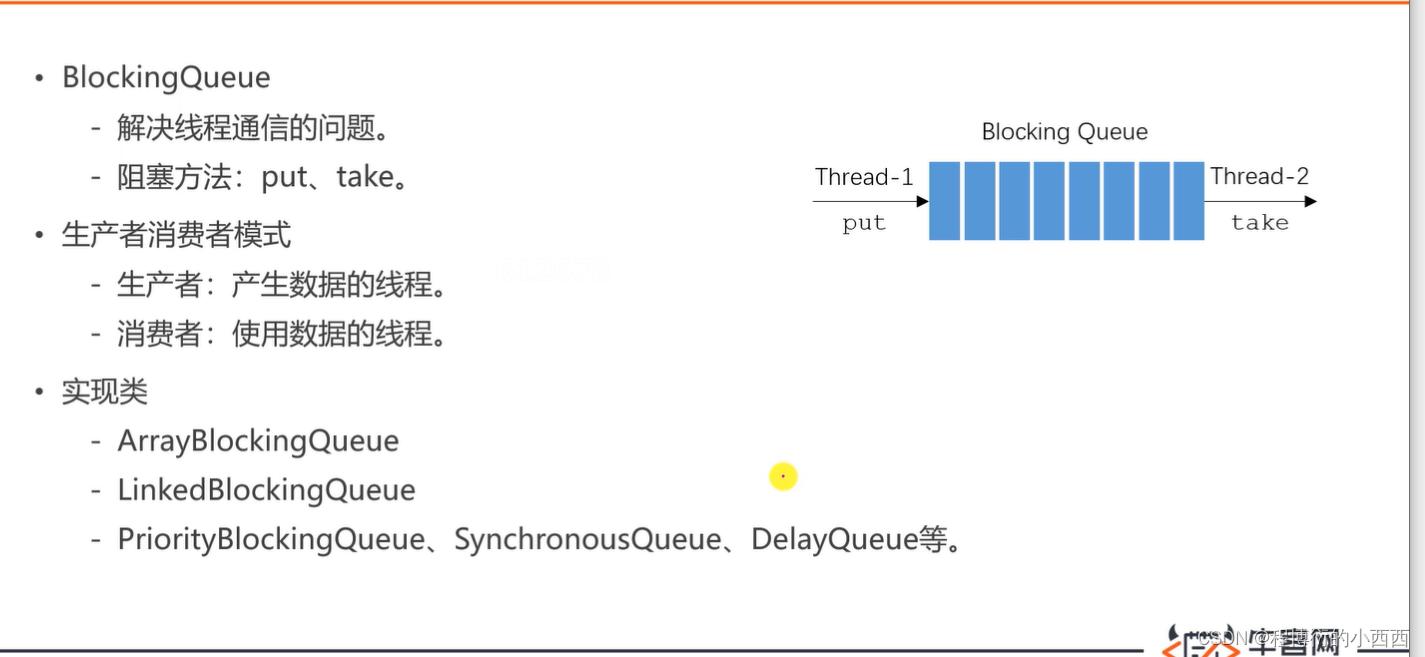

1. Blocking queues (the BlockingQueue interface)

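A blocking queue coordinates producers and consumers. A producer that finds the queue full, or a consumer that finds it empty, is suspended until it can proceed, so neither side wastes CPU cycles polling.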
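To make the blocking behaviour concrete, here is a minimal sketch that contrasts the non-blocking `offer()`/`poll()` calls with the blocking `put()`. The class name, the capacities, and the 300 ms delay are illustrative choices, not part of the original notes.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class BlockingQueueBasics {

    // offer() never blocks: on a full queue it returns false right away.
    static boolean offerWhenFull() {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2);
        queue.offer(1);
        queue.offer(2);
        return queue.offer(3);
    }

    // poll() never blocks either: on an empty queue it returns null.
    static Integer pollWhenEmpty() {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2);
        return queue.poll();
    }

    // put() blocks on a full queue until another thread frees a slot.
    // Returns how long the second put() had to wait, in milliseconds.
    static long putWaitMillis() {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(1);
        Thread consumer = new Thread(() -> {
            try {
                Thread.sleep(300);   // arbitrary delay before freeing the slot
                queue.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        try {
            queue.put(1);            // fills the only slot
            consumer.start();
            long start = System.nanoTime();
            queue.put(2);            // blocks until the consumer calls take()
            long waited = (System.nanoTime() - start) / 1_000_000;
            consumer.join();
            return waited;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(offerWhenFull());   // false
        System.out.println(pollWhenEmpty());   // null
        System.out.println(putWaitMillis() + " ms spent blocked in put()");
    }
}
```

While `put()` is blocked the calling thread is parked rather than spinning, which is where the CPU saving comes from.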

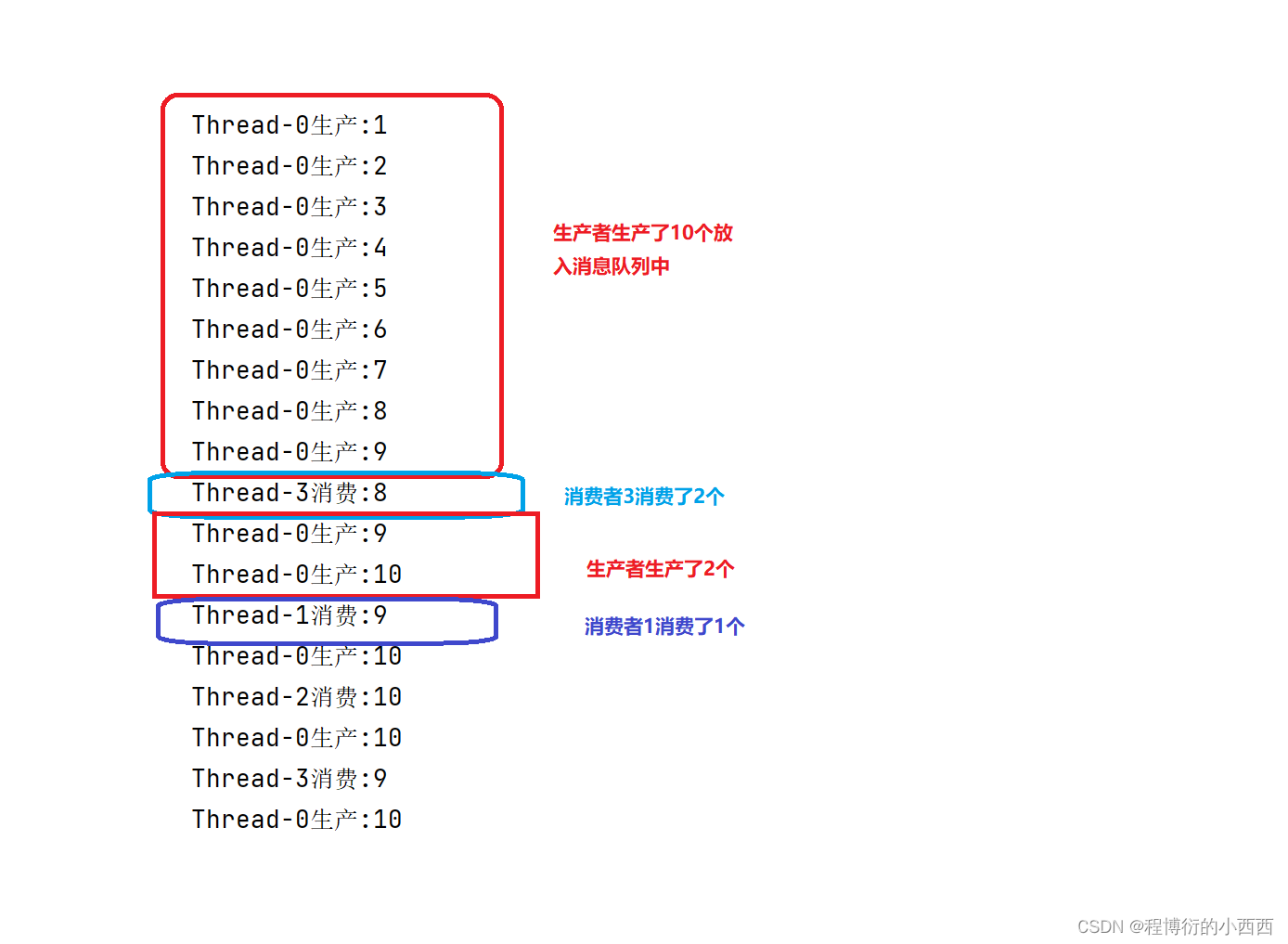

An ArrayBlockingQueue example

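import java.util.Random;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class BlockingQueueTest {

    public static void main(String[] args) {
        // The blocking queue holds at most 10 elements
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(10);
        // One producer and three consumers run concurrently
        new Thread(new Producer(queue)).start();
        new Thread(new Consumer(queue)).start();
        new Thread(new Consumer(queue)).start();
        new Thread(new Consumer(queue)).start();
    }
}

class Producer implements Runnable {

    private final BlockingQueue<Integer> queue;

    public Producer(BlockingQueue<Integer> queue) {
        this.queue = queue;
    }

    @Override
    public void run() {
        try {
            for (int i = 0; i < 100; i++) {
                Thread.sleep(20);
                // Hand the item to the queue; put() blocks while the queue is full
                queue.put(i);
                System.out.println(Thread.currentThread().getName() + " produced, queue size: " + queue.size());
            }
        } catch (InterruptedException e) {
            // Restore the interrupt flag and let the thread end
            Thread.currentThread().interrupt();
        }
    }
}

class Consumer implements Runnable {

    private final BlockingQueue<Integer> queue;
    private final Random random = new Random();

    public Consumer(BlockingQueue<Integer> queue) {
        this.queue = queue;
    }

    @Override
    public void run() {
        try {
            while (true) {
                // Users consume at unpredictable intervals, so sleep for a random time
                Thread.sleep(random.nextInt(1000));
                // Take an item from the queue; take() blocks while the queue is empty
                queue.take();
                System.out.println(Thread.currentThread().getName() + " consumed, queue size: " + queue.size());
            }
        } catch (InterruptedException e) {
            // Restore the interrupt flag and let the thread end
            Thread.currentThread().interrupt();
        }
    }
}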

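In the end the producer stops producing after its 100 items. The consumers drain the queue and then block in take(), so the output stops. The JVM itself does not exit, because the three consumer threads are still alive and waiting.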
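One way to let the consumers finish instead of waiting forever is to replace `take()` with a timed `poll()`. The sketch below is an assumption about how that could look, not the original example: the class name, the 500 ms timeout, and the rule "no item within the timeout means the producer is done" are all illustrative.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class TimedPollDemo {

    // Runs one producer and three consumers; returns how many items were consumed.
    static int run(int itemCount) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(10);
        AtomicInteger consumed = new AtomicInteger();

        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < itemCount; i++) {
                    queue.put(i); // blocks while the queue is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Runnable consumerTask = () -> {
            try {
                // poll() returns null if nothing arrives within the timeout,
                // which this sketch treats as "the producer has finished".
                while (queue.poll(500, TimeUnit.MILLISECONDS) != null) {
                    consumed.incrementAndGet();
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };

        Thread[] consumers = new Thread[3];
        for (int i = 0; i < consumers.length; i++) {
            consumers[i] = new Thread(consumerTask);
            consumers[i].start();
        }
        producer.start();

        try {
            producer.join();
            for (Thread consumer : consumers) {
                consumer.join(); // returns once that consumer has timed out
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return consumed.get();
    }

    public static void main(String[] args) {
        System.out.println("consumed: " + run(100));
    }
}
```

The timeout is a heuristic: a producer that pauses for longer than 500 ms would cause consumers to quit early. A sentinel ("poison pill") element placed on the queue by the producer is a common alternative when that matters.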

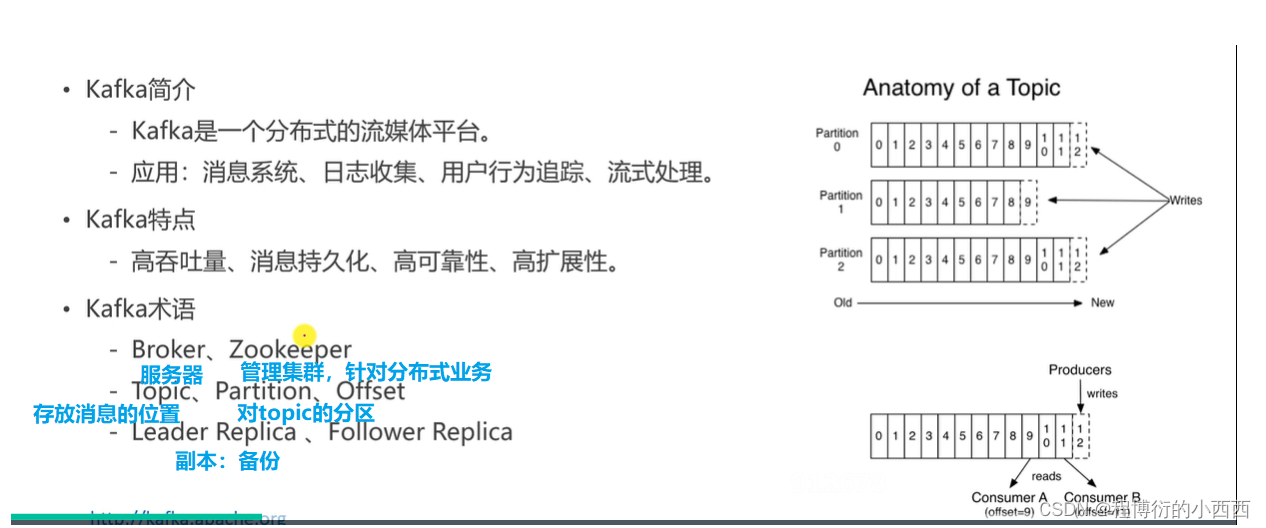

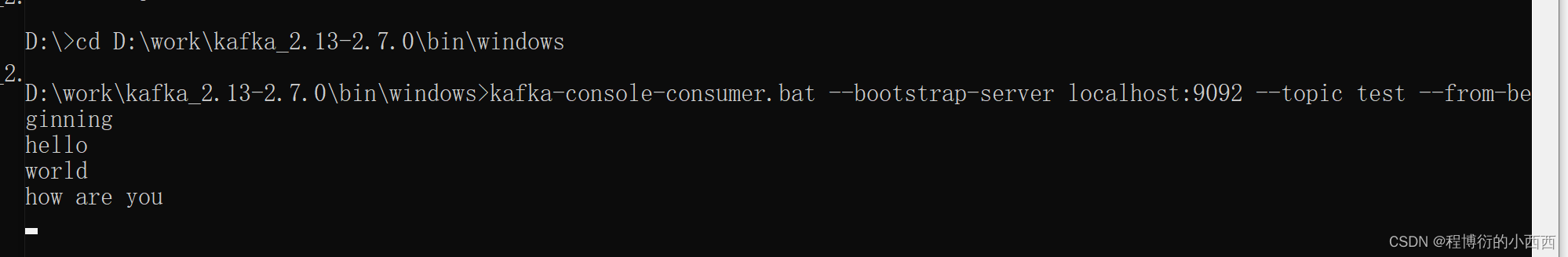

2. Getting started with Kafka and installing it (kafka_2.13-2.7.0)

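Key feature: Kafka stores messages on disk rather than in memory, which lets it handle massive volumes of data. It stays fast because it reads and writes the disk sequentially.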
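The idea of sequential disk access can be illustrated with a toy append-only log. This is only a sketch of the general technique, not Kafka's actual storage format: the class name, the length-prefixed record layout, and the temporary file are all assumptions made for the illustration.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

class AppendOnlyLogSketch {

    // Appends one length-prefixed record at the end of the file.
    static void append(Path log, String message) throws IOException {
        byte[] payload = message.getBytes(StandardCharsets.UTF_8);
        try (RandomAccessFile file = new RandomAccessFile(log.toFile(), "rw")) {
            file.seek(file.length());   // always write at the tail, never in the middle
            file.writeInt(payload.length);
            file.write(payload);
        }
    }

    // Reads every record from the start of the file to the end, in order.
    static List<String> readAll(Path log) throws IOException {
        List<String> messages = new ArrayList<>();
        try (RandomAccessFile file = new RandomAccessFile(log.toFile(), "r")) {
            while (file.getFilePointer() < file.length()) {
                byte[] payload = new byte[file.readInt()];
                file.readFully(payload);
                messages.add(new String(payload, StandardCharsets.UTF_8));
            }
        }
        return messages;
    }

    // Writes two messages to a temporary log file and reads them back.
    static List<String> demo() {
        try {
            Path log = Files.createTempFile("append-only", ".log");
            try {
                append(log, "hello");
                append(log, "is anyone there?");
                return readAll(log);
            } finally {
                Files.deleteIfExists(log);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Because writes only ever go to the tail and reads scan forward, the disk head (or the OS read-ahead cache) is never asked to jump around, which is the access pattern that disks serve fastest.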

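Important note on using Kafka

Symptom: when Kafka is started from a Windows command prompt, closing the console window forcibly kills Kafka. This abrupt shutdown often leaves Kafka unable to release the lock on its log files, and the next startup then fails with an error saying the log files are locked.

Fix: delete all of Kafka's log files and start it again. Note that Kafka's log files are its message data, so any stored messages are lost.

Recommendation: do not kill Kafka abruptly. Shut it down by running the kafka-server-stop command from the command line.

Other: once Kafka is deployed on Linux and run in the background, this problem goes away.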

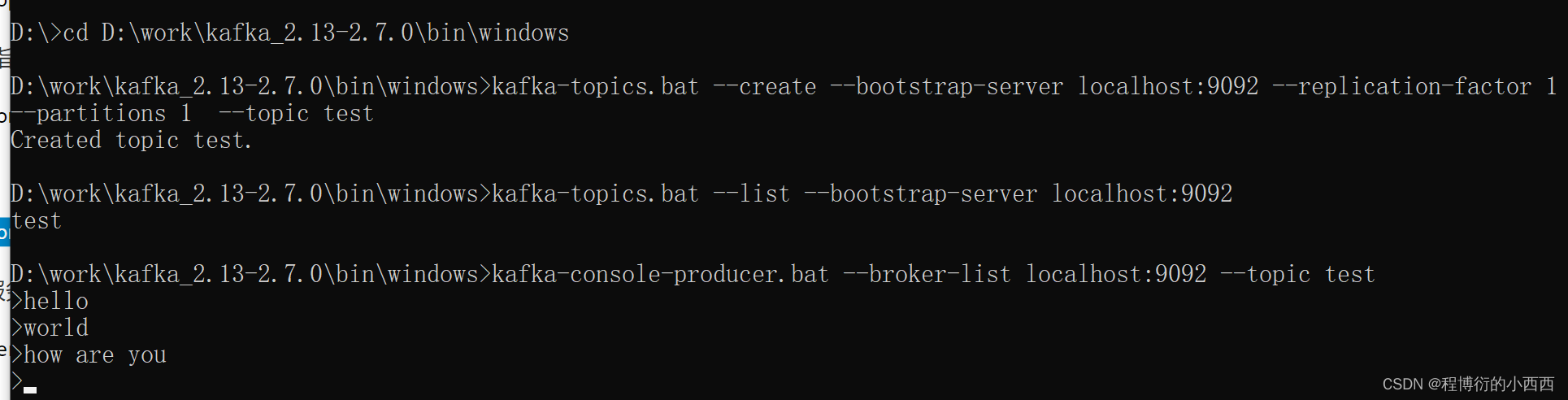

Start ZooKeeper

D:\work\kafka_2.12-2.3.0>bin\windows\zookeeper-server-start.bat config\zookeeper.properties

Start Kafka

D:\work\kafka_2.12-2.3.0>bin\windows\kafka-server-start.bat config\server.properties

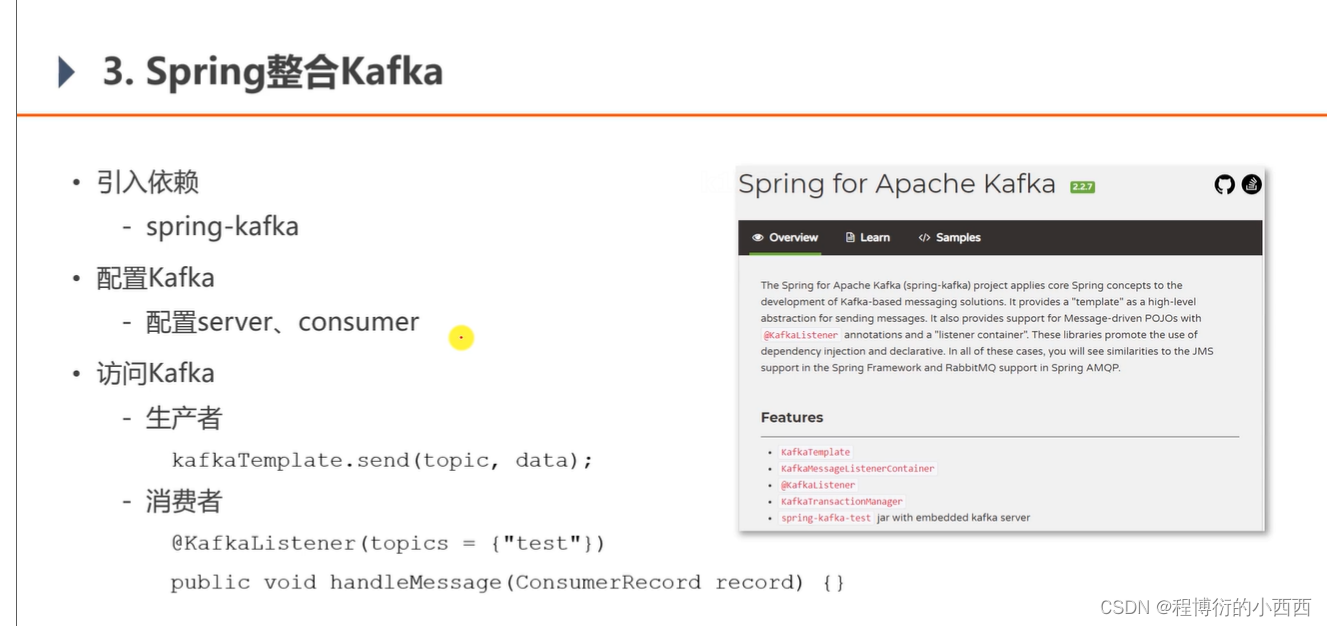

3. Integrating Kafka with Spring

Test case: we create a producer that sends two messages. A consumer listens on the test topic and prints every message it receives.

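import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.junit.Test;
import org.junit.runner.RunWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Component;
import org.springframework.test.context.ContextConfiguration;
import org.springframework.test.context.junit4.SpringRunner;

@RunWith(SpringRunner.class)
@SpringBootTest
@ContextConfiguration(classes = CommunityApplication.class)
public class KafkaTest {

    @Autowired
    private KafkaProducer kafkaProducer;

    @Test
    public void testKafka() {
        kafkaProducer.sendMessage("test", "你好");
        kafkaProducer.sendMessage("test", "在吗");

        // Keep the test alive for 10 seconds so the listener has time
        // to receive and print the messages before the context shuts down
        try {
            Thread.sleep(1000 * 10);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}

@Component
class KafkaProducer {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    // Sends the content as a message to the given topic
    public void sendMessage(String topic, String content) {
        kafkaTemplate.send(topic, content);
    }
}

@Component
class KafkaConsumer {

    // Once the application starts, Spring listens on the "test" topic automatically
    @KafkaListener(topics = {"test"})
    public void handleMessage(ConsumerRecord<String, String> record) {
        System.out.println(record.value());
    }
}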
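The test relies on Spring Boot's Kafka auto-configuration, which reads its connection settings from application.properties. The notes do not show that file, so the fragment below is only a sketch under assumptions: a single local broker on Kafka's default port 9092 and a hypothetical consumer group name.

```properties
# Assumed: one local broker on Kafka's default port
spring.kafka.bootstrap-servers=localhost:9092
# Hypothetical group name; @KafkaListener needs a group id from here or from the annotation
spring.kafka.consumer.group-id=test-consumer-group
# Assumed choice: a new consumer group starts reading from the earliest available offset
spring.kafka.consumer.auto-offset-reset=earliest
```

With settings like these in place, the listener in KafkaConsumer joins the group when the application context starts and prints the two messages sent by the test.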