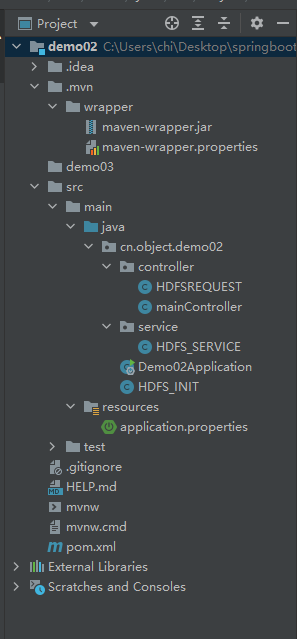

A Spring Boot project built with Maven. The project directory is shown below:

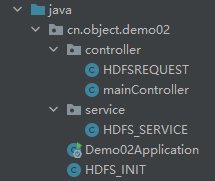

The source files are as follows:

HDFSREQUEST

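```java
package cn.object.demo02.controller;

import lombok.Data;
import lombok.AllArgsConstructor;
import lombok.NoArgsConstructor;
import org.springframework.stereotype.Component;

// Request body shared by all /hdfs endpoints (bound from JSON via @RequestBody)
@Data
//@AllArgsConstructor
//@NoArgsConstructor
@Component
public class HDFSREQUEST {
    private String source;      // source path (local for /upload, HDFS for /download)
    private String destination; // target path (HDFS for /upload, /mkdir, /delete; local for /download)
    private String content;     // text written into the new file by /mkdir
}
```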

mainController

```java
package cn.object.demo02.controller;

import cn.object.demo02.service.HDFS_SERVICE;
import org.springframework.web.bind.annotation.*;

import java.io.IOException;
import java.util.List;

// RESTful-style API; every endpoint lives under /hdfs
@RestController
@RequestMapping(value = "/hdfs")
public class mainController {

    // GET /hdfs: simple liveness check
    @GetMapping
    public String get() {
        System.out.println("Springboot is running");
        return "Springboot and hdfs is running";
    }

    // DELETE /hdfs/delete: delete the HDFS path given in "destination"
    @RequestMapping(value = "/delete", method = RequestMethod.DELETE)
    public String delete(@RequestBody HDFSREQUEST hdfsrequest) throws Exception {
        System.out.println(hdfsrequest.getDestination());
        boolean isOk = HDFS_SERVICE.deleteFile(hdfsrequest);
        return isOk ? "done" : "failure";
    }

    // PUT /hdfs/download: copy the HDFS path "source" to the server's local path "destination"
    @RequestMapping(value = "/download", method = RequestMethod.PUT)
    public String download(@RequestBody HDFSREQUEST hdfsrequest) throws Exception {
        System.out.println(hdfsrequest.getSource() + "\n" + hdfsrequest.getDestination());
        HDFS_SERVICE.copyToLocalFile(hdfsrequest);
        return "done";
    }

    // POST /hdfs/upload: copy the server's local path "source" to the HDFS path "destination"
    @RequestMapping(value = "/upload", method = RequestMethod.POST)
    public String upload(@RequestBody HDFSREQUEST hdfsrequest) throws Exception {
        System.out.println(hdfsrequest.getSource() + "\n" + hdfsrequest.getDestination());
        HDFS_SERVICE.copyFromLocalFile(hdfsrequest);
        return "done";
    }

    // POST /hdfs/mkdir: create the HDFS file "destination" and write "content" into it
    @RequestMapping(value = "/mkdir", method = RequestMethod.POST)
    public String mkdir(@RequestBody HDFSREQUEST hdfsrequest) throws Exception {
        System.out.println(hdfsrequest.getDestination());
        HDFS_SERVICE.createFile(hdfsrequest);
        return "done";
    }

    // GET /hdfs/getRootDirectory: list every path under the HDFS root
    @RequestMapping(value = "/getRootDirectory", method = RequestMethod.GET)
    public List<String> getDirectory() throws IOException {
        return HDFS_SERVICE.showRoot();
    }

    // DELETE /hdfs/{str}: echo the path variable back (test endpoint)
    @RequestMapping(value = "/{str}", method = RequestMethod.DELETE)
    public String delete(@PathVariable String str) {
        System.out.println(str);
        return str;
    }
}
```

HDFS_SERVICE

```java
package cn.object.demo02.service;

import cn.object.demo02.controller.HDFSREQUEST;
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.Path;
import org.springframework.stereotype.Service;

import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.List;

import static cn.object.demo02.HDFS_INIT.hdfs;
import static cn.object.demo02.HDFS_INIT.hdfsDirectories;

@Data
@AllArgsConstructor
@NoArgsConstructor
@Service
public class HDFS_SERVICE {

    private String source;
    private String destination;

    HDFS_SERVICE(HDFSREQUEST hdfsrequest) {
        source = hdfsrequest.getSource();
        destination = hdfsrequest.getDestination();
    }

    // Create (or overwrite) the file "destination" and write "content" into it.
    // Missing parent directories are created automatically by hdfs.create().
    public static void createFile(HDFSREQUEST hdfsrequest) throws Exception {
        try (FSDataOutputStream outputStream = hdfs.create(new Path(hdfsrequest.getDestination()))) {
            outputStream.write(hdfsrequest.getContent().getBytes(StandardCharsets.UTF_8));
        } // the stream is closed even if write() throws
        System.out.println("mkdir is done!");
    }

    // Delete "destination" immediately (recursively if it is a directory).
    // hdfs.deleteOnExit(path) would only mark the path; it is removed when the FileSystem closes.
    public static boolean deleteFile(HDFSREQUEST hdfsrequest) throws Exception {
        Path path = new Path(hdfsrequest.getDestination());
        boolean isok = hdfs.delete(path, true);
        if (isok) {
            System.out.println(hdfsrequest.getDestination() + " deleted successfully!");
        } else {
            System.out.println("Delete failed!");
        }
        return isok;
    }

    // Collect every path under the HDFS root into hdfsDirectories.
    public static List<String> showRoot() throws IOException {
        hdfsDirectories.clear();
        FileStatus fs = hdfs.getFileStatus(new Path("/")); // resolved against fs.defaultFS
        showDir(fs);
        return hdfsDirectories;
    }

    // Depth-first traversal: record this path, then recurse into each child.
    private static void showDir(FileStatus fileStatus) throws IOException {
        Path path = fileStatus.getPath();
        hdfsDirectories.add(path.toString());
        if (fileStatus.isDirectory()) {
            for (FileStatus file : hdfs.listStatus(path)) {
                showDir(file);
            }
        }
    }

    // Upload: copy a local file/directory (on the server running Spring Boot) into HDFS.
    public static void copyFromLocalFile(HDFSREQUEST hdfsrequest) throws Exception {
        Path src = new Path(hdfsrequest.getSource());      // local file/directory
        Path dst = new Path(hdfsrequest.getDestination()); // target HDFS file/directory
        hdfs.copyFromLocalFile(src, dst);
        System.out.println("upload successful!");
    }

    // Download: copy an HDFS file/directory to the local file system of the server.
    public static void copyToLocalFile(HDFSREQUEST hdfsrequest) throws Exception {
        Path src = new Path(hdfsrequest.getSource());      // HDFS file/directory
        Path dst = new Path(hdfsrequest.getDestination()); // local file/directory
        // copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem):
        // delSrc = false keeps the HDFS copy; useRawLocalFileSystem = true skips the local .crc checksum files
        hdfs.copyToLocalFile(false, src, dst, true);
        System.out.println("Download successful!");
    }
}
```
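`showDir` appends to the static `hdfsDirectories` list, which every request shares, so two concurrent calls to `/hdfs/getRootDirectory` can clear or interleave each other's results. A minimal sketch of the alternative, passing a per-call accumulator down the recursion. It assumes a hypothetical in-memory `Node` class standing in for `FileStatus`, with `children` playing the role of `hdfs.listStatus(path)`; the class name `DirListing` is likewise illustrative.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical in-memory stand-in for HDFS (not Hadoop code):
// a Node plays the role of a FileStatus, children plays the role of hdfs.listStatus(path).
public class DirListing {

    static final class Node {
        final String path;
        final List<Node> children; // empty for a plain file

        Node(String path, Node... children) {
            this.path = path;
            this.children = Arrays.asList(children);
        }
    }

    // Same pre-order traversal as showDir, but the result list belongs to this call,
    // so concurrent callers cannot clear or interleave each other's output.
    public static List<String> listAll(Node root) {
        List<String> result = new ArrayList<>();
        collect(root, result);
        return result;
    }

    private static void collect(Node node, List<String> out) {
        out.add(node.path);               // record this path first
        for (Node child : node.children) {
            collect(child, out);          // then descend into each child
        }
    }

    public static Node sampleTree() {
        return new Node("/",
                new Node("/input",
                        new Node("/input/dir01")),
                new Node("/tmp"));
    }

    public static void main(String[] args) {
        System.out.println(listAll(sampleTree())); // [/, /input, /input/dir01, /tmp]
    }
}
```

In the real service, `collect` would call `hdfs.listStatus(path)` where this sketch reads `node.children`, and `showRoot` would return the fresh list instead of the shared static one.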

HDFS_INIT

```java
package cn.object.demo02;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

public class HDFS_INIT {

    // Shared FileSystem handle used by HDFS_SERVICE
    public static FileSystem hdfs;

    // Result buffer filled by HDFS_SERVICE.showRoot()
    public static List<String> hdfsDirectories = new ArrayList<>();

    public static void init() throws IOException {
        System.out.println("-----------hdfs-starting---------");
        // 1. Create the configuration object
        Configuration conf = new Configuration();
        // 2. Point the default file system at the NameNode
        conf.set("fs.defaultFS", "hdfs://centos01:9000");
        // 3. Obtain the FileSystem instance for that address
        hdfs = FileSystem.get(conf);
        System.out.println("------------hdfs-running--------");
    }
}
```

Demo02Application

```java
package cn.object.demo02;

import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

import java.io.IOException;

@SpringBootApplication
public class Demo02Application {

    public static void main(String[] args) throws IOException {
        // Connect to HDFS before the web server starts accepting requests
        HDFS_INIT.init();
        SpringApplication.run(Demo02Application.class, args);
    }
}
```

pom.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.6.4</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>cn.object</groupId>
    <artifactId>demo02</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>demo02</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>11</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!-- HDFS client API (Configuration, FileSystem, Path) -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.10.0</version>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

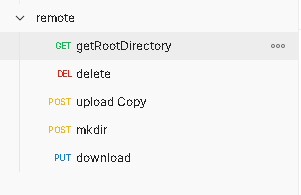

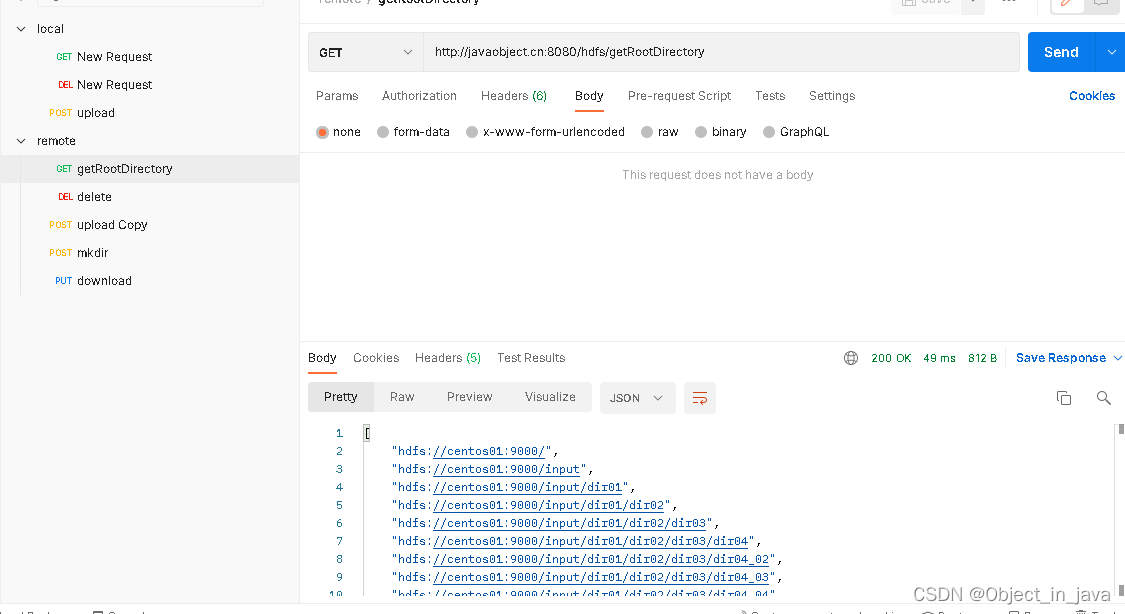

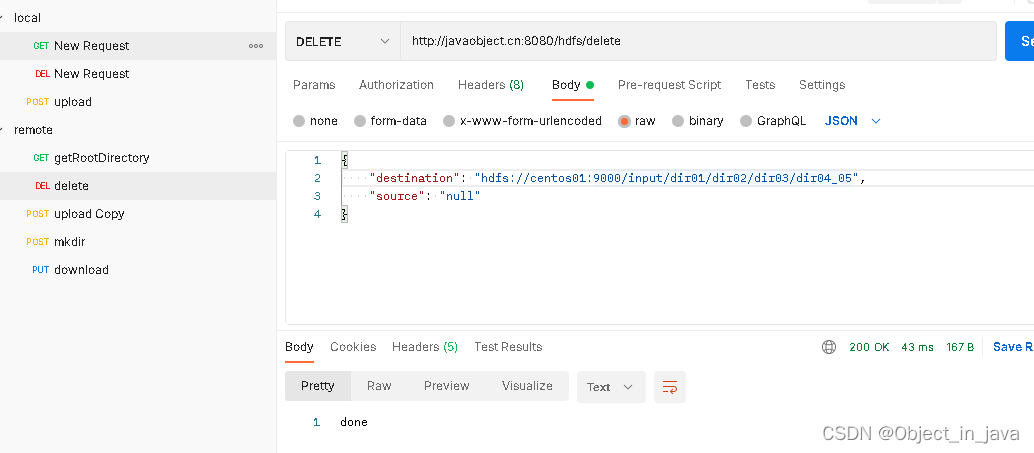

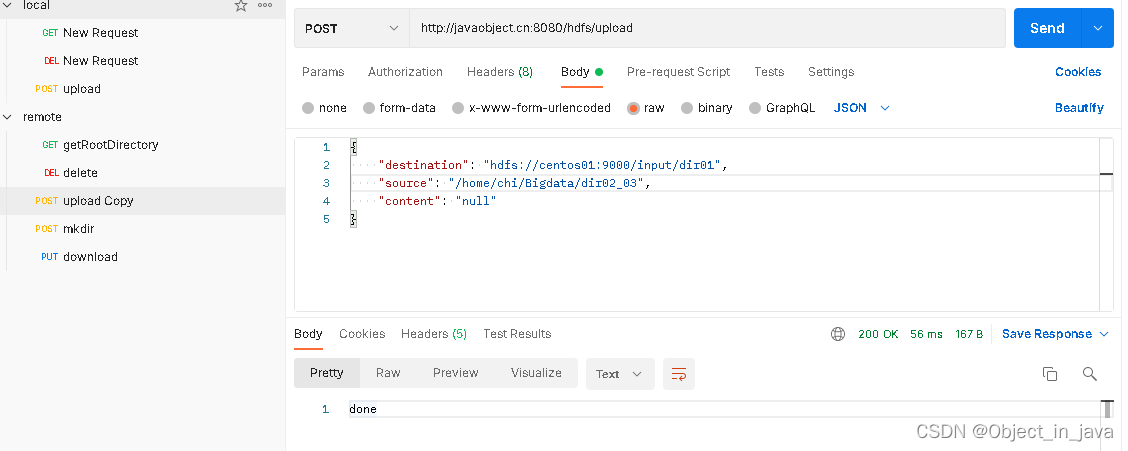

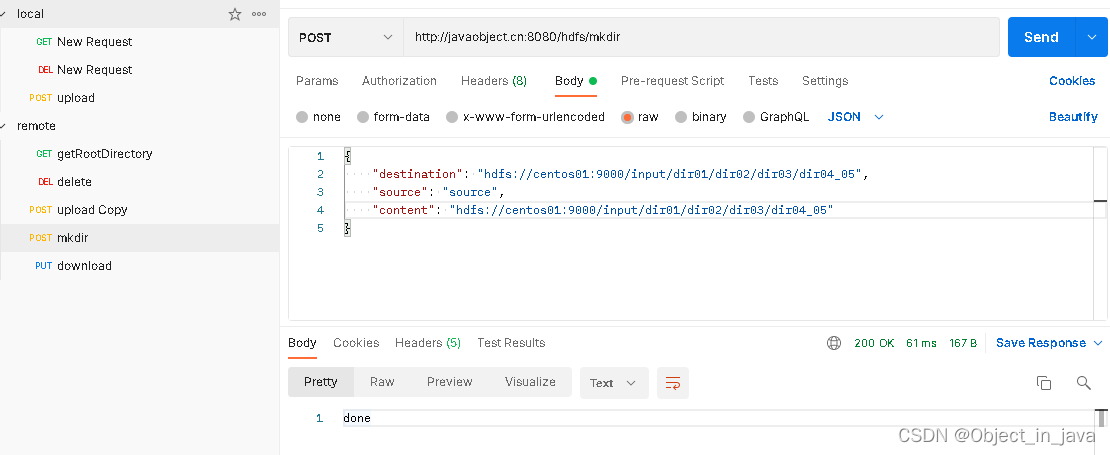

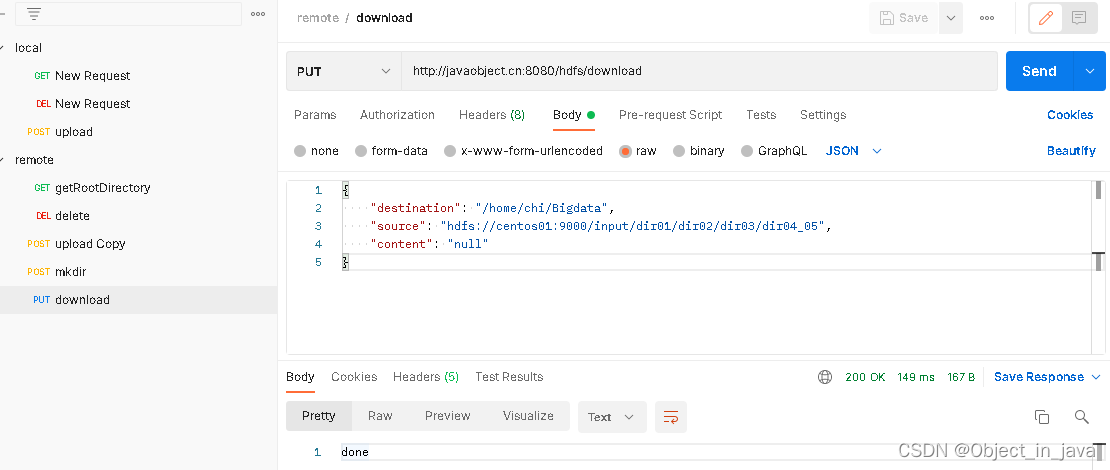

Postman tests:

JSON body:

```json
{
  "destination": "hdfs://centos01:9000/input/dir01/dir02/dir03/dir04_05",
  "source": "null",
  "content": "null"
}
```

JSON body:

```json
{
  "destination": "hdfs://centos01:9000/input/dir01",
  "source": "/home/chi/Bigdata/dir02_03",
  "content": "null"
}
```

JSON body:

```json
{
  "destination": "hdfs://centos01:9000/input/dir01/dir02/dir03/dir04_05",
  "source": "source",
  "content": "hdfs://centos01:9000/input/dir01/dir02/dir03/dir04_05"
}
```

JSON body:

```json
{
  "destination": "/home/chi/Bigdata",
  "source": "hdfs://centos01:9000/input/dir01/dir02/dir03/dir04_05",
  "content": "null"
}
```
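The Postman calls can also be scripted from Java. A minimal sketch using the JDK 11 `java.net.http` client (Java 11 matches `java.version` in the pom). The base URL assumes the jar runs on host `centos01` on Spring Boot's default port 8080, and the class and helper names (`HdfsApiClient`, `body`, `request`, `send`) are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Builds the same requests as the Postman tests.
// Assumption: the app is reachable at centos01 on Spring Boot's default port 8080.
public class HdfsApiClient {

    static final String BASE_URL = "http://centos01:8080/hdfs";

    // JSON body in the shape HDFSREQUEST expects (naive: values are not escaped)
    static String body(String source, String destination, String content) {
        return String.format("{\"source\":\"%s\",\"destination\":\"%s\",\"content\":\"%s\"}",
                source, destination, content);
    }

    // method is "POST", "PUT" or "DELETE"; Builder.method(...) also allows a body on DELETE
    static HttpRequest request(String method, String endpoint, String json) {
        return HttpRequest.newBuilder(URI.create(BASE_URL + endpoint))
                .header("Content-Type", "application/json")
                .method(method, HttpRequest.BodyPublishers.ofString(json))
                .build();
    }

    // Sends a request and returns the controller's reply ("done", "failure", ...)
    static String send(HttpRequest req) throws Exception {
        return HttpClient.newHttpClient()
                .send(req, HttpResponse.BodyHandlers.ofString())
                .body();
    }

    public static void main(String[] args) {
        HttpRequest upload = request("POST", "/upload",
                body("/home/chi/Bigdata/dir02_03", "hdfs://centos01:9000/input/dir01", "null"));
        System.out.println(upload.method() + " " + upload.uri()); // POST http://centos01:8080/hdfs/upload
        // With the server running: System.out.println(send(upload));
    }
}
```

Keep in mind that the local paths in `source` (upload) and `destination` (download) refer to the file system of the machine running Spring Boot, not the machine sending the request.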

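Notes:

1. The development environment is IntelliJ IDEA on Windows, and the deployment environment is Linux (CentOS). Xftp, Xshell and Postman were also used along the way. If you need the resources, feel free to send me a private message.

2. If `deleteFile` deletes with `hdfs.deleteOnExit(path)`, the file is not removed right away: after calling `/hdfs/delete` it still shows up through the GET endpoint (`/hdfs/getRootDirectory`) and in `hadoop fs -ls -R /`. It only disappears once the Spring Boot server stops; running the command after stopping the server, or calling the GET endpoint after restarting it, shows that the file is gone. The cause is that `FileSystem.deleteOnExit(Path)` only marks the path for deletion, and marked paths are deleted when that `FileSystem` is closed, which happens automatically when the JVM shuts down cleanly. Because `HDFS_INIT` keeps a single `FileSystem` open for the lifetime of the application, nothing is deleted before shutdown. To delete immediately, call `hdfs.delete(path, true)`, where `true` makes the deletion recursive for directories.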
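The JDK's own `java.io.File.deleteOnExit()` has the same deferred semantics (it deletes at JVM exit rather than at `FileSystem.close()`), which makes the effect easy to reproduce without a cluster. This is a local analogy, not Hadoop code; the class name `DeleteOnExitDemo` is hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

// JDK analogue of the HDFS behaviour in note 2 (not Hadoop code):
// deleteOnExit() only registers the file; Files.delete() removes it at once.
public class DeleteOnExitDemo {

    // Returns {existsAfterDeleteOnExit, existsAfterDelete}
    public static boolean[] run() throws IOException {
        Path deferred = Files.createTempFile("deferred", ".txt");
        deferred.toFile().deleteOnExit();             // only scheduled for JVM exit
        boolean existsAfterDeleteOnExit = Files.exists(deferred);

        Path immediate = Files.createTempFile("immediate", ".txt");
        Files.delete(immediate);                      // removed right away
        boolean existsAfterDelete = Files.exists(immediate);

        return new boolean[] {existsAfterDeleteOnExit, existsAfterDelete};
    }

    public static void main(String[] args) throws IOException {
        boolean[] r = run();
        System.out.println("after deleteOnExit, exists = " + r[0]); // true
        System.out.println("after Files.delete, exists = " + r[1]);  // false
    }
}
```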