Article link: 日撸 Java 三百行(总述) (Java 300 Lines a Day: Overview), minfanphd's blog on CSDN

51.1 KNN (K-Nearest Neighbors): K is the number of neighbors

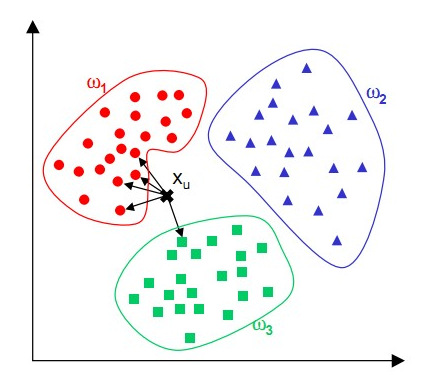

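(1) As the saying goes, birds of a feather flock together: the more similar two things are, the more likely they belong to the same class. In the figure, to decide which class Xu belongs to, take the K nearest neighbors of Xu (measured by distance). Whichever class occurs most often among those K points is the class assigned to Xu.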

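The distance between two points x = (x_1, ..., x_n) and y = (y_1, ..., y_n) can be computed as follows:

Manhattan distance: $d(x, y) = \sum_{i=1}^{n} |x_i - y_i|$

Euclidean distance (in multi-dimensional space): $d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$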
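The two distances can also be written out directly in code. The following is a minimal sketch, not the code of the original series: the class name `DistanceSketch` and the sample points are made up for illustration, and both points are assumed to have the same number of coordinates.

```java
// Hypothetical helper class for illustration only.
final class DistanceSketch {
    /** Manhattan distance: the sum of absolute coordinate differences. */
    static double manhattan(double[] x, double[] y) {
        double sum = 0;
        for (int i = 0; i < x.length; i++) {
            sum += Math.abs(x[i] - y[i]);
        }
        return sum;
    }

    /** Euclidean distance: the square root of the sum of squared differences. */
    static double euclidean(double[] x, double[] y) {
        double sum = 0;
        for (int i = 0; i < x.length; i++) {
            double diff = x[i] - y[i];
            sum += diff * diff;
        }
        return Math.sqrt(sum);
    }

    public static void main(String[] args) {
        double[] a = {0, 0};
        double[] b = {3, 4};
        System.out.println(manhattan(a, b)); // prints 7.0
        System.out.println(euclidean(a, b)); // prints 5.0
    }
}
```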

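The simplest, brute-force form of KNN computes the distance from the query point to every other point, stores and sorts these distances, and then checks which class is most common among the first K.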
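The brute-force procedure just described (compute all distances, sort, vote among the first K) might look like the sketch below. The class name `BruteForceKnnSketch`, the method `classify`, and the toy data are assumptions made for illustration. The sketch also assumes `k` is no larger than the number of labelled points and that labels are integers in the range `0..numClasses-1`.

```java
import java.util.Arrays;
import java.util.Comparator;

// Hypothetical illustration class; names and toy data are made up.
final class BruteForceKnnSketch {
    static int classify(double[][] points, int[] labels, int numClasses, double[] query, int k) {
        // Step 1: compute the distance from the query to every labelled point.
        double[] distances = new double[points.length];
        Integer[] order = new Integer[points.length];
        for (int i = 0; i < points.length; i++) {
            double sum = 0;
            for (int d = 0; d < query.length; d++) {
                double diff = points[i][d] - query[d];
                sum += diff * diff;
            }
            // Squared Euclidean distance; it sorts the same way as the true distance.
            distances[i] = sum;
            order[i] = i;
        }

        // Step 2: sort the point indices by their distance to the query.
        Arrays.sort(order, Comparator.comparingDouble(i -> distances[i]));

        // Step 3: count the class votes among the first k indices.
        int[] votes = new int[numClasses];
        for (int i = 0; i < k; i++) {
            votes[labels[order[i]]]++;
        }

        // Step 4: return the class with the most votes (lowest class index wins ties).
        int best = 0;
        for (int c = 1; c < numClasses; c++) {
            if (votes[c] > votes[best]) {
                best = c;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        double[][] points = {{1, 1}, {1, 2}, {2, 1}, {6, 6}, {7, 6}, {6, 7}};
        int[] labels = {0, 0, 0, 1, 1, 1};
        System.out.println(classify(points, labels, 2, new double[] {2, 2}, 3)); // prints 0
        System.out.println(classify(points, labels, 2, new double[] {6, 5}, 3)); // prints 1
    }
}
```

Sorting everything costs O(n log n) per query, which is fine for a dataset of 150 records but is the first thing to optimize on larger data.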

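(2) Applications

For example, in e-commerce, the items a shopper has chosen can be used to recommend other products they may be interested in. Some websites also use the same idea to show "similar users".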

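51.2 Code:

A handy site for downloading jar packages: https://mvnrepository.com/

1. Reading the ARFF file

The ARFF file contains three iris species (Iris-setosa, Iris-versicolor, and Iris-virginica), with 50 records per species. Each record has 4 features: sepal length, sepal width, petal length, and petal width.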

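2. Steps

(1) Parse the file contents to obtain the dataset.

(2) Split the dataset into a training set and a testing set. The data indices are shuffled before splitting so that both sets are representative samples.

(3) Predict on the testing set: for each test instance, take its k neighbors, that is, the k training points closest to it (the distance used is the Euclidean distance).

(4) Look up the class of each of the K neighbors and take the most common class as the prediction.

(5) Measure the accuracy.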
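Step (2) can be sketched on its own. The sketch below swaps in a Fisher-Yates shuffle, a common way to obtain a uniformly random permutation, rather than the random pair swaps used in the listing that follows. The class name `SplitSketch`, its method names, and the fixed seed are assumptions for illustration.

```java
import java.util.Arrays;
import java.util.Random;

// Hypothetical illustration class; not part of the original listing.
final class SplitSketch {
    /** Returns the indices 0..length-1 in random order (Fisher-Yates shuffle). */
    static int[] shuffledIndices(int length, Random random) {
        int[] indices = new int[length];
        for (int i = 0; i < length; i++) {
            indices[i] = i;
        }
        for (int i = length - 1; i > 0; i--) {
            int j = random.nextInt(i + 1); // uniform in [0, i]
            int temp = indices[i];
            indices[i] = indices[j];
            indices[j] = temp;
        }
        return indices;
    }

    /** Returns {trainingIndices, testingIndices} for a dataset of the given size. */
    static int[][] split(int size, double trainingFraction, Random random) {
        int[] indices = shuffledIndices(size, random);
        int trainingSize = (int) (size * trainingFraction);
        int[] training = Arrays.copyOfRange(indices, 0, trainingSize);
        int[] testing = Arrays.copyOfRange(indices, trainingSize, size);
        return new int[][] {training, testing};
    }

    public static void main(String[] args) {
        // The seed is fixed only to make the run repeatable.
        int[][] parts = split(150, 0.7, new Random(42));
        System.out.println(parts[0].length + " " + parts[1].length); // prints 105 45
    }
}
```

With 150 records and a training fraction of 0.7, this yields 105 training indices and 45 testing indices, which matches the 45 predictions in the run output later in this post.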

package machinelearning.knn;

import weka.core.Instances;

import java.io.FileReader;
import java.util.Arrays;
import java.util.Random;

/**
 * A k-nearest-neighbor (KNN) classifier, run here on the iris dataset.
 *
 * @author: fulisha
 * @date: 2022/3/22 9:10
 */
public class KnnClassification {
    // Manhattan distance.
    public static final int MANHATTAN = 0;

    // Euclidean distance.
    public static final int EUCLIDEAN = 1;

    // The distance measure in use.
    public static final int distanceMeasure = EUCLIDEAN;

    // Random number generator.
    public static final Random random = new Random();

    // The number of neighbors.
    int numNeighbors = 7;

    // The whole dataset.
    Instances dataset;

    // The training set, represented by indices into the dataset.
    int[] trainingSet;

    // The testing set, represented by indices into the dataset.
    int[] testingSet;

    // The predictions for the testing set.
    int[] predictions;

    public KnnClassification(String paraFilename) {
        // try-with-resources closes the reader even if parsing fails.
        try (FileReader fileReader = new FileReader(paraFilename)) {
            dataset = new Instances(fileReader);
            // The last attribute is the decision class.
            dataset.setClassIndex(dataset.numAttributes() - 1);
        } catch (Exception ee) {
            System.out.println("Error occurred while trying to read '" + paraFilename
                    + "' in KnnClassification constructor.\r\n" + ee);
            // A non-zero exit status signals the failure to the caller.
            System.exit(1);
        }
    }

    /**
     * Get random indices for shuffling the data.
     *
     * @param paraLength The length of the sequence.
     * @return An array of indices, e.g., {4, 3, 1, 5, 0, 2} with length 6.
     */
    public static int[] getRandomIndices(int paraLength) {
        int[] resultIndices = new int[paraLength];

        // Step 1. Initialize.
        for (int i = 0; i < paraLength; i++) {
            resultIndices[i] = i;
        }

        // Step 2. Randomly swap.
        int tempFirst, tempSecond, tempValue;
        for (int i = 0; i < paraLength; i++) {
            // Generate two random indices.
            tempFirst = random.nextInt(paraLength);
            tempSecond = random.nextInt(paraLength);

            // Swap.
            tempValue = resultIndices[tempFirst];
            resultIndices[tempFirst] = resultIndices[tempSecond];
            resultIndices[tempSecond] = tempValue;
        }

        return resultIndices;
    }

    /**
     * Split the data into training and testing parts.
     *
     * @param paraTrainingFraction The fraction of the data used for training.
     */
    public void splitTrainingTesting(double paraTrainingFraction) {
        int tempSize = dataset.numInstances();
        int[] tempIndices = getRandomIndices(tempSize);
        int tempTrainingSize = (int) (tempSize * paraTrainingFraction);

        trainingSet = new int[tempTrainingSize];
        testingSet = new int[tempSize - tempTrainingSize];

        for (int i = 0; i < tempTrainingSize; i++) {
            trainingSet[i] = tempIndices[i];
        }

        for (int i = 0; i < tempSize - tempTrainingSize; i++) {
            testingSet[i] = tempIndices[tempTrainingSize + i];
        }
    }

    /**
     * Predict for the whole testing set. The results are stored in predictions.
     */
    public void predict() {
        predictions = new int[testingSet.length];
        for (int i = 0; i < predictions.length; i++) {
            predictions[i] = predict(testingSet[i]);
        }
    }

    /**
     * Predict for the given instance.
     *
     * @param paraIndex The index of the instance in the dataset.
     * @return The predicted class label.
     */
    public int predict(int paraIndex) {
        int[] tempNeighbors = computeNearests(paraIndex);
        int resultPrediction = simpleVoting(tempNeighbors);

        return resultPrediction;
    }

    /**
     * The distance between two instances.
     *
     * @param paraI The index of the first instance.
     * @param paraJ The index of the second instance.
     * @return The distance under the configured measure.
     */
    public double distance(int paraI, int paraJ) {
        double resultDistance = 0;
        double tempDifference;
        switch (distanceMeasure) {
            // Manhattan distance.
            case MANHATTAN:
                for (int i = 0; i < dataset.numAttributes() - 1; i++) {
                    tempDifference = dataset.instance(paraI).value(i) - dataset.instance(paraJ).value(i);
                    if (tempDifference < 0) {
                        resultDistance -= tempDifference;
                    } else {
                        resultDistance += tempDifference;
                    }
                }
                break;
            // Euclidean distance. The square root is omitted because it does not
            // change the ordering of the neighbors.
            case EUCLIDEAN:
                for (int i = 0; i < dataset.numAttributes() - 1; i++) {
                    tempDifference = dataset.instance(paraI).value(i) - dataset.instance(paraJ).value(i);
                    resultDistance += tempDifference * tempDifference;
                }
                break;
            default:
                System.out.println("Unsupported distance measure: " + distanceMeasure);
        }

        return resultDistance;
    }

    /**
     * Get the accuracy of the classifier.
     *
     * @return The fraction of testing instances that were predicted correctly.
     */
    public double getAccuracy() {
        double tempCorrect = 0;
        for (int i = 0; i < predictions.length; i++) {
            if (predictions[i] == dataset.instance(testingSet[i]).classValue()) {
                tempCorrect++;
            }
        }

        return tempCorrect / testingSet.length;
    }

    /**
     * Compute the k nearest neighbors. One neighbor is selected in each scan
     * of the training set; in fact, a single scan would suffice.
     *
     * @param paraCurrent current instance. We are comparing it with all others.
     * @return the indices of the nearest instances
     */
    public int[] computeNearests(int paraCurrent) {
        int[] resultNearests = new int[numNeighbors];
        boolean[] tempSelected = new boolean[trainingSet.length];
        double tempDistance;
        double tempMinimalDistance;
        int tempMinimalIndex = 0;

        // Each pass picks the closest training instance not yet selected.
        for (int i = 0; i < numNeighbors; i++) {
            tempMinimalDistance = Double.MAX_VALUE;
            for (int j = 0; j < trainingSet.length; j++) {
                if (tempSelected[j]) {
                    continue;
                }
                tempDistance = distance(paraCurrent, trainingSet[j]);
                if (tempDistance < tempMinimalDistance) {
                    tempMinimalDistance = tempDistance;
                    tempMinimalIndex = j;
                }
            }
            resultNearests[i] = trainingSet[tempMinimalIndex];
            tempSelected[tempMinimalIndex] = true;
        }

        System.out.println("The nearest of " + paraCurrent + " are: " + Arrays.toString(resultNearests));
        return resultNearests;
    }

    /**
     * Vote using the neighboring instances.
     *
     * @param paraNeighbors The indices of the neighbors.
     * @return The predicted label.
     */
    public int simpleVoting(int[] paraNeighbors) {
        // Count one vote per neighbor for its class.
        int[] tempVotes = new int[dataset.numClasses()];
        for (int i = 0; i < paraNeighbors.length; i++) {
            tempVotes[(int) dataset.instance(paraNeighbors[i]).classValue()]++;
        }

        // Pick the class with the most votes; the lowest class index wins ties.
        int tempMaximalVotingIndex = 0;
        int tempMaximalVoting = 0;
        for (int i = 0; i < dataset.numClasses(); i++) {
            if (tempVotes[i] > tempMaximalVoting) {
                tempMaximalVoting = tempVotes[i];
                tempMaximalVotingIndex = i;
            }
        }

        return tempMaximalVotingIndex;
    }

    public static void main(String[] args) {
        KnnClassification tempClassifier = new KnnClassification("D:/sampledata/iris.arff");
        tempClassifier.splitTrainingTesting(0.7);
        tempClassifier.predict();
        System.out.println("The accuracy of the classifier is: " + tempClassifier.getAccuracy());
    }
}

Output of a run from IntelliJ IDEA (JDK 1.8.0_271, with weka-dev-3.9.3.jar on the classpath):

The nearest of 117 are: [131, 105, 109, 135, 125, 107, 118]

The nearest of 31 are: [20, 28, 36, 10, 39, 48, 23]

The nearest of 87 are: [68, 72, 62, 54, 74, 71, 58]

The nearest of 32 are: [46, 33, 19, 48, 10, 16, 5]

The nearest of 101 are: [149, 127, 138, 126, 123, 70, 111]

The nearest of 82 are: [92, 67, 71, 89, 96, 64, 80]

The nearest of 97 are: [74, 71, 91, 78, 61, 63, 75]

The nearest of 132 are: [103, 111, 112, 116, 147, 115, 137]

The nearest of 140 are: [144, 120, 112, 139, 115, 141, 102]

The nearest of 114 are: [149, 127, 138, 115, 126, 70, 148]

The nearest of 37 are: [34, 9, 1, 30, 12, 49, 29]

The nearest of 17 are: [40, 4, 39, 28, 7, 49, 26]

The nearest of 50 are: [52, 65, 76, 58, 75, 77, 51]

The nearest of 100 are: [144, 148, 120, 115, 112, 137, 103]

The nearest of 25 are: [34, 9, 1, 30, 45, 12, 29]

The nearest of 41 are: [8, 38, 45, 13, 12, 1, 3]

The nearest of 121 are: [149, 138, 70, 127, 126, 66, 84]

The nearest of 42 are: [38, 47, 3, 2, 8, 13, 29]

The nearest of 27 are: [28, 39, 48, 7, 4, 10, 40]

The nearest of 124 are: [120, 112, 144, 139, 137, 116, 102]

The nearest of 6 are: [47, 2, 11, 29, 3, 30, 40]

The nearest of 128 are: [103, 111, 116, 137, 112, 147, 115]

The nearest of 146 are: [123, 111, 126, 72, 133, 127, 119]

The nearest of 21 are: [19, 46, 4, 48, 40, 43, 26]

The nearest of 59 are: [89, 53, 80, 64, 96, 90, 92]

The nearest of 95 are: [96, 61, 55, 67, 66, 92, 90]

The nearest of 86 are: [52, 65, 58, 75, 76, 77, 51]

The nearest of 94 are: [96, 90, 89, 92, 55, 67, 53]

The nearest of 69 are: [80, 89, 92, 53, 67, 79, 62]

The nearest of 104 are: [112, 120, 144, 116, 103, 137, 115]

The nearest of 134 are: [103, 133, 111, 116, 137, 119, 72]

The nearest of 142 are: [149, 127, 138, 126, 123, 70, 111]

The nearest of 83 are: [133, 149, 123, 127, 72, 126, 138]

The nearest of 0 are: [4, 39, 28, 40, 7, 49, 48]

The nearest of 88 are: [96, 61, 66, 55, 92, 67, 84]

The nearest of 143 are: [120, 144, 102, 112, 139, 109, 125]

The nearest of 73 are: [63, 91, 78, 55, 72, 54, 74]

The nearest of 56 are: [51, 85, 91, 127, 70, 138, 63]

The nearest of 22 are: [2, 40, 47, 4, 35, 49, 11]

The nearest of 81 are: [80, 79, 89, 53, 92, 67, 64]

The nearest of 99 are: [96, 92, 67, 61, 89, 55, 71]

The nearest of 136 are: [148, 115, 144, 120, 137, 110, 112]

The nearest of 145 are: [141, 147, 139, 112, 115, 110, 120]

The nearest of 113 are: [149, 138, 126, 119, 123, 127, 70]

The nearest of 122 are: [105, 118, 107, 130, 135, 125, 102]

The accuracy of the classifier is: 0.9777777777777777

Process finished with exit code 0
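The comment on `computeNearests` notes that a single scan would be enough, whereas the listing rescans the training set once per neighbor. One way to do this in a single pass is to keep the current k best candidates in a bounded max-heap. The sketch below is an assumption about how that could look, not the series' implementation: `SingleScanSketch` and `nearest` are made-up names, and the distances are assumed to be precomputed into an array.

```java
import java.util.PriorityQueue;

// Hypothetical illustration class; not part of the original listing.
final class SingleScanSketch {
    /** Returns the indices of the k smallest distances, nearest first. */
    static int[] nearest(double[] distances, int k) {
        // Max-heap ordered by distance: the head is the farthest current candidate.
        PriorityQueue<Integer> heap =
                new PriorityQueue<>((a, b) -> Double.compare(distances[b], distances[a]));

        for (int i = 0; i < distances.length; i++) {
            if (heap.size() < k) {
                heap.add(i);
            } else if (distances[i] < distances[heap.peek()]) {
                // Closer than the farthest candidate: replace it.
                heap.poll();
                heap.add(i);
            }
        }

        // The heap releases the farthest first, so fill the result from the back.
        int[] result = new int[heap.size()];
        for (int i = result.length - 1; i >= 0; i--) {
            result[i] = heap.poll();
        }
        return result;
    }

    public static void main(String[] args) {
        double[] distances = {5.0, 1.0, 4.0, 2.0, 3.0};
        System.out.println(java.util.Arrays.toString(nearest(distances, 3))); // prints [1, 3, 4]
    }
}
```

This costs O(n log k) instead of the O(n k) of repeated scans. When several distances are equal, the neighbors chosen may differ from those picked by the repeated-scan version.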

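Summary:

Today was my first contact with machine learning, and reading through this KNN classifier was confusing at first. I now understand the basic idea behind KNN, but there is still some distance between understanding it and implementing it myself.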