CrawlSpider

创建CrawlSpider 的爬虫文件

命令:

scrapy genspider -t crawl 爬虫文件名 域名

Rule

功能:Rule用来定义CrawlSpider的爬取规则

参数:

link_extractor: Link Extractor对象,它定义如何从每个已爬网页面中提取链接。

callback :回调函数 处理link_extractor形成的response

cb_kwargs : cb:callback 回调函数的参数,是一个包含要传递给回调函数的关键字参数的dict

follow :它指定是否应该从使用此规则提取的每个响应中跟踪链接。

?????两个值:True /False;follow=True link_extractor形成的response 会交给rule;False 则不会;

process_links : 用于过滤链接的回调函数 , 处理link_extractor提取到的链接

process_request : 用于过滤请求的回调函数

errback:处理异常的函数

LinkExractor

LinkExractor也是scrapy框架定义的一个类,它唯一的目的是从web页面中提取最终将被跟踪的额连接。

我们也可定义我们自己的链接提取器,只需要提供一个名为extract_links的方法,它接收Response对象并返回scrapy.link.Link对象列表。

参数:

allow(允许):正则表达式或其列表匹配url,若为空则匹配所有url

deny(不允许):正则表达式或其列表排除url,若为空则不排除url

allow_domains(允许的域名):str或其列表

deny_domains(不允许的域名):str或其列表

restrict_xpaths(通过xpath 限制匹配区域):xpath表达式 或列表

restrict_css(通过css 限制匹配区域):css表达式

restrict_text(通过text 限制匹配区域):正则表达式

tags=(‘a’, ‘area’):允许的标签

attrs=(‘href’,):允许的属性

canonicalize:规范化每个提取的url

unique(唯一):将匹配到的重复链接过滤

process_value:接收 从标签提取的每个值 函数

deny_extensions:不允许拓展,提取链接的时候,忽略一些扩展名.jpg .xxx

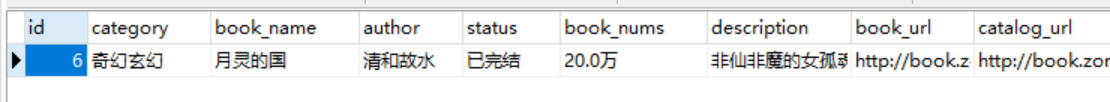

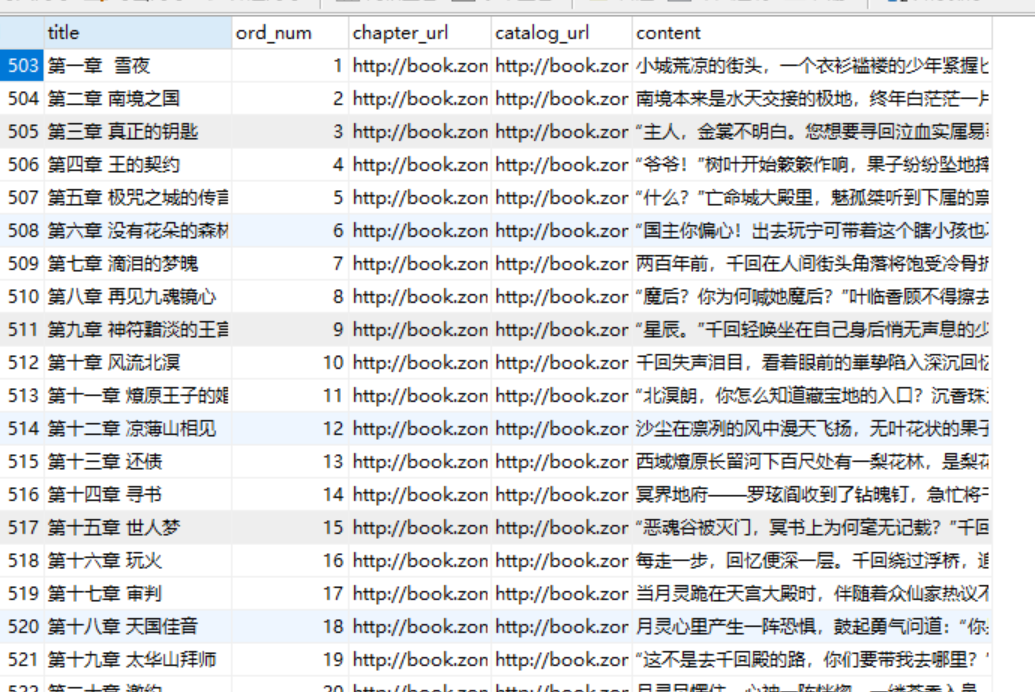

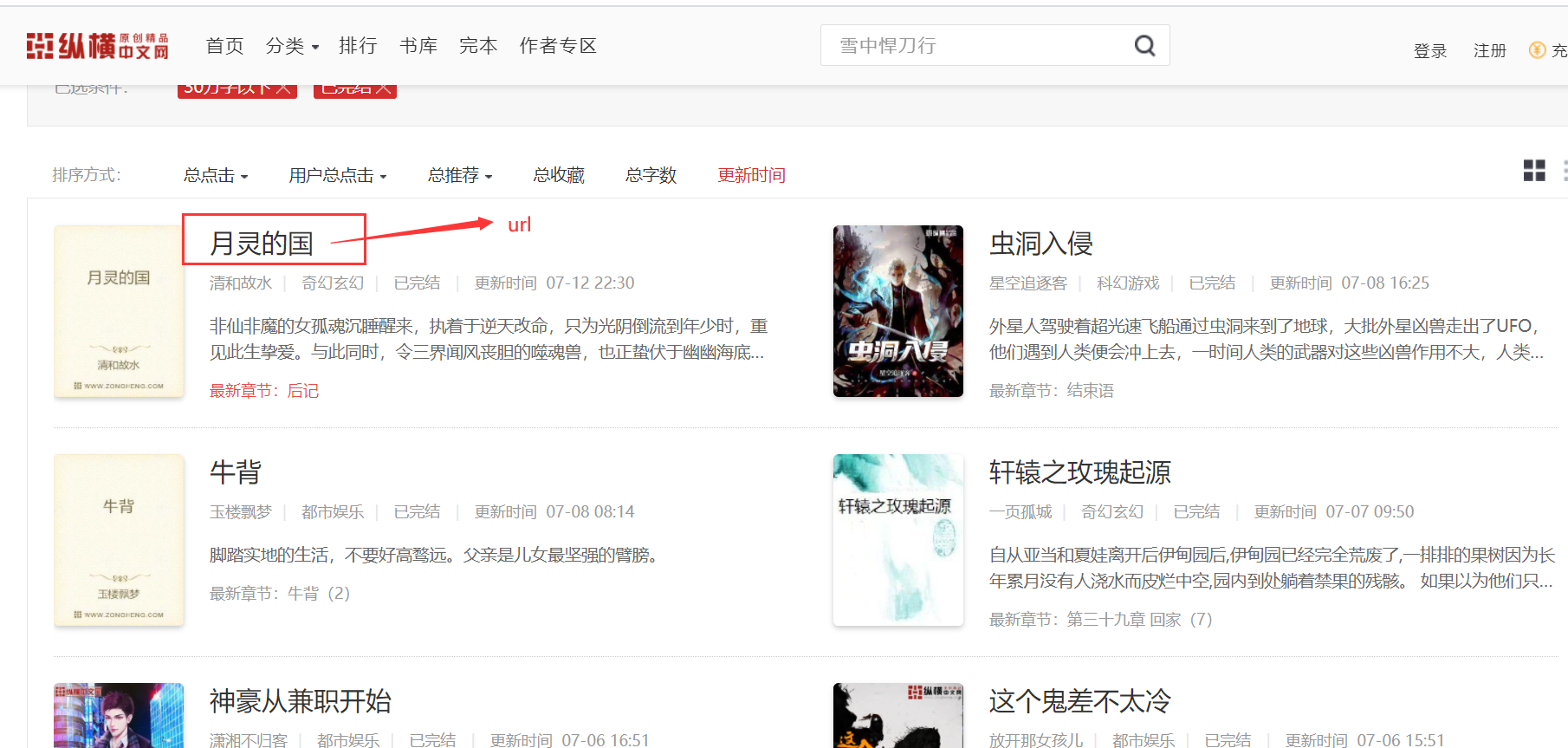

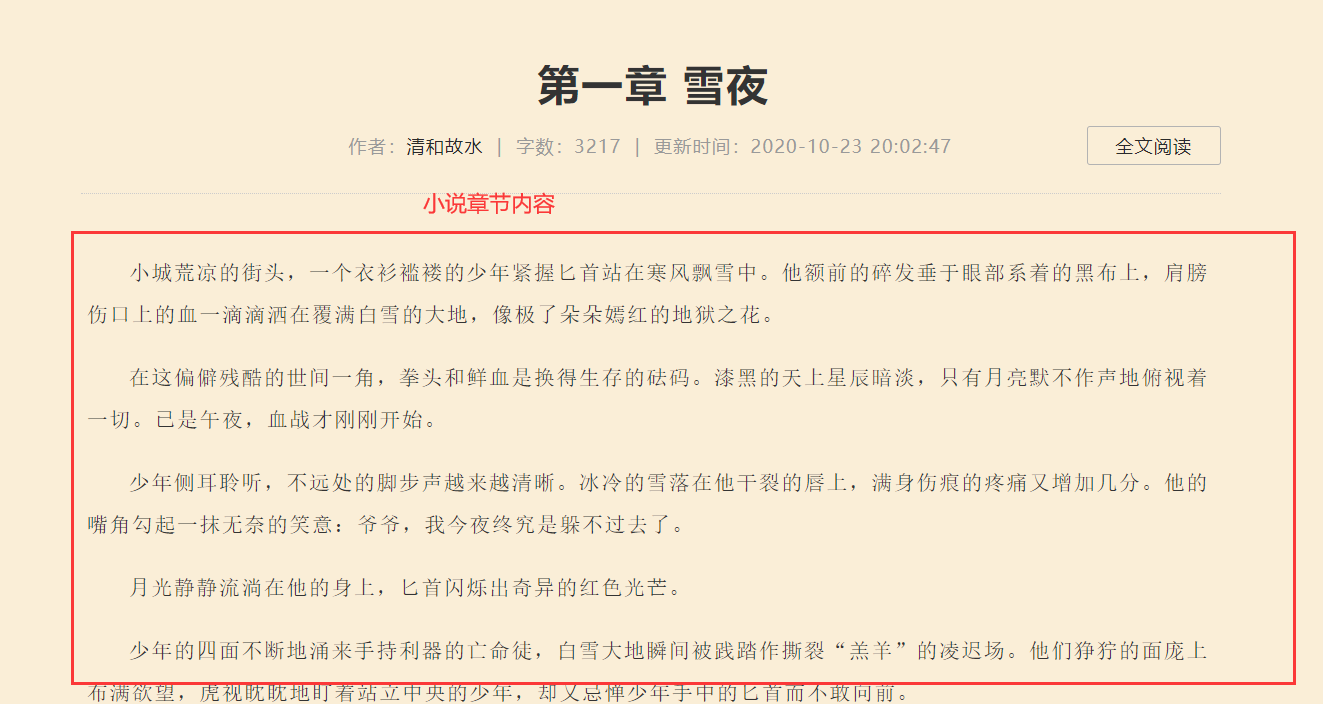

案例:爬取纵横小说

需求分析

需爬取内容:小说书名、作者、是否完结、字数、简介、各个章节及其内容

页面结构

一级页面: 各个小说的url

二级页面: 小说书名、作者、是否完结、字数、简介、章节目录url

三级页面: 各个章节名称

四级页面: 小说各个章节内容

需求字段

小说信息

章节信息

代码实现

spider文件

根据目标数据——要存储的数据,在rules中定义Rule规则,按需配置callback函数,解析response获得想要的数据。

- parse_book函数获取小说信息

- parse_catalog函数获取章节信息

- parse_chapter函数获取章节内容

#(爬虫文件名:zh)

import scrapy

from scrapy.linkextractors import LinkExtractor

from scrapy.spiders import CrawlSpider, Rule

from ..items import BookItem,ChapterItem,CatalogItem

class ZhSpider(CrawlSpider):

name = 'zh'

allowed_domains = ['book.zongheng.com']

start_urls = ['http://book.zongheng.com/store/c0/c0/b0/u0/p1/v9/s1/t1/u0/i1/ALL.html'] #起始的url 一级界面的url

#定义爬取规则 1.提取url(LinkExtractor对象) 2.形成请求 3.响应的处理规则

rules = (

Rule(LinkExtractor(allow=r'http://book.zongheng.com/book/\d+.html',restrict_xpaths='//div[@class="bookname"]'), callback='parse_book', follow=True,process_links='get_booklink'), #这里加restrict_xpaths限制匹配区域

Rule(LinkExtractor(allow=r'http://book.zongheng.com/showchapter/\d+.html'), callback='parse_catalog', follow=True),

Rule(LinkExtractor(allow=r'http://book.zongheng.com/chapter/\d+/\d+.html',restrict_xpaths='//ul[@class="chapter-list clearfix"]'), callback='parse_chapter',follow=False,process_links='get_chapterlink'), #这里加restrict_xpaths限制匹配区域

)

def get_booklink(self,links) :#处理 LinkExtractor 提取到的url 每本书的url

for index,link in enumerate(links):

if index==0:

yield link

else:

return

def get_chapterlink(self,links): #处理 LinkExtractor 提取到的url 章节的url

for index,link in enumerate(links):

if index<=20:

yield link

else:

return

def parse_book(self, response):

#类别

category = response.xpath('//div[@class="book-info"]/div[@class="book-label"]/a[2]/text()').extract()[0].strip()

#书名

book_name = response.xpath('//div[@class="book-info"]/div[@class="book-name"]/text()').extract()[0].strip()

#作者

author = response.xpath('//div[@class="au-name"]/a/text()').extract()[0].strip()

#状态

status = response.xpath('//div[@class="book-info"]/div[@class="book-label"]/a[1]/text()').extract()[0].strip()

#字数

book_nums = response.xpath('//div[@class="book-info"]/div[@class="nums"]/span/i/text()').extract()[0].strip()

#描述

description = ' '.join(response.xpath('//div[@class="book-info"]/div[@class="book-dec Jbook-dec hide"]/p/text()').extract())

#书的url

book_url = response.url

#目录的URL

catalog_url =response.xpath('//div[@class="book-info"]//div[@class="fr link-group"]/a/@href').extract()[0].strip()

item = BookItem()

item['category']=category

item['book_name']=book_name

item['author']=author

item['status']=status

item['book_nums']=book_nums

item['description']=description

item['book_url']=book_url

item['catalog_url']=catalog_url

yield item

def parse_catalog(self,response):

a_text = response.xpath('//ul[@class="chapter-list clearfix"]/li/a')

chapter_list = []

catalog_url = response.url

for a in a_text:

title = a.xpath('./text()').extract()[0]

chapter_url = a.xpath('./@href').extract()[0]

chapter_list.append((title,chapter_url,catalog_url)) #章节名 章节url 目录url

item = CatalogItem()

item['chapter_list']=chapter_list

yield item

def parse_chapter(self,response):

content = ''.join(response.xpath('//div[@class="content"]/p/text()').extract())

chapter_url=response.url

item = ChapterItem()

item['content']=content #小说章节的内容

item['chapter_url']=chapter_url #章节的url

yield item

items文件

items文件里的字段,是根据目标数据的需求确定的

import scrapy

class BookItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

category = scrapy.Field()

book_name = scrapy.Field()

author = scrapy.Field()

status =scrapy.Field()

book_nums =scrapy.Field()

description =scrapy.Field()

book_url =scrapy.Field()

catalog_url = scrapy.Field()

class CatalogItem(scrapy.Item):

chapter_list = scrapy.Field()

class ChapterItem(scrapy.Item):

content =scrapy.Field()

chapter_url = scrapy.Field()

pipelines文件

此处是将数据写入数据库

- 先写入小说信息

- 写入章节信息,除了章节内容之外的部分

- 根据章节url,写入章节内容

注意:记得提前创建好数据库和各个表,同时写代码时表的字段千万不要写错!!!

import pymysql

# zhnovel是我的项目文件夹名

from zhnovel.items import BookItem,ChapterItem,CatalogItem

from scrapy.exceptions import DropItem

class ZhnovelPipeline:

#打开数据库

def open_spider(self,spider):

data_config = spider.settings['DATABASE_CONFIG']

#建立连接

self.conn = pymysql.connect(**data_config)

#定义游标

self.cur = self.conn.cursor()

spider.conn = self.conn

spider.cur = self.cur

#数据存储

def process_item(self, item, spider):

if isinstance(item,BookItem):

sql = "select id from novel where book_name=%s and author=%s"

self.cur.execute(sql,(item['book_name'],item['author']))

if not self.cur.fetchone():

sql = "insert into novel(category,book_name,author,status,book_nums,description,book_url,catalog_url) values(%s,%s,%s,%s,%s,%s,%s,%s)"

self.cur.execute(sql,(item['category'],item['book_name'],item['author'],item['status'],item['book_nums'], item['description'],item['book_url'],item['catalog_url']))

self.conn.commit()

return item

elif isinstance(item,CatalogItem):

sql = 'delete from chapter'

self.cur.execute(sql)

sql = 'insert into chapter(title,ord_num,chapter_url,catalog_url) values(%s,%s,%s,%s)'

data_list = []

for index, chapter in enumerate(item['chapter_list']):

ord_num = index+1

title, chapter_url, catalog_url = chapter

data_list.append((title, ord_num, chapter_url, catalog_url))

self.cur.executemany(sql,data_list)

self.conn.commit()

return item

elif isinstance(item,ChapterItem):

sql = "update chapter set content=%s where chapter_url=%s"

self.cur.execute(sql,(item['content'],item['chapter_url']))

self.conn.commit()

return item

else:

return DropItem

#关闭数据库

def close_spider(self,spider):

self.cur.close()

self.conn.close()

settings文件

- 设置robots协议,添加全局请求头,开启管道

- 开启下载延迟(可以不开,最好开启)

- 配置数据库

ROBOTSTXT_OBEY = False #robots协议

DOWNLOAD_DELAY = 1 #下载延迟1s

DEFAULT_REQUEST_HEADERS = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.114 Safari/537.36 Edg/91.0.864.54'

}

DATABASE_CONFIG={ #配置数据库

'host':'localhost', #ip为127.0.0.1 或写 localhost

'port':3306, #端口3306

'user':'root', #这里是你登录mysql的用户名

'password':'123456', #这是登录mysql的密码

'db':'zhnovel', #你的数据库

'charset':'utf8', #编码utf8

}

结果: