Series articles

Python Learning 01: Python Basics

Python Libraries: urllib

Python Libraries: BeautifulSoup4

Python Libraries: re (Regular Expressions)

Python Libraries: Excel Storage (xlwt, xlrd)

Python Learning 02: Python Web Crawlers

Python Libraries: Flask Basics

Python Learning 03: Crawler Website Project

III. Hands-on Project

1. Project Overview

Source code:

CSDN: https://download.csdn.net/download/qq_39763246/20255591

Baidu: https://pan.baidu.com/s/1rbeB8qSSV-reki6umd9_3g (extraction code: fxq4)

Gitee: https://gitee.com/coder-zcy/douban_spider

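Tech stack:

The back end uses Flask for routing and template rendering. The front end started from a template I found online, which I then modified with Bootstrap and Layui. Data is stored in both Excel and SQLite.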

Project content:

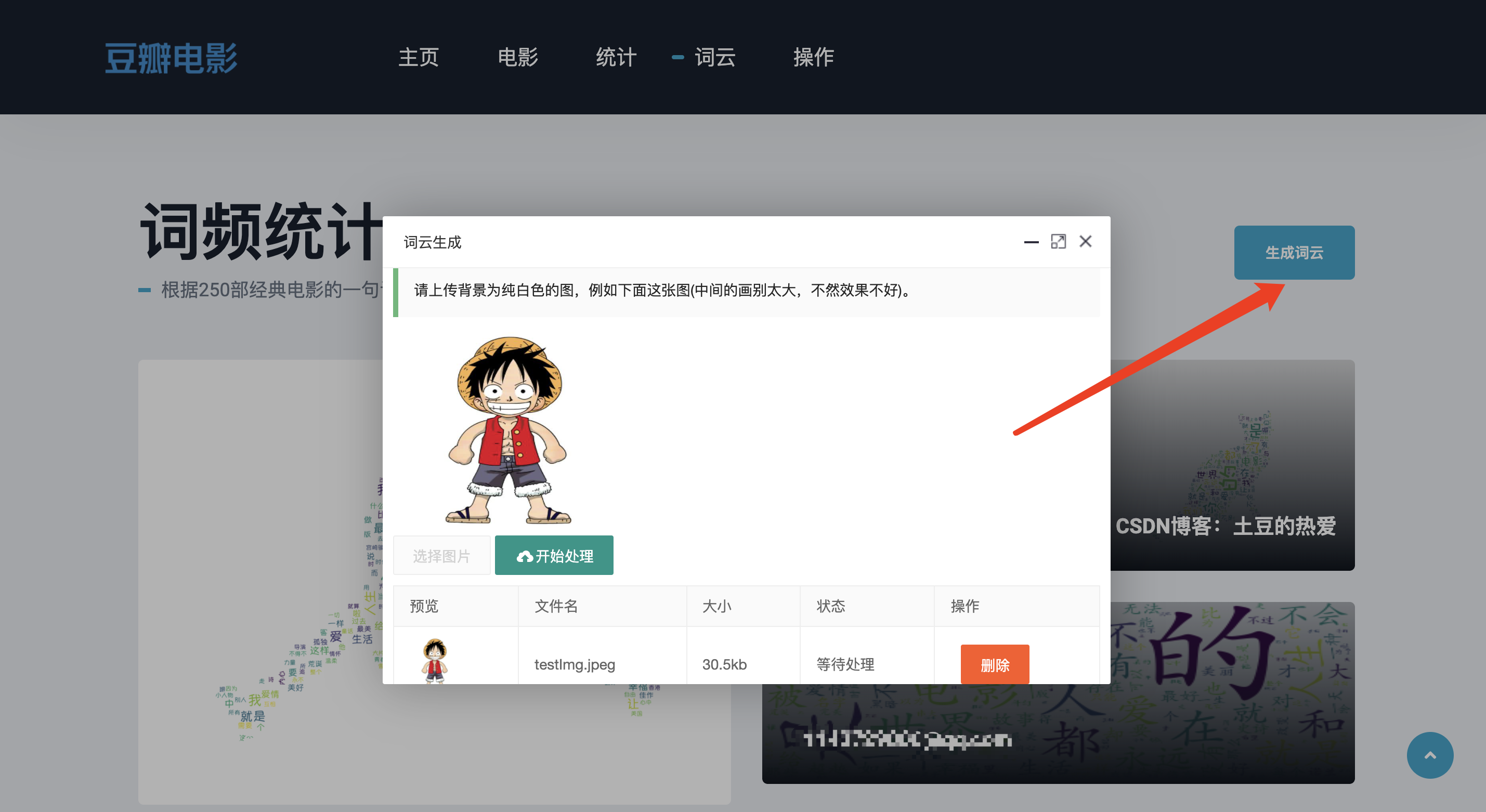

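This project mainly pulls together what the earlier Python articles covered, as practice. It uses Python to crawl the Douban Movie Top250, stores the scraped data in SQLite and Excel, and then presents the data on a website with Layui and ECharts. It also uses WordCloud to generate word-cloud images: a user can upload a picture, and the site uses it as the mask for a word cloud.

The site in action is shown below.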

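2. Project Code

Only the Python part is posted here, because the front end is too large. The code is commented in reasonable detail, so I won't explain much more. For the other techniques used, see my earlier articles.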

Project structure

Crawler: spider.py

    # -*- coding: utf-8 -*-
    # @Time : 2021/7/3 6:33 PM
    # @Author : zcy
    # @File : main.py
    # @Software : PyCharm
    import os
    import re  # regular expressions, used for text matching
    import sqlite3  # SQLite database access
    import ssl
    import urllib.error
    import urllib.request  # fetches a page given its URL

    import xlwt  # Excel output
    from bs4 import BeautifulSoup  # HTML parsing: splits the page so the data is easy to extract

    # Regex notes: . matches any character except a newline, * means zero or more,
    # ? means zero or one, and (.*?) is a lazy (shortest possible) match.
    findLink = re.compile(r'<a href="(.*?)">')  # link to the movie's detail page
    findImg = re.compile(r'<img.*src="(.*?)"', re.S)  # poster image; re.S lets . match newlines too
    findTitle = re.compile(r'<span class="title">(.*?)</span>')  # title (there may be two)
    findOther = re.compile(r'<span class="other">(.*?)</span>')  # alternative titles, separated by /
    findRating = re.compile(r'<span class="rating_num" property="v:average">(.*?)</span>')  # rating
    findJudge = re.compile(r'<span>(\d*)人评价</span>')  # number of ratings
    findInq = re.compile(r'<span class="inq">(.*?)</span>')  # one-line summary
    findBd = re.compile(r'<p class="">(.*?)</p>', re.S)  # director, year, country, genres


    # Returns "success", or "false" if any step raises an exception
    def spiderStart():
        try:
            # Skip HTTPS certificate verification (convenient for a demo, unsafe in production)
            ssl._create_default_https_context = ssl._create_unverified_context
            # Douban Top250 URL (the first page loads with or without the trailing ?start=)
            baseUrl = "https://movie.douban.com/top250?start="
            # 1. Crawl the pages and parse the data
            dataList = getData(baseUrl)
            # 2. Save the data (os.path.join keeps the paths portable across Windows and macOS)
            savePath = os.path.join("static", "file", "豆瓣电影Top250.xlsx")
            saveData(dataList, savePath)  # Excel
            dbPath = os.path.join("static", "file", "movie250.db")
            saveDataToDB(dataList, dbPath)  # SQLite
            return "success"
        except Exception:
            return "false"


    # Fetch the content of the page at the given URL
    def askURL(url):
        # Fake the request headers sent to the Douban server.
        # User-Agent identifies the client as a browser (copied from the request
        # headers shown under F12 > Network).
        head = {
            "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.114 Safari/537.36"
        }
        request = urllib.request.Request(url, headers=head)
        html = ""
        try:
            # Send the request, read the whole HTML source and decode it as UTF-8
            response = urllib.request.urlopen(request)
            html = response.read().decode("utf-8")
        except urllib.error.URLError as e:
            if hasattr(e, "code"):
                print("Status code:", e.code)
            if hasattr(e, "reason"):
                print("Reason:", e.reason)
        return html


    # Crawl every page and return the list of parsed movies
    def getData(baseurl):
        dataList = []
        for i in range(0, 10):  # 10 pages of 25 movies each; range(0, 10) covers 0..9
            url = baseurl + str(i * 25)  # the last page is https://movie.douban.com/top250?start=225
            html = askURL(url)
            soup = BeautifulSoup(html, "html.parser")  # choose the parser
            for item in soup.find_all("div", class_="item"):  # one <div class="item"> per movie
                data = []  # fields of one movie
                item = str(item)  # Tag object -> string, so the regexes can run on it
                link = re.findall(findLink, item)[0]  # two matches are returned; the first is enough
                data.append(link)
                img = re.findall(findImg, item)[0]  # poster image link
                data.append(img)
                titles = re.findall(findTitle, item)
                # Some movies have two titles, some only one; store "" when the second is missing
                if len(titles) == 2:
                    data.append(titles[0])  # Chinese title
                    # Drop the "/" separator and non-breaking spaces but keep single spaces
                    # between words (the original joined with "" and glued the words together)
                    data.append(" ".join(titles[1].replace("/", " ").split()))  # foreign title
                else:
                    data.append(titles[0])  # Chinese title
                    data.append("")  # no foreign title
                other = re.findall(findOther, item)[0]  # alternative titles
                other = other.replace("/", "", 1)  # drop the leading separator
                other = re.sub(r"\xa0|NBSP| ", "", other)
                data.append(other)
                bd = re.findall(findBd, item)[0]  # block with director, year, country, genres
                # Director: the text after "导演: " (4 characters) up to the first ordinary space
                director = re.sub(r"\xa0|\n", "", bd[bd.index("导演: ") + 4:])
                director = director[:director.index(" ")]
                # Remaining info after <br/>: year / country / genres
                info = re.sub(r"\xa0|\n", "", bd[bd.index("<br/>") + 5:])
                info = info.split("/")
                year = info[0].strip()
                country = info[1].strip()
                leibie = info[2].strip()
                data.append(director)  # director
                data.append(country)  # country
                data.append(leibie)  # genres
                data.append(year)  # year
                rating = re.findall(findRating, item)[0]  # rating
                data.append(rating)
                judgeNum = re.findall(findJudge, item)[0]  # number of ratings
                data.append(judgeNum)
                inq = re.findall(findInq, item)  # one-line summary (may be missing)
                if len(inq) != 0:
                    data.append(inq[0].replace("。", ""))  # strip the full stop
                else:
                    data.append("")
                dataList.append(data)
        return dataList


    # Save the data (Excel)
    def saveData(dataList, savePath):
        print("Saving...")
        # Note: xlwt writes the legacy .xls format, whatever extension the file name carries
        book = xlwt.Workbook(encoding="utf-8")
        sheet = book.add_sheet("豆瓣电影Top250", cell_overwrite_ok=True)  # second argument allows overwriting cells
        # Header row: detail link, image link, Chinese title, foreign title, alternative titles,
        # director, country, genres, year, rating, number of ratings, one-line summary
        col = ("详情链接", "图片链接", "中文名", "外国名", "别称", "导演", "国家", "类型", "年份", "评分", "评价人数", "一句话概括")
        for i, name in enumerate(col):
            sheet.write(0, i, name)
        # Movie rows start on the second row; iterate over what was actually crawled
        for i, data in enumerate(dataList):
            for j in range(len(col)):
                sheet.write(i + 1, j, data[j])
        # Delete an existing file before saving
        if os.path.exists(savePath):
            print("Excel file exists, deleting it")
            os.remove(savePath)
        else:
            print("Excel file does not exist, saving directly")
        book.save(savePath)
        print("Saved!")
        return True


    # Initialise the database
    def iniDB(dbPath):
        print("Initialising...")
        connect = sqlite3.connect(dbPath)
        cursor = connect.cursor()
        # Drop the old table
        cursor.execute("drop table if exists movie250")
        connect.commit()
        # Create the table
        sql = '''
            create table if not exists movie250(
                id integer primary key autoincrement,
                info_link text,
                pic_link text,
                c_name varchar,
                e_name varchar,
                other_name varchar,
                director varchar,
                country varchar,
                types varchar,
                years varchar,
                score numeric,
                rated numeric,
                introduction text
            );
        '''
        cursor.execute(sql)
        connect.commit()
        cursor.close()
        connect.close()
        print("Initialised!")


    # Save the data (SQLite)
    def saveDataToDB(dataList, dbPath):
        iniDB(dbPath)
        connect = sqlite3.connect(dbPath)
        cursor = connect.cursor()
        print("Inserting...")
        # dataList holds the crawled movies; each entry is a list of 12 fields.
        # The ? placeholders let sqlite3 do the quoting, so a quote inside a title or
        # summary cannot break the statement. score and rated (indexes 9 and 10) are
        # passed as strings; the numeric column affinity stores them as numbers.
        sql = '''
            insert into movie250(
                id, info_link, pic_link, c_name, e_name, other_name, director,
                country, types, years, score, rated, introduction)
            values(?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)'''
        rows = [(i, *data) for i, data in enumerate(dataList, start=1)]
        cursor.executemany(sql, rows)
        connect.commit()
        cursor.close()
        connect.close()
        print("Inserted!")
        return True
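The comment at the top of spider.py says that `(.*?)` is a lazy match and that `re.S` changes how `.` treats newlines. Here is a minimal sketch of what both mean in practice. The HTML fragments are made up for illustration and are not real Douban markup.

```python
import re

# Hypothetical fragment with two title spans on one line (not real Douban markup)
one_line = '<span class="title">A</span><span class="title">B</span>'

# Greedy: .* runs to the LAST </span> on the line, so both spans merge into one match
greedy_titles = re.findall(r'<span class="title">(.*)</span>', one_line)

# Lazy: .*? stops at the FIRST </span>, so each span is matched separately
lazy_titles = re.findall(r'<span class="title">(.*?)</span>', one_line)

# Hypothetical multi-line paragraph, similar in shape to the block findBd targets
paragraph = '<p class="">\n  line one<br/>\n  line two\n</p>'

# Without re.S, . cannot cross a newline, so nothing matches
without_dotall = re.findall(r'<p class="">(.*?)</p>', paragraph)

# With re.S, . also matches newlines and the whole body is captured
with_dotall = re.findall(r'<p class="">(.*?)</p>', paragraph, re.S)

print(greedy_titles)     # ['A</span><span class="title">B']
print(lazy_titles)       # ['A', 'B']
print(without_dotall)    # []
print(len(with_dotall))  # 1
```

A greedy pattern such as `(.*)` gives the expected result only while each span sits on its own line in the page source, so the lazy form is the safer default.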

Word cloud: word.py

    # -*- coding: utf-8 -*-
    # @Time : 2021/7/10 11:51 PM
    # @Author : 张城阳
    # @File : word.py
    # @Software : PyCharm
    import sqlite3  # SQLite database

    import jieba  # Chinese word segmentation
    import numpy as np  # array operations
    from matplotlib import pyplot as plt  # plotting (renders the image)
    from PIL import Image  # image handling
    from wordcloud import WordCloud, ImageColorGenerator  # word cloud, with mask support


    # Word cloud shaped by an uploaded image; the result overwrites that image file
    def generateImage(image, font, dpi):
        # Collect the text for the word cloud
        connect = sqlite3.connect("static/file/movie250.db")
        cursor = connect.cursor()
        sql = "select introduction from movie250"
        data = cursor.execute(sql)
        text = ""
        for item in data:
            text = text + item[0]
        cursor.close()
        connect.close()
        # Segment the text into words
        cut = jieba.cut(text)
        string = " ".join(cut)
        # Prepare the mask
        imgArray = Image.open(image)  # open the mask image
        img_array = np.array(imgArray)  # convert the image to an array
        wc = WordCloud(
            background_color='white',  # background of the output image
            mask=img_array,  # mask array
            font_path="static/fonts/" + font  # font copied from the system fonts (C:\Windows\Fonts; Font Book on macOS)
        )
        wc.generate_from_text(string)
        # image_colors = ImageColorGenerator(img_array)
        # wc.recolor(color_func=image_colors)
        # Draw the image
        fig = plt.figure(1)
        plt.imshow(wc)  # render the word cloud
        plt.axis('off')  # hide the axes
        # plt.show()  # display the generated image
        plt.savefig(image, dpi=dpi)
        plt.close(fig)  # release the figure so repeated calls do not draw on top of each other
        return "success"


    # Rectangular word cloud of the given size, saved to static/images/word-rectangle.png
    def generateRec(height, width):
        # Collect the text for the word cloud
        connect = sqlite3.connect("static/file/movie250.db")
        cursor = connect.cursor()
        sql = "select introduction from movie250"
        data = cursor.execute(sql)
        text = ""
        for item in data:
            text = text + item[0]
        cursor.close()
        connect.close()
        # Segment the text into words
        cut = jieba.cut(text)
        string = " ".join(cut)
        # Build the word cloud with a transparent background
        wc = WordCloud(font_path='static/fonts/Kaiti.ttc', width=width, height=height,
                       mode='RGBA', background_color=None).generate(string)
        # Display the word cloud
        plt.imshow(wc, interpolation='bilinear')
        plt.axis('off')
        plt.show()
        # Save to file
        wc.to_file('static/images/word-rectangle.png')
        return "success"
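Both functions in word.py begin with the same query-and-concatenate step. As a sketch only, that step could live in one shared helper. `load_introductions` is a hypothetical name that is not part of the project, and the demo runs against an in-memory database with made-up rows instead of static/file/movie250.db.

```python
import sqlite3


def load_introductions(connection):
    """Concatenate every non-empty introduction in movie250 into one string."""
    rows = connection.execute("select introduction from movie250")
    # Skip NULL or empty summaries so the concatenation never fails on None
    return "".join(row[0] for row in rows if row[0])


# Demo on an in-memory database with made-up rows (assumption: same table and column names)
demo = sqlite3.connect(":memory:")
demo.execute("create table movie250 (id integer primary key, introduction text)")
demo.executemany(
    "insert into movie250 (introduction) values (?)",
    [("hope sets you free",), ("",), (None,), ("life is like a box of chocolates",)],
)
text = load_introductions(demo)
demo.close()
print(text)
```

Passing in the connection rather than a path keeps the helper easy to exercise without touching the real database file.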

Website: app.py

    import datetime
    import os
    import random
    import sqlite3

    from flask import Flask, render_template, request, send_from_directory, jsonify, url_for
    from werkzeug.utils import secure_filename

    import spider
    import word as wordImage

    app = Flask(__name__)
    # Needed so that Chinese text in JSON responses is not escaped
    # (newer Flask releases, 2.3+, ignore this key; set app.json.ensure_ascii = False there)
    app.config['JSON_AS_ASCII'] = False


    # Home page
    @app.route('/')
    def index():
        connect = sqlite3.connect("static/file/movie250.db")  # connect to the database
        cursor = connect.cursor()  # get a cursor
        sql = "select rated from movie250"
        data = cursor.execute(sql)
        total = 0  # total number of ratings across all movies
        for i in data:
            total += int(i[0])
        cursor.close()
        connect.close()
        return render_template('index.html', total=total)


    # Home page
    @app.route('/index')
    def home():
        return index()


    # Movie page
    @app.route('/movie')
    def movie():
        return render_template('movie.html')


    # Return one page of movies to the front end as JSON
    @app.route('/getMovie', methods=["GET"])
    def getMovie():
        # Defaults of 1 and 10 are an assumption for requests that omit the parameters
        page = request.args.get('page', default=1, type=int)
        limit = request.args.get('limit', default=10, type=int)
        dataList = []  # list of movies
        connect = sqlite3.connect("static/file/movie250.db")
        cursor = connect.cursor()
        sql = "select * from movie250 limit ?, ?"  # offset, row count
        data = cursor.execute(sql, ((page - 1) * limit, limit))  # result set; each row still has to be unpacked
        for item in data:
            jsonDic = {}
            jsonDic['id'] = item[0]
            jsonDic['info_lin'] = item[1]  # key spelling kept as in the original; the front end is not shown here
            jsonDic['pic_link'] = item[2]
            jsonDic['c_name'] = item[3]
            jsonDic['e_name'] = item[4]
            jsonDic['other_name'] = item[5]
            jsonDic['director'] = item[6]
            jsonDic['country'] = item[7]
            jsonDic['types'] = item[8]
            jsonDic['years'] = item[9]
            jsonDic['score'] = item[10]
            jsonDic['rated'] = item[11]
            jsonDic['introduction'] = item[12]
            dataList.append(jsonDic)
        cursor.close()
        connect.close()
        return jsonify(dataList)


    # Statistics page
    @app.route('/statics')
    def score():
        connect = sqlite3.connect("static/file/movie250.db")
        cursor = connect.cursor()
        # Note: when passing strings to the front end, reference them with the tojson
        # filter, e.g. {{ chart4[0]|tojson }}

        # Chart 1, bar chart: number of movies per rating
        chart1 = [[], []]  # first list: ratings; second list: matching movie counts
        sql1 = "select score, count(score) from movie250 group by score"
        data1 = cursor.execute(sql1)
        for item in data1:
            chart1[0].append(item[0])
            chart1[1].append(item[1])

        # Chart 2, pie chart: number of Top250 movies per country
        sql2 = "select country from movie250"
        data2 = cursor.execute(sql2)
        chart2Dic = {}  # key: country; value: number of Top250 movies from that country
        for item in data2:  # each row is a one-element tuple
            countryList = item[0].split(" ")  # some movies list several countries
            for country in countryList:
                # Skip one anomalous record, 大闹天宫, whose country field holds a year
                if "1964" in country:
                    continue
                chart2Dic[country] = chart2Dic.get(country, 0) + 1
        chart2 = []  # the pie chart expects a list of dicts
        for key, value in chart2Dic.items():
            chart2.append({"value": value, "name": key})

        # Chart 3, line chart: number of movies per year
        chart3 = [[], []]  # first list: years; second list: matching movie counts
        sql3 = "select years, count(years) from movie250 group by years"
        data3 = cursor.execute(sql3)
        for item in data3:
            # Skip the anomalous 大闹天宫 record, whose year field holds 中国大陆
            if "中国大陆" in item[0]:
                continue
            chart3[0].append(int(item[0]))
            chart3[1].append(item[1])

        # Chart 4, bar chart: number of movies per genre
        sql4 = "select types from movie250"
        data4 = cursor.execute(sql4)
        chart4Dic = {}  # key: genre; value: number of Top250 movies of that genre
        for item in data4:
            typeList = item[0].split(" ")  # a movie can have several genres
            # Skip the anomalous 大闹天宫 record, whose genre field holds 1978(中国大陆)
            if "1978(中国大陆)" in typeList:
                continue
            for types in typeList:
                chart4Dic[types] = chart4Dic.get(types, 0) + 1
        chart4 = []  # list of {"value": count, "name": genre} dicts
        for key, value in chart4Dic.items():
            chart4.append({"value": value, "name": key})

        cursor.close()
        connect.close()
        return render_template('score.html', chart1=chart1, chart2=chart2, chart3=chart3, chart4=chart4)


    # Word-cloud page
    @app.route('/word')
    def word():
        return render_template('word.html')


    # Run the crawler
    @app.route('/spider')
    def spiderFunction():
        state = spider.spiderStart()
        return jsonify({"state": state})


    # Temporary page
    @app.route('/temp')
    def temp():
        return render_template('temp.html')


    # Handle 404 errors: this handler runs whenever a 404 is raised
    @app.errorhandler(404)
    def handle_404_error(err_msg):
        # err_msg is the NotFound exception; return the page with the 404 status code
        return render_template('error.html'), 404


    # Excel file download
    @app.route('/download_excel')
    def download_excel():
        filename = "豆瓣电影Top250.xlsx"
        dirpath = os.path.join(app.root_path, 'static', 'file')
        return send_from_directory(dirpath, filename, as_attachment=True)


    # DB file download
    @app.route('/download_db')
    def download_db():
        filename = 'movie250.db'
        dirpath = os.path.join(app.root_path, 'static', 'file')
        return send_from_directory(dirpath, filename, as_attachment=True)


    # Image-processing page
    @app.route('/generateImg', methods=['GET', 'POST'])
    def toGenerateImg():
        return render_template('generate_wordcloud.html')


    # Folder for uploaded files; a non-absolute value is relative to the project root
    IMAGE_FOLDER = 'static/file/upload'


    # Timestamp plus four random digits: collisions are unlikely, not impossible
    def gen_rnd_filename():
        return "%s%s" % (
            datetime.datetime.now().strftime('%Y%m%d%H%M%S'),
            str(random.randrange(1000, 10000)))


    # Check the file extension (case-insensitive)
    def allowed_file(filename):
        return '.' in filename and filename.rsplit('.', 1)[1].lower() in {'png', 'jpg', 'jpeg', 'gif', 'bmp'}


    app.config.update(
        SECRET_KEY=os.urandom(24),
        # Upload folder
        UPLOAD_FOLDER=os.path.join(app.root_path, IMAGE_FOLDER),
        # Maximum upload size, currently 16 MB
        MAX_CONTENT_LENGTH=16 * 1024 * 1024
    )


    @app.route('/showimg/<filename>')
    def showimg_view(filename):
        return send_from_directory(app.config['UPLOAD_FOLDER'], filename)


    @app.route('/upload/', methods=['POST', 'OPTIONS'])
    def upload_view():
        res = dict(code=-1, msg=None)
        f = request.files.get('file')
        if f and allowed_file(f.filename):
            filename = secure_filename(gen_rnd_filename() + "." + f.filename.split('.')[-1])  # random name
            # Create the upload folder if needed
            if not os.path.exists(app.config['UPLOAD_FOLDER']):
                os.makedirs(app.config['UPLOAD_FOLDER'])
            # Save the image
            savedPath = os.path.join(app.config['UPLOAD_FOLDER'], filename)
            f.save(savedPath)
            # Process the image: generate the word cloud in place
            wordImage.generateImage(savedPath, '娃娃体.otf', 800)
            imgUrl = url_for('showimg_view', filename=filename, _external=True)
            res.update(code=0, data=dict(src=imgUrl))
        else:
            res.update(msg="Unsuccessfully obtained file or format is not allowed")
        return jsonify(res)


    if __name__ == '__main__':
        app.run()
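The country and genre charts in app.py both split a space-separated field, count the tokens, and emit the list-of-dicts shape the pie chart expects. Here is a minimal sketch of that step using `collections.Counter`. `count_tokens`, its `skip` parameter, and the sample rows are made up for illustration and are not part of the project.

```python
from collections import Counter


def count_tokens(values, skip=None):
    """Count space-separated tokens and return [{"value": n, "name": token}, ...]."""
    counter = Counter()
    for value in values:
        for token in value.split():
            if skip is not None and skip(token):
                continue  # drop anomalous tokens, e.g. a year stored in the country field
            counter[token] += 1
    # Counter keeps insertion order, so the output follows first appearance
    return [{"value": count, "name": name} for name, count in counter.items()]


# Made-up rows standing in for the country column
rows = ["USA", "UK France", "USA UK", "1964(China)"]
chart = count_tokens(rows, skip=lambda token: "1964" in token)
print(chart)
# [{'value': 2, 'name': 'USA'}, {'value': 2, 'name': 'UK'}, {'value': 1, 'name': 'France'}]
```

Expressing the anomaly filter as a predicate means the same helper could serve both the country and the genre chart, each with its own rule.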