Scraping High-Resolution Honor of Kings (王者荣耀) Hero Images with a Python Crawler

Result:

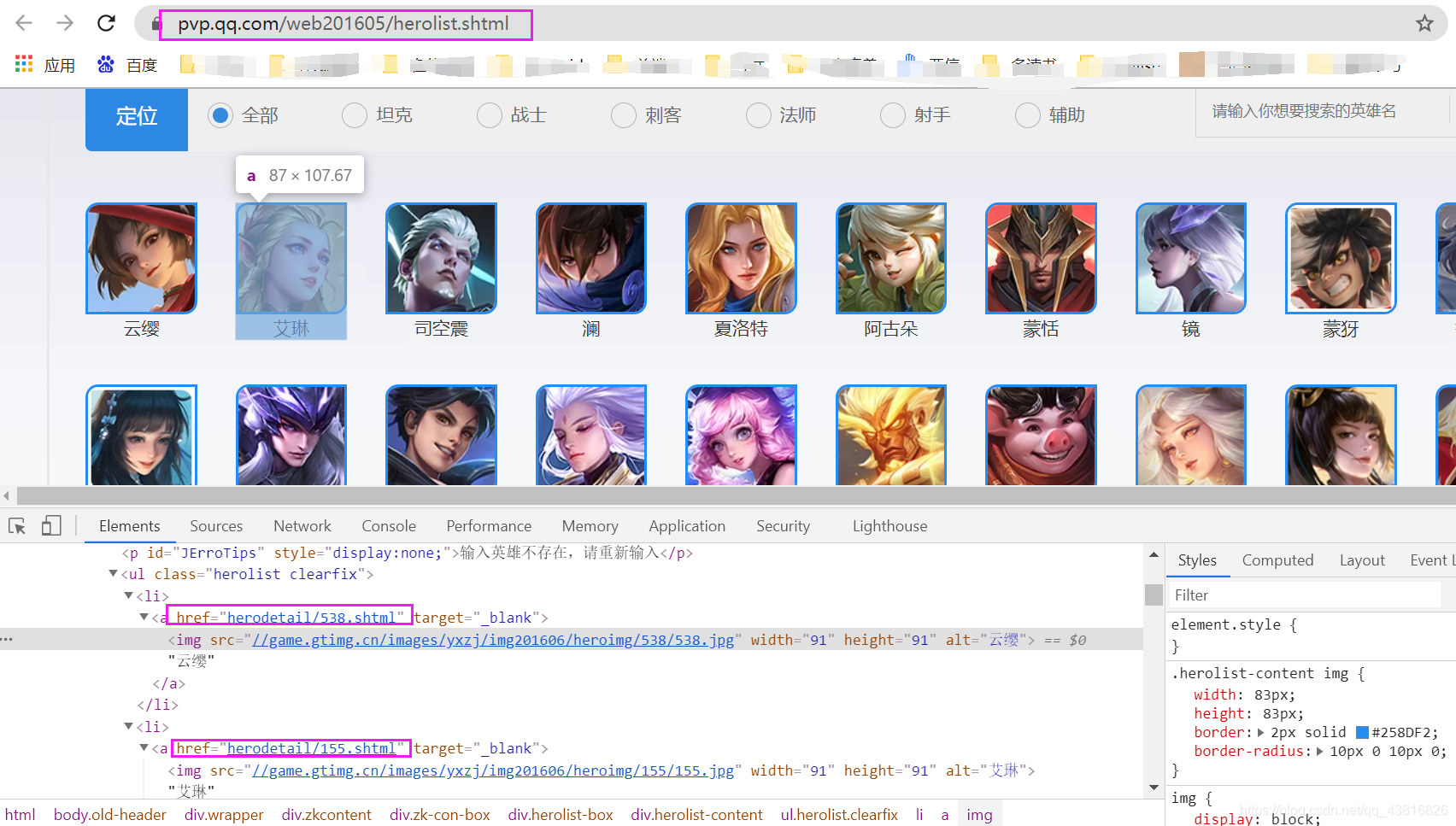

Page analysis

On the first page (the hero list), get the address of the page that each hero's avatar links to, i.e. the href attribute of its a tag:

The underlined address is relative, so it has to be joined to the base URL https://pvp.qq.com/web201605/.

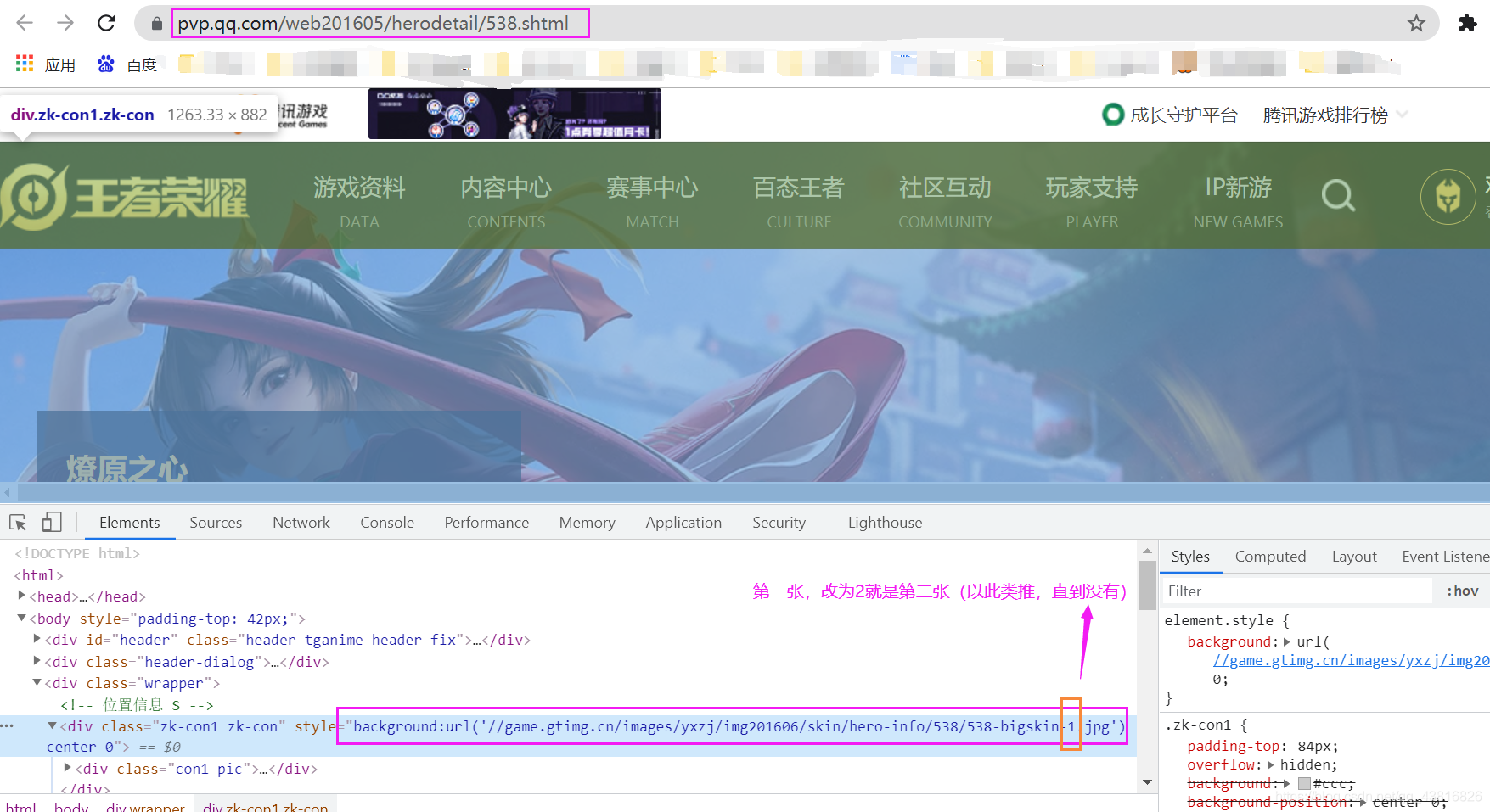
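Because the underlined href is relative, resolving it with `urllib.parse.urljoin` is a little sturdier than plain string concatenation. The sketch below swaps BeautifulSoup for the standard library's `html.parser` so it runs without extra packages; `HrefCollector`, `hero_page_urls` and the sample markup are made up for illustration and are not copied from the real page.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Address of the hero list page; relative hrefs are resolved against it
BASE_URL = "https://pvp.qq.com/web201605/herolist.shtml"


class HrefCollector(HTMLParser):
    """Hypothetical helper: collects the href of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs; value may be None
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def hero_page_urls(html, base=BASE_URL):
    """Return an absolute URL for every href found in html."""
    parser = HrefCollector()
    parser.feed(html)
    # urljoin drops the last path segment of base ("herolist.shtml")
    # and appends the relative href to the remaining directory
    return [urljoin(base, h) for h in parser.hrefs]


# Made-up markup shaped like one entry of the hero list
sample = '<ul><li><a href="herodetail/example.shtml" target="_blank">x</a></li></ul>'
print(hero_page_urls(sample))
```

On the real page this collects every `<a>`, so you would still restrict it to the `herolist-content` block, as the script below does.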

On each hero's detail page, scrape the hero's skin image.
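In the script, the skin image address is read from the `style` attribute of the `zk-con1 zk-con` div, where it sits inside a CSS `url('//...')` background. A regular expression pulls it out more robustly than splitting the string on `//` and `')`. This is a minimal sketch: `background_image_url` is a hypothetical helper, and the style string is a made-up example rather than one copied from the site.

```python
import re

# Matches url(...) with optional single or double quotes around the address
STYLE_URL = re.compile(r"""url\(\s*['"]?(.*?)['"]?\s*\)""")


def background_image_url(style, scheme="https"):
    """Return the first url(...) in an inline style as an absolute URL, or None."""
    match = STYLE_URL.search(style or "")
    if match is None:
        return None
    url = match.group(1)
    # Protocol-relative addresses ("//host/path") need a scheme in front
    if url.startswith("//"):
        url = f"{scheme}:{url}"
    return url


# Made-up style value in the same shape as the one the script parses
style = "background:url('//example.com/skins/hero-1.jpg') center 0"
print(background_image_url(style))  # → https://example.com/skins/hero-1.jpg
```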

Tip:

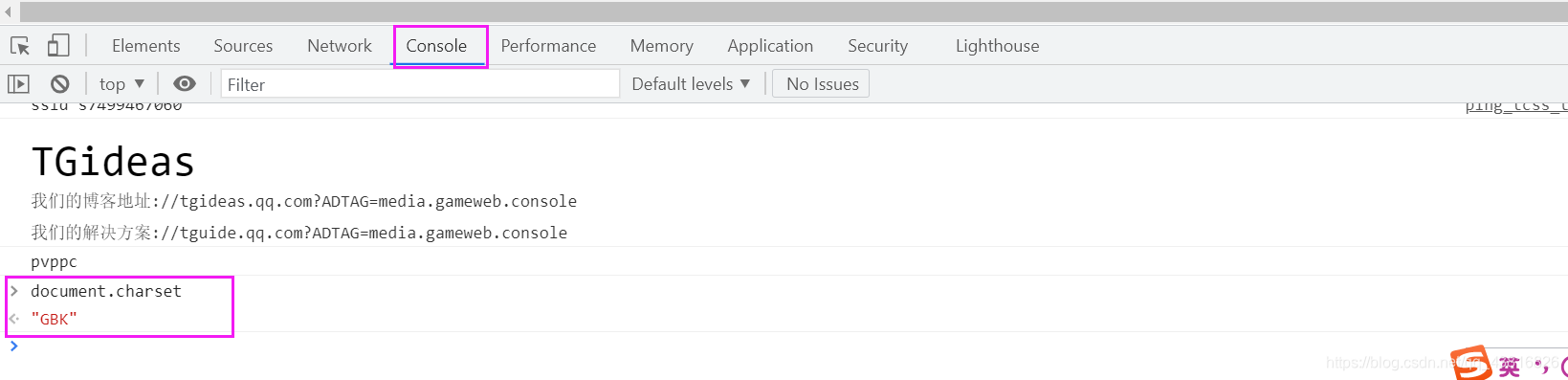

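Check the page's actual encoding in the browser console (for example with document.characterSet) instead of habitually writing "utf-8"; otherwise the Chinese text will come out garbled. Both pages used here are GBK.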
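Instead of looking the encoding up by hand, you can read the charset the page declares in its `<meta>` tag. The helpers below (`detect_charset`, `decode_page`) are hypothetical and not part of the original script; they only check the `<meta>` declaration, not the HTTP `Content-Type` header, and fall back to UTF-8. requests also offers `r.apparent_encoding`, which guesses the encoding from the response bytes.

```python
import re

# Finds charset=... in both <meta charset="gbk"> and the http-equiv Content-Type form
META_CHARSET = re.compile(rb"""<meta[^>]+charset=["']?([A-Za-z0-9_-]+)""", re.IGNORECASE)


def detect_charset(raw, default="utf-8"):
    """Return the charset declared in the page's <meta> tag, or default."""
    match = META_CHARSET.search(raw)
    return match.group(1).decode("ascii").lower() if match else default


def decode_page(raw):
    """Decode raw HTML bytes with the charset the page itself declares."""
    # errors="replace" keeps going if a few bytes are invalid in that charset
    return raw.decode(detect_charset(raw), errors="replace")


# Made-up page: GBK-encoded bytes with a matching meta declaration
page = '<meta charset="gbk"><h2>王者</h2>'.encode("gbk")
print(detect_charset(page), decode_page(page))
```

With requests you would pass `r.content` (the raw bytes), not `r.text`, to `decode_page`.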

Full source code

"""

@File :getSkins.py

@Author :

@Date :2021/7/22

@Desc :

"""

import requests

from bs4 import BeautifulSoup

import urllib

import codecs

def getSkin():

link = "https://pvp.qq.com/web201605/herolist.shtml"

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.96 Safari/537.36'

}

r = requests.get(link, headers=headers)

# 网页编码格式

r.encoding = 'GBK'

soup = BeautifulSoup(r.text, 'lxml')

# 查找图片所在标签

a_list = soup.find("div",class_="herolist-content")

ul = a_list.findAll("li")

# 图片保存路径

path = "D:\MyWeb\Heroes\\"

for u in ul:

l = str(u).split("<li><a")

ll = str(l[:2:]).split("target=")

lll = str(ll[0]).split("\"") #三次分割出a标签的href

# print(lll[1])

link = "https://pvp.qq.com/web201605/"+lll[1] #获取每一个英雄的具体网址

# print(link)

r = requests.get(link, headers=headers)

# 网页编码

r.encoding = "GBK"

soup = BeautifulSoup(r.text, 'lxml')

a_list = soup.find("div", class_="zk-con1 zk-con").get('style')

img_name = soup.find("h2", class_="cover-name").text #获取英雄名字

img_url = str(str(a_list).split("//")[1]).split("')")[0] #获取图片地址

# img_url = img_url.replace("n-1","n-2") #爬取每个英雄第二张图片,图片命名也要改成 "2.png"

# print(img_name,":",img_url)

# 保存图片到本地

bytes = urllib.request.urlopen("http://"+img_url)

f = codecs.open(path+img_name+".png","wb")

f.write(bytes.read())

f.flush()

f.close()

print("End======")

getSkin()
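The script saves every hero as `<name>.png`, so a second run overwrites the files, and the commented-out `n-2` variant would need a different file name. A small helper pair can handle both. This is a sketch only: `skin_path`, `save_image` and the `<hero>_<index>` naming scheme are hypothetical, and the download uses the standard library's `urllib.request`.

```python
import os
import re
import urllib.request

# Characters that Windows does not allow in file names
INVALID_CHARS = re.compile(r'[<>:"/\\|?*]')


def skin_path(save_dir, hero_name, index=1, ext=".png"):
    """Build a path like <save_dir>/<hero>_<index>.png (hypothetical naming scheme)."""
    safe = INVALID_CHARS.sub("_", hero_name).strip() or "unknown"
    return os.path.join(save_dir, f"{safe}_{index}{ext}")


def save_image(url, path, headers=None):
    """Download url to path unless the file already exists; return True if downloaded."""
    if os.path.exists(path):
        return False  # skip files saved by an earlier run
    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    req = urllib.request.Request(url, headers=headers or {})
    with urllib.request.urlopen(req, timeout=10) as resp, open(path, "wb") as f:
        f.write(resp.read())
    return True
```

For the second image you would, for example, apply the `n-1` to `n-2` replacement to the image URL and pass `skin_path(save_dir, img_name, 2)` as the target, so both skins of a hero end up side by side.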