文章目录

(一)数据合并

1.1 数据合并之join

jion()

import numpy as np

import pandas as pd

df1 = pd.DataFrame(np.ones((2,4)),index=["A","B"],columns=list("abcd"))

print(df1)

df2 = pd.DataFrame(np.zeros((3,3)),index=["A","B","C"],columns=list("xyz"))

print("$"*100)

print(df2)

t1 = df1.join(df2)

t2 = df2.join(df1)

print("$"*100)

print(t1)

print("$"*100)

print(t2)

OUT:

a b c d

A 1.0 1.0 1.0 1.0

B 1.0 1.0 1.0 1.0

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

x y z

A 0.0 0.0 0.0

B 0.0 0.0 0.0

C 0.0 0.0 0.0

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

a b c d x y z

A 1.0 1.0 1.0 1.0 0.0 0.0 0.0

B 1.0 1.0 1.0 1.0 0.0 0.0 0.0

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

x y z a b c d

A 0.0 0.0 0.0 1.0 1.0 1.0 1.0

B 0.0 0.0 0.0 1.0 1.0 1.0 1.0

C 0.0 0.0 0.0 NaN NaN NaN NaN

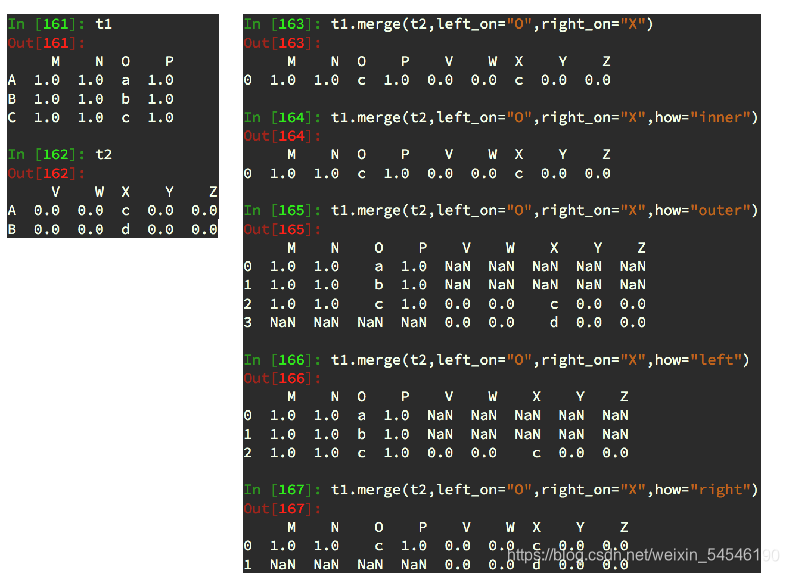

1.2 数据合并之merge

merge:按照指定的列把数据按照一定的方式合并到一起

- 默认的合并方式inner,交集

- merge outer,并 集,NaN补全

- merge left,左边为准,NaN补全

- merge right,右边为准,NaN补全

1.2.1 默认的合并方式inner,交集

import numpy as np

import pandas as pd

df1 = pd.DataFrame(np.ones((2,4)),index=["A","B"],columns=list("abcd"))

print(df1)

df2 = pd.DataFrame(np.zeros((3,3)),index=["A","B","C"],columns=list("xyz"))

#print("$"*100)

#print(df2)

# 数据合并之merge

df3 = pd.DataFrame(np.zeros((3,3)),columns=list("fax"))

print("$"*100)

print(df3)

t3 = df1.merge(df3,on="a")

print("$"*100)

print(t3)

OUT:

a b c d

A 1.0 1.0 1.0 1.0

B 1.0 1.0 1.0 1.0

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

f a x

0 0.0 0.0 0.0

1 0.0 0.0 0.0

2 0.0 0.0 0.0

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

Empty DataFrame

Columns: [a, b, c, d, f, x]

Index: []

为什么会出现Empty DataFrame这种结果呢!

答:因为df1全为1,df3的a列索引全为0,又因为默认情况下merge取交集,所以两个取交集(merge)肯定是空集。

df3.loc[1,"a"] = 1

print("$"*100)

print(df1)

print("$"*100)

print(df3)

t4 = df1.merge(df3,on="a")

print("$"*100)

print(t4)

OUT:

a b c d

A 1.0 1.0 1.0 1.0

B 1.0 1.0 1.0 1.0

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

f a x

0 0.0 0.0 0.0

1 0.0 1.0 0.0

2 0.0 0.0 0.0

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

a b c d f x

0 1.0 1.0 1.0 1.0 0.0 0.0

1 1.0 1.0 1.0 1.0 0.0 0.0

**解释:**df3的第二行第一列换成了1,与df1中a这一列的两行1都是相等的,所以把df3的第二行分别合并到df1上,又因为df3的第二行的f和x都是0,所以合并后全部是0.(看下面的例子加深印象)

print(df1)

df4 = pd.DataFrame(np.arange(9).reshape(3,3),columns=list("fax"))

print("$"*100)

print(df4)

t5 = df1.merge(df4,on="a")

print("$"*100)

print(t5)

OUT:

a b c d

A 1.0 1.0 1.0 1.0

B 1.0 1.0 1.0 1.0

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

f a x

0 0 1 2

1 3 4 5

2 6 7 8

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

a b c d f x

0 1.0 1.0 1.0 1.0 0 2

1 1.0 1.0 1.0 1.0 0 2

1.2.2 merge outer,并 集,NaN补全

t7 = df1.merge(df4,on="a",how="outer")

print("#"*50)

print(df1)

print("#"*50)

print(t7)

OUT:

a b c d

A 1.0 1.0 1.0 1.0

B 1.0 1.0 1.0 1.0

##################################################

a b c d f x

0 1.0 1.0 1.0 1.0 0 2

1 1.0 1.0 1.0 1.0 0 2

2 4.0 NaN NaN NaN 3 5

3 7.0 NaN NaN NaN 6 8

1.1.3 merge left,左边为准,NaN补全

以df1为准

t8 = df1.merge(df4,on="a",how="left")

print("#"*50)

print(df1)

print("#"*50)

print(df4)

print("#"*50)

print(t8)

OUT:

a b c d

A 1.0 1.0 1.0 1.0

B 1.0 1.0 1.0 1.0

##################################################

f a x

0 0 1 2

1 3 4 5

2 6 7 8

##################################################

a b c d f x

0 1.0 1.0 1.0 1.0 0 2

1 1.0 1.0 1.0 1.0 0 2

1.2.4 merge right,右边为准,NaN补全

以df4为准

t9 = df1.merge(df4,on="a",how="right")

print('\n')

print("#"*50)

print(df1)

print("#"*50)

print(df4)

print("#"*50)

print(t9)

OUT:

a b c d

A 1.0 1.0 1.0 1.0

B 1.0 1.0 1.0 1.0

##################################################

f a x

0 0 1 2

1 3 4 5

2 6 7 8

##################################################

a b c d f x

0 1.0 1.0 1.0 1.0 0 2

1 1.0 1.0 1.0 1.0 0 2

2 4.0 NaN NaN NaN 3 5

3 7.0 NaN NaN NaN 6 8

(二)实例

接上一篇的实例代码:

数据科学库(五 · 一)数字的合并与分组聚合(太具有逻辑性,多复习)

题目·现在我们有一组关于全球星巴克店铺的统计数据,如果我想知道美国的星巴克数量和中国的哪个多,或者我想知道中国每个省份星巴克的数量的情况,那么应该怎么办

2.1 查看starbucks_store_worldwide.csv文件的数据

# coding=utf-8

import pandas as pd

import numpy as np

file_path = "starbucks_store_worldwide.csv"

df = pd.read_csv(file_path)

print(df.head(1))

print(df.info())

OUT:

Brand Store Number ... Longitude Latitude

0 Starbucks 47370-257954 ... 1.53 42.51

[1 rows x 13 columns]

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 25600 entries, 0 to 25599

Data columns (total 13 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Brand 25600 non-null object

1 Store Number 25600 non-null object

2 Store Name 25600 non-null object

3 Ownership Type 25600 non-null object

4 Street Address 25598 non-null object

5 City 25585 non-null object

6 State/Province 25600 non-null object

7 Country 25600 non-null object

8 Postcode 24078 non-null object

9 Phone Number 18739 non-null object

10 Timezone 25600 non-null object

11 Longitude 25599 non-null float64

12 Latitude 25599 non-null float64

dtypes: float64(2), object(11)

memory usage: 2.5+ MB

None

- 分组和聚合

在pandas中类似的分组的操作我们有很简单的方式来完成df.groupby(by="columns_name"),那么问题来了,调用groupby方法之后返回的是什么内容?

2.2 美国的星巴克数量和中国的哪个多

grouped = df.groupby(by="Country")

print(grouped)

#DataFrameGroupBy

#可以进行遍历

#for i,j in grouped:

# print(i)

# print("-"*100)

# print(j, type(j))

# print("*" * 100)

#df[df["Country"]=="US"] 直接查看美国的数据

#调用聚合方法

country_count = grouped["Brand"].count()

## count():表示统计了每个国家Brand的总数量

print(country_count)

print(country_count["US"])

print(country_count["CN"]) #CN:指中国

OUT:

<pandas.core.groupby.generic.DataFrameGroupBy object at 0x000001BF17B2FBE0>

Country

AD 1

AE 144

AR 108

AT 18

AU 22

...

TT 3

TW 394

US 13608

VN 25

ZA 3

Name: Brand, Length: 73, dtype: int64

13608

2734

结果:由上述数据可知道美国有13608家星巴克,中国有2734家辛巴克。

2.3 统计中国每个省份星巴克的数量的情况

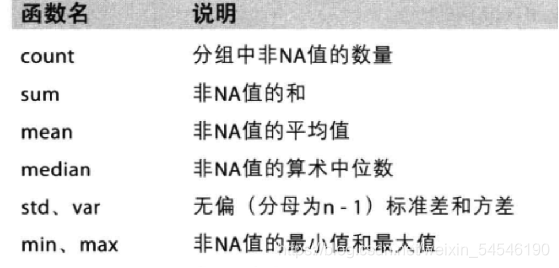

- DataFrameGroupBy对象有很多经过优化的方法

- 如果我们需要对国家和省份进行分组统计,应该怎么操作呢?

grouped = df.groupby(by=[df["Country"],df["State/Province"]])

+ **统计中国每个省份星巴克的数量的情况**

```python

# coding=utf-8

import pandas as pd

import numpy as np

file_path = "starbucks_store_worldwide.csv"

df = pd.read_csv(file_path)

#print(df.head(1))

print(df.info())

grouped = df.groupby(by="Country")

print(grouped)

#DataFrameGroupBy

#可以进行遍历

#for i,j in grouped:

# print(i)

# print("-"*100)

# print(j, type(j))

# print("*" * 100)

#df[df["Country"]=="US"]

#调用聚合方法

#country_count = grouped["Brand"].count()

## count():表示统计数量

#print(country_count)

#print(country_count["US"])

#print(country_count["CN"]) #CN:指中国

# 统计中国每个省份星巴克的数量的情况

china_data = df[df["Country"]=="CN"] # 提取出中国的数据

grouped = china_data.groupby(by="State/Province").count()["Brand"]

#统计中国每个State/Province,Brand的数量

print("$"*50)

print(grouped)

#这里为了避免争端,所以输出的每个省份名字都用数字表示

OUT:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 25600 entries, 0 to 25599

Data columns (total 13 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Brand 25600 non-null object

1 Store Number 25600 non-null object

2 Store Name 25600 non-null object

3 Ownership Type 25600 non-null object

4 Street Address 25598 non-null object

5 City 25585 non-null object

6 State/Province 25600 non-null object

7 Country 25600 non-null object

8 Postcode 24078 non-null object

9 Phone Number 18739 non-null object

10 Timezone 25600 non-null object

11 Longitude 25599 non-null float64

12 Latitude 25599 non-null float64

dtypes: float64(2), object(11)

memory usage: 2.5+ MB

None

<pandas.core.groupby.generic.DataFrameGroupBy object at 0x000001AF5AA0FBE0>

$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$

State/Province

11 236

12 58

13 24

14 8

15 8

21 57

22 13

23 16

31 551

32 354

33 315

34 26

35 75

36 13

37 75

41 21

42 76

43 35

44 333

45 21

46 16

50 41

51 104

52 9

53 24

61 42

62 3

63 3

64 2

91 162

92 13

Name: Brand, dtype: int64

2.4 数据按照多个条件进行分组

- 获取分组之后的某一部分数据:

df.groupby(by=["Country","State/Province"])["Country"].count()

- 对某几列数据进行分组:

df["Country"].groupby(by=[df["Country"],df["State/Province"]]).count()

- 数据按照多个条件进行分组实例

# coding=utf-8

import pandas as pd

import numpy as np

file_path = "starbucks_store_worldwide.csv"

df = pd.read_csv(file_path)

#print(df.head(1))

print(df.info())

grouped = df.groupby(by="Country")

print(grouped)

# 数据按照多个条件进行分组

grouped = df["Brand"].groupby(by=[df["Country"],df["State/Province"]]).count()

print(grouped)

OUT:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 25600 entries, 0 to 25599

Data columns (total 13 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Brand 25600 non-null object

1 Store Number 25600 non-null object

2 Store Name 25600 non-null object

3 Ownership Type 25600 non-null object

4 Street Address 25598 non-null object

5 City 25585 non-null object

6 State/Province 25600 non-null object

7 Country 25600 non-null object

8 Postcode 24078 non-null object

9 Phone Number 18739 non-null object

10 Timezone 25600 non-null object

11 Longitude 25599 non-null float64

12 Latitude 25599 non-null float64

dtypes: float64(2), object(11)

memory usage: 2.5+ MB

None

<pandas.core.groupby.generic.DataFrameGroupBy object at 0x000002112656FBE0>

Country State/Province

AD 7 1

AE AJ 2

AZ 48

DU 82

FU 2

..

US WV 25

WY 23

VN HN 6

SG 19

ZA GT 3

Name: Brand, Length: 545, dtype: int64

(三)DataFrame和Series的区别

- dataframe,是一个二维结构,除了拥有index和value之外,还拥有column。

- series,只是一个一维数据结构,它由index和value组成。 dataframe,是一个二维结构,除了拥有index和value之外,还拥有column。

- dataframe由多个series组成,无论是行还是列,单独拆分出来都是一个series。

这里可以参考我之前写的:数据科学库笔记(四)pandas

(四)python数据科学库学习目录

学习python数据科学库笔记的顺序:

python数据科学库(一)

python数据科学库(二)matplotlib

python数据科学库笔记(三)Numpy

Python下载并安装第三方库(cvxpy)

python数据科学库笔记(四)pandas

python数据科学库(五 · 一)数字的合并与分组聚合(太具有逻辑性,多复习)