While studying baseline 1 of a Kaggle competition (GPS position prediction), I collected the tools that baseline relies on and wrote a short demo for each:

1.pathlib

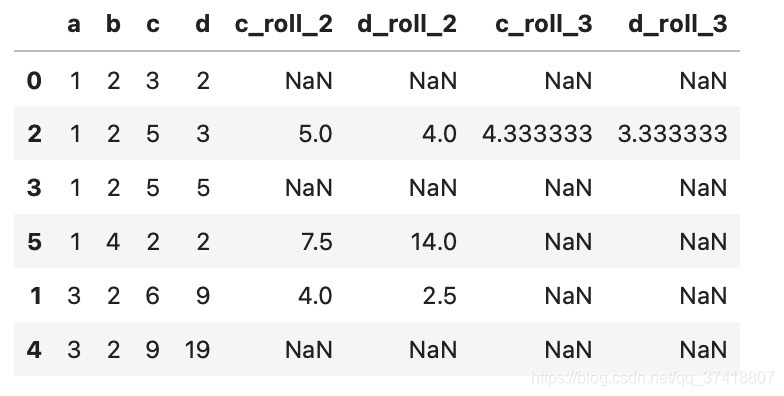

The directory layout is roughly as shown in the figure:

from pathlib import Path

path_data = Path('../input/google-smartphone-decimeter-challenge')

# Join paths with the / operator

print('1. Joined path:',path_data/'train')

# Non-recursively collect the matching files directly under this directory

three_csv_paths = path_data.glob('*.csv')

print('2. csv files in the current directory:',list(three_csv_paths))

# Recursively collect the matching files in this directory and all subdirectories

all_csv_path = path_data.rglob('*.csv')

print('3. First 5 csv files across all directories:',list(all_csv_path)[:5])

Output:

1. Joined path: ../input/google-smartphone-decimeter-challenge/train

2. csv files in the current directory: [PosixPath('../input/google-smartphone-decimeter-challenge/sample_submission.csv'), PosixPath('../input/google-smartphone-decimeter-challenge/baseline_locations_train.csv'), PosixPath('../input/google-smartphone-decimeter-challenge/baseline_locations_test.csv')]

3. First 5 csv files across all directories: [PosixPath('../input/google-smartphone-decimeter-challenge/sample_submission.csv'), PosixPath('../input/google-smartphone-decimeter-challenge/baseline_locations_train.csv'), PosixPath('../input/google-smartphone-decimeter-challenge/baseline_locations_test.csv'), PosixPath('../input/google-smartphone-decimeter-challenge/metadata/constellation_type_mapping.csv'), PosixPath('../input/google-smartphone-decimeter-challenge/test/2021-04-29-US-MTV-2/SamsungS20Ultra/SamsungS20Ultra_derived.csv')]

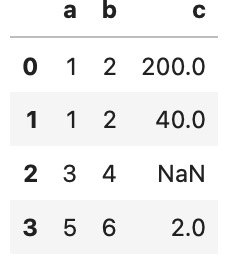
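
The difference between glob and rglob can be checked without the competition data. The following is a minimal sketch that builds a throwaway directory tree; the file and folder names (top.csv, train/phone_a/nested.csv, notes.txt) are made up for illustration and are not part of the dataset.

```python
import tempfile
from pathlib import Path

# Build a temporary directory tree (hypothetical names, not the competition files).
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train" / "phone_a").mkdir(parents=True)
    (root / "top.csv").write_text("x\n1\n")
    (root / "train" / "phone_a" / "nested.csv").write_text("x\n2\n")
    (root / "notes.txt").write_text("not a csv\n")

    # glob only matches direct children; rglob also walks every subdirectory.
    # Both return lazy generators, so they are consumed inside the with-block.
    shallow = sorted(p.relative_to(root).as_posix() for p in root.glob("*.csv"))
    deep = sorted(p.relative_to(root).as_posix() for p in root.rglob("*.csv"))

print(shallow)  # ['top.csv']
print(deep)     # ['top.csv', 'train/phone_a/nested.csv']
```

Because both methods return generators, a result can only be iterated once, which is why the demo above wraps them in list() before printing.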

2. pandas.merge

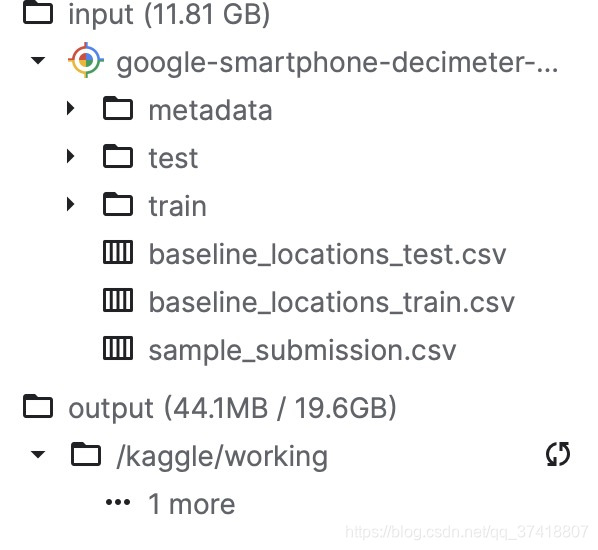

(1) Merge table 1 and table 2 on column a

import pandas as pd

table1 = pd.DataFrame([[1,2],[3,4],[5,6]],columns = ['a','b'])

table2 = pd.DataFrame([[1,200],[1,40],[5,2]],columns = ['a','c'])

table1.merge(table2,on = 'a')

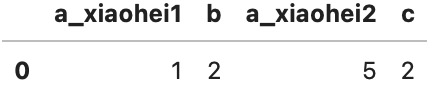

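(2) Merge using column b of table 1 and column c of table 2 as the keys

# suffixes appends a suffix to column names that appear in both table 1 and table 2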

table1.merge(table2,left_on = 'b',right_on='c',suffixes = ('_xiaohei1','_xiaohei2'))
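
To see what the suffixes actually do, here is a small sketch on the same two tables. The suffix strings "_t1" and "_t2" are arbitrary choices for this illustration. Only column a exists in both tables, so only a gets renamed.

```python
import pandas as pd

table1 = pd.DataFrame([[1, 2], [3, 4], [5, 6]], columns=["a", "b"])
table2 = pd.DataFrame([[1, 200], [1, 40], [5, 2]], columns=["a", "c"])

# Default inner join on b == c: only b=2 (table 1) and c=2 (table 2) match.
merged = table1.merge(table2, left_on="b", right_on="c", suffixes=("_t1", "_t2"))

print(merged.columns.tolist())  # ['a_t1', 'b', 'a_t2', 'c']
print(len(merged))              # 1
```

The single surviving row pairs a=1 from table 1 with a=5 from table 2, which is why keeping both a columns apart with suffixes matters.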

(3) Left (right) join

table1.merge(table2,how = 'left',on = 'a')

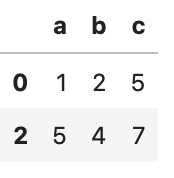
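
The row counts of the inner and left joins differ for two reasons: a key that repeats in table 2 multiplies rows, and a key missing from table 2 is dropped by the inner join but kept (with NaN) by the left join. A minimal sketch on the same tables, using merge's indicator=True option to label where each row came from:

```python
import pandas as pd

table1 = pd.DataFrame([[1, 2], [3, 4], [5, 6]], columns=["a", "b"])
table2 = pd.DataFrame([[1, 200], [1, 40], [5, 2]], columns=["a", "c"])

# Inner join (the default): a=1 matches two rows of table2, a=3 matches none.
inner = table1.merge(table2, on="a")

# Left join keeps every row of table1; indicator=True adds a _merge column.
left = table1.merge(table2, on="a", how="left", indicator=True)

print(len(inner))                # 3
print(len(left))                 # 4
print(left["_merge"].tolist())   # ['both', 'both', 'left_only', 'both']
```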

3.DataFrame.drop_duplicates (deduplication)

(1) Deduplicate on column a

import pandas as pd

"""

params:

keep:

'first': keep the first row of each group of duplicates

'last': keep the last row of each group of duplicates

"""

table = pd.DataFrame([[1,2,5],[1,4,7],[5,4,7]],columns = ['a','b','c'])

table.drop_duplicates(['a'])

(2) Deduplicate on columns (b, c)

table.drop_duplicates(['b','c'])

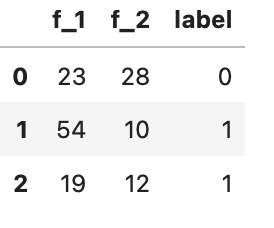
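
The effect of the keep parameter is easiest to see from the surviving row indices. A short sketch on the same table, which also tries keep=False (drop every row that has a duplicate):

```python
import pandas as pd

table = pd.DataFrame([[1, 2, 5], [1, 4, 7], [5, 4, 7]], columns=["a", "b", "c"])

# Rows 0 and 1 share a=1, so they form one group of duplicates on column a.
first = table.drop_duplicates(["a"], keep="first")
last = table.drop_duplicates(["a"], keep="last")
neither = table.drop_duplicates(["a"], keep=False)

print(first.index.tolist())    # [0, 2]
print(last.index.tolist())     # [1, 2]
print(neither.index.tolist())  # [2]
```

drop_duplicates returns a new DataFrame and leaves the original index labels in place; calling reset_index(drop=True) afterwards renumbers the rows if that is needed.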

4.pandas Series.map method

import pandas as pd

data = pd.DataFrame([[23,28,'xiaohei'],[54,10,'xiaobai'],[19,12,'xiaobai']],columns = ['f_1','f_2','label'])

map_ = {'xiaohei':0,'xiaobai':1}

data['label'] = data['label'].map(map_)

data

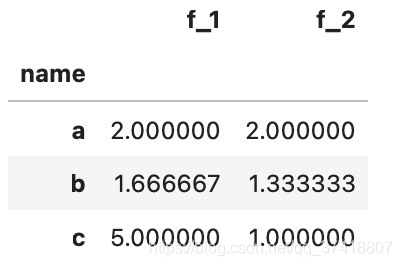
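
One thing to watch with a dict mapping: any value that is not a key of the dict becomes NaN, which also turns the column into floats. A minimal sketch, where "xiaohui" is a made-up label added only to trigger that case and -1 is an arbitrary placeholder:

```python
import pandas as pd

labels = pd.Series(["xiaohei", "xiaobai", "xiaohui"])  # "xiaohui" is not in the mapping
mapping = {"xiaohei": 0, "xiaobai": 1}

mapped = labels.map(mapping)            # unmapped value becomes NaN, dtype float64
filled = mapped.fillna(-1).astype(int)  # replace NaN with a placeholder, back to int

print(mapped.isna().tolist())  # [False, False, True]
print(filled.tolist())         # [0, 1, -1]
```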

5.pandas.groupby

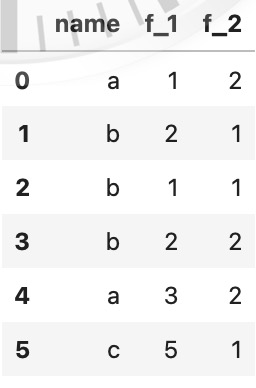

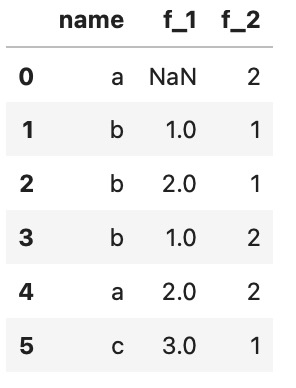

(1) Sample data

import pandas as pd

data = pd.DataFrame([['a',1,2],['b',2,1],['b',1,1],['b',2,2],['a',3,2],['c',5,1]],columns = ['name','f_1','f_2'])

data

(2) Common aggregation functions

data.groupby('name').mean()

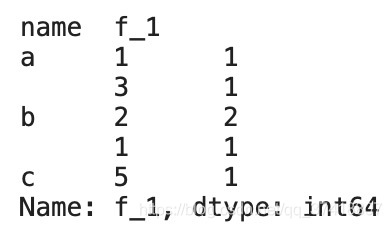

data.groupby(['name'])['f_1'].value_counts()

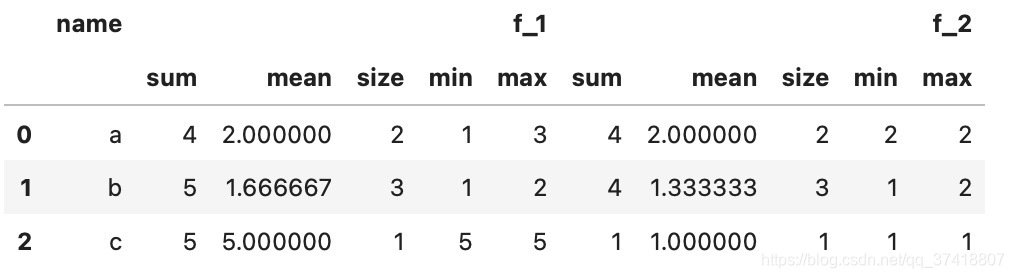

(3) Custom aggregation over grouped data

groupby_columns = ['name']

values_columns = ['f_1','f_2']

function_names = ['sum','mean','size','min','max']

tmp = data.groupby(groupby_columns)[values_columns].agg(function_names).reset_index()

tmp

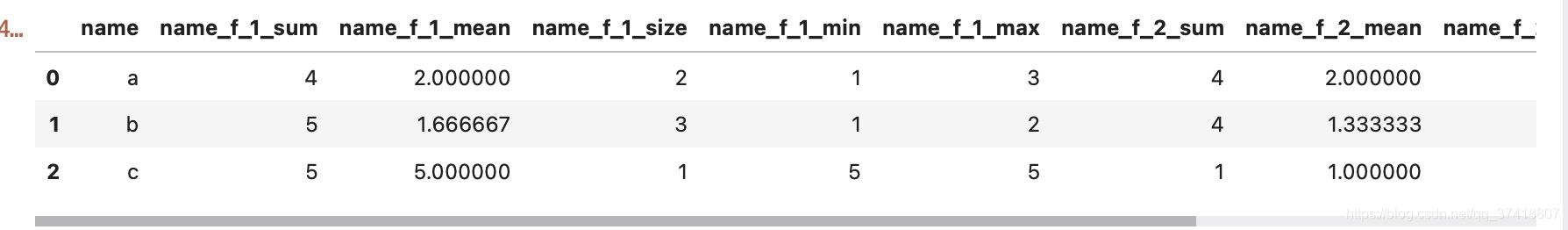

# Custom column names: flatten the (column, function) pairs into single names

tmp_columns = groupby_columns.copy()

for i in values_columns:

    for j in function_names:

        tmp_columns.append('_'.join(groupby_columns) + '_' + i + '_' + j)

tmp.columns = tmp_columns

tmp
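
As an alternative to renaming the columns in a loop, pandas also supports named aggregation, where each output column is declared as name=(input column, function). This is a sketch of that alternative, not what the baseline does; the output names simply mimic the name_f_1_sum pattern built above.

```python
import pandas as pd

data = pd.DataFrame(
    [["a", 1, 2], ["b", 2, 1], ["b", 1, 1], ["b", 2, 2], ["a", 3, 2], ["c", 5, 1]],
    columns=["name", "f_1", "f_2"],
)

# Each keyword is an output column: keyword=(source column, aggregation function).
named = data.groupby("name").agg(
    name_f_1_sum=("f_1", "sum"),
    name_f_1_mean=("f_1", "mean"),
    name_f_2_max=("f_2", "max"),
).reset_index()

print(named.columns.tolist())
# ['name', 'name_f_1_sum', 'name_f_1_mean', 'name_f_2_max']
print(named["name_f_1_sum"].tolist())  # [4, 5, 5]
```

The loop version scales better when every function is applied to every column; named aggregation is handier when only a few specific combinations are needed.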

6.shift (useful for extracting time-series features)

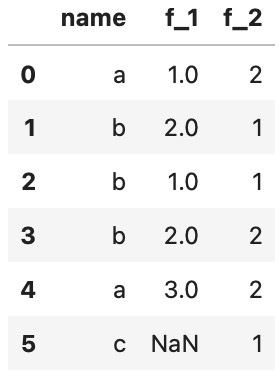

(1) Basic usage

import pandas as pd

shift_list = [-1,1]

data = pd.DataFrame([['a',1,2],['b',2,1],['b',1,1],['b',2,2],['a',3,2],['c',5,1]],columns = ['name','f_1','f_2'])

data

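# Shift down by 1: each row takes the previous row's value, the first row becomes NaN

data['f_1'] = data['f_1'].shift(1)

data

# Shift up by 1: each row takes the next row's value, the last row becomes NaN

data['f_1'] = data['f_1'].shift(-1)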

data

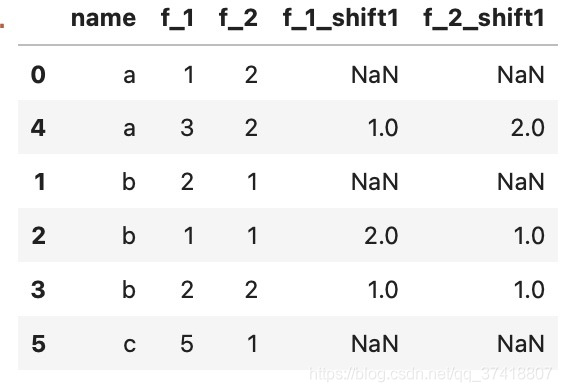

(2) shift after grouping

shift_list = [-1,1]

shift_columns = ['f_1','f_2']

tmp_data = data.groupby(['name']).shift(1)

for column in shift_columns:

    data[column+'_shift1'] = tmp_data[column]

data.sort_values(['name'])

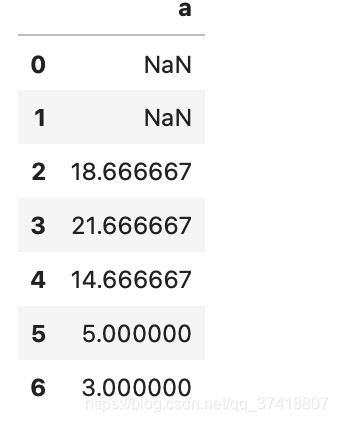
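
A typical time-series feature built from a grouped shift is the change since the previous row of the same group. The sketch below assumes the rows are already in time order within each name; the column name f_1_diff is made up for this illustration.

```python
import pandas as pd

data = pd.DataFrame(
    [["a", 1, 2], ["b", 2, 1], ["b", 1, 1], ["b", 2, 2], ["a", 3, 2], ["c", 5, 1]],
    columns=["name", "f_1", "f_2"],
)

# Previous f_1 value within the same name; the first row of each group has none.
prev = data.groupby("name")["f_1"].shift(1)

# Difference to the previous row of the same group (NaN where there is no previous row).
data["f_1_diff"] = data["f_1"] - prev

print(data["f_1_diff"].isna().tolist())    # [True, True, False, False, False, True]
print(data["f_1_diff"].dropna().tolist())  # [-1.0, 1.0, 2.0]
```

The grouped shift keeps the original row index, so the result can be assigned straight back to the DataFrame without any reordering.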

7.Feature smoothing (sliding-window operations)

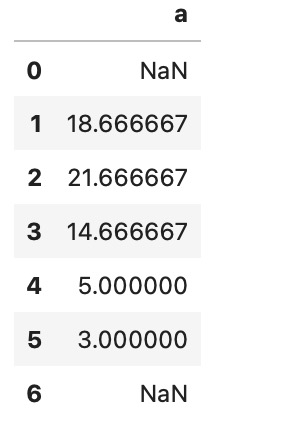

(1) Basic usage

import pandas as pd

data1 = pd.DataFrame([[1],[23],[32],[10],[2],[3],[4]],columns = ['a'])

data1.rolling(3).mean()

data1.rolling(3,center = True).mean()

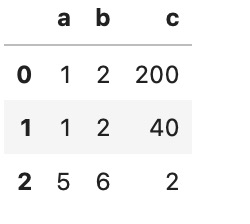

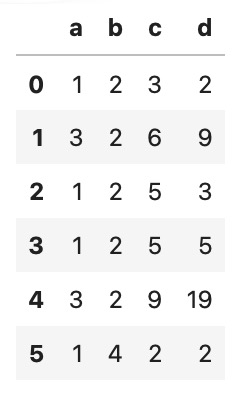
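
To make the window placement concrete, the sketch below recomputes the same column as a Series and compares a trailing window, a centered window, and a trailing window with min_periods=1 (an extra option not used in the demo above, which lets incomplete windows produce a value instead of NaN).

```python
import pandas as pd

s = pd.Series([1, 23, 32, 10, 2, 3, 4])

trailing = s.rolling(3).mean()                 # window = current row and the 2 before it
centered = s.rolling(3, center=True).mean()    # window = previous, current, next row
partial = s.rolling(3, min_periods=1).mean()   # allow windows with fewer than 3 values

# Trailing: the first full window ends at row 2, (1 + 23 + 32) / 3 = 18.67
print(trailing.round(2).tolist())  # [nan, nan, 18.67, 21.67, 14.67, 5.0, 3.0]
# Centered: the same averages, moved one row earlier; NaN at both ends
print(centered.round(2).tolist())  # [nan, 18.67, 21.67, 14.67, 5.0, 3.0, nan]
print(partial.iloc[:2].tolist())   # [1.0, 12.0]
```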

(2) Combined with groupby

import pandas as pd

data = pd.DataFrame([[1,2,3,2],[3,2,6,9],[1,2,5,3],[1,2,5,5],[3,2,9,19],[1,4,2,2]],columns = ['a','b','c','d'])

data

groupby_columns = ['a','b']

roll_columns = ['c','d']

window_list = [2,3]

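# For each window size

for i in window_list:

    # center is an argument of rolling(), not of mean().
    # Dropping the group-key index levels restores the original row index,
    # so the assignment below lines up with the rows of data.
    temp = data.groupby(groupby_columns)[roll_columns].rolling(i, center = True).mean().reset_index(level = groupby_columns, drop = True)

    for col in roll_columns:

        data[col+'_roll_'+str(i)] = temp[col]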

data.sort_values(['a','b'])
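
Another way to keep the rolling result aligned with the original rows is groupby plus transform, which always returns values on the original index. This is a sketch of that alternative for a single column and a trailing window of 2; it is not the approach used in the baseline.

```python
import pandas as pd

data = pd.DataFrame(
    [[1, 2, 3, 2], [3, 2, 6, 9], [1, 2, 5, 3], [1, 2, 5, 5], [3, 2, 9, 19], [1, 4, 2, 2]],
    columns=["a", "b", "c", "d"],
)

# transform applies the function per group and puts the results back in row order.
data["c_roll_2"] = data.groupby(["a", "b"])["c"].transform(
    lambda s: s.rolling(2).mean()
)

# Group (1, 2) holds rows 0, 2, 3 with c = 3, 5, 5 -> NaN, 4.0, 5.0
# Group (3, 2) holds rows 1, 4 with c = 6, 9       -> NaN, 7.5
# Group (1, 4) holds row 5 only                    -> NaN
print(data["c_roll_2"].tolist())  # [nan, nan, 4.0, 5.0, 7.5, nan]
```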