对于一个带有视觉的机器人系统,相机所获得的所有信息都是在相机坐标系下描述的。要想让机器人利用视觉系统得到的信息,首先就是要确定相机坐标系与机器人之间的相对关系,这便是机器人手眼标定的研究内容。

在机器人系统中,相机的安装方式可以分为两大类:

1.眼在手外,eye-to-hand:也即摄像头安装在手臂之外的部分,与机器人的基座(世界坐标系)相对固定,不随着机械臂的运动而运动;

2.眼在手上,eye-in-hand:也即摄像头安装在机械臂上,会随着机械臂的运动而发生运动。

这两个方式的求解略有不同,但基本原理相似。

文章目录

一.手眼标定流程

1 配置功能包

1.1 配置Kinova机械臂

新建工作空间

mkdir -p ~/kinova_ws/src

cd ~/kinova_ws/src

catkin_init_workspace

cd ..

catkin_make

echo 'source ~/kinova_ws /devel/setup.bash' >> ~/.bashrc

source ~/.bashrc

下载kinova-ros功能包, 编译

cd ~/kinova_ws/src

git clone https://github.com/Kinovarobotics/kinova-ros.git kinova-ros

cd ~/kinova_ws

catkin_make

安装Moveit和pr2

sudo apt-get install ros-kinetic-moveit

sudo apt-get install ros-kinetic-trac-ik

sudo apt-get install ros-kinetic-pr2*

1.2 配置Azure Kinect

可参考:Azure Kinect SDK Ubuntu18.04安装使用:https://bokai.blog.csdn.net/article/details/119115883

安装步骤Ubuntu16.04类似. 安装过程中, 如有条件, 建议翻墙

1.3 配置visp

此处以ros-kinetic版本为例

sudo apt-get install ros-kinetic-visp

cd ~/catkin_ws/src

git clone -b kinetic-devel https://github.com/lagadic/vision_visp.git

cd ..

catkin_make

如果编译不通过,可以删除visp_tracker和visp_auto_tracker两个功能包,这两个功能包用于跟踪,不影响手眼标定

1.4 配置aruco_ros

cd ~/catkin_ws/src

git clone -b kinetic-devel https://github.com/pal-robotics/aruco_ros

cd ..

catkin_make

1.5 配置easy_handeye

cd ~/catkin_ws/src

git clone https://github.com/IFL-CAMP/easy_handeye

cd ..

catkin_make

2 编写launch文件

代码样例如下, 可供参考.

在easy_handeye/launch文件夹下新建easyhand.launch文件,输入如下代码

<launch>

<arg name="namespace_prefix" default="kinova_k4a_handeyecalibration"/>

<arg name="marker_size" doc="Size of the ArUco marker used, in meters" default="0.034"/>

<arg name="marker_id" doc="The ID of the ArUco marker used" default="582"/>

<arg name="corner_refinement" default="LINES"/>

<!-- start aruco -->

<node name="aruco_tracker" pkg="aruco_ros" type="single">

<remap from="/camera_info" to="/rgb/camera_info" />

<remap from="/image" to="/rgb/image_raw" />

<param name="image_is_rectified" value="true"/>

<param name="marker_size" value="$(arg marker_size)"/>

<param name="marker_id" value="$(arg marker_id)"/>

<param name="reference_frame" value="depth_camera_link"/>

<param name="camera_frame" value="depth_camera_link"/>

<param name="marker_frame" value="aruco_marker_frame"/>

<param name="corner_refinement" value="$(arg corner_refinement)"/>

</node>

<!-- start robot_state_publisher -->

<node name="joint_state_publisher" pkg="joint_state_publisher" type="joint_state_publisher">

</node>

<!-- start easy_handeye -->

<include file="$(find easy_handeye)/launch/calibrate.launch">

<arg name="namespace_prefix" value="$(arg namespace_prefix)"/>

<arg name="eye_on_hand" value="false"/>

<arg name="tracking_base_frame" value="depth_camera_link"/>

<arg name="tracking_marker_frame" value="aruco_marker_frame"/>

<arg name="robot_base_frame" value="j2n6s300_link_base"/>

<arg name="robot_effector_frame" value="j2n6s300_end_effector"/>

<arg name="freehand_robot_movement" value="false"/>

<arg name="robot_velocity_scaling" value="0.5"/>

<arg name="robot_acceleration_scaling" value="0.2"/>

</include>

</launch>

3 进行手眼标定

使用shell脚本启动, 依次启动Azure Kinect,Kinova,Moveit和上述launch文件,脚本代码如下

#! /bin/bash

{

gnome-terminal -x bash -c "roslaunch azure_kinect_ros_driver driver.launch;exec bash"

}

sleep 6

{

gnome-terminal -x bash -c "roslaunch kinova_bringup kinova_robot.launch;exec bash"

}

sleep 6

{

gnome-terminal -x bash -c "roslaunch j2n6s300_moveit_config j2n6s300_demo.launch;exec bash"

}

sleep 10

{

gnome-terminal -x bash -c "roslaunch easy_handeye easyhand.launch;exec bash"

}

sleep 6

{

gnome-terminal -x bash -c "rqt_image_view;exec bash"

}

如果运行成功, 将会看到如下界面

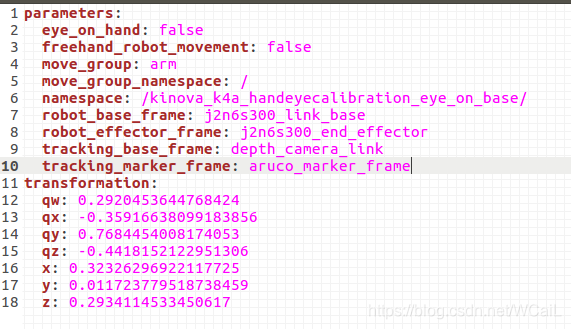

在rqt_image_view界面中可以看到标定板, 确认识别到标定板后, 点击上述界面中Take Sample进行采样. 通过Kinova提供的手柄移动手爪位置, 在不同位置进行采样, 选取20个点即可, 点击Compute计算机械臂与Kinect的坐标变换. 点击Save保存为yaml文件, 保存路径在/home/.ros/easy_handeye, 此目录为隐藏文件夹, ctrl+h可显示隐藏文件夹.yaml文件样例如下:

在rqt_image_view界面中可以看到标定板, 确认识别到标定板后, 点击上述界面中Take Sample进行采样. 通过Kinova提供的手柄移动手爪位置, 在不同位置进行采样, 选取20个点即可, 点击Compute计算机械臂与Kinect的坐标变换. 点击Save保存为yaml文件, 保存路径在/home/.ros/easy_handeye, 此目录为隐藏文件夹, ctrl+h可显示隐藏文件夹.yaml文件样例如下:

4 问题解决

问题一: No module named 'transforms3d’

解决方法:

pip install transforms3d

问题二:

[ERROR] [1628769491.142888273]: Skipped loading plugin with error: XML Document ‘/opt/ros/kinetic/share/pr2_motor_diagnostic_tool/plugin.xml’ has no Root Element. This likely means the XML is malformed or missing…

解决方法:

解决方法:

/opt/ros/kinetic/share/pr2_motor_diagnostic_tool文件夹下新建plugin.xml文件

sudo gedit plugin.xml

输入如下代码,保存.

<library path="src">

<class name="MyPlugin" type="my_module.MyPlugin" base_class_type="rqt_gui_py::Plugin">

<description>

PR2 Actuator diagnostic tool.

</description>

<qtgui>

<!-- optional grouping...

<group>

<label>Group</label>

</group>

<group>

<label>Subgroup</label>

</group>

-->

<label>PR2 Actuator Diagnostic Tool Plugin</label>

<icon type="theme">applications-development</icon>

<statustip>Great user interface to provide real value.</statustip>

</qtgui>

</class>

</library>

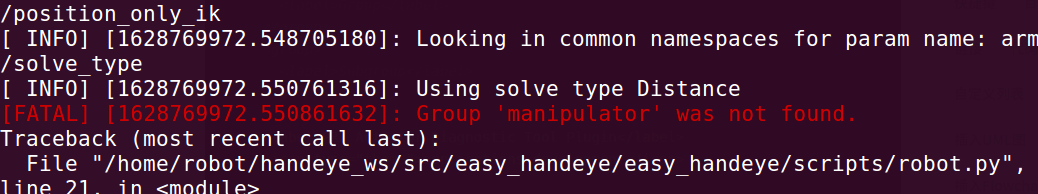

问题三:[FATAL] [1628769972.550861632]: Group ‘manipulator’ was not found.

解决方法:

解决方法:

修改easy_handeye功能包中calibrate.launch文件

<arg name="move_group" default="manipulator" doc="the name of move_group for the automatic robot motion with MoveIt!" />

将manipulator修改为自己机械臂的Group名, 例如:

<arg name="move_group" default="arm" doc="the name of move_group for the automatic robot motion with MoveIt!" />

问题四:AttributeError: ‘module’ object has no attribute 'CALIB_HAND_EYE_TSAI’

解决方法:

解决方法:

ros环境下提示python2.7环境的opencv没有cv2.CALIB_HAND_EYE_TSAI这个参数,是库引入冲突导致. 在报错的python文件顶部添加

import sys

sys.path.remove('/opt/ros/kinetic/lib/python2.7/dist-packages')

import cv2

sys.path.append('/opt/ros/kinetic/lib/python2.7/dist-packages')

二.坐标变换

通过手眼标定我们得到了机械臂link_base坐标系与Azure Kinect深度相机坐标系的变换关系, 于是我们便可以使用tf功能包, 将深度相机坐标系中的点的坐标,转换为机械臂link_base坐标系的坐标. 具体实现方法如下:

1 发布坐标变换

创建一个发布机械臂link_base坐标系与Azure Kinect深度相机坐标系之间tf变换的节点, 具体代码如下:

#include <ros/ros.h>

#include <tf/transform_broadcaster.h>

int main(int argc, char** argv){

ros::init(argc, argv, "arm_camera_tf_pub");

ros::NodeHandle n;

ros::Rate rate(50);

tf::TransformBroadcaster broadcaster;

while(n.ok()){

broadcaster.sendTransform(tf::StampedTransform(tf::Transform(tf::Quaternion(-0.35916638099183856, 0.7684454008174053, -0.4418152122951306, 0.2920453644768424),

tf::Vector3(0.32326296922117725, 0.011723779518738459, 0.2934114533450617)),

ros::Time::now(),"/j2n6s300_link_base", "/depth_camera_link"));

rate.sleep();

}

return 0;

}

2 监听坐标变换

tf消息广播之后, 其他节点就可以监听该tf消息, 从而获取需要的坐标变换, 并将一个坐标系下的点的坐标转换到另一个坐标系下, 具体代码如下:

#include <ros/ros.h>

#include <geometry_msgs/PointStamped.h>

#include <tf/transform_listener.h>

using namespace std;

void transformPoint(const tf::TransformListener& listener){

geometry_msgs::PointStamped camera_point;

camera_point.header.frame_id = "/depth_camera_link";

camera_point.header.stamp = ros::Time();

//深度相机坐标系下点的坐标

camera_point.point.x = 0;

camera_point.point.y = 0.6;

camera_point.point.z = 0.32;

try{

geometry_msgs::PointStamped arm_point;

listener.transformPoint("/j2n6s300_link_base", camera_point, arm_point);

//显示机械臂link_base坐标系下点的坐标

cout << arm_point.point.x << "," << arm_point.point.y << "," << arm_point.point.z << endl;

}

catch(tf::TransformException& ex){

ROS_ERROR("Received an exception trying to transform a point from \"depth_camera_link\" to \"j2n6s300_link_base\": %s", ex.what());

}

}

int main(int argc, char** argv){

ros::init(argc, argv, "transpoint_test");

ros::NodeHandle n;

tf::TransformListener listener(ros::Duration(10));

ros::Timer timer = n.createTimer(ros::Duration(1.0), boost::bind(&transformPoint, boost::ref(listener)));

ros::spin();

}

注意: 编译前CMakeLists中需要添加

find_package(catkin REQUIRED COMPONENTS

roscpp

tf

geometry_msgs

)

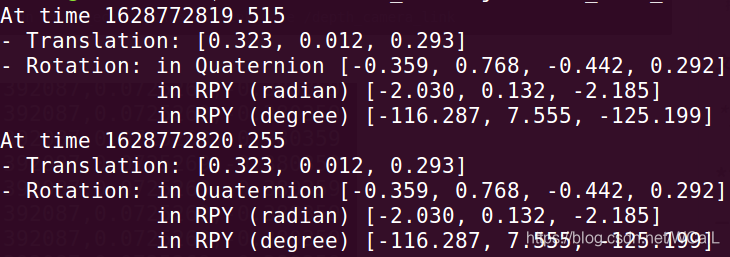

3 坐标变换结果

可通过tf_echo查看相应坐标系之间的变换关系

rosrun tf tf_echo /j2n6s300_link_base /depth_camera_link

运行结果:

上述代码运行结果:

三 参考文献

Kinect v2 在ros上利用easy_handeye进行手眼标定:https://www.guyuehome.com/22537

tf(Transform Frame)变换:https://blog.csdn.net/weixin_38145317/article/details/83956463

ROS+Kinova Jaco+KinectV2学习总结(持续更新):https://blog.csdn.net/qq_34897331/article/details/100534597

古月私房课 |“手眼”结合完成物体抓取应用:https://zhuanlan.zhihu.com/p/63757762

3D 视觉之手眼标定:https://mp.weixin.qq.com/s/nJx1dlpBXaL2_iT_J4W5Kg