Required libraries

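Four libraries are used: requests, xlwt, time, and bs4 (Beautiful Soup 4). time is part of the standard library; the other three can be installed with pip (Beautiful Soup is published on PyPI as `beautifulsoup4`).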

Analyzing the page source to extract book titles

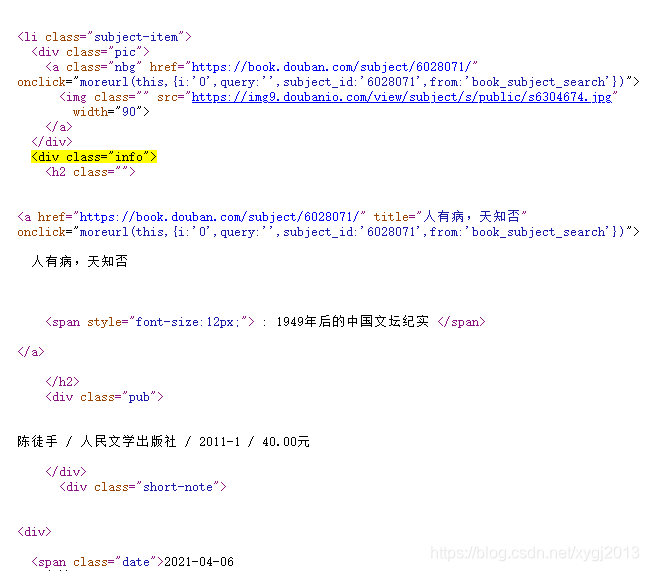

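As the screenshot shows, all of a book's information is contained in an `<li>` tag (class `subject-item`), but the title can be narrowed down further to the `<h2>` tag inside it. So the scraper searches every `<li>` tag for its `<h2>` tag, and after that it only needs the text content of that `<h2>`.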
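A minimal sketch of that two-step search, run against a small hypothetical HTML snippet. The markup and the helper name `extract_titles` are illustrative assumptions modeled on the structure described above, not copied from Douban, whose real page contains many more tags.

```python
from typing import List

from bs4 import BeautifulSoup

# Hypothetical, simplified markup: one <li class="subject-item"> per book,
# with the title (and an optional subtitle <span>) inside an <h2>.
SAMPLE_HTML = """
<ul>
  <li class="subject-item">
    <div class="info">
      <h2><a href="#">
        The Three-Body Problem
      </a></h2>
    </div>
  </li>
  <li class="subject-item">
    <div class="info">
      <h2><a href="#">Clean Code
        <span> : A Handbook of Agile Software Craftsmanship</span>
      </a></h2>
    </div>
  </li>
</ul>
"""


def extract_titles(html: str) -> List[str]:
    """Return the whitespace-normalized <h2> text of every subject-item <li>."""
    soup = BeautifulSoup(html, "html.parser")
    titles = []
    # Step 1: every <li class="subject-item">; step 2: the <h2> inside it.
    for li in soup.find_all("li", class_="subject-item"):
        h2 = li.find("h2")
        if h2 is None:
            continue  # skip items without a title element
        # Collapse newlines/indentation into single spaces.
        titles.append(" ".join(h2.get_text().split()))
    return titles


print(extract_titles(SAMPLE_HTML))
```

Collapsing whitespace with `split()` and `" ".join(...)` removes the newlines and indentation around the title while keeping the spaces inside English titles, which deleting every space character would merge together.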

Saving the data

With the xlwt library, a simple loop writes each title into its own row, and the workbook is then saved as an .xls file.
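A minimal sketch of that loop, assuming xlwt is installed. The helper `save_titles` and the temporary output path are hypothetical; the sheet name mirrors the one used in the script.

```python
import os
import tempfile
from typing import List

import xlwt


def save_titles(titles: List[str], path: str) -> None:
    """Write a header cell, then one title per row, into column 0 of an .xls file."""
    workbook = xlwt.Workbook(encoding="utf-8")
    sheet = workbook.add_sheet("to read book list")
    sheet.write(0, 0, "Title")  # row 0 holds the header
    # enumerate(..., start=1) puts the first title on row 1, below the header
    for row, title in enumerate(titles, start=1):
        sheet.write(row, 0, title)
    workbook.save(path)


out = os.path.join(tempfile.gettempdir(), "to_read_demo.xls")
save_titles(["The Three-Body Problem", "Clean Code"], out)
print(os.path.exists(out))  # True
```

The .xls format holds at most 65,536 rows per sheet, which is far more than a typical want-to-read list needs.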

Source code

```python
# Python 3.7, 64-bit
# 2021.08.20
# Analysis: no login or cookies are needed; the HTML can be fetched directly

import time

import xlwt
from bs4 import BeautifulSoup
from requests import get

HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                  "(KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36"
}


def get_html_text(page_url):
    """Fetch one list page and return the book titles on it ([] on failure)."""
    try:
        r = get(page_url, headers=HEADERS, timeout=10)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
    except Exception as exc:  # network error or non-2xx status
        print("fail:", page_url, exc)
        return []  # an empty list keeps `TotalBook += ...` working

    soup = BeautifulSoup(r.text, "html.parser")
    books_list = []
    # Each book is an <li class="subject-item">; its title sits in the <h2>
    for book_info in soup.find_all("li", class_="subject-item"):
        h2 = book_info.find("h2")
        if h2 is not None:
            # Collapse whitespace/newlines without deleting spaces inside titles
            books_list.append(" ".join(h2.get_text().split()))
    return books_list


def save2xls(total_book):
    """Write all titles into column 0 of 'to read book.xls', below a header."""
    workbook = xlwt.Workbook()
    worksheet = workbook.add_sheet('to read book list')
    worksheet.write(0, 0, 'Title')  # header cell
    for j, title in enumerate(total_book, start=1):
        worksheet.write(j, 0, title)
    workbook.save('to read book.xls')


if __name__ == "__main__":
    TotalBook = []
    # Replace YOUR_DOUBAN_ID with your own Douban user ID.
    # 15 books per page; range(0, 840, 15) covers up to 840 books (56 pages).
    BookListUrl = [
        "https://book.douban.com/people/YOUR_DOUBAN_ID/wish?start=%d"
        "&sort=time&rating=all&filter=all&mode=grid" % num
        for num in range(0, 840, 15)
    ]
    for url in BookListUrl:
        TotalBook += get_html_text(url)
        time.sleep(1.5)  # pause between requests to avoid hammering the server
    save2xls(TotalBook)
```
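The script hard-codes `range(0, 840, 15)`, i.e. 56 pages of 15 books, so it fits a list of at most 840 entries. A hypothetical helper that derives the page offsets from the total count instead might look like the sketch below; the name `build_page_urls` is illustrative, 15 books per page is taken from the script's step, and the total still has to be read off one's own list page.

```python
from typing import List

# Same query string as the script; {user} and {start} are filled in per page.
BASE = ("https://book.douban.com/people/{user}/wish"
        "?start={start}&sort=time&rating=all&filter=all&mode=grid")


def build_page_urls(user_id: str, total_books: int, per_page: int = 15) -> List[str]:
    """Return one URL per page.

    range() stops before total_books, so a partially filled last page is
    still included (e.g. 841 books -> offsets 0, 15, ..., 840 -> 57 pages).
    """
    return [BASE.format(user=user_id, start=start)
            for start in range(0, total_books, per_page)]


urls = build_page_urls("YOUR_DOUBAN_ID", 840)
print(len(urls))  # 56
```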