    __all__ = [
        'ErnieGramModel',
        'ErnieGramForSequenceClassification',
        'ErnieGramForTokenClassification',
        'ErnieGramForQuestionAnswering',
    ]

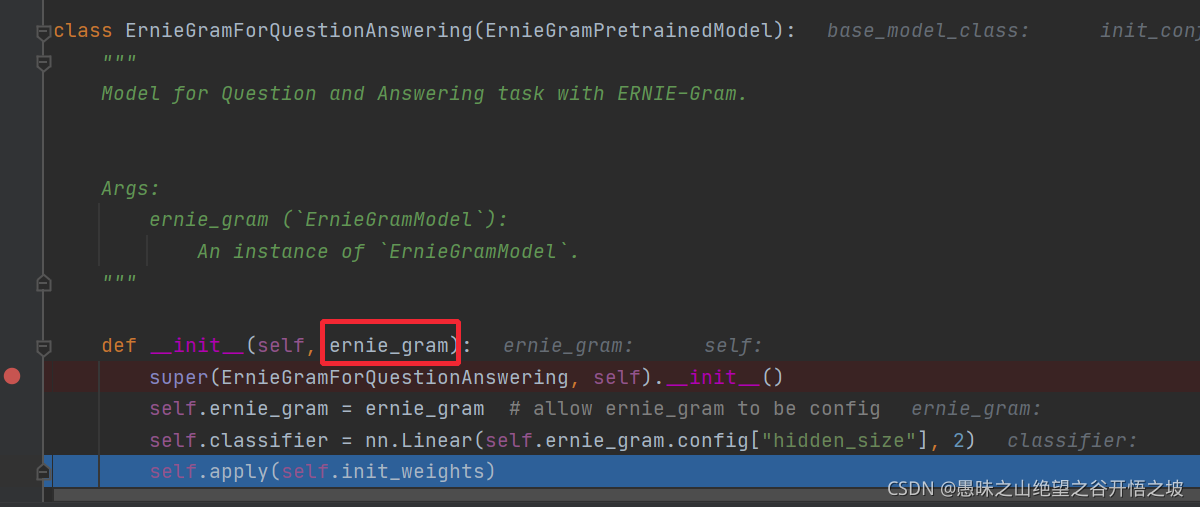

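These are fairly generic definitions: one is the pretrained model itself, and the others add a downstream-task head on top of it. The downstream tasks come down to sentence-level classification and token-level classification. The many application names derived from them are still classification at heart; they differ only in the level at which the classification happens and in the number of classes.

Question answering (reading comprehension) is the token-level case: the head produces two scores per token, one for being the answer start and one for being the answer end.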

    class ErnieGramEmbeddings(nn.Layer):

Defines the unified input embedding layer.

    class ErnieGramPretrainedModel(PretrainedModel):
        r"""
        An abstract class for pretrained ERNIE-Gram models. It provides ERNIE-Gram related
        `model_config_file`, `resource_files_names`, `pretrained_resource_files_map`,
        `pretrained_init_configuration`, `base_model_prefix` for downloading and
        loading pretrained models.
        Refer to :class:`~paddlenlp.transformers.model_utils.PretrainedModel` for more details.
        """

Defines the configuration of the pretrained models, as well as parameter initialization.

    sequence_output = encoder_outputs
    pooled_output = self.pooler(sequence_output)
    return sequence_output, pooled_output

    class ErniePooler(nn.Layer):
        def __init__(self, hidden_size):
            super(ErniePooler, self).__init__()
            self.dense = nn.Linear(hidden_size, hidden_size)
            self.activation = nn.Tanh()

        def forward(self, hidden_states):
            # We "pool" the model by simply taking the hidden state corresponding
            # to the first token.
            first_token_tensor = hidden_states[:, 0]
            pooled_output = self.dense(first_token_tensor)
            pooled_output = self.activation(pooled_output)
            return pooled_output

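The model returns just two outputs: the per-token sequence output and the pooled output. The pooler takes the hidden state of the first token and passes it through a fully connected layer that keeps the hidden size, followed by a tanh activation.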
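The pooling step can be sketched in plain Python for a single sequence. This is only an illustration of the arithmetic, not the Paddle implementation: `pool_first_token` is a hypothetical helper, the weight layout `[in][out]` is an assumption, and the tiny identity weight is chosen so the result is easy to check by hand.

```python
import math


def pool_first_token(hidden_states, weight, bias):
    """Pool one sequence: first-token hidden state -> dense -> tanh.

    hidden_states: [seq_len][hidden], weight: [hidden][hidden], bias: [hidden].
    """
    first = hidden_states[0]  # hidden state of the first token
    hidden = len(first)
    # Dense layer that keeps the hidden size.
    projected = [
        sum(first[i] * weight[i][j] for i in range(hidden)) + bias[j]
        for j in range(hidden)
    ]
    return [math.tanh(v) for v in projected]


# Toy input: 3 tokens, hidden size 2 (made-up numbers).
hidden_states = [[0.5, -1.0], [2.0, 3.0], [4.0, 5.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
pooled = pool_first_token(hidden_states, identity, [0.0, 0.0])
```

Only the first row of `hidden_states` influences `pooled`; the remaining tokens are left to the sequence output.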

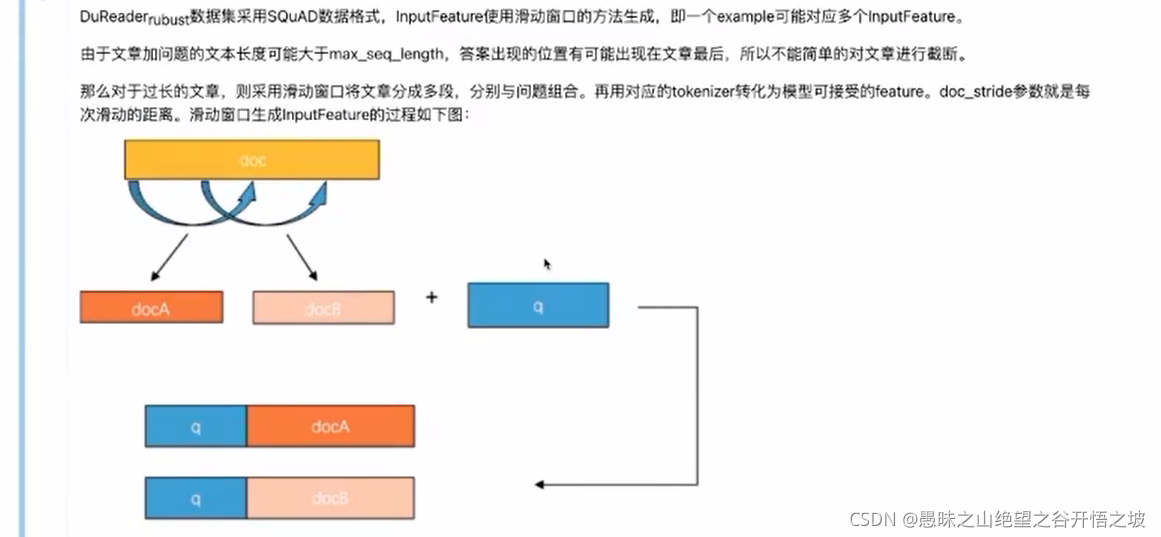

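Work at this level is mostly a matter of data pre-processing and post-processing tricks. The model is only ever given a standard input and produces a standard output; post-processing then maps that standard output back according to whatever the pre-processing did.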
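As a rough sketch of the post-processing direction, a predicted token span can be mapped back to answer text through the token offsets. `extract_answer` is a hypothetical helper, and the toy offsets below are written by hand (special and question tokens are simply given `(0, 0)`), so they are an assumption rather than real tokenizer output.

```python
def extract_answer(context, offset_mapping, start_token, end_token):
    """Map a predicted token span back to a substring of the original context."""
    start_char = offset_mapping[start_token][0]
    end_char = offset_mapping[end_token][1]
    return context[start_char:end_char]


context = "Paris is the capital of France"
# Hand-written offsets for: [CLS] question [SEP] Paris is the capital of France [SEP]
offset_mapping = [
    (0, 0), (0, 0), (0, 0),        # [CLS], one question token, [SEP]
    (0, 5), (6, 8), (9, 12),       # Paris, is, the
    (13, 20), (21, 23), (24, 30),  # capital, of, France
    (0, 0),                        # final [SEP]
]

answer = extract_answer(context, offset_mapping, start_token=5, end_token=6)
```

The model never sees characters, only token indices; the offsets are what make the round trip back to text possible.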

    # Detect if the answer is out of the span (in which case this feature is labeled with the CLS index).
    if not (offsets[token_start_index][0] <= start_char and
            offsets[token_end_index][1] >= end_char):
        tokenized_examples[i]["start_positions"] = cls_index
        tokenized_examples[i]["end_positions"] = cls_index
    else:
        # Otherwise move the token_start_index and token_end_index to the two ends of the answer.
        # Note: we could go after the last offset if the answer is the last word (edge case).
        while token_start_index < len(offsets) and offsets[
                token_start_index][0] <= start_char:
            token_start_index += 1
        tokenized_examples[i]["start_positions"] = token_start_index - 1
        while offsets[token_end_index][1] >= end_char:
            token_end_index -= 1
        tokenized_examples[i]["end_positions"] = token_end_index + 1

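Note that `not (A and B)` above is equivalent to `not A or not B`. The check catches every feature whose span does not fully contain the answer and labels both positions with the index of the special [CLS] token. [CLS] is never part of a real answer, so it works as a "no answer in this span" label. These features are not dropped from the loss, though: the cross-entropy shown further down sets no ignore index, so the [CLS] position is trained like any other class.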
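The equivalence is easy to confirm by brute force. The two function names below are hypothetical; they only restate the condition from the excerpt in both forms.

```python
def answer_outside_span(span_start, span_end, start_char, end_char):
    """Condition as written in the excerpt: not (A and B)."""
    return not (span_start <= start_char and span_end >= end_char)


def answer_outside_span_expanded(span_start, span_end, start_char, end_char):
    """Same condition after De Morgan: (not A) or (not B)."""
    return span_start > start_char or span_end < end_char


# Compare both forms over a small grid of character positions.
grid = range(0, 6)
mismatches = [
    (a, b, c, d)
    for a in grid for b in grid for c in grid for d in grid
    if answer_outside_span(a, b, c, d) != answer_outside_span_expanded(a, b, c, d)
]
```

Reading the expanded form, a feature is labeled with the CLS index as soon as the answer starts before the span or ends after it.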

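What remains is the normal case: mapping the answer's character positions in the original example onto token positions in the truncated feature.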
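A minimal sketch of that mapping for a single feature follows. `label_feature` is a hypothetical function, not the PaddleNLP code: it takes the context span boundaries as arguments instead of deriving them from `token_type_ids`, and it bounds both scans by the context span, which is my own simplification. The offsets are hand-written toy values.

```python
def label_feature(offsets, context_start, context_end, start_char, end_char,
                  cls_index=0):
    """Return (start_position, end_position) token labels for one feature.

    offsets: (char_start, char_end) per token, relative to the context.
    context_start / context_end: first and last context token (inclusive).
    """
    covers_answer = (offsets[context_start][0] <= start_char
                     and offsets[context_end][1] >= end_char)
    if not covers_answer:
        return cls_index, cls_index

    # Skip every token starting at or before the answer start, then step back.
    start = context_start
    while start <= context_end and offsets[start][0] <= start_char:
        start += 1
    # Skip every token ending at or after the answer end, then step forward.
    end = context_end
    while end >= context_start and offsets[end][1] >= end_char:
        end -= 1
    return start - 1, end + 1


# Toy feature: [CLS] q [SEP] Paris is the capital of France [SEP]
offsets = [(0, 0), (0, 0), (0, 0), (0, 5), (6, 8), (9, 12),
           (13, 20), (21, 23), (24, 30), (0, 0)]

# Answer "the capital" occupies characters 9..20 of the context.
positions = label_feature(offsets, 3, 8, start_char=9, end_char=20)
```

With a narrower window, for example context tokens 3 to 5 only, the same answer is no longer covered and the function falls back to the CLS index.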

    encoder_layer = nn.TransformerEncoderLayer(
        hidden_size,
        num_attention_heads,
        intermediate_size,
        dropout=hidden_dropout_prob,
        activation=hidden_act,
        attn_dropout=attention_probs_dropout_prob,
        act_dropout=0)
    self.encoder = nn.TransformerEncoder(encoder_layer, num_hidden_layers)

This defines what a single layer takes as input and produces as output, and then how many times that layer is stacked.
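The "define one layer, then repeat it" pattern can be illustrated without any framework. `ToyLayer` and `ToyEncoder` are hypothetical stand-ins, and the sketch assumes each stacked layer is an independent copy with its own parameters, which is how I understand `nn.TransformerEncoder` to treat the layer it is given.

```python
import copy


class ToyLayer:
    """Stand-in for one encoder layer: output has the same shape as the input."""

    def __init__(self, scale):
        self.scale = scale  # plays the role of the layer's parameters

    def __call__(self, hidden):
        return [h * self.scale for h in hidden]


class ToyEncoder:
    """Stack num_layers copies of a layer and apply them in sequence."""

    def __init__(self, layer, num_layers):
        # Assumption: one independent copy per layer, so nothing is shared.
        self.layers = [copy.deepcopy(layer) for _ in range(num_layers)]

    def __call__(self, hidden):
        for layer in self.layers:
            hidden = layer(hidden)
        return hidden


encoder = ToyEncoder(ToyLayer(scale=2.0), num_layers=3)
output = encoder([1.0, -0.5])
```

Because every layer maps a hidden vector to a hidden vector of the same size, any number of them can be chained.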

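The downstream classes inherit from the pretrained base class, and the standard model is passed in as a constructor argument.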

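    class ErnieGramTokenizer(ErnieTokenizer):

Many models apply their tricks only during pretraining, so that the vector representations and the model quality improve; in the fine-tuning stage they follow much the same procedure as the standard pipeline.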

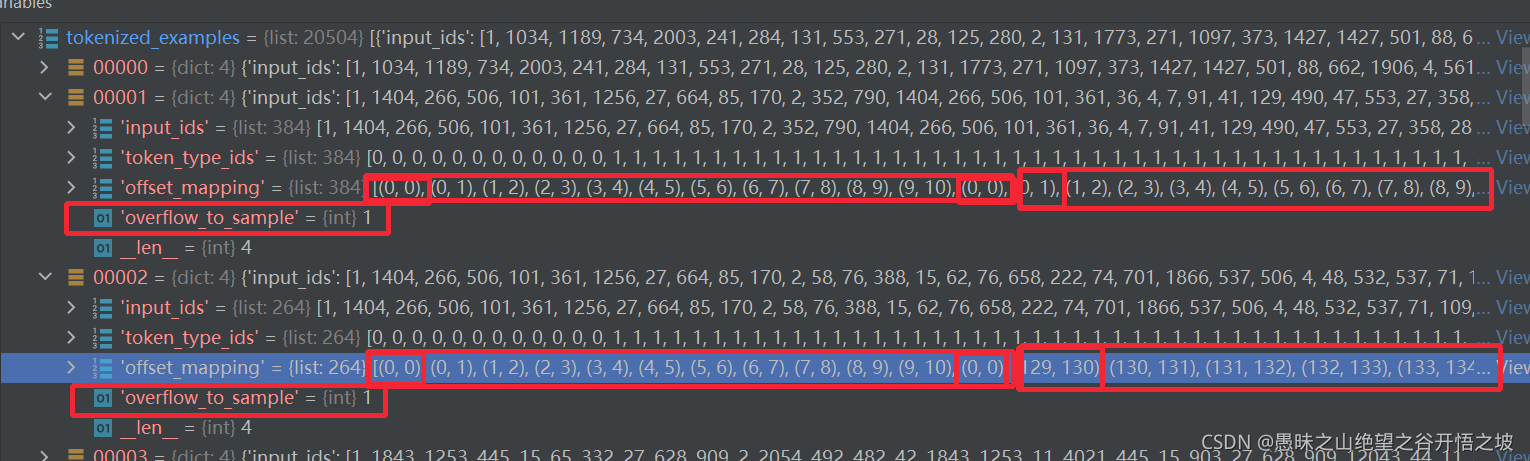

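The input ids encode a sentence pair, with the question first and the context after it. A special [CLS] token opens the sequence, and a [SEP] token sits between the two segments and at the end. These features come from the same example, so they contain overlapping characters, which is visible in their indices into the original text.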

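As the results above show, each example in the dataset has been converted into features the model can consume, including input_ids, token_type_ids, and the answer start and end positions. Specifically:

input_ids: the token IDs of the input text.

token_type_ids: whether each token belongs to the question or to the context. (Transformer-style pretrained models accept both single-sentence and sentence-pair input.)

overflow_to_sample: the index of the example this feature came from. One example can produce several features, so this link has to be recorded.

offset_mapping: for each token, the start and end character index in the original text (used to generate the answer text). Features are cut with a sliding window, so consecutive features overlap and the same offsets can appear in more than one feature.

start_positions: the start position of the answer within this feature.

end_positions: the end position of the answer within this feature.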
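How one example turns into several overlapping features can be sketched as below. Both functions are hypothetical, they work on already-split tokens, and `overlap` is taken to mean the number of tokens shared by consecutive windows; whether the tokenizer's `stride` argument has exactly this meaning is an assumption here.

```python
def sliding_windows(context_tokens, max_context_len, overlap):
    """Split one long context into overlapping windows of (start, tokens)."""
    if overlap >= max_context_len:
        raise ValueError("overlap must be smaller than max_context_len")
    step = max_context_len - overlap
    windows = []
    start = 0
    while True:
        windows.append((start, context_tokens[start:start + max_context_len]))
        if start + max_context_len >= len(context_tokens):
            break  # this window already reaches the end of the context
        start += step
    return windows


def build_features(examples, max_context_len, overlap):
    """Flatten all windows into features, remembering the source example."""
    features = []
    for sample_index, tokens in enumerate(examples):
        for start, window in sliding_windows(tokens, max_context_len, overlap):
            features.append({
                "tokens": window,
                "window_start": start,
                "overflow_to_sample": sample_index,
            })
    return features


# Two toy examples: a 7-token context and a 2-token context.
features = build_features([list("abcdefg"), list("xy")],
                          max_context_len=4, overlap=2)
```

The first example yields three windows and the second only one, so `overflow_to_sample` is the only way to tell afterwards which features belong together.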

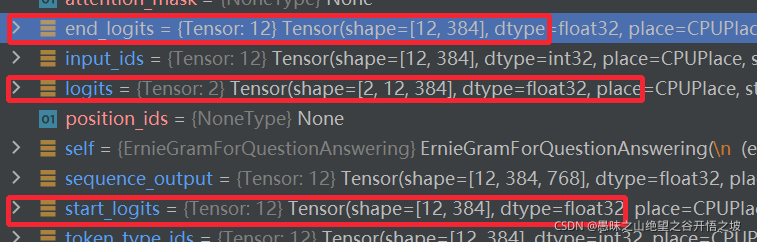

    logits = self.classifier(sequence_output)
    logits = paddle.transpose(logits, perm=[2, 0, 1])
    start_logits, end_logits = paddle.unstack(x=logits, axis=0)
    return start_logits, end_logits

The classifier's two-channel output is split into two separate outputs: the start logits and the end logits.
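The effect of the transpose followed by the unstack can be mimicked with nested lists. `split_start_end` is a hypothetical helper and the numbers are made up; it only shows how a `[batch][seq_len][2]` structure becomes two `[batch][seq_len]` structures.

```python
def split_start_end(logits):
    """logits: [batch][seq_len][2] -> (start_logits, end_logits)."""
    start_logits = [[token[0] for token in sequence] for sequence in logits]
    end_logits = [[token[1] for token in sequence] for sequence in logits]
    return start_logits, end_logits


# One sequence of three tokens; each token carries (start score, end score).
logits = [[[0.1, 0.9], [2.0, -1.0], [0.3, 0.4]]]
start_logits, end_logits = split_start_end(logits)
```

After the split, each row holds one score per token position, which is the shape a softmax over positions expects.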

    def forward(self, y, label):
        start_logits, end_logits = y
        start_position, end_position = label
        start_position = paddle.unsqueeze(start_position, axis=-1)
        end_position = paddle.unsqueeze(end_position, axis=-1)
        start_loss = paddle.nn.functional.cross_entropy(
            input=start_logits, label=start_position)
        end_loss = paddle.nn.functional.cross_entropy(
            input=end_logits, label=end_position)
        loss = (start_loss + end_loss) / 2
        return loss

This is just two cross-entropy losses, one for the start position and one for the end position, averaged.
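For a single example the same computation can be written out by hand. This is a sketch of the arithmetic only, with hypothetical function names; it assumes the softmax runs over token positions and it ignores batching and any reduction across a batch.

```python
import math


def cross_entropy(logits, label):
    """Softmax cross-entropy over positions for one sequence."""
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(
        sum(math.exp(v - max_logit) for v in logits))
    return log_sum_exp - logits[label]


def qa_loss(start_logits, end_logits, start_position, end_position):
    """Average of the start-position and end-position cross-entropies."""
    start_loss = cross_entropy(start_logits, start_position)
    end_loss = cross_entropy(end_logits, end_position)
    return (start_loss + end_loss) / 2


# Uninformative logits over 4 positions give log(4) for each term.
uniform_loss = qa_loss([0.0] * 4, [0.0] * 4, start_position=1, end_position=2)
# Logits that favor the labeled positions give a smaller loss.
confident_loss = qa_loss([0.0, 5.0, 0.0, 0.0], [0.0, 0.0, 5.0, 0.0], 1, 2)
```

Each position in the sequence is one class, so the number of classes equals the sequence length rather than a fixed label set.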

    tokenized_examples = tokenizer(
        questions,
        contexts,
        stride=args.doc_stride,
        max_seq_len=args.max_seq_length)

    # Let's label those examples!
    for i, tokenized_example in enumerate(tokenized_examples):
        # We will label impossible answers with the index of the CLS token.
        input_ids = tokenized_example["input_ids"]
        cls_index = input_ids.index(tokenizer.cls_token_id)

        # The offset mappings will give us a map from token to character position in the original context. This will
        # help us compute the start_positions and end_positions.
        offsets = tokenized_example['offset_mapping']

        # Grab the sequence corresponding to that example (to know what is the context and what is the question).
        sequence_ids = tokenized_example['token_type_ids']

        # One example can give several spans, this is the index of the example containing this span of text.
        sample_index = tokenized_example['overflow_to_sample']
        answers = examples[sample_index]['answers']
        answer_starts = examples[sample_index]['answer_starts']

        # Start/end character index of the answer in the text.
        start_char = answer_starts[0]
        end_char = start_char + len(answers[0])

        # Start token index of the current span in the text.
        token_start_index = 0
        while sequence_ids[token_start_index] != 1:
            token_start_index += 1

        # End token index of the current span in the text.
        token_end_index = len(input_ids) - 1
        while sequence_ids[token_end_index] != 1:
            token_end_index -= 1
        # Minus one more to reach actual text
        token_end_index -= 1

        # Detect if the answer is out of the span (in which case this feature is labeled with the CLS index).
        if not (offsets[token_start_index][0] <= start_char and
                offsets[token_end_index][1] >= end_char):
            tokenized_examples[i]["start_positions"] = cls_index
            tokenized_examples[i]["end_positions"] = cls_index
        else:
            # Otherwise move the token_start_index and token_end_index to the two ends of the answer.
            # Note: we could go after the last offset if the answer is the last word (edge case).
            while token_start_index < len(offsets) and offsets[
                    token_start_index][0] <= start_char:
                token_start_index += 1
            tokenized_examples[i]["start_positions"] = token_start_index - 1
            while offsets[token_end_index][1] >= end_char:
                token_end_index -= 1
            tokenized_examples[i]["end_positions"] = token_end_index + 1

    return tokenized_examples

This maps the positions in the original example onto the corresponding positions in each feature.