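This blog only records my personal learning progress. My knowledge is limited, so if you spot a mistake, please point it out in the comments. Everyone is welcome to join the discussion. (Some material comes from the internet; if anything infringes your rights, it will be removed immediately.)

All articles on this blog are for study purposes only and involve no commercial interest. Anything that is inappropriately quoted will be removed.

If this content is used for illegal purposes, I bear no responsibility.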

Code

from bs4 import BeautifulSoup
import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36',
    'Cookie': 'FSSBBIl1UgzbN7N80S=pBl4cwL0BeYX7BPlMyu8rsdbp9lbioYMJaCy1tcn0qYZmGlqCZ41NqycE3AgE8WF; FSSBBIl1UgzbN7N80T=4nVkJRj8lDbIG2U8bykrp6XGWOzeYxavtdqV1340ExDzvNRE3c_j5vi.4dRnL0zOyWGRxZTriiaH69dIW7Dj6XvhptebZ2pmlNI6IZbBxDSRxjusk.MGvxkEEsHcvQt1lDovyurQAdHeF62SyyFz7_BCl73XGMLMFT7eZrXPZ0t4CKONrOscekARY4.Qg.lM7gHMqAA8uHLO0y675xXTqUsfpHObImX3D1vZwKlvkRip6wUu9TOnVXfWnHTw8I3CrC2AoXe_BwPPt6pxtbG2L0XIuv7T9aT.IUoGriJYlscW5rCRoWCyqVFNwojdxN8ogLNl; JSESSIONID=E8C04D8C5697FC67AF480BB7E5FDCE70',
}

def get_the_place():
    # Show the menu and read the user's choice.
    print("=" * 100)
    print("1. View college websites")
    print("2. View administrative department websites")
    print("3. View service unit websites")
    print("4. View research platform websites")
    print("=" * 100)
    choice = input("Enter a command: ")

    # Map the menu choice to the page name under /zzjg/.
    # (Renamed from `id` and `str`, which shadowed Python built-ins.)
    if choice == '1':
        page = 'xysz'
    elif choice == '2':
        page = 'jgbc'
    elif choice == '3':
        page = 'ywdw'
    elif choice == '4':
        page = 'kypt'
    else:
        return 0

    # For example: https://www.scu.edu.cn/zzjg/xysz.htm
    url = 'https://www.scu.edu.cn/zzjg/{}.htm'.format(page)
    rs = requests.session()
    r = rs.get(url, headers=headers)
    soup = BeautifulSoup(r.content, 'lxml')

    if choice != '4':
        # Choices 1-3: read one <ul> and print every link text and href per <li>.
        link_list = soup.find('ul', {'class': 'settingList clearfix jgbcbox'})
        for li in link_list.find_all('li'):
            data = []
            for a in li.find_all('a'):
                data.append(a.text)
                data.append(a['href'])
            print(data)
    else:
        # Choice 4: read the lists inside the div styled "display: block;" ...
        block = soup.find('div', {'style': 'display: block;'})
        first_list = block.find('ul', {'class': 'settingList clearfix jgbcbox'})
        for li in first_list.find_all('li'):
            a = li.find('a')
            print([a.text, a['href']])
        for ul in block.find_all('ul', {'class': 'settingList clearfix'}):
            for li in ul.find_all('li'):
                a = li.find('a')
                print([a.text, a['href']])

        # ... and then the lists inside the first div styled "display: none;".
        hidden = soup.find('div', {'style': 'display: none;'})
        for ul in hidden.find_all('ul', {'class': 'settingList clearfix'}):
            for li in ul.find_all('li'):
                a = li.find('a')
                print([a.text, a['href']])


# Keep showing the menu after each query; press Ctrl+C to quit.
while True:
    get_the_place()
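
The chain of `if`/`elif` branches that turns the menu choice into a page name could also be written as a dictionary lookup. The following is only a minimal sketch, not part of the original script: `BASE_URL`, `PAGES` and `build_url` are hypothetical names introduced here for illustration, while the page names and the URL pattern are copied from the code above.

```python
# Hypothetical names: BASE_URL, PAGES and build_url are not in the original script.
BASE_URL = 'https://www.scu.edu.cn/zzjg/{}.htm'

# Menu choice -> page name, copied from the if/elif chain in the script.
PAGES = {
    '1': 'xysz',
    '2': 'jgbc',
    '3': 'ywdw',
    '4': 'kypt',
}


def build_url(choice):
    """Return the page URL for a menu choice, or None if the choice is unknown."""
    page = PAGES.get(choice)
    if page is None:
        return None
    return BASE_URL.format(page)


if __name__ == '__main__':
    print(build_url('1'))
    print(build_url('9'))
```

With this layout, adding a fifth menu entry would only need one more line in `PAGES`, and the caller can treat a `None` result the same way the script treats an invalid command.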

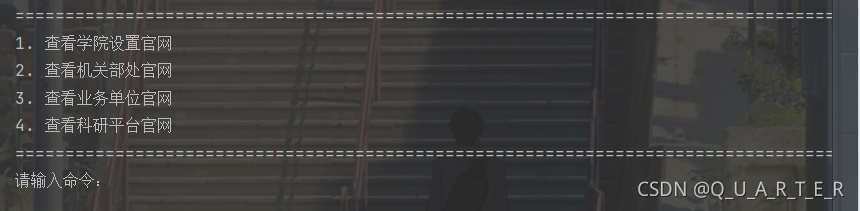

Screenshot of the result
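
The script prints each `href` exactly as it appears in the page. If some of those values turn out to be relative paths (an assumption; the post does not say), they could be resolved against the page URL with `urllib.parse.urljoin` from the standard library. A minimal sketch, where `absolutize` and the sample rows are hypothetical and each row is assumed to be a two-item `[name, href]` list:

```python
from urllib.parse import urljoin


def absolutize(page_url, rows):
    """Resolve the href in each [name, href] row against page_url."""
    return [[name, urljoin(page_url, href)] for name, href in rows]


if __name__ == '__main__':
    # Made-up sample rows for illustration, not real output from the site.
    sample = [
        ['Example A', '../info/1001.htm'],
        ['Example B', 'https://example.com/'],
    ]
    for row in absolutize('https://www.scu.edu.cn/zzjg/xysz.htm', sample):
        print(row)
```

Rows that already hold an absolute URL pass through unchanged, so the helper can be applied to every row without checking first.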