Notes on 刘二大人's "PyTorch Deep Learning Practice" (《PyTorch深度学习实践》)

Linear Model

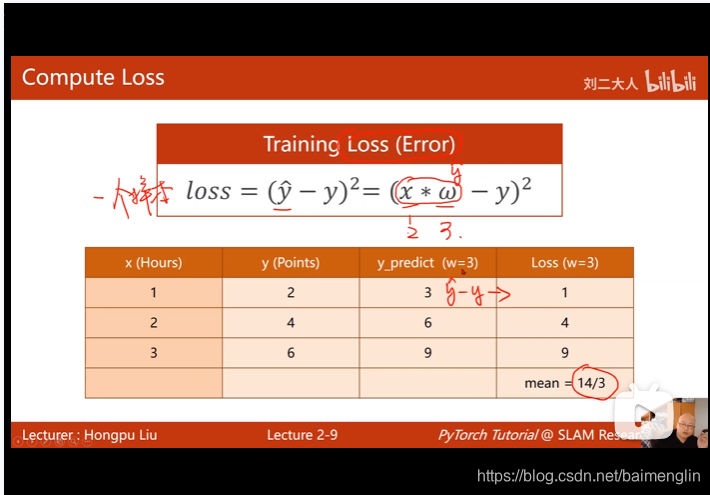

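The loss function is defined for a single sample.

Over the whole training set, the per-sample losses are averaged into the mean squared error (MSE).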
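
As a hedged restatement in symbols, assuming the model $\hat{y} = x \cdot w$ used in the code of these notes and a training set of $N$ samples (the index $n$ and the names "loss" and "cost" are notational assumptions here, chosen to match the function names used later):

$$\text{loss}_n = (\hat{y}_n - y_n)^2 = (x_n \cdot w - y_n)^2$$

$$\text{cost} = \text{MSE} = \frac{1}{N} \sum_{n=1}^{N} (\hat{y}_n - y_n)^2$$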

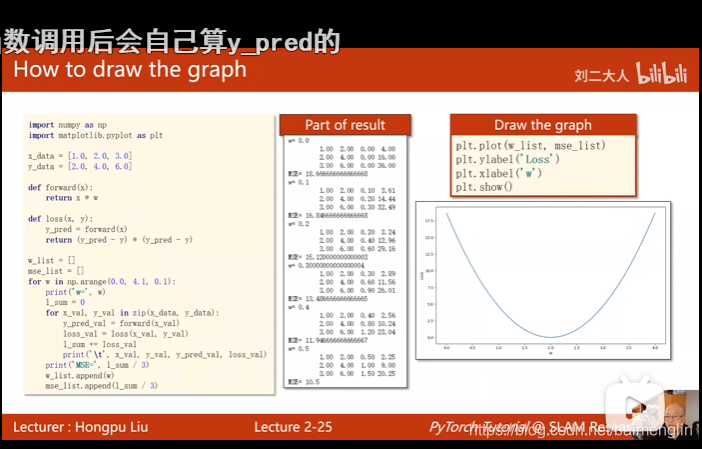

Plotting the loss curve by exhaustive search, using numpy and matplotlib:

    import numpy as np
    import matplotlib.pyplot as plt

    # Dataset
    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    # Model: y_pred = x * w (w is the global variable set by the loop below)
    def forward(x):
        return x * w

    # Loss for a single sample
    def loss(x, y):
        y_pred = forward(x)
        return (y_pred - y) * (y_pred - y)

    # Lists holding each weight and the MSE it produces
    w_list = []
    mse_list = []

    # w ranges from 0.0 to 4.0 in steps of 0.1
    for w in np.arange(0.0, 4.1, 0.1):
        print("w=", w)
        l_sum = 0
        # Pair up the samples from the dataset as (x_val, y_val)
        for x_val, y_val in zip(x_data, y_data):
            y_pred_val = forward(x_val)
            loss_val = loss(x_val, y_val)
            l_sum += loss_val  # sum first
            print('\t', x_val, y_val, y_pred_val, loss_val)
        print("MSE=", l_sum / 3)  # then average over the 3 samples
        w_list.append(w)
        mse_list.append(l_sum / 3)

    plt.plot(w_list, mse_list)
    plt.ylabel('Loss')
    plt.xlabel('w')
    plt.show()

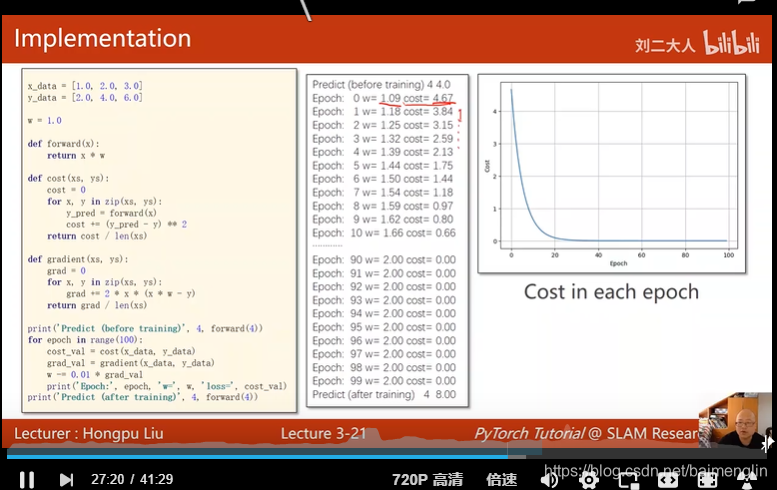
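
The same sweep can be written without explicit loops. The following is a minimal sketch, assuming NumPy broadcasting is acceptable here; the names `ws`, `mse`, and `best` are illustrative and do not come from the course code. It also reads the minimizing weight straight off the grid instead of from the plot.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])
y = np.array([2.0, 4.0, 6.0])

# Candidate weights from 0.0 to 4.0 in steps of 0.1
ws = np.arange(0.0, 4.1, 0.1)

# ws[:, None] * x has shape (len(ws), 3): one row of predictions per candidate w.
# Averaging the squared errors along axis 1 gives one MSE per candidate.
mse = ((ws[:, None] * x - y) ** 2).mean(axis=1)

# The grid point with the smallest MSE
best = float(ws[np.argmin(mse)])
print("best w:", best, "MSE:", float(mse.min()))
```

Exhaustive search like this only scales to one or two parameters, which is why the notes move on to gradient descent next.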

Gradient Descent

    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    w = 1.0  # initial guess for the weight

    def forward(x):
        return x * w

    # Mean squared error over the whole dataset
    def cost(xs, ys):
        total = 0
        for x, y in zip(xs, ys):
            y_pred = forward(x)
            total += (y_pred - y) ** 2
        return total / len(xs)

    # Gradient of the cost with respect to w, averaged over the dataset
    def gradient(xs, ys):
        grad = 0
        for x, y in zip(xs, ys):
            grad += 2 * x * (x * w - y)  # derivative of (x * w - y) ** 2 with respect to w
        return grad / len(xs)

    print('predict (before training)', 4, forward(4))

    for epoch in range(100):
        cost_val = cost(x_data, y_data)
        grad_val = gradient(x_data, y_data)
        w -= 0.01 * grad_val  # learning rate 0.01
        print('epoch:', epoch, 'w=', w, 'loss=', cost_val)

    print('predict (after training)', 4, forward(4))
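
The gradient formula used above follows from the chain rule: the derivative of `(x * w - y) ** 2` with respect to `w` is `2 * x * (x * w - y)`. One way to gain confidence in a hand-derived gradient is to compare it with a finite-difference estimate. The sketch below does that; the helper `numerical_gradient` and its `eps` parameter are illustrative assumptions, and `w` is passed explicitly rather than read from a global so that the helper can perturb it.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

def cost(w, xs, ys):
    # Mean squared error of the model y_pred = x * w
    return sum((x * w - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient(w, xs, ys):
    # Analytic gradient: mean of 2 * x * (x * w - y)
    return sum(2 * x * (x * w - y) for x, y in zip(xs, ys)) / len(xs)

def numerical_gradient(w, xs, ys, eps=1e-6):
    # Central difference: (cost(w + eps) - cost(w - eps)) / (2 * eps)
    return (cost(w + eps, xs, ys) - cost(w - eps, xs, ys)) / (2 * eps)

w = 1.0
analytic = gradient(w, x_data, y_data)
numeric = numerical_gradient(w, x_data, y_data)
print('analytic:', analytic, 'numeric:', numeric)
```

At `w = 1.0` the analytic value is `-28 / 3`, and the two estimates should agree to several decimal places.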

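Stochastic gradient descent: each update uses the loss of a single sample (previously, the average loss over all samples). There are two reasons: first, this injects noise into the updates; second, computing the gradient over the whole set is expensive when the dataset is large.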

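    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    w = 1.0  # initial guess for the weight

    def forward(x):
        return x * w

    # Loss for a single sample
    def loss(x, y):
        y_pred = forward(x)
        return (y_pred - y) ** 2

    # Gradient of the single-sample loss with respect to w
    def gradient(x, y):
        return 2 * x * (x * w - y)

    print('predict (before training)', 4, forward(4))

    for epoch in range(100):
        for x, y in zip(x_data, y_data):
            grad = gradient(x, y)
            # Update immediately after every sample. Each step depends on the previous one,
            # so the samples cannot be processed in parallel; mini-batches are the compromise.
            w -= 0.01 * grad
            print('\tgrad:', x, y, grad)
            l = loss(x, y)  # loss under the updated w
        print("progress:", epoch, "w=", w, "loss", l)

    print('predict (after training)', 4, forward(4))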
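
The comment about batches points at the usual compromise between the two methods: mini-batch gradient descent averages the gradient over a small group of samples per update. The following is a minimal sketch under stated assumptions; the function `minibatch_gd`, the batch size of 2, the per-epoch shuffling, and the fixed seed are illustrative choices, not part of the course code.

```python
import numpy as np

x_data = np.array([1.0, 2.0, 3.0])
y_data = np.array([2.0, 4.0, 6.0])

def minibatch_gd(xs, ys, w=1.0, lr=0.01, batch_size=2, epochs=100, seed=0):
    """Fit y = x * w by mini-batch gradient descent (illustrative helper)."""
    rng = np.random.default_rng(seed)
    n = len(xs)
    for _ in range(epochs):
        order = rng.permutation(n)  # visit the samples in a new random order each epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            xb, yb = xs[idx], ys[idx]
            # Average the per-sample gradients within the batch
            grad = np.mean(2 * xb * (xb * w - yb))
            w -= lr * grad
    return float(w)

w = minibatch_gd(x_data, y_data)
print('w =', w, 'prediction for x=4:', 4 * w)
```

Within a batch the per-sample gradients are independent of each other, so they can be computed in parallel, while the updates still carry some of the noise of single-sample SGD.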

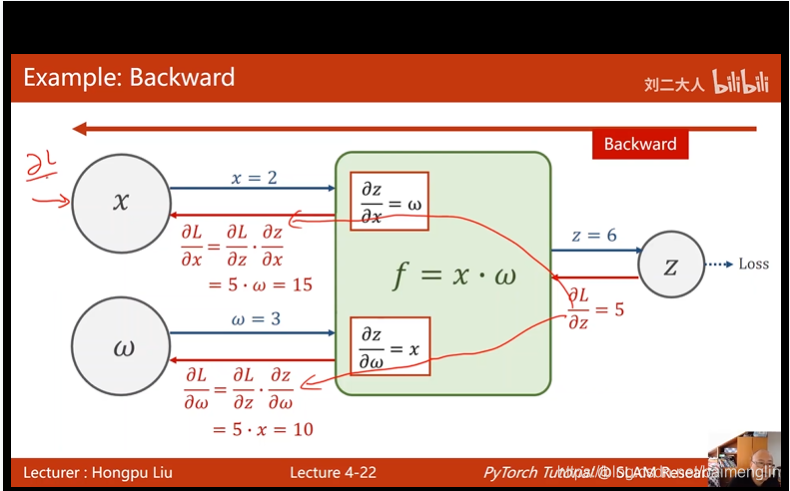

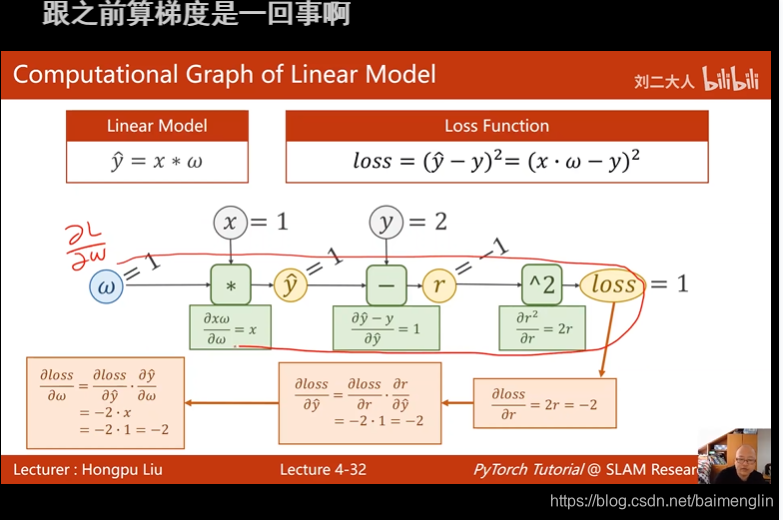

Backpropagation

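    import torch

    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    w = torch.Tensor([1.0])
    w.requires_grad = True  # gradients must be computed for w

    def forward(x):
        return x * w  # the result is a tensor

    def loss(x, y):
        y_pred = forward(x)
        return (y_pred - y) ** 2  # sketch the computational graph before writing the code

    # Training loop
    print('predict (before training)', 4, forward(4).item())

    for epoch in range(100):
        for x, y in zip(x_data, y_data):
            l = loss(x, y)  # forward pass: compute the loss
            l.backward()  # the graph is freed afterwards; the next loss computation builds a new one
            # Read the gradient; .item() yields a plain Python number,
            # whereas accumulating tensors would keep the graph growing.
            print('\tgrad:', x, y, w.grad.item())
            # Use .data so the update is purely numeric; operating on the
            # tensors themselves would build a computational graph.
            w.data -= 0.01 * w.grad.data
            w.grad.data.zero_()  # gradients accumulate unless they are cleared
        print("progress:", epoch, l.item())

    print('predict (after training)', 4, forward(4).item())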

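l.item() extracts the loss as a plain Python number, so a running total should be written as sum += l.item() rather than accumulating the tensor l itself.

w is a Tensor. A Tensor holds data and grad, and both of these are themselves Tensors. grad is initially None; after l.backward() is called, w.grad is a Tensor, which is why w.grad.data is used when updating w.data. If w requires gradients, then by default every tensor in the computational graph that depends on w requires gradients too.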
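
The behavior described above can be checked directly. The sketch below is illustrative only: it uses the single sample x=3, y=6 from the dataset, and the variable names `first` and `accumulated` are assumptions introduced for the demonstration.

```python
import torch

w = torch.tensor([1.0], requires_grad=True)
grad_before = w.grad            # None: nothing has been back-propagated yet

l = (3.0 * w - 6.0) ** 2        # loss for the sample x=3, y=6
depends_on_w = l.requires_grad  # True, because l is computed from w

l.backward()
first = w.grad.item()           # 2 * 3 * (3 * 1 - 6) = -18

# A second backward pass without zeroing adds to the stored gradient
l2 = (3.0 * w - 6.0) ** 2
l2.backward()
accumulated = w.grad.item()     # -18 + -18 = -36

w.grad.zero_()                  # reset before the next update
print(grad_before, depends_on_w, first, accumulated, w.grad.item())
```

The doubled value after the second `backward()` is the accumulation that `w.grad.data.zero_()` guards against in the training loop.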

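The training procedure in each epoch can be summarized as:

① Forward pass: compute y_hat (the prediction for the input)

② Compute the loss from y_hat and y_label (y_data)

③ Backward pass with backward() (compute the gradients)

④ Update the parameters using the gradients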
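
The four steps map onto code as in the sketch below. Note one deliberate substitution: instead of the `.data` attribute used in these notes, this sketch performs the update inside `torch.no_grad()`, which is another common way to keep the update out of the computational graph. Treat it as an alternative under that assumption, not as the course's own code.

```python
import torch

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
w = torch.tensor([1.0], requires_grad=True)

for epoch in range(100):
    for x, y in zip(x_data, y_data):
        y_hat = x * w              # step 1: forward pass
        loss = (y_hat - y) ** 2    # step 2: loss from y_hat and the label
        loss.backward()            # step 3: backward pass fills w.grad
        with torch.no_grad():      # step 4: update without recording a graph
            w -= 0.01 * w.grad
        w.grad.zero_()             # clear the gradient for the next sample

print('w =', w.item(), 'prediction for x=4:', (4 * w).item())
```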

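On the difference between torch.Tensor and torch.tensor: torch.Tensor is the tensor class, while torch.tensor is a function that builds a tensor from existing data.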
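
One practical difference shows up in the resulting dtype. The short check below is a sketch that assumes PyTorch's default settings (default dtype left at float32); the variable names are illustrative.

```python
import torch

a = torch.Tensor([1, 2, 3])    # class constructor: result uses the default dtype
b = torch.tensor([1, 2, 3])    # factory function: dtype inferred from the integer data
c = torch.tensor([1.0, 2.0])   # factory function: float data gives the default float dtype

print(a.dtype, b.dtype, c.dtype)
```

This matters for the training code in these notes because only floating-point tensors can require gradients, so integer data passed to `torch.tensor` would need an explicit float dtype first.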

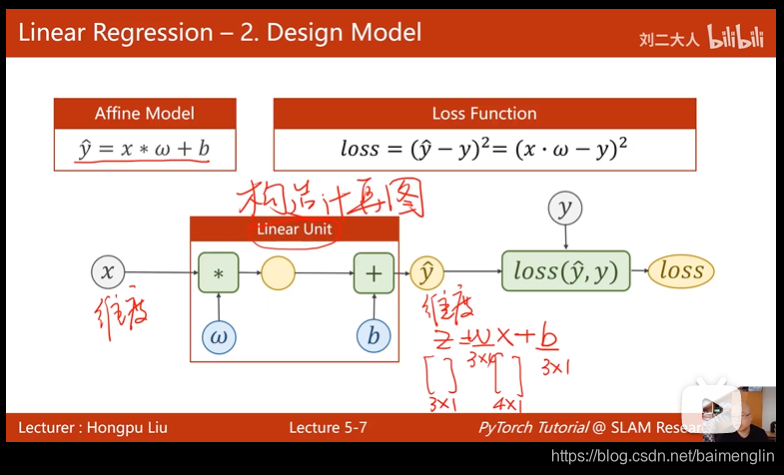

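Linear Regression with PyTorch

Four steps: prepare the dataset, design the model, construct the loss function and optimizer, and run the training cycle (forward, backward, update).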

    import torch

    # Prepare the dataset.
    # x and y are 3x1 matrices: 3 samples in total, each with 1 feature.
    x_data = torch.Tensor([[1.0], [2.0], [3.0]])
    y_data = torch.Tensor([[2.0], [4.0], [6.0]])

    # Design the model using a class.
    """
    Our model class should inherit from nn.Module, which is the base class for all neural network modules.
    The member methods __init__() and forward() have to be implemented.
    The class nn.Linear contains two member Tensors: weight and bias.
    The class nn.Linear implements the magic method __call__(), which enables an instance of the class
    to be called just like a function. Normally forward() will be called.
    """
    class LinearModel(torch.nn.Module):
        def __init__(self):
            super(LinearModel, self).__init__()  # call the parent class's __init__
            # (1, 1) are the feature dimensions of the input x and the output y; both are 1-dimensional here.
            # The layer learns the parameters w and b, accessible as linear.weight and linear.bias.
            self.linear = torch.nn.Linear(1, 1)  # class name followed by parentheses constructs an object

        def forward(self, x):
            y_pred = self.linear(x)
            return y_pred

    model = LinearModel()

    # Construct the loss and the optimizer.
    # criterion = torch.nn.MSELoss(size_average=False)  # older, deprecated spelling
    criterion = torch.nn.MSELoss(reduction='sum')
    # model.parameters() hands the optimizer every learnable parameter of the model (here w and b)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

    # Training cycle: forward, backward, update.
    for epoch in range(100):
        y_pred = model(x_data)  # forward: predict
        loss = criterion(y_pred, y_data)  # forward: loss
        print(epoch, loss.item())

        # Gradients computed by .backward() accumulate, so reset them to zero before the backward pass.
        optimizer.zero_grad()
        loss.backward()  # backward: autograd computes the gradients
        optimizer.step()  # update the parameters, i.e. w and b

    print('w = ', model.linear.weight.item())
    print('b = ', model.linear.bias.item())

    x_test = torch.Tensor([[4.0]])
    y_test = model(x_test)
    print('y_pred = ', y_test.data)
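
The docstring in the listing above notes that `__call__()` is what lets `model(x_data)` run `forward()`. The mechanism can be illustrated in plain Python. The classes `TinyModule` and `Scale` below are hypothetical stand-ins written for this sketch; the real `torch.nn.Module.__call__` does additional work, such as running registered hooks, before and after calling `forward()`.

```python
class TinyModule:
    """Hypothetical stand-in for torch.nn.Module, for illustration only."""

    def __call__(self, *args, **kwargs):
        # Calling the instance like a function dispatches to forward()
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError("subclasses must implement forward()")


class Scale(TinyModule):
    """Hypothetical model computing y = x * w with a fixed weight."""

    def __init__(self, w):
        self.w = w

    def forward(self, x):
        return x * self.w


model = Scale(2.0)
result = model(4.0)  # same as model.forward(4.0), via __call__
print(result)
```

This is why the training loop calls `model(x_data)` rather than `model.forward(x_data)`: going through `__call__` keeps whatever extra behavior the base class attaches to a call.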