Preface: using requests to scrape the first- and second-level comments of a Bilibili video and store them in a MySQL database or a CSV file

Target fields: name, gender, user level, user uid, personal signature, comment time, content, likes, replies, rpid

Example video: 开学啦~我终于收到了霍格沃茨的录取通知书 ("School's starting~ I finally received my Hogwarts acceptance letter"): https://www.bilibili.com/video/BV14h411n7ok


I. Case analysis

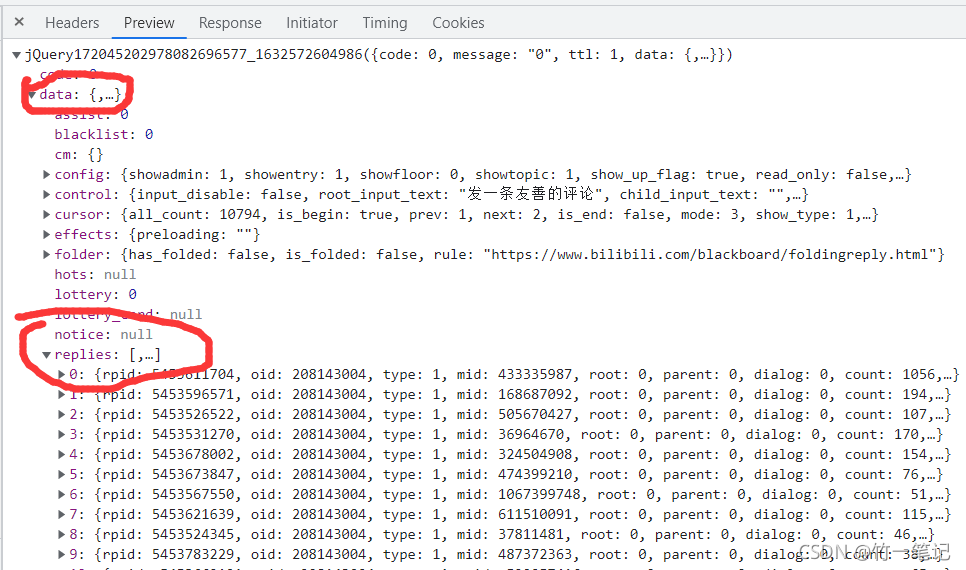

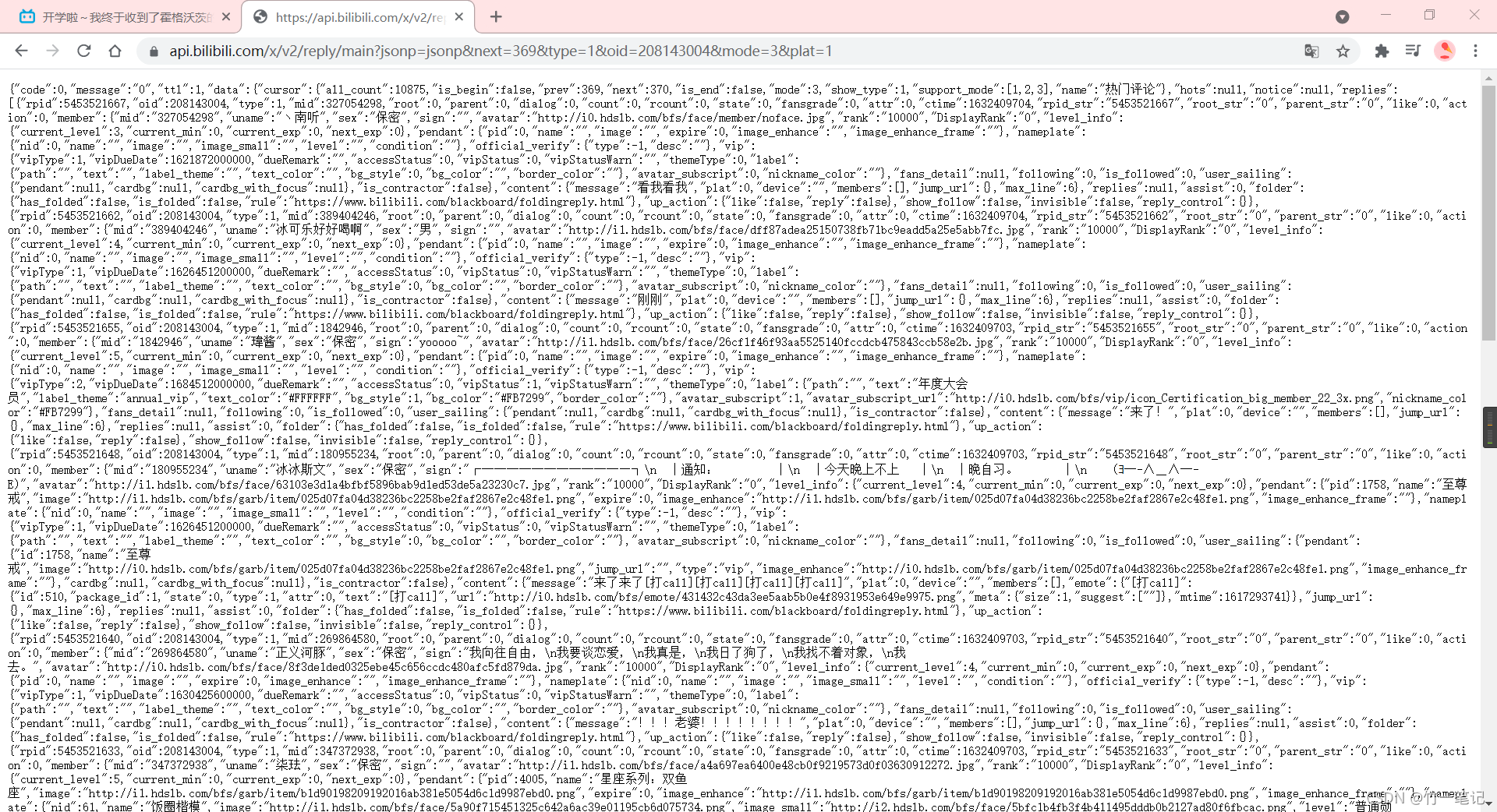

First, find the comment data API. All of the data comes back as JSON, so it can be read out as Python dictionaries.


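Using F12 (the browser's developer tools) we can find the comment API. After deleting the first and the last query parameter, we get:

First-level comments: https://api.bilibili.com/x/v2/reply/main?jsonp=jsonp&next=0&type=1&oid=208143004&mode=3&plat=1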

- next: page number

- oid: video ID (aid)

- mode: 1 and 2 sort by popularity and by time, respectively; 0 and 3 sort by popularity and also return the comment and user information

Second-level comments: https://api.bilibili.com/x/v2/reply/reply?jsonp=jsonp&pn=1&type=1&oid=208143004&ps=10&root=5453611704

- pn: page number

- oid: the video's oid

- root: rpid of the top-level comment being replied to

- ps: number of items per page (maximum 20)

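Why delete the first and the last parameter? Because we are not making a JavaScript request, and the last parameter has no effect either.

The remaining parameters (the ones not described above) are fixed and must not be changed.

The video's oid can be obtained from its BV ID, and the rpid from the first-level comments.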
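The two endpoints differ only in their query parameters. A minimal sketch that builds them from a dict with the standard library's `urlencode`, assuming the parameter names listed above; `first_level_url` and `second_level_url` are hypothetical helpers, not part of the original code:

```python
from urllib.parse import urlencode

FIRST_LEVEL_API = "https://api.bilibili.com/x/v2/reply/main"
SECOND_LEVEL_API = "https://api.bilibili.com/x/v2/reply/reply"


def first_level_url(oid, mode=3, next_page=0):
    """First-level comments: `next` pages through the thread, `mode` picks the sort order."""
    params = {"jsonp": "jsonp", "next": next_page, "type": 1,
              "oid": oid, "mode": mode, "plat": 1}
    return FIRST_LEVEL_API + "?" + urlencode(params)


def second_level_url(oid, root, pn=1, ps=20):
    """Second-level comments under the top-level comment whose rpid is `root`."""
    # ps is capped at 20 items per page
    params = {"jsonp": "jsonp", "pn": pn, "type": 1,
              "oid": oid, "ps": min(ps, 20), "root": root}
    return SECOND_LEVEL_API + "?" + urlencode(params)


print(first_level_url(208143004))
print(second_level_url(208143004, 5453611704, ps=10))
```

With `oid=208143004` the two calls reproduce the example URLs shown above, since dicts keep their insertion order.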

II. Fetching the data

1. Fetching data: first-level comments

Define a Bilibili class. First, get the oid from the video's BV ID.

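```python
import re

import requests


class Bilibili:
    def __init__(self, BV):
        # Base URL of the video page; the BV ID is appended in oid_get
        self.homeUrl = "https://www.bilibili.com/video/"
        self.oid_get(BV)

    # Get the video's oid
    def oid_get(self, BV):
        # Request the video page
        response = requests.get(url=self.homeUrl + BV).text
        # Use a regular expression to pull the oid (aid) out of the page
        self.oid = re.findall(r'"aid":(\d+),', response)[0]
```

With the oid we can construct the comment-data URLs. The oid, mode and ps parameters can be set in advance, but the page number (both for paging through the comments and for the pages of second-level comments) has to change.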
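The regex step can be tested offline. A sketch assuming, as the code above does, that the page HTML contains `"aid":<digits>,`; the sample HTML string is made up for illustration, and `extract_oid` is a hypothetical helper that returns None instead of raising IndexError when the pattern is missing:

```python
import re
from typing import Optional

# Same pattern as in oid_get, compiled once
AID_PATTERN = re.compile(r'"aid":(\d+),')


def extract_oid(html: str) -> Optional[str]:
    """Return the first aid found in the video page HTML, or None if there is none."""
    match = AID_PATTERN.search(html)
    return match.group(1) if match else None


# Made-up HTML fragment, for illustration only
sample_html = '<script>{"videoData":{"aid":208143004,"title":"demo"}}</script>'
print(extract_oid(sample_html))
```

Returning None lets the caller print a clear message (for example when the request was blocked and the page has no video data) instead of crashing on `[0]`.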

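To avoid being identified as a crawler, we set the user-agent and cookie request headers.

The user-agent could be generated with the fake_useragent library, but I ran into a lot of problems with it.

So I simply copied the one from my browser. If you need more, https://fake-useragent.herokuapp.com/browsers/0.1.11 lists many user-agents you can use to build your own user-agent pool (reaching it from mainland China requires a VPN). Copy both the UA and the cookie over (in dictionary form).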
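A user-agent pool just means picking a different UA string for each request. A minimal sketch with `random.choice`; the first string is the one used in this article, the other two are placeholder browser strings, and `build_headers` is a hypothetical helper:

```python
import random

# Placeholder pool: fill it with user-agent strings copied from real browsers
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.82 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:92.0) Gecko/20100101 Firefox/92.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.1.2 Safari/605.1.15",
]


def build_headers(rng=random):
    """Return request headers with a user-agent drawn at random from the pool."""
    return {"user-agent": rng.choice(USER_AGENTS)}


headers = build_headers()
print(headers["user-agent"])
```

The result is passed as `headers=` to `requests.get`, together with the cookie dictionary as `cookies=`.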


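```python
import queue
import re
import time

import requests


class Bilibili:
    # page is not used here; it is kept so the signature matches the later calls
    def __init__(self, BV, mode, cookies, page):
        self.homeUrl = "https://www.bilibili.com/video/"
        self.headers = {"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.82 Safari/537.36"}
        self.cookies = cookies
        self.oid_get(BV)
        # First-level comments: the page number is appended after next=
        self.replyUrl = "https://api.bilibili.com/x/v2/reply/main?jsonp=jsonp&type=1&oid={oid}&mode={mode}&plat=1&next=".format(oid=self.oid, mode=mode)
        # Second-level comments: {root} stays a placeholder to fill in later; the page number goes after pn=
        self.rreplyUrl = "https://api.bilibili.com/x/v2/reply/reply?jsonp=jsonp&type=1&oid={oid}&ps=20&root={root}&pn=".format(oid=self.oid, root="{root}")
        # Queue for the scraped rows. It is first-in, first-out, so one thread can scrape
        # while another thread stores the rows, in order, in MySQL or a CSV file
        self.q = queue.Queue()
        # count is the floor number of the top-level comment; it tells comments apart from replies
        self.count = 1

    # Get the video's oid
    def oid_get(self, BV):
        response = requests.get(url=self.homeUrl + BV, headers=self.headers).text
        # Regular expression: pull the oid out of the video page
        self.oid = re.findall(r'"aid":(\d+),', response)[0]
```

The request function takes the url and page (the maximum page number) as arguments.

We request the data with the requests library; everything we need is under data -> replies.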
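Since the comments sit under data -> replies, and replies can come back as null once you page past the end, it helps to read that field defensively. A sketch using a made-up, heavily trimmed payload (real responses carry many more fields); `get_replies` is a hypothetical helper, and in the scraper the dict would come from `response.json()` instead of `json.loads`:

```python
import json

# Made-up, trimmed response bodies for illustration only
sample_body = '''
{"code": 0, "data": {"replies": [
    {"rpid": 1, "rcount": 0, "like": 3, "content": {"message": "hello"}},
    {"rpid": 2, "rcount": 25, "like": 7, "content": {"message": "world"}}
]}}
'''
past_last_page = '{"code": 0, "data": {"replies": null}}'


def get_replies(body):
    """Return the list under data -> replies, or [] when it is missing or null."""
    payload = json.loads(body)
    data = payload.get("data") or {}
    return data.get("replies") or []


for reply in get_replies(sample_body):
    print(reply["rpid"], reply["content"]["message"])
print(get_replies(past_last_page))
```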


```python
def content_get(self, url, page):
    now = 0  # current page
    while now <= page:
        print("page : <{now}>/<{page}>".format(now=now, page=page))
        # Parse the response as JSON and read it like a dictionary
        response = requests.get(url=url + str(now), cookies=self.cookies, headers=self.headers, timeout=10).json()
        # Comments are under data -> replies, 20 per page; null means there are no more pages
        replies = response['data']['replies']
        if not replies:
            break
        now += 1
        # Go through each comment and extract the fields with reply_clean
        for reply in replies:
            line = self.reply_clean(reply)
            self.count += 1
```

We define a reply_clean function in advance to extract the data.

2. Data cleaning

Each reply contains a lot of data, but most of it is useless to us.

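```python
def reply_clean(self, reply):
    name = reply['member']['uname']  # user name
    sex = reply['member']['sex']  # gender
    if sex == "保密":  # "保密" means the user keeps it private
        sex = ' '
    mid = reply['member']['mid']  # account uid
    sign = reply['member']['sign']  # personal signature
    rpid = reply['rpid']  # needed to fetch the second-level comments
    rcount = reply['rcount']  # number of replies
    level = reply['member']['level_info']['current_level']  # user level
    like = reply['like']  # number of likes
    content = reply['content']['message'].replace("\n", "")  # comment text
    t = reply['ctime']
    timeArray = time.localtime(t)
    otherStyleTime = time.strftime("%Y-%m-%d %H:%M:%S", timeArray)  # comment time: timestamp converted to a standard format
    # self.count is the floor number of this comment
    return [self.count, name, sex, level, mid, sign, otherStyleTime, content, like, rcount, rpid]
```

The comment time is a Unix timestamp; the time module converts it into a human-readable format.

At this point we have the first-level comments, with each one returned as a list.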
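`time.localtime` converts using the machine's own time zone, so the same comment can show different times on different computers. If you want Beijing time no matter where the script runs, here is a sketch with `datetime` and a fixed UTC+8 offset. Choosing UTC+8 is an assumption about what you want, and `format_ctime` is a hypothetical helper:

```python
from datetime import datetime, timedelta, timezone

BEIJING = timezone(timedelta(hours=8))  # fixed UTC+8 offset


def format_ctime(ctime):
    """Format a Unix timestamp in seconds (like reply['ctime']) as Beijing time."""
    return datetime.fromtimestamp(ctime, tz=BEIJING).strftime("%Y-%m-%d %H:%M:%S")


print(format_ctime(0))  # 1970-01-01 08:00:00
```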

3. Fetching data: second-level comments

To make the code more reusable, we can modify the two functions above.

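```python
import math


# level_1: whether these are first-level comments. For second-level comments,
# no further level (replies to replies) is requested
def content_get(self, url, page, level_1=True):
    now = 0
    while now <= page:
        print("page : <{now}>/<{page}>".format(now=now, page=page))
        response = requests.get(url=url + str(now), cookies=self.cookies, headers=self.headers, timeout=10).json()
        replies = response['data']['replies']  # comments are under data -> replies, 20 per page
        if not replies:  # null replies: no more pages
            break
        now += 1
        for reply in replies:
            if level_1:
                line = self.reply_clean(reply, self.count)
                self.count += 1
            else:
                line = self.reply_clean(reply)
            self.q.put(line)
            # Filter here: if a first-level comment has replies, call this function again for them
            if level_1 and line[-2] != 0:
                # root is the rpid, passed as the root parameter of the second-level API.
                # With 20 replies per page, the page count is rcount / 20 rounded up
                self.content_get(url=self.rreplyUrl.format(root=str(line[-1])), page=math.ceil(line[-2] / 20), level_1=False)  # recursively fetch second-level comments
```

The url argument passed to content_get decides whether first- or second-level comments are requested. Both kinds of comment use exactly the same JSON format, with the data under data -> replies. self.count holds the floor number of the top-level comment.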
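With ps=20, a comment with rcount replies spans ceil(rcount / 20) pages. Note that `int(x + 0.5)` rounds to the nearest integer rather than up (21 replies gives int(1.55) = 1), so `math.ceil` is the reliable way to round up. A small sketch; `reply_pages` is a hypothetical helper:

```python
import math

PAGE_SIZE = 20  # the ps value used in the second-level URL


def reply_pages(rcount, page_size=PAGE_SIZE):
    """Number of pages needed to hold rcount replies, rounding up."""
    return math.ceil(rcount / page_size)


# Compare rounding up with the add-0.5 approach
for rcount in (1, 20, 21, 40, 41):
    print(rcount, reply_pages(rcount), int(rcount / 20 + 0.5))
```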

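4. Data cleaning: second-level comments

```python
# This function handles both first- and second-level comments.
# The count argument tells whether it is a second-level comment.
def reply_clean(self, reply, count=False):
    name = reply['member']['uname']  # user name
    sex = reply['member']['sex']  # gender
    if sex == "保密":  # "保密" means the user keeps it private
        sex = ' '
    mid = reply['member']['mid']  # account uid
    sign = reply['member']['sign']  # personal signature
    rpid = reply['rpid']  # needed to fetch the second-level comments
    rcount = reply['rcount']  # number of replies
    level = reply['member']['level_info']['current_level']  # user level
    like = reply['like']  # number of likes
    content = reply['content']['message'].replace("\n", "")  # comment text
    t = reply['ctime']
    timeArray = time.localtime(t)
    otherStyleTime = time.strftime("%Y-%m-%d %H:%M:%S", timeArray)  # comment time: timestamp converted to a standard format
    # For a second-level comment the first field is "回复" (reply); otherwise it is the floor number.
    # Second-level comments get no reply count: replies to them (the third level)
    # are all shown as "回复 @someone" under the same top-level comment.
    if count:
        return [count, name, sex, level, mid, sign, otherStyleTime, content, like, rcount, rpid]
    else:
        return ["回复", name, sex, level, mid, sign, otherStyleTime, content, like, ' ', rpid]
```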

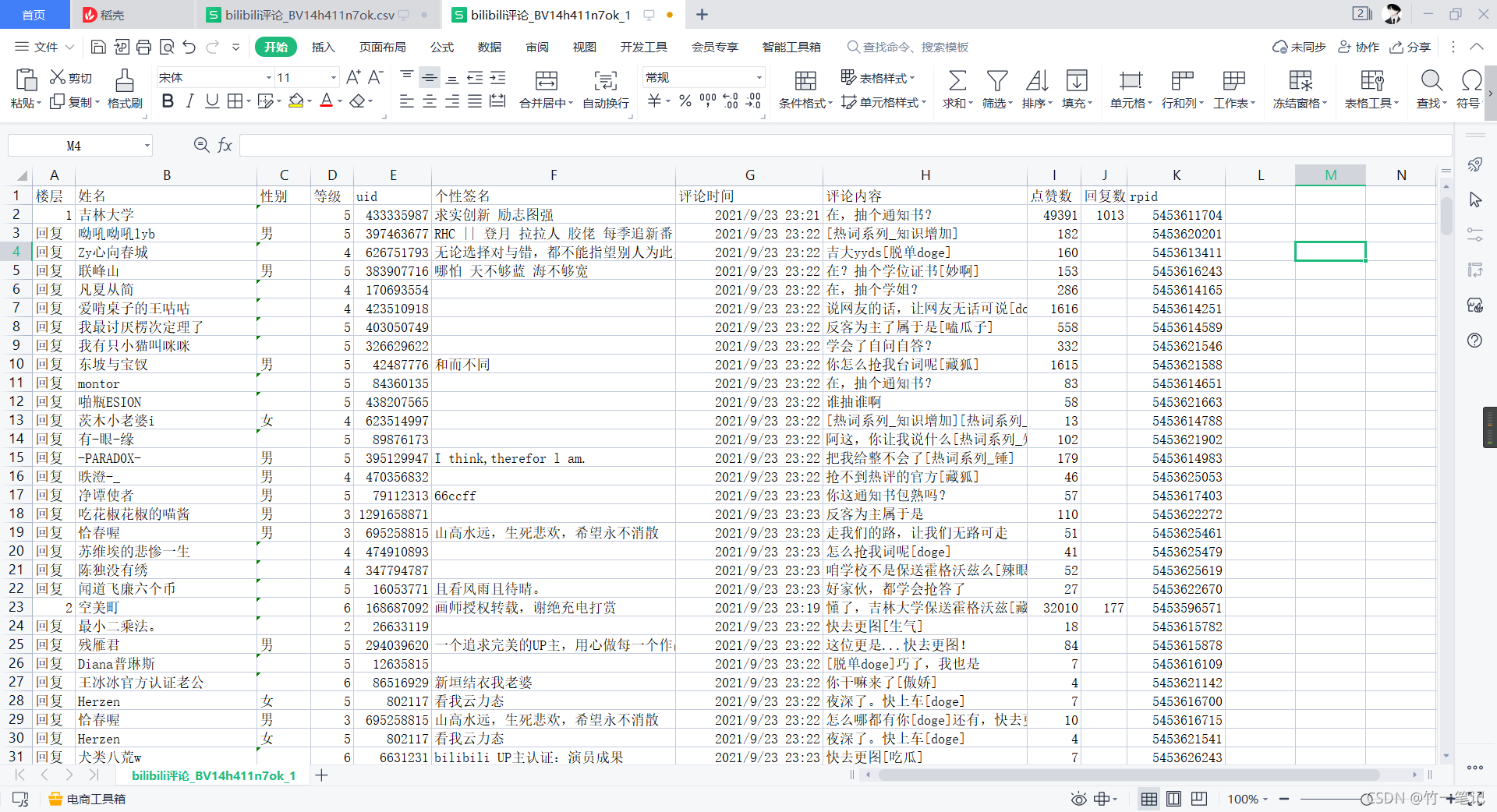

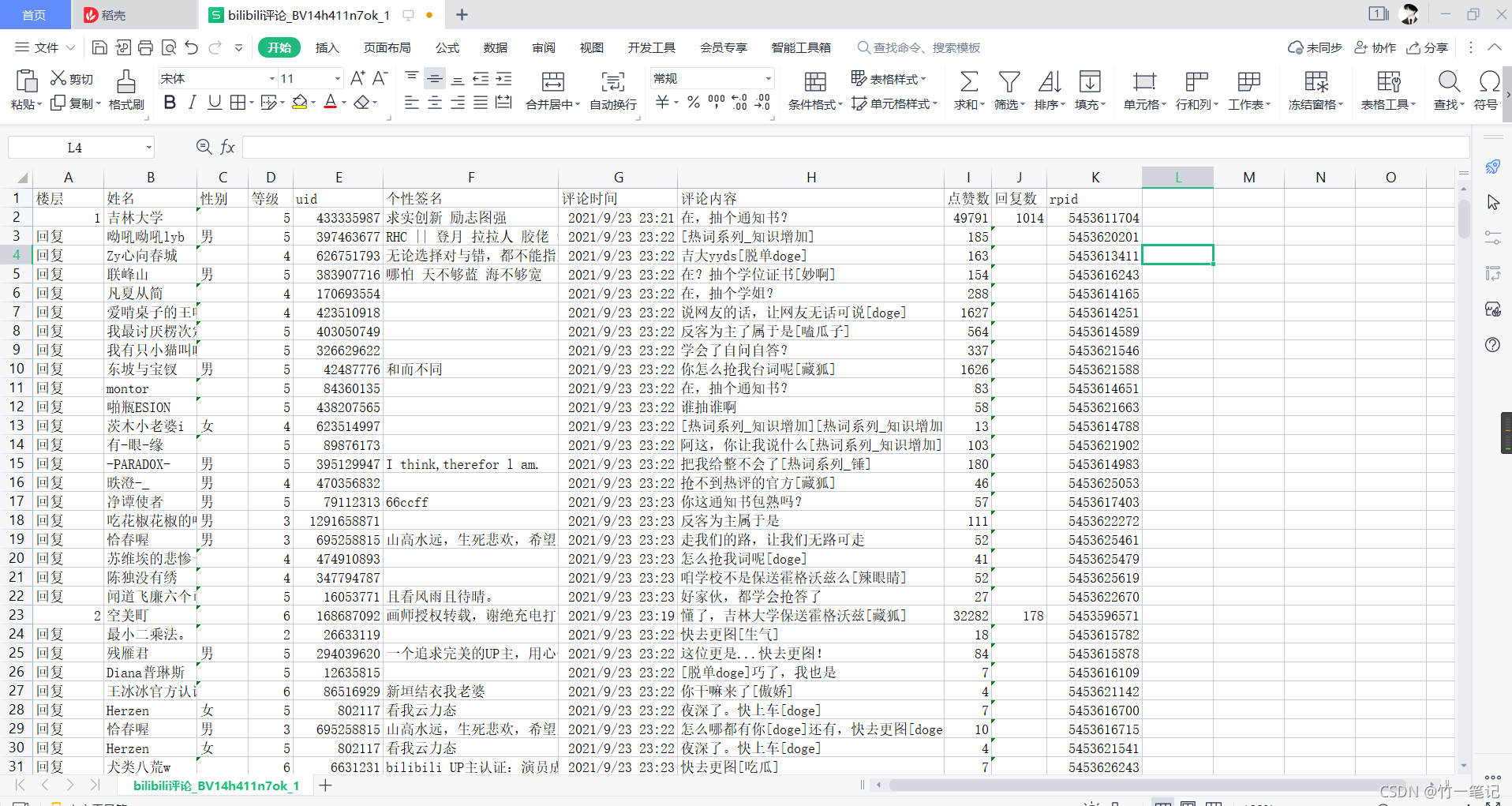

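III. Data storage

Two storage options are shown here: CSV and a MySQL database.

1. CSV

We use the csv module and read items from the queue in a while True loop. If no data arrives within 10 seconds, the crawl has either finished or the program has died. Whether the anti-crawling measures killed it or something else did, either way it's dead.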
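This read-until-timeout loop is the standard `queue.Queue.get(timeout=...)` pattern: `get` raises `queue.Empty` once the timeout passes with nothing to read. A self-contained sketch with a dummy producer thread standing in for the scraper and a shortened timeout (both are assumptions for the demo; `drain` and `dummy_producer` are hypothetical names):

```python
import queue
import threading
import time


def drain(q, timeout):
    """Collect items until no new item arrives within `timeout` seconds."""
    items = []
    while True:
        try:
            items.append(q.get(timeout=timeout))
        except queue.Empty:
            # Nothing arrived in time: the producer finished (or died)
            return items


def dummy_producer(q):
    """Stand-in for the scraper: put a few rows with short pauses."""
    for i in range(3):
        q.put([i, "name", "comment"])
        time.sleep(0.05)


q = queue.Queue()
threading.Thread(target=dummy_producer, args=(q,)).start()
rows = drain(q, timeout=0.5)
print(len(rows))
```

The trade-off of a timeout: if a single request hangs for longer than the timeout, the consumer stops early even though the scraper is still alive.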

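```python
import csv
import queue


def csv_writeIn(self, BV):
    with open("bilibili评论_" + BV + ".csv", "w", encoding="utf-8", newline="") as file:
        f = csv.writer(file)
        # Header row: floor, name, gender, level, uid, signature, comment time, content, likes, replies, rpid
        line1 = ['楼层', '姓名', '性别', '等级', 'uid', '个性签名', '评论时间', '评论内容', '点赞数', '回复数', 'rpid']
        f.writerow(line1)
        file.flush()
        while True:
            try:
                line = self.q.get(timeout=10)
            except queue.Empty:  # nothing for 10 seconds: the crawl is over
                break
            f.writerow(line)
            file.flush()
```

Run it and open the CSV file.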


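The CSV turns out to be garbled. This is because Excel opens CSV files with the system's ANSI code page by default (GBK on Simplified Chinese Windows), while we saved the file as UTF-8.

One fix is to open the file in Notepad, save it again with ANSI encoding, and then open it in Excel.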
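An alternative that avoids re-saving by hand is to write the file with Python's `utf-8-sig` codec, which puts a UTF-8 byte order mark (BOM) at the start of the file; Excel uses that mark to recognize UTF-8. A sketch writing to a temporary file (the file name and rows are placeholders):

```python
import csv
import os
import tempfile

rows = [
    ['楼层', '姓名', '评论内容'],
    [1, '示例用户', '你好'],  # placeholder row
]

path = os.path.join(tempfile.mkdtemp(), "bilibili_demo.csv")
# utf-8-sig writes the BOM bytes EF BB BF before the first row
with open(path, "w", encoding="utf-8-sig", newline="") as file:
    csv.writer(file).writerows(rows)

with open(path, "rb") as file:
    print(file.read(3))
```

In csv_writeIn this only means changing `encoding="utf-8"` to `encoding="utf-8-sig"`.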


2. MySQL
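Comment text often contains quotes. These break an INSERT that is built by pasting values into the SQL string, and that approach also opens the door to SQL injection. pymysql's `cursor.execute(sql, args)` accepts `%s` placeholders and escapes the values itself. Table names can't be passed as parameters, so they have to be checked separately. A sketch of building such a statement without a database; `build_insert` and the BV-ID pattern are hypothetical, and the column names follow the table created in the code that follows:

```python
import re

COLUMNS = ["floor", "name", "sex", "level", "uid", "sign",
           "time", "content", "star", "reply", "rpid"]
# Assumption: a BV ID is "BV" followed only by letters and digits
BV_PATTERN = re.compile(r"BV[0-9A-Za-z]+")


def build_insert(table):
    """Return an INSERT statement with one %s placeholder per column."""
    if not BV_PATTERN.fullmatch(table):
        raise ValueError("unexpected table name: %r" % table)
    cols = ", ".join("`%s`" % c for c in COLUMNS)
    marks = ", ".join(["%s"] * len(COLUMNS))
    return "insert into `%s` (%s) values (%s)" % (table, cols, marks)


sql = build_insert("BV14h411n7ok")
print(sql)
# With a live pymysql connection: cursor.execute(sql, [str(x) for x in line])
```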

```python
import queue
import sys

import pymysql as pysql


def mysql_connect(self, host, user, password, BV):
    # Connect to the database; exit the program if that fails
    try:
        # utf8mb4 so that Chinese text and emoji in the comments can be stored
        self.conn = pysql.connect(host=host, user=user, password=password, charset="utf8mb4")
        self.cursor = self.conn.cursor()
    except pysql.MySQLError:
        print("mysql connect error ... ")
        sys.exit(1)
    # Create the database and the table
    self.cursor.execute('create database if not exists bilibili default character set utf8mb4')
    self.cursor.execute('use bilibili')
    sql = '''
    create table if not exists `{BV}` (
        floor char(6),
        name char(20),
        sex char(2),
        level char(1),
        uid char(10),
        sign char(100),
        time char(23),
        content char(100),
        star char(6),
        reply char(6),
        rpid char(10)
    ) default charset=utf8mb4
    '''
    self.cursor.execute(sql.format(BV=BV))  # name the table after the video's BV ID


def mysql_writeIn(self, BV):
    # %s placeholders let pymysql escape every value, so quotes in a comment
    # cannot break the statement; only the table name is filled in with format()
    sql = ('insert into `{BV}` (`floor`, `name`, `sex`, `level`, `uid`, `sign`, `time`, '
           '`content`, `star`, `reply`, `rpid`) '
           'values (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)').format(BV=BV)
    # This runs in a separate thread and stops when the queue times out
    while True:
        try:
            line = self.q.get(timeout=10)
        except queue.Empty:
            self.conn.close()
            break
        # The columns are fixed-length, so a value may be too long to insert.
        # Size the columns to your needs when creating the table; anything really too long is just skipped, haha
        try:
            self.cursor.execute(sql, [str(x) for x in line])
        except pysql.MySQLError as e:
            print(e)
            continue
        # Remember to commit, or nothing gets saved
        self.conn.commit()
```

IV. Multithreading

We need a function that ties the parts above together. For multithreading we use the Thread class from the threading module.

Each part is called from the main method.
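The queue hands the rows from the scraping thread to the writing thread, and in this article the writer stops after 10 seconds without new data. A common alternative is for the producer to put a sentinel (here None) on the queue when it finishes. The writer then exits immediately instead of waiting out the timeout, and it does not quit early during a slow request. A sketch of that variant with dummy scraper and writer functions (`scrape` and `write` are hypothetical stand-ins, not the article's methods):

```python
import queue
from threading import Thread

SENTINEL = None  # marks "no more rows"


def scrape(q, pages):
    """Dummy scraper: one row per page, then the sentinel."""
    try:
        for page in range(pages):
            q.put([page, "row from page %d" % page])
    finally:
        q.put(SENTINEL)  # always signal the writer, even if scraping fails


def write(q, out):
    """Dummy writer: consume rows until the sentinel arrives."""
    while True:
        row = q.get()
        if row is SENTINEL:
            break
        out.append(row)


q = queue.Queue()
written = []
threads = [Thread(target=scrape, args=(q, 3)), Thread(target=write, args=(q, written))]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(written)
```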

```python
from threading import Thread


def main(self, page, BV):
    self.mysql_connect(host='localhost', user='root', password='SpiderXbest', BV=BV)
    T = []
    T.append(Thread(target=self.content_get, args=(self.replyUrl, page)))  # scraper thread
    T.append(Thread(target=self.mysql_writeIn, args=(BV,)))  # writer thread
    # T.append(Thread(target=self.csv_writeIn, args=(BV,)))
    # Pick one of the two: either CSV or MySQL
    print("Starting to scrape...")
    for t in T:
        t.start()
    for t in T:
        t.join()
```

V. Putting it all together

```python
if __name__ == '__main__':
    cookie = "fingerprint=cdc14f481fb201fec2035d743ff230b; buvid_fp=DE7C7303-E24E-462C-B112-EE78EB55C45B148824infoc; buvid_fp_plain=1BC352F4-4DB9-D82C-44A2-FB17273D240infoc; b_ut=-1; i-wanna-go-back=-1; _uuid=43C8466C-79D5-F07A-032C-F6EF1635706854601infoc; buvid3=DE703-E24E-462C-B112-EE78EB55C45B148824infoc; CURRENT_FNVAL=80; blackside_state=1; sid=7wo01l; rpdid=|(u)mmY|~YJ|0J'uYJklJ~ul|; CURRENT_QUALITY=112; PVID=4; bfe_id=cade759d3229a3973a5d4e9161f3bc; innersign=1"
    # Turn the cookie string copied from the browser into a dict
    cookies = {}
    for c in cookie.split(";"):
        key, _, value = c.strip().partition("=")  # strip the space after ';', split on the first '='
        cookies[key] = value
    BV = 'BV14h411n7ok'
    bilibili = Bilibili(BV, 0, cookies, 1)
    bilibili.main(1, BV)
```

When passing the cookie, it's best to convert it into a dictionary.
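A browser cookie string is a list of `name=value` pairs separated by `; `. Splitting each pair only on the first `=` keeps values that themselves contain `=` intact, and stripping removes the space in front of each name. A sketch; `cookie_to_dict` is a hypothetical helper and the sample string is made up:

```python
def cookie_to_dict(cookie):
    """Turn 'a=1; b=2' into {'a': '1', 'b': '2'}, splitting each pair on the first '='."""
    cookies = {}
    for pair in cookie.split(";"):
        pair = pair.strip()
        if not pair:
            continue  # skip empty pieces, e.g. from a trailing ';'
        name, _, value = pair.partition("=")
        cookies[name] = value
    return cookies


print(cookie_to_dict("CURRENT_FNVAL=80; token=abc==; innersign=1;"))
```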

Run the program and try scraping one page.


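OK, it works. We only scraped one page, and each page holds at most 20 replies. So how many pages does a video actually have? Let's request the first-level comment URL in the browser and see.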


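This video by 冰冰姐 (Bingbing) has a little over ten thousand comments, and the results stop changing at page 370. So we can find the exact number of pages by a manual binary search, or write a check: when content_get gets null replies, the end has been reached.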
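The manual binary search can be automated. A sketch assuming that every page up to the last one has replies and every later page is empty. It first doubles an upper bound until it hits an empty page, then binary-searches between the last known non-empty page and that bound, so it needs only O(log n) requests. `has_replies` is a hypothetical callback; in practice it would request `replyUrl + str(page)` and check whether data -> replies is non-null. The demo fakes it with pages 0 to 369 being non-empty:

```python
def last_page(has_replies):
    """Return the last page p with has_replies(p) True, or -1 if page 0 is empty."""
    if not has_replies(0):
        return -1
    # Exponential search: double hi until an empty page is found
    hi = 1
    while has_replies(hi):
        hi *= 2
    lo = hi // 2  # already known to be non-empty
    # Invariant: has_replies(lo) is True and has_replies(hi) is False
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if has_replies(mid):
            lo = mid
        else:
            hi = mid
    return lo


# Fake predicate for the demo: pages 0..369 have replies, later ones are empty
print(last_page(lambda page: page <= 369))
```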


VI. Complete code

```python
# -*- coding: utf-8 -*-
# Author : 竹一
# Time : 2021/9/25 10:37
# version : 1.0
# Software: PyCharm

import csv
import math
import queue
import re
import sys
import time
from threading import Thread

import pymysql as pysql
import requests


class Bilibili:
    def __init__(self, BV, mode, cookies, page):
        self.homeUrl = "https://www.bilibili.com/video/"
        self.headers = {"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/93.0.4577.82 Safari/537.36"}
        self.cookies = cookies
        self.oid_get(BV)
        self.replyUrl = "https://api.bilibili.com/x/v2/reply/main?jsonp=jsonp&type=1&oid={oid}&mode={mode}&plat=1&next=".format(oid=self.oid, mode=mode)
        self.rreplyUrl = "https://api.bilibili.com/x/v2/reply/reply?jsonp=jsonp&type=1&oid={oid}&ps=20&root={root}&pn=".format(oid=self.oid, root="{root}")
        self.q = queue.Queue()
        self.count = 1

    # Get the video's oid
    def oid_get(self, BV):
        response = requests.get(url=self.homeUrl + BV, headers=self.headers).text
        # Regular expression: pull the oid out of the video page
        self.oid = re.findall(r'"aid":(\d+),', response)[0]

    # level_1: whether these are first-level comments
    def content_get(self, url, page, level_1=True):
        now = 0
        while now <= page:
            if level_1:
                print("page : <{now}>/<{page}>".format(now=now, page=page))
            response = requests.get(url=url + str(now), cookies=self.cookies, headers=self.headers, timeout=10).json()
            replies = response['data']['replies']  # comments are under data -> replies, 20 per page
            if not replies:  # null replies: past the last page
                break
            now += 1
            for reply in replies:
                if level_1:
                    line = self.reply_clean(reply, self.count)
                    self.count += 1
                else:
                    line = self.reply_clean(reply)
                self.q.put(line)
                # If the comment has second-level comments, request them
                if level_1 and line[-2] != 0:
                    self.content_get(url=self.rreplyUrl.format(root=str(line[-1])), page=math.ceil(line[-2] / 20), level_1=False)  # recursively fetch second-level comments

    # This function handles both first- and second-level comments
    def reply_clean(self, reply, count=False):
        name = reply['member']['uname']  # user name
        sex = reply['member']['sex']  # gender
        if sex == "保密":  # "保密" means the user keeps it private
            sex = ' '
        mid = reply['member']['mid']  # account uid
        sign = reply['member']['sign']  # personal signature
        rpid = reply['rpid']  # needed to fetch the second-level comments
        rcount = reply['rcount']  # number of replies
        level = reply['member']['level_info']['current_level']  # user level
        like = reply['like']  # number of likes
        content = reply['content']['message'].replace("\n", "")  # comment text
        # Comment time: timestamp converted to a standard format
        otherStyleTime = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(reply['ctime']))
        if count:
            return [count, name, sex, level, mid, sign, otherStyleTime, content, like, rcount, rpid]
        else:
            return ["回复", name, sex, level, mid, sign, otherStyleTime, content, like, ' ', rpid]

    def csv_writeIn(self, BV):
        print("Writing to the CSV file ...")
        with open("bilibili评论_" + BV + ".csv", "w", encoding="utf-8", newline="") as file:
            f = csv.writer(file)
            line1 = ['楼层', '姓名', '性别', '等级', 'uid', '个性签名', '评论时间', '评论内容', '点赞数', '回复数', 'rpid']
            f.writerow(line1)
            file.flush()
            while True:
                try:
                    line = self.q.get(timeout=10)
                except queue.Empty:
                    break
                f.writerow(line)
                file.flush()

    def mysql_connect(self, host, user, password, BV):
        try:
            self.conn = pysql.connect(host=host, user=user, password=password, charset="utf8mb4")
            self.cursor = self.conn.cursor()
            print("MySQL connected!")
        except pysql.MySQLError:
            print("mysql connect error ... ")
            sys.exit(1)
        self.cursor.execute('create database if not exists bilibili default character set utf8mb4')
        self.cursor.execute('use bilibili')
        sql = '''
        create table if not exists `{BV}` (
            floor char(5),
            name char(20),
            sex char(2),
            level char(1),
            uid char(10),
            sign char(100),
            time char(23),
            content char(100),
            star char(6),
            reply char(6),
            rpid char(10)
        ) default charset=utf8mb4
        '''
        self.cursor.execute(sql.format(BV=BV))

    def mysql_writeIn(self, BV):
        print("Writing to MySQL ...")
        # %s placeholders: pymysql escapes each value
        sql = ('insert into `{BV}` (`floor`, `name`, `sex`, `level`, `uid`, `sign`, `time`, '
               '`content`, `star`, `reply`, `rpid`) '
               'values (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)').format(BV=BV)
        while True:
            try:
                line = self.q.get(timeout=10)
            except queue.Empty:
                self.conn.close()
                break
            try:
                self.cursor.execute(sql, [str(x) for x in line])
            except pysql.MySQLError as e:
                print(e)
                continue
            self.conn.commit()

    def main(self, page, BV, host, user, password):
        self.mysql_connect(host=host, user=user, password=password, BV=BV)
        T = []
        T.append(Thread(target=self.content_get, args=(self.replyUrl, page)))
        T.append(Thread(target=self.mysql_writeIn, args=(BV,)))
        # T.append(Thread(target=self.csv_writeIn, args=(BV,)))
        print("Starting to scrape...")
        for t in T:
            t.start()
        for t in T:
            t.join()


if __name__ == '__main__':
    cookie = "fingerprint=cdc14f4281201fec2035d743ff230b; buvid_fp=DE7C73-E24E-462C-B112-EE78EB55C45B148824infoc; buvid_fp_plain=1BC3F4-4DB9-D82C-44A2-FB17273DB52757240infoc; b_ut=-1; i-wanna-go-back=-1; _uuid=4C8466C-79D5-F07A-032C-F6EF1635706854601infoc; buvid3=DE7C7303-E24E-462C-B112-EE78EB545B148824infoc; CURRENT_FNVAL=80; blackside_state=1; sid=7w6ao01l; rpdid=|(u)mmY|~YJ|0J'uYJklJ~ul|; CURRENT_QUALITY=112; PVID=4; bfe_id=cade757b9d3223973a5d4e9161f3bc; innersign=1"
    cookies = {}
    for c in cookie.split(";"):
        key, _, value = c.strip().partition("=")
        cookies[key] = value
    BV = 'BV14h411n7ok'
    host = "localhost"  # host name: replace with your own
    user = "root"  # user name: replace with your own
    password = "your_password"  # password: placeholder, replace with your own
    bilibili = Bilibili(BV, 0, cookies, 369)
    bilibili.main(369, BV, host, user, password)
```