Scraping the National Policy Website (gov.cn) with Python

Original tutorial I learned from: https://blog.csdn.net/hua_you_qiang/article/details/114535433

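When we type a keyword into the search box and press Enter, the site lists the related results and the address in the browser changes.

The home-page URL becomes http://sousuo.gov.cn/s.htm?t=zhengce&q=%E6%88%BF%E5%9C%B0%E4%BA%A7

From this URL we can see that the keyword "房地产" (real estate) has been encoded as "%E6%88%BF%E5%9C%B0%E4%BA%A7".

URLs are restricted to ASCII characters, so Chinese characters are percent-encoded: each character is converted to its UTF-8 bytes, and each byte is written as % followed by two hexadecimal digits ("房" is the three bytes E6 88 BF, hence %E6%88%BF).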
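Python's standard library can do this encoding directly with `urllib.parse.quote`. The minimal sketch below compares it with the byte-repr trick used in the code that follows (`manual_encode` is a hypothetical name wrapping that trick). For a purely Chinese keyword both give the same result, but the trick also upper-cases ASCII letters and leaves spaces unencoded.

```python
from urllib.parse import quote, unquote


def manual_encode(key):
    # Hypothetical wrapper around the trick in get_key_url: take the repr of the
    # UTF-8 bytes, replace "\x" with "%", upper-case it, then drop the b'...' wrapper
    return str(key.encode()).replace(r'\x', '%').upper()[2:-1]


key = "房地产"
print(quote(key))           # %E6%88%BF%E5%9C%B0%E4%BA%A7
print(manual_encode(key))   # %E6%88%BF%E5%9C%B0%E4%BA%A7
print(unquote(quote(key)))  # 房地产 (decoding reverses the encoding)

# With ASCII letters or spaces in the keyword the two approaches differ
mixed = "gdp 增长"
print(quote(mixed))          # gdp%20%E5%A2%9E%E9%95%BF
print(manual_encode(mixed))  # GDP %E5%A2%9E%E9%95%BF
```

For the keywords in this post the trick works, but `quote` is the safer choice for arbitrary input.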

Define the request headers and import the libraries

```python
import requests
from bs4 import BeautifulSoup
import os

# Define the request header: a browser User-Agent so the request looks like it comes from a browser
headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.190 Safari/537.36 FS"}
```

Build the search URL for the encoded keyword

For example, "房地产" is converted to "%E6%88%BF%E5%9C%B0%E4%BA%A7".

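```python
# Build the search URL for the encoded keyword.
# key.encode() gives the UTF-8 bytes, str() turns them into text like b'\xe6\x88...',
# "\x" is replaced by "%", the result is upper-cased, and [2:-1] strips the b'...' wrapper
def get_key_url(key):
    url = 'http://sousuo.gov.cn/s.htm?t=zhengce&q=' + str(key.encode()).replace(r'\x', '%').upper()[2:-1]
    return url
```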

Fetch the HTML returned by a URL

```python
# Fetch the HTML returned by a URL
def get_html(url):
    r = requests.get(url=url, headers=headers)
    # Guess the encoding from the content so Chinese text is not garbled
    r.encoding = r.apparent_encoding
    # print("status:", r.status_code)
    return r.text
```

Calling get_html on the search page returns the page's HTML.

Fetch the JSON returned by a URL

```python
def get_json(url):
    r = requests.get(url=url, headers=headers)
    r.encoding = r.apparent_encoding
    # print("status:", r.status_code)
    return r.json()
```

Extract the relevant entries from the JSON data and store them in the url_dict dictionary

```python
def get_url_list(json_text):
    url_dict = dict()
    # Results come in two groups, "gongwen" (official documents) and "otherfile"
    for each in json_text['searchVO']['catMap']['gongwen']['listVO']:
        # print(each['url'], each['title'].replace('<em>', '').replace('</em>', ''))
        # Titles contain <em>...</em> tags; strip them to get plain text
        url_dict[each['url']] = each['title'].replace('<em>', '').replace('</em>', '')
    for each in json_text['searchVO']['catMap']['otherfile']['listVO']:
        # print(each['url'], each['title'].replace('<em>', '').replace('</em>', ''))
        url_dict[each['url']] = each['title'].replace('<em>', '').replace('</em>', '')
    return url_dict
```

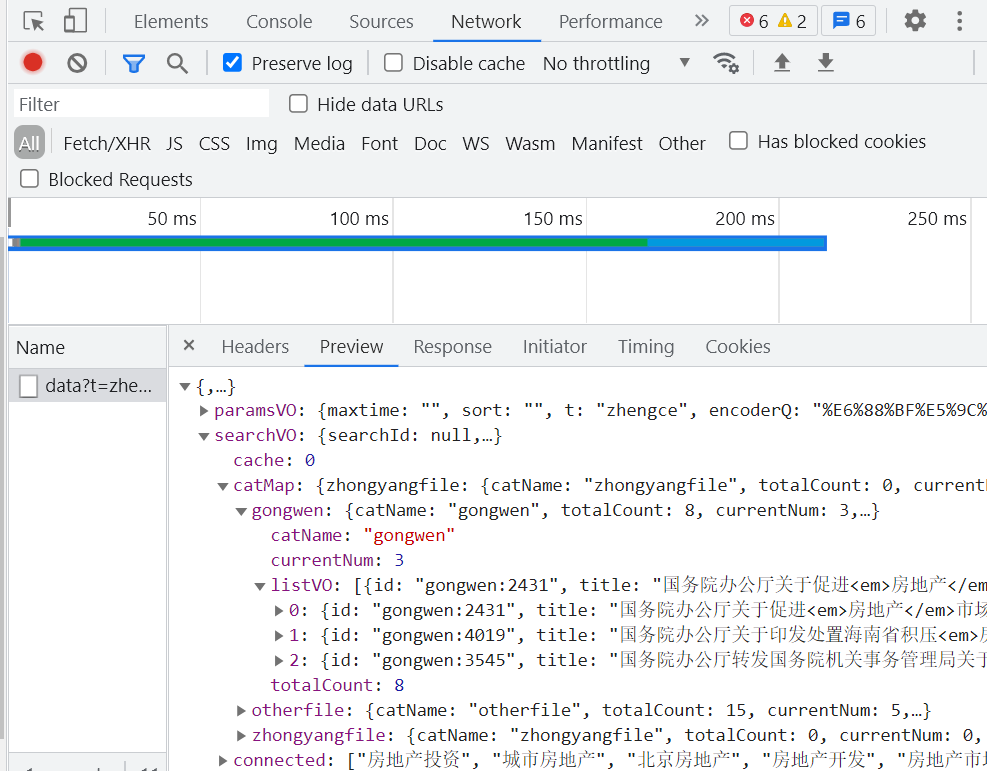

获取到的数据(房地产第二页)如下图所示,我们要在其中通过get_url_list函数抽取listVO的数据
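get_url_list indexes the JSON with fixed keys, so it raises `KeyError` if a category such as `otherfile` is ever missing from a response. Below is a hedged sketch that treats missing or null parts as empty using `dict.get`. `get_url_list_safe` is a hypothetical name, and the `sample` dictionary is invented: it only mimics the nesting the function reads (searchVO > catMap > gongwen/otherfile > listVO), while real responses contain many more fields.

```python
def get_url_list_safe(json_text):
    """Like get_url_list, but skips categories missing from the response."""
    url_dict = {}
    cat_map = (json_text.get('searchVO') or {}).get('catMap') or {}
    for category in ('gongwen', 'otherfile'):
        # A missing category or a null listVO is treated as an empty list
        for each in (cat_map.get(category) or {}).get('listVO') or []:
            url_dict[each['url']] = each['title'].replace('<em>', '').replace('</em>', '')
    return url_dict


# Hypothetical sample that only mimics the nesting read above
sample = {
    'searchVO': {
        'catMap': {
            'gongwen': {'listVO': [
                {'url': 'http://example.com/a.htm', 'title': '关于<em>房地产</em>的通知'},
            ]},
            # 'otherfile' deliberately left out
        }
    }
}
print(get_url_list_safe(sample))
# {'http://example.com/a.htm': '关于房地产的通知'}
```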

Get all links

```python
# Get all the links
def get_all_link(key):
    # print(key)
    # Encode the keyword
    key = str(key.encode()).replace(r'\x', '%').upper()[2:-1]
    print(key)
    # Dict that collects the results (url -> title)
    url_dict = dict()

    # Get the number of result pages.
    # Note! The page-count code is correct, but the loop below may still raise an error:
    # each article is saved as a .txt file named after its title, and titles can
    # contain characters that are illegal in file names.
    page_text = get_html('http://sousuo.gov.cn/s.htm?t=zhengce&q=' + key)
    # print(page_text)
    soup = BeautifulSoup(page_text, 'lxml')
    last_page = soup.find_all('a', attrs={'class': 'lastPage'})
    # print(type(last_page[0]))
    page = int(last_page[0].get('page'))
    # print(type(page))
    print(page)

    # Number of pages to crawl: 3 as a test; use page once you are sure the titles save without errors
    for i in range(3):
        # URL of the JSON data interface for page i
        url = 'http://sousuo.gov.cn/data?t=zhengce&q='+key+'&timetype=timeqb&mintime=&maxtime=&sort=&sortType=1&searchfield=title&pcodeJiguan=&childtype=&subchildtype=&tsbq=&pubtimeyear=&puborg=&pcodeYear=&pcodeNum=&filetype=&p='+str(i)+'&n=5&inpro='
        json_text = get_json(url)
        print(json_text)
        dic = get_url_list(json_text)
        if not dic:
            print("none")
            break
        url_dict.update(dic)
    return url_dict
```
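The long data URL can also be assembled from a dictionary with `urllib.parse.urlencode`, which percent-encodes the keyword as well. A sketch, assuming the raw (unencoded) keyword is passed in: `build_data_url` is a hypothetical helper, and the parameter names are copied from the URL above, while their meaning (for example `p` as page index and `n` as results per page) is only inferred. With requests, passing the same dictionary as `requests.get(base_url, params=params)` should produce the same query string.

```python
from urllib.parse import urlencode


def build_data_url(key, page_index, page_size=5):
    # Parameter names and order copied from the data URL in get_all_link;
    # their meaning is inferred, not documented
    params = {
        't': 'zhengce', 'q': key, 'timetype': 'timeqb', 'mintime': '', 'maxtime': '',
        'sort': '', 'sortType': 1, 'searchfield': 'title', 'pcodeJiguan': '',
        'childtype': '', 'subchildtype': '', 'tsbq': '', 'pubtimeyear': '',
        'puborg': '', 'pcodeYear': '', 'pcodeNum': '', 'filetype': '',
        'p': page_index, 'n': page_size, 'inpro': '',
    }
    # urlencode percent-encodes every value, including the Chinese keyword
    return 'http://sousuo.gov.cn/data?' + urlencode(params)


print(build_data_url('房地产', 0))
```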

Save the file

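```python
# Save the file
def save_file(url, title, key):
    print(url)
    text = get_html(url)
    soup = BeautifulSoup(text, 'lxml')
    # The title is used as the file name; illegal characters in it can raise an error here
    with open(f'../file/{key}/{title}.txt', 'w', encoding='utf-8') as fp:
        for each in soup.find_all('p'):
            # .string is None when a <p> has more than one child node, so such paragraphs are skipped
            if each.string:
                print(each.string)
                fp.write(each.string + '\n')  # one paragraph per line
    print("saved")
```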
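A minimal sketch of the saving step on its own, using `pathlib`: it creates the keyword folder if needed and writes one paragraph per line. `save_paragraphs` is a hypothetical helper that takes the paragraph strings directly, so it can be tried without network access; the example writes into a temporary directory instead of `../file/`.

```python
import tempfile
from pathlib import Path


def save_paragraphs(base_dir, key, title, paragraphs):
    """Write paragraphs to <base_dir>/<key>/<title>.txt, one per line."""
    folder = Path(base_dir) / key
    folder.mkdir(parents=True, exist_ok=True)  # same role as os.makedirs in main()
    path = folder / f'{title}.txt'
    path.write_text(''.join(p + '\n' for p in paragraphs), encoding='utf-8')
    return path


with tempfile.TemporaryDirectory() as tmp:
    saved = save_paragraphs(tmp, '房地产', '示例标题', ['第一段', '第二段'])
    saved_text = saved.read_text(encoding='utf-8')
    saved_name = saved.name

print(saved_name)        # 示例标题.txt
print(repr(saved_text))  # '第一段\n第二段\n'
```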

Full code

```python
import os

import lxml  # parser backend for BeautifulSoup(..., 'lxml'); must be installed
import requests
from bs4 import BeautifulSoup

# Define the request header
headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.190 Safari/537.36 FS"}


def get_key_url(key):
    url = 'http://sousuo.gov.cn/s.htm?t=zhengce&q=' + str(key.encode()).replace(r'\x', '%').upper()[2:-1]
    return url


# Fetch the HTML returned by a URL
def get_html(url):
    r = requests.get(url=url, headers=headers)
    r.encoding = r.apparent_encoding
    # print("status:", r.status_code)
    return r.text


# Fetch the JSON returned by a URL
def get_json(url):
    r = requests.get(url=url, headers=headers)
    r.encoding = r.apparent_encoding
    # print("status:", r.status_code)
    return r.json()


# Extract the relevant entries from the JSON data into the url_dict dictionary
def get_url_list(json_text):
    url_dict = dict()
    for each in json_text['searchVO']['catMap']['gongwen']['listVO']:
        url_dict[each['url']] = each['title'].replace('<em>', '').replace('</em>', '')
    for each in json_text['searchVO']['catMap']['otherfile']['listVO']:
        url_dict[each['url']] = each['title'].replace('<em>', '').replace('</em>', '')
    return url_dict


# Get all the links
def get_all_link(key):
    key = str(key.encode()).replace(r'\x', '%').upper()[2:-1]
    print(key)
    url_dict = dict()
    # Get the number of result pages for the keyword
    page_text = get_html('http://sousuo.gov.cn/s.htm?t=zhengce&q=' + key)
    soup = BeautifulSoup(page_text, 'lxml')
    last_page = soup.find_all('a', attrs={'class': 'lastPage'})
    page = int(last_page[0].get('page'))
    print(page)
    for i in range(page):
        url = 'http://sousuo.gov.cn/data?t=zhengce&q='+key+'&timetype=timeqb&mintime=&maxtime=&sort=&sortType=1&searchfield=title&pcodeJiguan=&childtype=&subchildtype=&tsbq=&pubtimeyear=&puborg=&pcodeYear=&pcodeNum=&filetype=&p='+str(i)+'&n=5&inpro='
        json_text = get_json(url)
        print(json_text)
        dic = get_url_list(json_text)
        if not dic:
            print("none")
            break
        url_dict.update(dic)
    return url_dict


# Save the file
def save_file(url, title, key):
    print(url)
    text = get_html(url)
    soup = BeautifulSoup(text, 'lxml')
    with open(f'../file/{key}/{title}.txt', 'w', encoding='utf-8') as fp:
        for each in soup.find_all('p'):
            if each.string:
                print(each.string)
                fp.write(each.string + '\n')
    print("saved")


def main():
    key = input("Enter a search keyword: ")
    # Create the output folder ../file/<keyword>/ if it does not exist yet
    if not os.path.exists(f'../file/{key}/'):
        os.makedirs(f'../file/{key}/')
    url_dict = get_all_link(key)
    for url in url_dict:
        save_file(url, url_dict[url], key)


if __name__ == '__main__':
    main()
```
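One fragile spot in get_all_link is `last_page[0]`: if the search page contains no `<a class="lastPage">` link (presumably when there are no results or only one page of them; this is an assumption), it raises `IndexError`. A hedged sketch of a fallback, written with the standard library's `html.parser` instead of BeautifulSoup so it runs without extra packages; `LastPageParser`, `get_page_count` and the default of 1 are hypothetical choices.

```python
from html.parser import HTMLParser


class LastPageParser(HTMLParser):
    """Collects the `page` attribute of every <a class="lastPage"> link."""

    def __init__(self):
        super().__init__()
        self.pages = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # class may hold several names, e.g. class="next lastPage"
        if tag == 'a' and 'lastPage' in (attrs.get('class') or '').split():
            self.pages.append(attrs.get('page'))


def get_page_count(html, default=1):
    parser = LastPageParser()
    parser.feed(html)
    parser.close()
    for value in parser.pages:
        if value and value.isdigit():
            return int(value)
    return default  # no usable lastPage link found


print(get_page_count('<div><a class="lastPage" page="12">尾页</a></div>'))  # 12
print(get_page_count('<p>no results</p>'))                                  # 1
```

Inside get_all_link, `page = get_page_count(page_text)` could then stand in for the three lines that find and parse `last_page`.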

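Working through this taught me the value of breakpoint debugging and of printing intermediate output as I go: while debugging I can see which step goes wrong and fix it right away.

This was my first hands-on crawler. I got it working in one afternoon, but digging into the logic behind the code and what each part really does still matters a great deal. I hope to practice writing crawlers more and to keep notes analyzing the steps and logic of Python scraping.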

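Improvements

save_file fails when an article title is not a valid file name, so we can define a function that replaces the illegal characters in the scraped title before saving. The characters below are the ones Windows forbids in file names (\ / : * ? " < > |); "/" is also forbidden on Linux and macOS because it is the path separator.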

```python
# Replace every character that is illegal in a file name with a comma
def filename_is_leagal(filename):
    unstr = ['?', '<', '>', '"', ':', '|', '/', '\\', '*']
    for i in unstr:
        if i in filename:
            filename = filename.replace(i, ',')
    return filename
```
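A slightly broader sketch of the same idea using one regular expression. Besides the characters above, it also replaces ASCII control characters, trims trailing spaces and dots (Windows does not allow a name to end with either), caps the length, and falls back to a placeholder for empty results. `safe_filename`, the 100-character cap and `'untitled'` are hypothetical choices, not from the original.

```python
import re

# Characters Windows forbids in file names, plus ASCII control characters
ILLEGAL_CHARS = re.compile(r'[\\/:*?"<>|\x00-\x1f]')


def safe_filename(title, replacement=',', max_len=100):
    # Replace every illegal character in one pass
    name = ILLEGAL_CHARS.sub(replacement, title)
    # Cap the length first (100 is an arbitrary, hypothetical limit)
    name = name[:max_len]
    # Then trim whitespace and any trailing dots or spaces
    name = name.strip().rstrip('. ')
    return name or 'untitled'


print(safe_filename('a/b:c?'))                  # a,b,c,
print(safe_filename('关于 "房地产" 的通知. '))  # 关于 ,房地产, 的通知
print(safe_filename('...'))                     # untitled
```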

Then call it when saving the file:

```python
# Save the file
def save_file(url, title, key):
    print(url)
    text = get_html(url)
    title = filename_is_leagal(title)  # replace illegal characters in the title
    soup = BeautifulSoup(text, 'lxml')
    with open(f'../file/{key}/{title}.txt', 'w', encoding='utf-8') as fp:
        for each in soup.find_all('p'):
            if each.string:
                print(each.string)
                fp.write(each.string + '\n')  # one paragraph per line
    print("saved")
```