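This program has been tested and runs stably: it shows a prompt when there is no network connection and crawls when the network is available. Each run fetches at most 50 pages, and you choose how many you need. Its main weakness is that the code is long and hard to follow; I suspect I am the only one who can read it comfortably, so please bear with my limited skill. I wrote it to practice a complete, practical application. The interface is fairly simple and friendly, and exceptions are handled throughout the program. There are certainly still rough edges, and I welcome criticism and corrections from fellow developers.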
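The offline prompt comes down to probing a known URL before any crawling starts. The following is a minimal sketch of such a probe, not the program's own code: `is_online` is a hypothetical helper, and it assumes the standard library's `urllib` in place of `requests` so that it runs without extra packages.

```python
import http.client
import urllib.request


def is_online(url, timeout=5.0):
    """Return True if `url` answers with HTTP 200 within `timeout` seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, ValueError, http.client.HTTPException):
        # URLError, HTTPError and socket timeouts are OSError subclasses;
        # ValueError covers a malformed URL.
        return False
```

A caller would run this once before starting the crawl and show the "check your network" message when it returns `False`.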

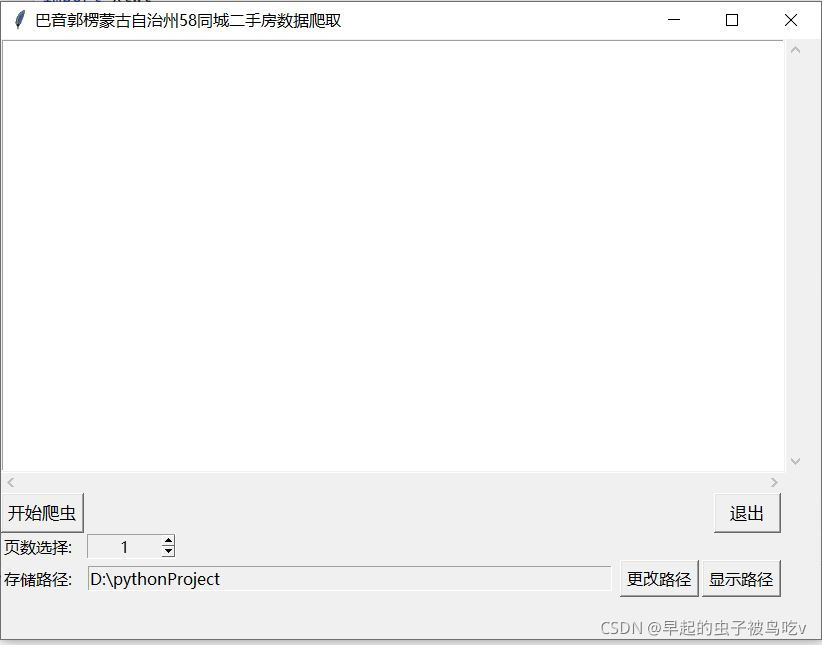

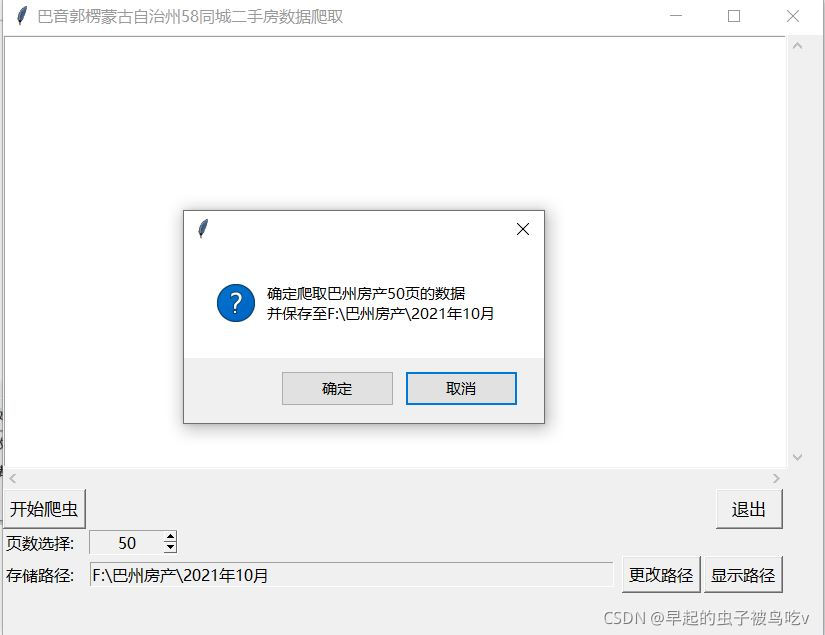

The interface is shown in the screenshots above.

The code is as follows (the proxy IP API is not included):

import os
import random
import threading
import time
import tkinter.messagebox  # message-box dialogs; this also binds the name `tkinter`
from tkinter import *
from tkinter.filedialog import askdirectory

import requests
import xlwt
from lxml import html
from retry import retry

root = Tk()

# Page count chosen in the Spinbox (1 to 50)
xVariable = tkinter.IntVar()

# ---- Save-path selection ----

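def selectPath():
    """Let the user pick a folder and store it as the save path."""
    path_ = askdirectory(title="Select a folder")
    if path_:  # an empty string means the dialog was cancelled: keep the old path
        # askdirectory returns forward slashes; Windows paths use backslashes
        path.set(path_.replace("/", "\\"))


def openPath():
    """Open the save folder in Windows Explorer."""
    os.startfile(path.get())  # Windows only; also copes with spaces in the path


path = StringVar()
path.set(os.path.abspath("."))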

# Log window with a vertical and a horizontal scrollbar
text = Listbox(root, font=('微软雅黑', 15), width=52, height=13)
text.grid(row=1, columnspan=6)
sc = Scrollbar(root, command=text.yview)
sc.grid(row=1, column=7, sticky=S + E + N)
sc1 = Scrollbar(root, orient="horizontal", command=text.xview)
sc1.grid(row=2, columnspan=6, sticky=EW)
text.config(yscrollcommand=sc.set, xscrollcommand=sc1.set)

head = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36",
    "accept": "application/json, text/plain, */*", "accept-encoding": "gzip, deflate, br",
    "accept-language": "zh-CN,zh;q=0.9"}

# XPath union that collects every text field of every listing, in document order
LISTING_XPATH = (
    "//div[@class='property-content-title']/h3/text()"
    "|//p[@class='property-content-info-comm-name']/text()"
    "|//p[@class='property-content-info-comm-address']//span/text()"
    "|//span[@class='property-price-total-num']/text()"
    "|//p[@class='property-price-average']/text()"
    "|//p[@class='property-content-info-text'][1]/text()"
    "|//p[@class='property-content-info-text property-content-info-attribute']//span//text()"
)


def log(message):
    """Append a line to the log window and scroll to it."""
    text.insert(END, str(message))
    text.see(END)
    text.update()


def get_ip():
    """Fetch proxy addresses from the proxy API and return them as a list."""
    url1 = 'proxy IP API address, removed here...'
    response = requests.get(url1, timeout=10)
    response.raise_for_status()
    # An empty body or a JSON object (starting with "{") means no IP was
    # returned: wait and ask again
    while not response.text or response.text.startswith('{'):
        time.sleep(2)
        response = requests.get(url1, timeout=10)
    # The body holds one address per line; split it into a list
    return response.text.split()


@retry(tries=4, delay=1, backoff=1, jitter=0, max_delay=1)
def my_request(url):
    """Request `url` through a proxy; on failure, try once more with a new proxy."""
    try:
        proxy = {'https': get_ip()[0]}
        log(proxy)
        r = requests.get(url, headers=head, proxies=proxy, verify=False, timeout=5)
    except Exception:  # deliberately broad; narrow it as needed
        log("Request failed, retrying")
        proxy = {'https': get_ip()[0]}
        log(proxy)
        r = requests.get(url, headers=head, proxies=proxy, verify=False, timeout=5)
    r.encoding = 'utf-8'
    return r


def parse_fields(response):
    """Return every listing field on the page as a flat list of strings."""
    tree = html.fromstring(response.text)
    return [str(x) for x in tree.xpath(LISTING_XPATH)]


def download():
    """Crawl the selected number of pages and save the result as an .xls file."""
    # Connectivity check: report and stop when the network is down
    try:
        online = requests.get("http://www.xiongmaodaili.cn/", timeout=10).status_code == 200
    except requests.RequestException:
        online = False
    if not online:
        log("Network error, please check the connection and retry")
        return

    # Lock the controls while the crawl is running
    start_label = button["text"]
    lujing["state"] = DISABLED
    spbox["state"] = DISABLED
    button["text"] = "Crawling"
    button["state"] = DISABLED
    try:
        savapath = path.get()
        page = xVariable.get()

        work_book = xlwt.Workbook(encoding="utf-8")
        sheet = work_book.add_sheet("Bazhou second-hand housing")
        headers = ["Title", "Layout", "Size (㎡)", "Community", "Area 1", "Area 2",
                   "Address", "Total price (10,000 yuan)", "Unit price (yuan/㎡)"]
        for col, header in enumerate(headers):
            sheet.write(0, col, header)

        row_num = 1
        z = 0  # pages crawled successfully
        for i in range(1, page + 1):  # i is also the number of pages attempted so far
            url = "https://bygl.58.com/ershoufang/p" + str(i) + "/"
            log(url)
            try:
                list_title = parse_fields(my_request(url))
                if not list_title:
                    # An empty page usually means the proxy was rejected: retry once
                    log("Empty result, fetching a new proxy IP:")
                    time.sleep(random.random() * 2)
                    list_title = parse_fields(my_request(url))
                if not list_title:
                    raise ValueError("no listings found on the page")
            except Exception as e:
                log(e)
                log("Page " + str(i) + " failed!")
                log("--------------------------")
                continue

            z += 1
            log("Page " + str(i) + " crawled successfully")
            log("Success rate: {:.2%}".format(z / i))
            log("----------------------------")

            # Each listing contributes 14 consecutive strings; a trailing
            # incomplete group is skipped
            for j in range(0, len(list_title) - 13, 14):
                row = [
                    list_title[j],                       # title
                    "".join(list_title[j + 1:j + 7]),    # layout, stored as six fragments
                    list_title[j + 7].strip(),           # size
                    list_title[j + 8],                   # community name
                    list_title[j + 9],                   # area 1
                    list_title[j + 10],                  # area 2
                    list_title[j + 11],                  # address
                    list_title[j + 12],                  # total price
                    list_title[j + 13],                  # unit price
                ]
                for col, value in enumerate(row):
                    sheet.write(row_num, col, value)
                row_num += 1
            time.sleep(3)  # pause between pages

        file_name = os.path.join(savapath, time.strftime("%Y-%m-%d") + "_" + str(page) + "_pages.xls")
        # If the file already exists, append (1), (2), ... before the extension
        base, ext = os.path.splitext(file_name)
        k = 1
        while os.path.exists(file_name):
            file_name = base + "(" + str(k) + ")" + ext
            k += 1
        work_book.save(file_name)
        log("File saved: " + file_name)
        tkinter.messagebox.showinfo("Info", "File saved: " + file_name)
    except Exception as e:
        log("Crawl aborted: " + str(e))
    finally:
        # Re-enable the controls whether or not the crawl succeeded
        lujing["state"] = NORMAL
        button["text"] = start_label
        button["state"] = NORMAL
        spbox["state"] = "readonly"

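def spiderok():
    """Ask for confirmation, then start the crawl."""
    page = xVariable.get()
    message = "Crawl " + str(page) + " page(s) of Bazhou housing data\nand save to " + path.get()
    if tkinter.messagebox.askokcancel("", message, default="cancel"):
        download()


def thread_it(func):
    """Run `func` in a daemon thread so the window stays responsive."""
    t = threading.Thread(target=func, daemon=True)
    t.start()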

root.title("58.com second-hand housing crawler: Bayingolin Mongol Autonomous Prefecture")
root.geometry("820x600+600+230")


def tuichu():
    """Ask for confirmation before closing the window."""
    if tkinter.messagebox.askokcancel(title="Confirm", message="Are you sure you want to quit?", default="cancel"):
        root.quit()


root.protocol("WM_DELETE_WINDOW", tuichu)

button = Button(root, text="Start crawling", font=("微软雅黑", 10), command=lambda: thread_it(spiderok))
button.grid(row=3, sticky=W)
lb = Label(root, text="Pages:")
lb.grid(row=4, column=0, sticky=W)
spbox = Spinbox(root, from_=1, to=50, increment=1, justify="center", textvariable=xVariable, state="readonly", width=8)
spbox.grid(row=4, column=1, sticky=W)
button1 = Button(root, text="Quit", padx=10, font=("微软雅黑", 10), command=tuichu)
button1.grid(row=3, column=4, sticky=E)
Label(root, text="Save path:").grid(row=5, column=0, sticky=W)
Entry(root, textvariable=path, state="readonly").grid(row=5, column=1, ipadx=170)
lujing = Button(root, text="Change path", command=selectPath)
lujing.grid(row=5, column=3, sticky=E)
Button(root, text="Open folder", command=openPath).grid(row=5, column=4, sticky=E)

root.update()
root.mainloop()
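
Two pieces of the listing can be isolated and tested without a network or a GUI: grouping the flat XPath result into one record per listing, and picking a file name that does not overwrite an earlier export. The sketch below is one possible way to do that, not the program's own code. `group_listings` and `unique_path` are hypothetical helper names, the dictionary keys are illustrative, and the layout of 14 strings per listing is taken from the index arithmetic in the code above.

```python
import os
import tempfile

FIELDS_PER_LISTING = 14  # assumption: each listing yields exactly 14 strings


def group_listings(fields, size=FIELDS_PER_LISTING):
    """Split a flat list of strings into one dict per listing.

    A trailing group with fewer than `size` items is skipped rather than
    raising IndexError.
    """
    rows = []
    for start in range(0, len(fields) - size + 1, size):
        chunk = fields[start:start + size]
        rows.append({
            "title": chunk[0],
            "house_type": "".join(chunk[1:7]),  # six fragments joined together
            "size": chunk[7].strip(),
            "community": chunk[8],
            "area1": chunk[9],
            "area2": chunk[10],
            "address": chunk[11],
            "total_price": chunk[12],
            "unit_price": chunk[13],
        })
    return rows


def unique_path(path):
    """Return `path`, or `path` with (1), (2), ... before the extension if it is taken."""
    base, ext = os.path.splitext(path)
    candidate, k = path, 1
    while os.path.exists(candidate):
        candidate = f"{base}({k}){ext}"
        k += 1
    return candidate


if __name__ == "__main__":
    demo = [f"f{i}" for i in range(30)]  # two full listings plus two stray fields
    print(len(group_listings(demo)))
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "data.xls")
        open(target, "w").close()  # simulate an earlier export
        print(os.path.basename(unique_path(target)))
```

Using `os.path.splitext` rather than splitting on the first dot keeps the helper working when the chosen folder name itself contains a dot.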