需求

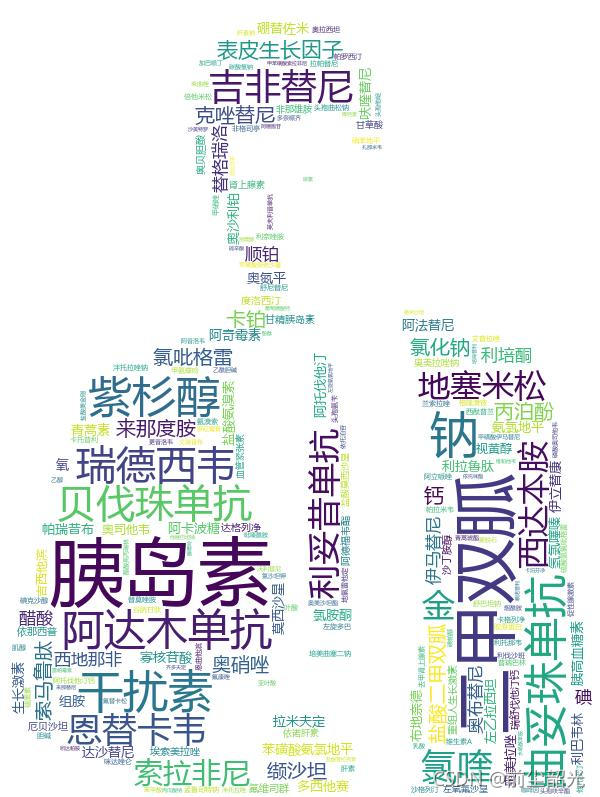

现有爬虫获取的sql文件,要求根据指定药品在文件中的出现次数制作词云,分析出未来的热门药物

基本思路

从文件中读取出所有药物的名称,将其作为字典的key值存入字典ciyun,按行读入,初始所有value为0,这里需要去除其中的每行的换行符

通过结巴分词库进行分词,这里需要引入药物的名称文件作为分词关键字,通过循环按行进行分词,判断该词是否在字典ciyun的key中,如果存在,value+1,通过wordcloud库制作词云即可

from wordcloud import WordCloud

import jieba

import wordcloud

import numpy as np

from os import path

import matplotlib.pyplot as plt

import PIL.Image as Image

ciyun = {}#词云

with open("medicine.txt", encoding="utf-8") as file:

for line in file.readlines():

li=list(line)

li[-1]=''

line=''.join(li)

ciyun[line]=0

with open("new_data1.txt", "r", encoding="utf-8") as file:

for line in file.readlines(): # 读取每行

jieba.load_userdict("medicine.txt") # 加载自定义词典

poss = jieba.cut(line) # 分词并返回该词词性

for w in poss:

if(w!="\n"):

if w in ciyun.keys():

w=w.replace('\n', '').replace('\r', '')

w=w.strip()

ciyun[w]+=1

Mask = np.array(Image.open(path.join('backgroundimage.png')))

w = wordcloud.WordCloud( font_path = "msyh.ttc", mask = Mask, \

width = 1000, height = 700, background_color = "white", \

).fit_words(ciyun)

plt.imshow(w,interpolation='bilinear')

plt.axis('off')

w.to_file(r'new.jpg')

plt.show()

效果如下: