Crawler workflow: preparation → fetch the web pages and get the data (the core step) → parse the content → save the data
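The four stages map onto four functions. The sketch below shows that shape using only the standard library. It is not the author's code: `page_urls` and `run_pipeline` are hypothetical names, and the lambdas in the usage lines stand in for the real `askURL`, parsing and saving code shown later. The page arithmetic follows the Douban example: 25 movies per page, so 10 pages cover the Top 250.

```python
from typing import Callable, Iterable, List


def page_urls(baseurl: str, pages: int = 10, per_page: int = 25) -> List[str]:
    # Page i starts at item i * per_page (Douban Top250: 0, 25, ..., 225)
    return [baseurl + str(i * per_page) for i in range(pages)]


def run_pipeline(urls: Iterable[str],
                 fetch: Callable[[str], str],
                 parse: Callable[[str], List[list]],
                 save: Callable[[List[list]], None]) -> int:
    """Fetch every URL, parse each page into records, then save them all."""
    records: List[list] = []
    for url in urls:
        html = fetch(url)             # fetch the page (core step)
        records.extend(parse(html))   # parse it into a list of records
    save(records)                     # save everything in one go
    return len(records)


# Usage with stand-in steps: each fake "page" parses into one record
urls = page_urls("https://movie.douban.com/top250?start=")
saved: List[list] = []
count = run_pipeline(urls, fetch=lambda u: u, parse=lambda html: [[html]], save=saved.extend)
```

Because the steps are passed in as functions, you can test the crawl loop without any network access.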

Parsing the page content: use BeautifulSoup to locate the specific tags, then use regular expressions to extract the exact content.
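In the crawler, BeautifulSoup narrows the page down to one `<div class="item">` block per movie, and the regular expressions then extract the individual fields. The sketch below covers only that second step, so it runs with the standard library alone. The patterns are copied from the listing. The HTML snippet is hypothetical, hand-written to match the patterns, and the real page markup may differ.

```python
import re

# Patterns copied from the Douban listing
findLink = re.compile(r'<a href="(.*?)">')
findTitle = re.compile(r'<span class="title">(.*)</span>')
findRating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')
findJudge = re.compile(r'<span>(\d*)人评价</span>')

# Hypothetical markup for one movie block; in the crawler this string is
# str(item) for one <div class="item"> found by BeautifulSoup
item = '''<div class="item">
<a href="https://movie.douban.com/subject/0000000/">
<span class="title">中文片名</span>
<span class="title">&nbsp;/&nbsp;Foreign Title</span>
<span class="rating_num" property="v:average">9.0</span>
<span>12345人评价</span>
</div>'''

link = re.findall(findLink, item)[0]       # first (only) detail link
titles = re.findall(findTitle, item)       # one entry per title span
rating = re.findall(findRating, item)[0]
judge = re.findall(findJudge, item)[0]     # digits before "人评价" ("people rated")
```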

Start by importing the libraries the crawler needs; this is the preparation step.

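The regular expressions (the variables whose names start with "find") filter out the information we want; they are built with Python's re library.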
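Two details of these patterns matter in practice. First, `(.*?)` (non-greedy) stops at the first place the rest of the pattern can match, while `(.*)` (greedy) runs on to the last one. Second, the `re.S` flag lets `.` match newlines, which a multi-line block such as the related-info paragraph needs. The demonstration below uses a made-up HTML string:

```python
import re

# Made-up HTML whose first paragraph spans two lines
html = '<p class="">Director: A<br/>\n1994 / USA</p><p class="">second</p>'

# Non-greedy (.*?) stops at the first "</p>"; re.S lets "." cross the newline
lazy = re.findall(r'<p class="">(.*?)</p>', html, re.S)

# Greedy (.*) keeps going until the last "</p>" in the string
greedy = re.findall(r'<p class="">(.*)</p>', html, re.S)

# Without re.S, "." cannot cross the newline, so the first paragraph is skipped
no_dotall = re.findall(r'<p class="">(.*?)</p>', html)

# Replacing the <br/> (and any whitespace after it) with a space joins the lines
cleaned = re.sub(r'<br(\s+)?/>(\s+)?', " ", lazy[0])
```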

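The example below scrapes the movie detail link, poster image link, Chinese title, foreign title, rating, number of ratings, one-line summary, and related information.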
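Every movie ends up as a list of exactly eight values, in the order listed above. This is also the column order of the movie250 table used later. The foreign title and the one-line summary are not always present, so the code fills them with a blank.

The sketch below shows that normalization. The names `clean_titles` and `clean_inq` and all sample values are hypothetical. The `\xa0` characters in the demo assume that the HTML parser has already decoded the `&nbsp;` around the "/" into non-breaking spaces.

```python
# Column names as in the movie250 table created later (spelling kept from the code)
FIELDS = ("info_link", "pic_link", "cname", "ename",
          "score", "rated", "instroduction", "info")


def clean_titles(titles):
    # Two title spans: the Chinese title, then "/ foreign title"
    if len(titles) == 2:
        # drop the "/" separator; str.strip() also removes non-breaking spaces
        return titles[0], titles[1].replace("/", "").strip()
    # Only a Chinese title: keep a blank placeholder
    return titles[0], " "


def clean_inq(inq):
    # The one-line summary is optional; drop the Chinese full stop if present
    return inq[0].replace("。", "") if inq else " "


cname, ename = clean_titles(["中文片名", "\xa0/\xa0Foreign Title"])
record = ["https://movie.douban.com/subject/0000000/", "https://img.example/p.jpg",
          cname, ename, "9.0", "12345", clean_inq([]), "related info"]
row = dict(zip(FIELDS, record))
```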

The code is as follows:

# -*- coding: utf-8 -*-

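from bs4 import BeautifulSoup  # parse web pages and extract data
import re  # regular expressions for text matching
import urllib.request, urllib.error  # build the URL request and fetch page data
import sqlite3  # SQLite database operations and data export (pandas is an alternative: import pandas as pd)
import xlwt  # Excel operations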

findLink = re.compile(r'<a href="(.*?)">')  # compiled regex object: the rule for a movie's detail link
findImgSrc = re.compile(r'<img.*src="(.*?)"', re.S)  # poster image link (re.S lets "." match newlines)
findTitle = re.compile(r'<span class="title">(.*)</span>')  # title(s)
findRating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')  # rating
findJudge = re.compile(r'<span>(\d*)人评价</span>')  # number of ratings ("人评价" = "people rated")
findInq = re.compile(r'<span class="inq">(.*)</span>')  # one-line summary
findBd = re.compile(r'<p class="">(.*?)</p>', re.S)  # related information

# Preparation

def main():
    baseurl = "https://movie.douban.com/top250?start="  # page to crawl, e.g. Douban
    datalist = getData(baseurl)  # holds the scraped movie information
    dbpath = "movie250.db"  # database file created in the current directory (name it as you like)
    saveData2DB(datalist, dbpath)  # save the data to the database

# The actual crawling process

def getData(baseurl):
    datalist = []  # holds the scraped movie information
    for i in range(0, 10):  # fetch 10 pages: the Top250 list shows only 25 movies per page
        url = baseurl + str(i * 25)
        html = askURL(url)  # source code of the fetched page
        # parse the data item by item with the regular expressions
        soup = BeautifulSoup(html, "html.parser")
        for item in soup.find_all('div', class_="item"):  # every movie block on the page
            data = []  # all information for one movie
            item = str(item)
            link = re.findall(findLink, item)[0]  # detail link, found with the regex
            data.append(link)
            imgSrc = re.findall(findImgSrc, item)[0]
            data.append(imgSrc)
            titles = re.findall(findTitle, item)
            if len(titles) == 2:
                ctitle = titles[0]  # Chinese title
                data.append(ctitle)
                otitle = titles[1].replace("/", "").strip()  # foreign title: drop the "/" separator and surrounding spaces
                data.append(otitle)
            else:
                data.append(titles[0])
                data.append(' ')  # no foreign title: leave it blank
            rating = re.findall(findRating, item)[0]
            data.append(rating)
            judgeNum = re.findall(findJudge, item)[0]
            data.append(judgeNum)
            inq = re.findall(findInq, item)
            if len(inq) != 0:
                inq = inq[0].replace("。", "")  # drop the Chinese full stop
                data.append(inq)
            else:
                data.append(" ")  # some movies have no summary
            bd = re.findall(findBd, item)[0]
            bd = re.sub(r'<br(\s+)?/>(\s+)?', " ", bd)  # replace line breaks with a space
            bd = re.sub('/', " ", bd)
            data.append(bd.strip())
            datalist.append(data)
    return datalist

# Fetch the page content of one given URL

def askURL(url):  # request one web page
    head = {  # mimic a browser's request headers when talking to the Douban server (a dict)
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.122 Safari/537.36"
    }
    # The User-Agent tells the Douban server what kind of machine and browser we are
    # (in effect, what kind of content we can accept). Without it, some sites
    # recognize the request as a crawler and return an error.
    request = urllib.request.Request(url, headers=head)
    html = ""
    try:
        response = urllib.request.urlopen(request)
        html = response.read().decode("utf-8")  # read the page and decode it as UTF-8 to avoid garbled text
    except urllib.error.URLError as e:
        if hasattr(e, "code"):
            print(e.code)
        if hasattr(e, "reason"):
            print(e.reason)
    return html

# Save the data to the database
def saveData2DB(datalist, dbpath):
    init_db(dbpath)
    conn = sqlite3.connect(dbpath)
    cur = conn.cursor()
    sql = '''
        insert into movie250(
        info_link, pic_link, cname, ename, score, rated, instroduction, info)
        values (?, ?, ?, ?, ?, ?, ?, ?)'''
    for data in datalist:
        # print(data)  # print the row, for testing
        cur.execute(sql, data)  # "?" placeholders let sqlite3 quote each value safely
    conn.commit()
    cur.close()
    conn.close()


def init_db(dbpath):
    sql = '''
        create table if not exists movie250(
        id integer primary key autoincrement,
        info_link text,
        pic_link text,
        cname varchar,
        ename varchar,
        score numeric,
        rated numeric,
        instroduction text,
        info text
        )
    '''  # create the table (skipped if it already exists)
    conn = sqlite3.connect(dbpath)
    cursor = conn.cursor()
    cursor.execute(sql)
    conn.commit()
    conn.close()

if __name__ == "__main__":  # when the program is run directly
    # call the functions
    main()
    print("Crawling finished!")
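Building SQL by pasting quoted strings together breaks as soon as a title or summary contains a double quote. sqlite3's `?` placeholders avoid this, because the driver quotes each value itself.

The sketch below shows the insert step on its own, run against an in-memory database so you can try it without crawling. The table definition mirrors init_db, `save_records` is a hypothetical name, and the sample row is made up. Note that the numeric columns turn the text "9.7" and "12345" into numbers, following SQLite's type-affinity rules.

```python
import sqlite3

CREATE = '''create table if not exists movie250(
    id integer primary key autoincrement,
    info_link text, pic_link text, cname varchar, ename varchar,
    score numeric, rated numeric, instroduction text, info text)'''

INSERT = '''insert into movie250
    (info_link, pic_link, cname, ename, score, rated, instroduction, info)
    values (?, ?, ?, ?, ?, ?, ?, ?)'''


def save_records(conn, datalist):
    # One statement, many rows; sqlite3 quotes every value itself
    conn.execute(CREATE)
    conn.executemany(INSERT, datalist)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database, nothing written to disk
save_records(conn, [[
    "https://movie.douban.com/subject/0000000/", "https://img.example/p.jpg",
    "中文片名", "Foreign Title", "9.7", "12345", 'He said "hi"', "related info"]])
row = conn.execute("select cname, score, rated, instroduction from movie250").fetchone()
```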

When saving the data to Excel instead:

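saveData(datalist, savepath)  # storage method: call this in main() to save to Excel instead of the database; savepath is the path of the .xls file to write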

# Save the data to a spreadsheet

def saveData(datalist, savepath):
    print("save.......")
    book = xlwt.Workbook(encoding="utf-8", style_compression=0)  # create the workbook object
    sheet = book.add_sheet('豆瓣电影Top250', cell_overwrite_ok=True)  # create the worksheet
    # column headers: detail link, image link, Chinese title, foreign title, rating, number of ratings, summary, related info
    col = ("电影详情链接", "图片链接", "影片中文名", "影片外国名", "评分", "评价数", "概况", "相关信息")
    for i in range(0, 8):
        sheet.write(0, i, col[i])  # header row
    # then write the records in datalist one row at a time
    for i, data in enumerate(datalist):
        # print("row %d" % (i + 1))  # print progress, for testing
        for j in range(0, 8):
            sheet.write(i + 1, j, data[j])  # data
    book.save(savepath)  # save the file

Scraping Eastmoney data:

Reference:

(https://blog.csdn.net/cainiao_python/article/details/115804583)

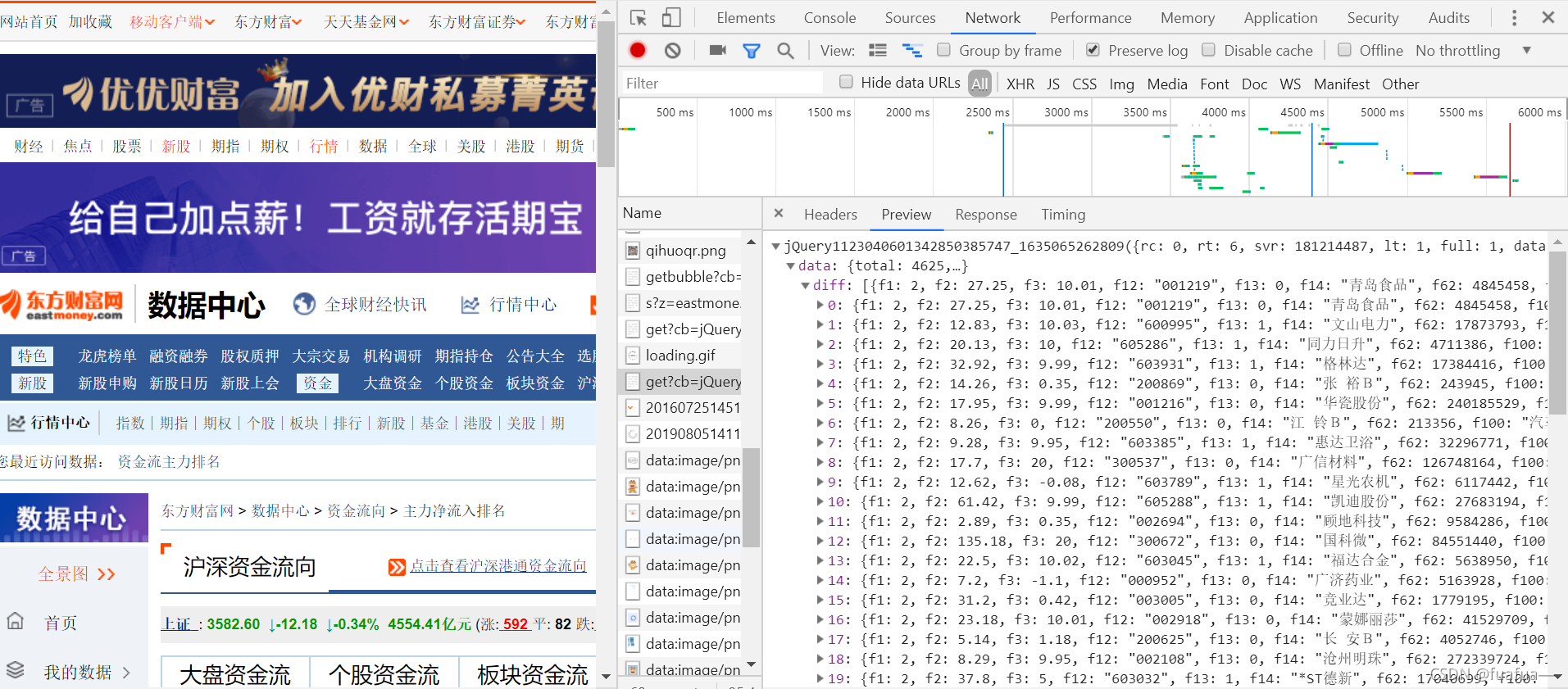

The goal is to scrape the data shown in the figure above.
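The data comes from a request to Eastmoney's clist/get endpoint, and the code below pages through it by changing `pn`. When `requests.get` receives a `params` tuple, it builds the query string for you. The standard library's `urlencode` shows the same idea.

The sketch uses a subset of the parameters from the code. `page_url` is a hypothetical helper, and the field list is shortened for illustration.

```python
from urllib.parse import urlencode

BASE_URL = "http://push2.eastmoney.com/api/qt/clist/get"  # endpoint from the request URL in the code


def page_url(page, page_size=50):
    # pn = page number, pz = page size; the other values are copied from the code
    params = (
        ("pn", str(page)),
        ("pz", str(page_size)),
        ("po", "1"),
        ("np", "1"),
        ("fltt", "2"),
        ("invt", "2"),
        ("fid", "f3"),
        ("fields", "f2,f3,f12,f14"),  # shortened field list, for illustration
    )
    return BASE_URL + "?" + urlencode(params)  # "," is percent-encoded as %2C


url = page_url(2)
```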

The complete code is as follows:

import re ?#正则表达式,进行文字匹配

import random

import requests

import pymysql

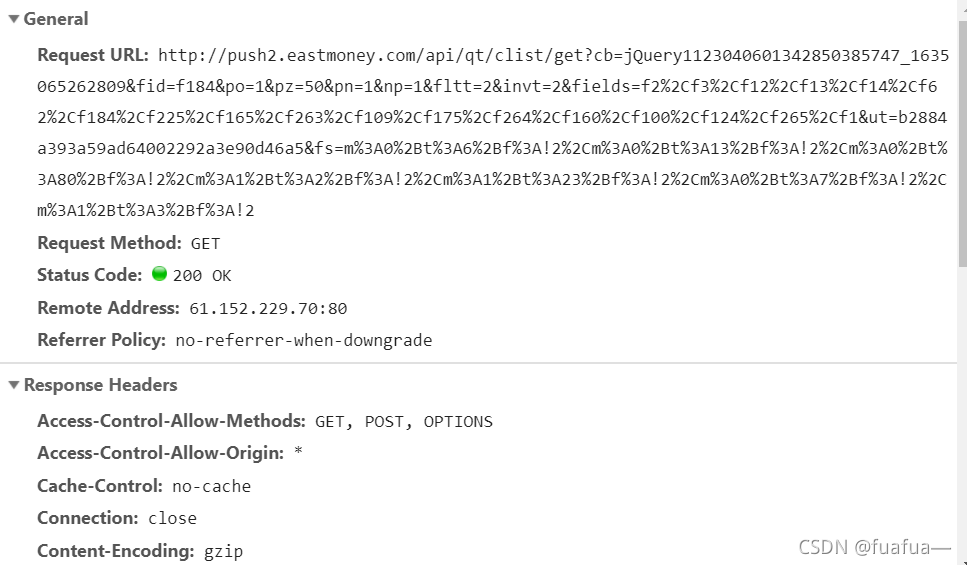

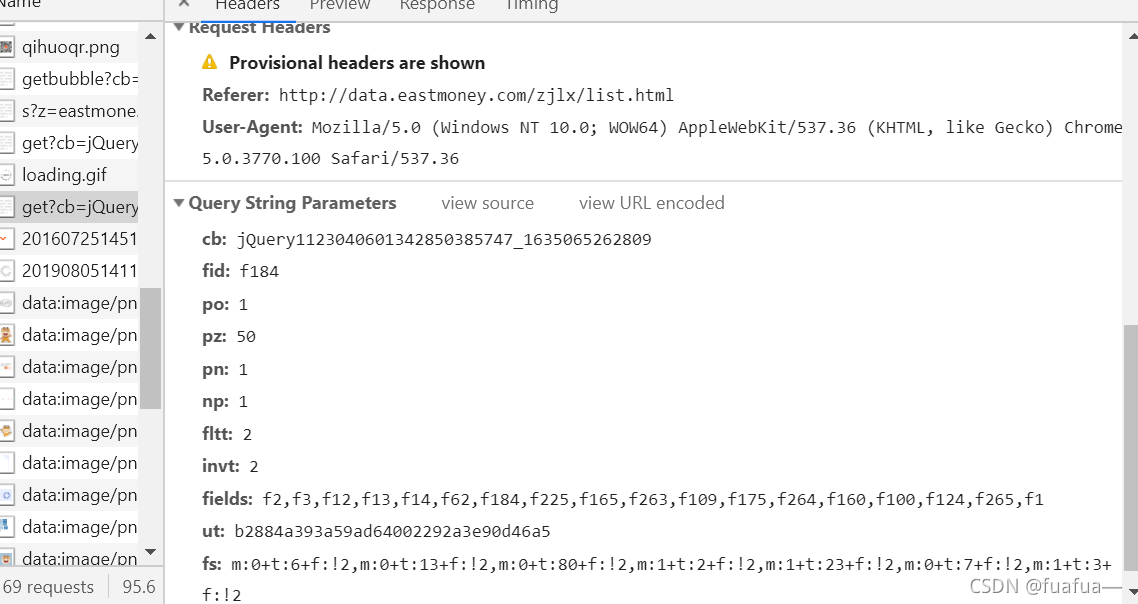

urls=r'http://push2.eastmoney.com/api/qt/clist/get?cb=jQuery112307026751684189851_1634985344914&fid=f184&po=1&pz=50&pn=1&np=1&fltt=2&invt=2&fields=f2%2Cf3%2Cf12%2Cf13%2Cf14%2Cf62%2Cf184%2Cf225%2Cf165%2Cf263%2Cf109%2Cf175%2Cf264%2Cf160%2Cf100%2Cf124%2Cf265%2Cf1&ut=b2884a393a59ad64002292a3e90d46a5&fs=m%3A0%2Bt%3A6%2Bf%3A!2%2Cm%3A0%2Bt%3A13%2Bf%3A!2%2Cm%3A0%2Bt%3A80%2Bf%3A!2%2Cm%3A1%2Bt%3A2%2Bf%3A!2%2Cm%3A1%2Bt%3A23%2Bf%3A!2%2Cm%3A0%2Bt%3A7%2Bf%3A!2%2Cm%3A1%2Bt%3A3%2Bf%

html = requests.get(urls) ?# 保存获取到的网页源码

html=html.text

headers = {

'Accept':'*/*',

'Accept-Encoding':'gzip',

'Accept-Language':'zh-CN,zh;q=0.9',

'Connection':'keep-alive',

'Cookie':'widget_dz_id=54511; widget_dz_cityValues=,; timeerror=1; defaultCityID=54511; defaultCityName=%u5317%u4EAC; Hm_lvt_a3f2879f6b3620a363bec646b7a8bcdd=1516245199; Hm_lpvt_a3f2879f6b3620a363bec646b7a8bcdd=1516245199; addFavorite=clicked',

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36'

}?#headers:装成豆瓣链接可识别的浏览器

?

?

def main():

????db=pymysql.connect(host='localhost',port=3306,user='root',password='123abc',database='test1',charset='utf8')

????cursor = db.cursor() #连接数据库

????sql='''create table df(

????????id int primary key not null,

????????daimas text not null,

????????names text not null,

????????zuixinjias float not null,

????????zhangdiefus float not null,

????????zhangdiees float not null,

????????chengjiaoliangs float not null,

????????chengjiaoes float not null,

????????zhenfus float not null,

????????zuigaos float not null,

????????zuidis float not null,

????????jinkais float not null,

????????zuoshous float not null,

????????liangbis float not null,

????????huanshoulvs float not null,

????????shiyinglvs float not null

????)

?????????''' ???#创建表格

?????????

????cursor.execute(sql)

????db.commit() ??????#提交数据库操作

????db.close() ???????#关闭数据库

???

for page in range(1,93):

????params = (

???????('cb', 'jQuery112307026751684189851_1634985344914'),

???????('pn', str(page)),

???????('pz', '50'),

???????('po', '1'),

???????('np', '1'),

???????('ut', 'b2884a393a59ad64002292a3e90d46a5'),

???????('fltt', '2'),

???????('invt', '2'),

???????('fid', 'f3'),

???????('fs', 'm:0+t:6+f:!2,m:0+t:13+f:!2,m:0+t:80+f:!2,m:1+t:2+f:!2,m:1+t:23+f:!2,m:0+t:7+f:!2,m:1+t:3+f:!2'),

???????('fields', 'f2,f3,f12,f13,f14,f62,f184,f225,f165,f263,f109,f175,f264,f160,f100,f124,f265,f1'),

???)

?

???

info = []

for url in urls:

????seconds = random.randint(3,6)

????response = requests.get(url, headers = headers).text

????daimas = re.findall('"f12":(.*?),',response)

????names = re.findall('"f14":"(.*?)"',response)

????zuixinjias = re.findall('"f2":(.*?),',response)

????zhangdiefus = re.findall('"f3":(.*?),',response)

????zhangdiees = re.findall('"f4":(.*?),',response)

????chengjiaoliangs = re.findall('"f5":(.*?),',response)

????chengjiaoes = re.findall('"f6":(.*?),',response)

????zhenfus = re.findall('"f7":(.*?),',response)

????zuigaos = re.findall('"f15":(.*?),',response)

????zuidis = re.findall('"f16":(.*?),',response)

????jinkais = re.findall('"f17":(.*?),',response)

????zuoshous = re.findall('"f18":(.*?),',response)

????liangbis = re.findall('"f10":(.*?),',response)

????huanshoulvs = re.findall('"f8":(.*?),',response)

????shiyinglvs = re.findall('"f9":(.*?),',response) ?#解析

????

????ls = []

????ls1 = []

????for i in range(20):

????????ls1.append(names[i])

????????ls1.append(names[i])

????????ls1.append(zuixinjias[i])

????????ls1.append(zhangdiefus[i])

????????ls1.append(zhangdiees[i])

????????ls1.append(chengjiaoliangs[i])

????????ls1.append(chengjiaoes[i])

????????ls1.append(zhenfus[i])

????????ls1.append(zuigaos[i])

????????ls1.append(zuidis[i])

????????ls1.append(jinkais[i])

????????ls1.append(zuoshous[i])

????????ls1.append(liangbis[i])

????????ls1.append(huanshoulvs[i])

????????ls1.append(shiyinglvs[i])

????????if len(ls1) == 15:

????????????ls.append(ls1)

????????????ls1 = [] #将内容写到之前创建好的数据库中

????????query = "insert into df values(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)"

????????for j in ls:

????????????cursor = db.cursor()

????????????db.ping(reconnect=True)

????????????cursor.execute(query, j)

????????db.commit()

????????db.close()

if __name__ == '__main__':

????main()

????print("爬取成功!")爬取完毕!
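The response is JSONP: a JSON object wrapped in the jQuery... callback named by the `cb` parameter. Instead of one regex per field, you can strip the wrapper and parse the body with the json module.

The sketch below assumes the records sit in `data.diff` as a list of objects. `unwrap_jsonp` is a hypothetical helper, and the sample string is made up to show the shape; it is not real market data. For comparison, the last line uses the same regex route as the code above.

```python
import json
import re


def unwrap_jsonp(text):
    # 'callback({...});' -> '{...}': keep what lies between the first "(" and the last ")"
    return text[text.index("(") + 1:text.rindex(")")]


# Hypothetical response (assumed shape, not real data)
sample = ('jQuery112307026751684189851_1634985344914({"rc":0,"data":{"total":2,"diff":['
          '{"f2":10.5,"f3":1.2,"f12":"000001","f14":"Stock A"},'
          '{"f2":"-","f3":"-","f12":"000002","f14":"Stock B"}]}});')

payload = json.loads(unwrap_jsonp(sample))
rows = [(d["f12"], d["f14"], d["f2"]) for d in payload["data"]["diff"]]

# The regex route: capture up to the next "," or "}" (quotes are kept)
prices = re.findall(r'"f2":([^,}]*)', sample)
```

The json route keeps each record's fields together, so a stock with a missing field cannot shift the values of the stocks after it, which can happen when the per-field regex lists are zipped by index.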