I. Principles

1. What is a web crawler

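- A crawler starts from the URLs of one or more initial (seed) pages, fetches those pages, and collects the URLs they contain.

- While fetching pages, it keeps extracting new URLs from the current page and putting them into a queue of pages still to visit.

- This continues until a stopping condition set by the system is met (for example, the queue is empty or a page limit is reached).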
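The three steps above amount to a breadth-first traversal driven by a URL queue. The sketch below illustrates that loop using only the standard library, under our own assumptions: `fetch` is a hypothetical hook that returns a page's HTML (so the example runs offline), the stop condition is a hypothetical `max_pages` limit or an empty queue, and the `example.com` site is made up. It is not part of the author's script, which requests a fixed range of page numbers instead of following links.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            for name, value in attrs:
                if name == 'href' and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl from start_url.

    fetch(url) is a hypothetical hook returning the page's HTML as a string.
    Stops when the queue is empty or max_pages pages have been fetched.
    """
    queue = deque([start_url])  # URLs waiting to be fetched
    seen = {start_url}          # URLs already queued, so none is fetched twice
    visited = []                # URLs actually fetched, in order
    while queue and len(visited) < max_pages:  # the stopping condition
        url = queue.popleft()
        html = fetch(url)
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Hypothetical in-memory "site" so the sketch runs without network access
site = {
    'http://example.com/': '<a href="/a">A</a> <a href="/b">B</a>',
    'http://example.com/a': '<a href="/b">B</a> <a href="/c">C</a>',
    'http://example.com/b': '',
    'http://example.com/c': '<a href="/">home</a>',
}
print(crawl('http://example.com/', lambda u: site.get(u, '')))
# ['http://example.com/', 'http://example.com/a', 'http://example.com/b', 'http://example.com/c']
```

The `seen` set is what keeps the crawler from looping forever on pages that link back to each other, such as `/c` linking to `/` here.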

II. Code

1. Install the required packages

- pip install requests

- pip install BeautifulSoup4

- pip install tqdm

- pip install html5lib

2. Code

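```python
import csv

import requests
from bs4 import BeautifulSoup
from tqdm import tqdm

# Pretend to be a browser; requests expects headers as a dict
Headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3741.400 QQBrowser/10.5.3863.400'
}

# CSV header row
csvHeaders = ['ID', 'Difficulty', 'Title', 'Acceptance rate', 'Accepted/Submissions']

# Problem rows collected from all pages
subjects = []

# Scrape the problems
print('Scraping problem information:\n')

# Pages 1..1; raise the upper bound to scrape more pages
for pages in tqdm(range(1, 1 + 1)):
    # Request the page; headers must be passed by keyword, since a second
    # positional argument to requests.get is treated as query parameters
    r = requests.get(f'http://www.51mxd.cn/problemset.php-page={pages}.htm',
                     headers=Headers, timeout=10)
    # Raise an exception if the server returned an error status
    r.raise_for_status()
    # Decode the response body as UTF-8
    r.encoding = 'utf-8'
    # Create a BeautifulSoup object using the html5lib parser
    soup = BeautifulSoup(r.text, 'html5lib')
    # Find all <td> cells on the page
    td = soup.find_all('td')
    subject = []
    for t in td:
        # Collect cell values one at a time; every 5 cells form one row
        if t.string is not None:
            subject.append(t.string.strip())
            if len(subject) == 5:
                subjects.append(subject)
                subject = []

# Save the problems; utf-8-sig lets Excel display non-ASCII titles correctly
with open('NYOJ_Subjects.csv', 'w', newline='', encoding='utf-8-sig') as file:
    fileWriter = csv.writer(file)
    fileWriter.writerow(csvHeaders)
    fileWriter.writerows(subjects)

print('\nFinished scraping problem information!')
```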

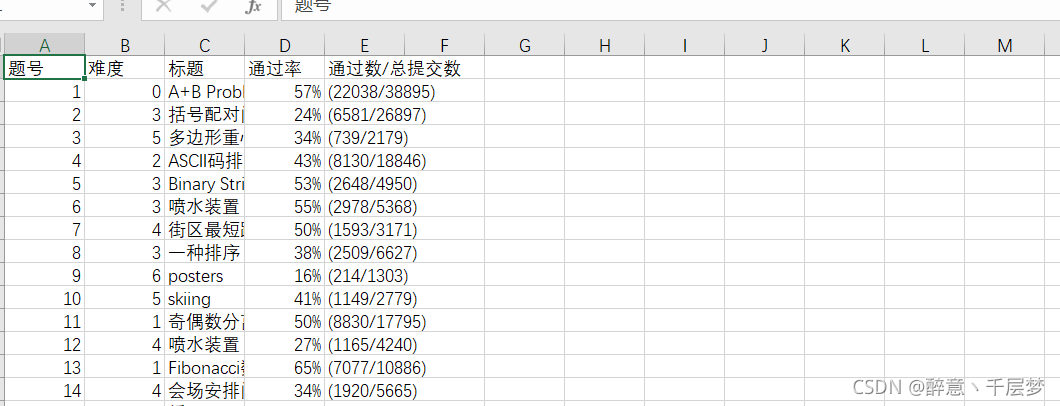
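The script builds rows by counting non-empty `td` cells in groups of five. That works only if every row has exactly five cells with a single text child: a cell whose `.string` is `None` (an empty cell, or one with several child nodes) is skipped, and every later row shifts by one column. A sturdier alternative, which is our own suggestion rather than the author's method, is to group cells by their enclosing `<tr>`. The sketch below uses only the standard library's `html.parser`; the class name `TableRowParser` and the sample table are hypothetical and not taken from the NYOJ site.

```python
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect the text of each <td> cell, grouped by its enclosing <tr>.

    <th> header cells are ignored, so a header row produces no output row.
    """

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the <tr> currently open, if any
        self._cell = None  # text fragments of the <td> currently open, if any

    def handle_starttag(self, tag, attrs):
        if tag == 'tr':
            self._row = []
            self._cell = None
        elif tag == 'td' and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag == 'td' and self._cell is not None:
            # Join text from nested tags such as <a>, then trim whitespace
            self._row.append(''.join(self._cell).strip())
            self._cell = None
        elif tag == 'tr' and self._row is not None:
            if self._row:  # skip rows without <td> cells (e.g. header rows)
                self.rows.append(self._row)
            self._row = None
            self._cell = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


# Hypothetical sample table; the second row has an empty Difficulty cell
sample = (
    '<table>'
    '<tr><th>ID</th><th>Title</th></tr>'
    '<tr><td>1</td><td>Easy</td><td><a href="#">A+B</a></td><td>50%</td><td>1/2</td></tr>'
    '<tr><td>2</td><td></td><td>Sum</td><td>25%</td><td>1/4</td></tr>'
    '</table>'
)
parser = TableRowParser()
parser.feed(sample)
parser.close()
print(parser.rows)
# [['1', 'Easy', 'A+B', '50%', '1/2'], ['2', '', 'Sum', '25%', '1/4']]
```

Assuming each problem on the real page occupies one `<tr>`, feeding `r.text` to this parser and extending `subjects` with `parser.rows` could stand in for the `td` loop, and the empty cell keeps its column instead of shifting the row.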

III. Results

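The data on the original web page is shown in the figure.

The final result, the generated NYOJ_Subjects.csv file, is shown in the figure.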
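Besides inspecting the file visually, the result can be checked by reading the CSV back. The helper below is a hypothetical addition, not part of the author's script, and assumes the file was written as UTF-8 (the `utf-8-sig` codec also strips a byte-order mark if one is present).

```python
import csv


def load_subjects(path='NYOJ_Subjects.csv'):
    """Read the scraped CSV back as a list of dicts keyed by the header row.

    Assumes UTF-8 encoding; 'utf-8-sig' also removes a leading BOM if present.
    """
    with open(path, newline='', encoding='utf-8-sig') as f:
        return list(csv.DictReader(f))
```

For example, `rows = load_subjects()` followed by `print(len(rows))` reports how many problems were saved, which can be compared against the count on the web page.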

IV. Summary

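A crawler can reduce manual work: instead of copying the problem list by hand, the script collects it into a CSV file automatically.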