URL: 百度安全验证 (Baidu security verification)

Import libraries

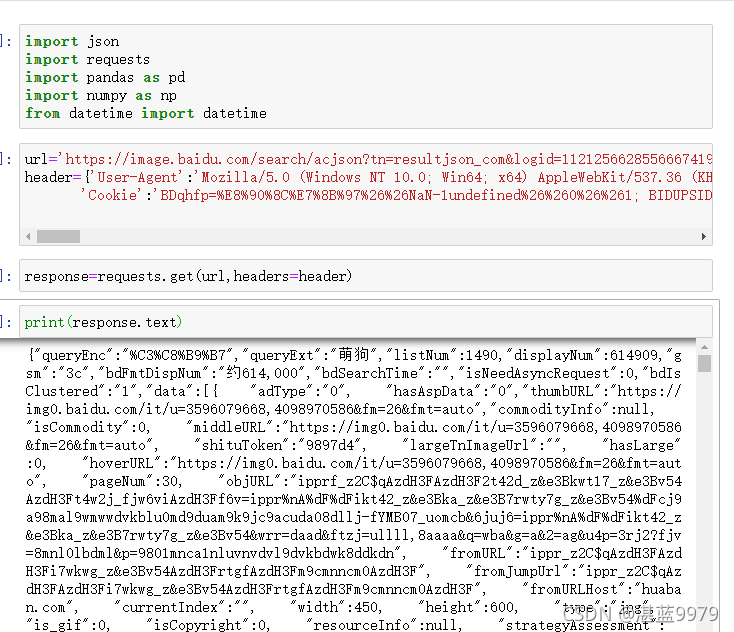

import json

import requests

import pandas as pd

import numpy as np

from datetime import datetime

Request the page

url='https://image.baidu.com/search/acjson?tn=resultjson_com&logid=11212566285566674193&ipn=rj&ct=201326592&is=&fp=result&fr=&word=%E8%90%8C%E7%8B%97&queryWord=%E8%90%8C%E7%8B%97&cl=2&lm=-1&ie=utf-8&oe=utf-8&adpicid=&st=&z=&ic=&hd=&latest=&copyright=&s=&se=&tab=&width=&height=&face=&istype=&qc=&nc=1&expermode=&nojc=&isAsync=&pn=30&rn=30&gsm=1e&1637206213111='
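Most of the long URL above is query string. The `word` and `queryWord` values are the percent-encoded search term 萌狗, and `pn` and `rn` appear to be the result offset and the page size. A minimal sketch of building such a URL from a dictionary follows. The helper name `build_search_url` and the reduced parameter set are assumptions for illustration; whether the endpoint accepts the shorter parameter list has not been verified here.

```python
from urllib.parse import urlencode

BASE = "https://image.baidu.com/search/acjson"


def build_search_url(word: str, pn: int = 0, rn: int = 30) -> str:
    """Build a search URL (hypothetical helper, reduced parameter set)."""
    params = {
        "tn": "resultjson_com",
        "ipn": "rj",
        "word": word,        # search term, percent-encoded by urlencode
        "queryWord": word,
        "ie": "utf-8",
        "oe": "utf-8",
        "pn": pn,            # assumed: offset of the first result
        "rn": rn,            # assumed: number of results per page
    }
    return f"{BASE}?{urlencode(params)}"


print(build_search_url("萌狗", pn=30))
```

Building the query from a dictionary keeps the search term readable and makes it harder to lose a character such as `&` when copying the URL by hand.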

header={'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.122 Safari/537.36',

'Cookie':'BDqhfp=%E8%90%8C%E7%8B%97%26%26NaN-1undefined%26%260%26%261; BIDUPSID=291138199359FAE35F45867F411AC08B; PSTM=1589631459; __yjs_duid=1_5618ae84156dc4b366fd86b35c5115201618118695816; BAIDUID=C3D5F87FF2F54CA3D5D485726A6E301E:FG=1; BDUSS=EpiM3UyZDdQTUU3a2dNYjdKSWxCcmxGdmZpMkVQbGQtSnJWTVZQZ0VSY0xqYXhoRVFBQUFBJCQAAAAAAAAAAAEAAAC0wI5LwLbDznnRrNGsAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAsAhWELAIVhe; BDUSS_BFESS=EpiM3UyZDdQTUU3a2dNYjdKSWxCcmxGdmZpMkVQbGQtSnJWTVZQZ0VSY0xqYXhoRVFBQUFBJCQAAAAAAAAAAAEAAAC0wI5LwLbDznnRrNGsAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAsAhWELAIVhe; indexPageSugList=%5B%22%E5%87%BA%E7%A7%9F%E8%BD%A6%E7%AB%96%E5%B1%8F%22%2C%22%E5%87%BA%E7%A7%9F%E8%BD%A6%22%2C%22%E5%82%80%E5%84%A1%22%2C%22%E6%81%90%E9%BE%99%22%5D; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; H_PS_PSSID=34441_35106_31254_35048_35096_34584_34518_34578_34606_34815_26350_34971_35113_35078; BAIDUID_BFESS=C3D5F87FF2F54CA3D5D485726A6E301E:FG=1; BDRCVFR[dG2JNJb_ajR]=mk3SLVN4HKm; userFrom=www.baidu.com; ab_sr=1.0.1_MjAyNjRkNjE5N2NkNTJkOWJjYzRjMjI4M2M3MDc5NzUxZDkzNzZmZjc4NTQyMzU2YTQwNjZjYTYxYjc4ZThjODJkN2U5ODA5NGMyNTE4ZDA0ZGY0ODNhNmFhNTgxZmEyNWE1MDFmZTM1NDA5YTA1Mjk3ZGYxODExY2ViNjQxNjNiMjEzMWI2YzE5M2NkZjY2Nzk1OWEyYmMzYzk3NDk5OTA1NzExYmFlODc1Mjc5YTc5MjExMTU1NjYwMmQzMzM2'}

response=requests.get(url,headers=header)

print(response.text)

Scrape a single page

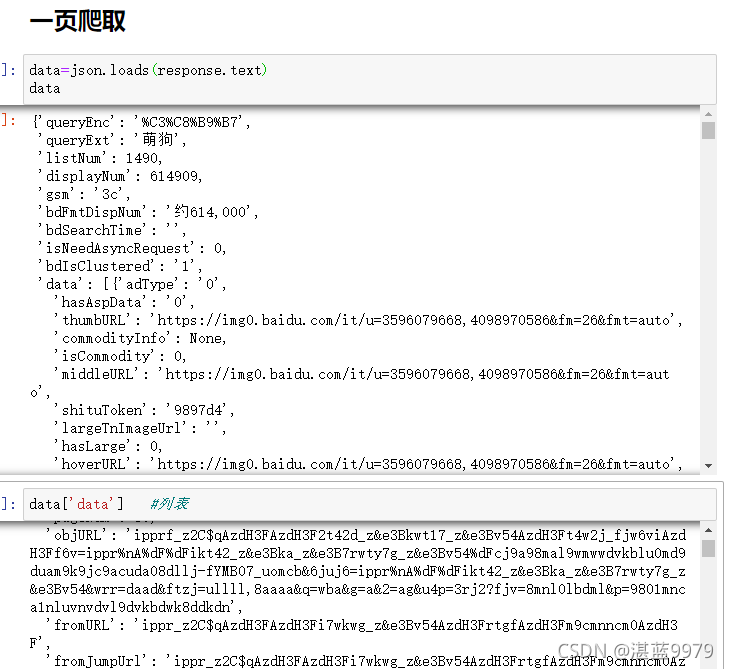

data=json.loads(response.text)

data

data['data']  # a list

len(data['data'])  # 30 items per page

data['data'][0]['thumbURL']

url_p=[]  # thumbnail URLs collected from this page

for i in range(0,len(data['data'])-1):  # stops one short of the end, so the final entry is skipped
    url_p.append(data['data'][i]['thumbURL'])

len(url_p)
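The loop above stops one entry short of the end of `data['data']`, which suggests that the final entry carries no `thumbURL`. A more defensive sketch checks each entry for the key instead of relying on its position. The function name `extract_thumb_urls` and the sample payload are made up for illustration and are not real Baidu output.

```python
import json
from typing import List


def extract_thumb_urls(payload: dict) -> List[str]:
    """Collect thumbURL values, skipping entries that lack the key."""
    urls = []
    for item in payload.get("data", []):
        # Entries may be empty dicts or otherwise lack a thumbnail.
        thumb = item.get("thumbURL") if isinstance(item, dict) else None
        if thumb:
            urls.append(thumb)
    return urls


# Made-up sample with the same shape as the parsed response.
sample_text = (
    '{"data": [{"thumbURL": "https://example.com/a.jpg"}, '
    '{"thumbURL": "https://example.com/b.jpg"}, {}]}'
)
sample = json.loads(sample_text)
print(extract_thumb_urls(sample))
```

With this approach the result does not depend on where an incomplete entry sits in the list.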

for i in range(len(url_p)):
    res=requests.get(url_p[i],headers=header)  # download one thumbnail
    string='C:\\Users\\lenovo\\Desktop\\萌狗图片\\'+'第'+str(i)+'张.jpg'  # target file; the folder must already exist
    with open(string,'wb') as f:
        f.write(res.content)

Scrape multiple pages

url_all=[]

for i in range(31):  # 31 pages: pn = 0, 30, ..., 900
    url_all.append('https://image.baidu.com/search/acjson?tn=resultjson_com&logid=11212566285566674193&ipn=rj&ct=201326592&is=&fp=result&fr=&word=%E8%90%8C%E7%8B%97&queryWord=%E8%90%8C%E7%8B%97&cl=2&lm=-1&ie=utf-8&oe=utf-8&adpicid=&st=&z=&ic=&hd=&latest=&copyright=&s=&se=&tab=&width=&height=&face=&istype=&qc=&nc=1&expermode=&nojc=&isAsync=&pn='+str(30*i)+'&rn=30&gsm=1e&1637206213111=')
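The only part of the URL that changes between pages is `pn`, which the loop sets to `30*i`. As a quick check of the arithmetic, the sketch below lists the offsets that `range(31)` produces. The helper name `page_offsets` is hypothetical, and reading `pn` as a result offset is an interpretation of the URL rather than something the source states.

```python
from typing import List


def page_offsets(pages: int, page_size: int = 30) -> List[int]:
    """Return the pn value for each page: 0, page_size, 2*page_size, ..."""
    return [page_size * i for i in range(pages)]


offsets = page_offsets(31)
print(offsets[0], offsets[-1], len(offsets))  # 0 900 31
```

So the 31 requests cover offsets 0 through 900, which is at most 31 × 30 = 930 thumbnails if every page is full.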

url_f=[]

for ul in url_all:
    response=requests.get(ul,headers=header)  # request each page URL in turn (ul, not the single-page url)
    data=json.loads(response.text)
    for i in range(0,len(data['data'])-1):
        url_f.append(data['data'][i]['thumbURL'])

len(url_f)
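With several hundred URLs collected, a single failed download would stop a plain loop partway through. The sketch below wraps the download step in a helper that creates the target folder and skips URLs that fail. The names `save_images`, `fetch`, and `name_format` are assumptions for illustration; `fetch` is any callable that turns a URL into bytes, so the demo can run without network access.

```python
import tempfile
from pathlib import Path
from typing import Callable, Iterable, List


def save_images(urls: Iterable[str], out_dir: str,
                fetch: Callable[[str], bytes],
                name_format: str = "第{}张.jpg") -> List[Path]:
    """Save each URL's bytes to out_dir, skipping downloads that raise."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)  # create the folder if missing
    saved = []
    for i, url in enumerate(urls):
        try:
            content = fetch(url)
        except Exception as exc:  # keep going when one download fails
            print(f"skipped {url}: {exc}")
            continue
        target = out / name_format.format(i)
        target.write_bytes(content)
        saved.append(target)
    return saved


def fake_fetch(url: str) -> bytes:
    """Stand-in for a real HTTP request, used only in this demo."""
    if "bad" in url:
        raise ValueError("simulated failure")
    return b"fake image bytes"


with tempfile.TemporaryDirectory() as tmp:
    demo_urls = ["https://example.com/a.jpg",
                 "https://example.com/bad.jpg",
                 "https://example.com/c.jpg"]
    saved = save_images(demo_urls, tmp, fake_fetch, name_format="img_{}.jpg")
    print([p.name for p in saved])
```

For real downloads, `fetch` could be something like `lambda u: requests.get(u, headers=header, timeout=10).content`, with `out_dir` set to the desktop folder used in this post.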

for i in range(len(url_f)):
    res=requests.get(url_f[i],headers=header)  # download one thumbnail
    string='C:\\Users\\lenovo\\Desktop\\萌狗图片\\'+'第'+str(i)+'张.jpg'  # same naming scheme as the single-page run
    with open(string,'wb') as f:
        f.write(res.content)

Code screenshots
