百度指数爬取原文在这里:如何用Python下载百度指数的数据_小小明-代码实体的专栏-CSDN博客

00.序言

一直想把百度指数每天定时爬下来,然后放到Dashboard里展示,终于,我看到了大神给出的方法。开心开心....本文会把每个步骤得到的结果一一列出(方便哪天脑子不好使,不知道这个步骤是干嘛的)

注意事项:

- 百度指数需要登录账号(为的是拿cookie,拿完就不用了吧)

- cookie需要在开发者工具里面找(Chrome F12)随便找一个链接打开,往下滑都能找到

- 百度指数只给塞5个对比词,超过了就需要再来一遍

01.百度指数爬虫

首先是标题头,大概知道这是为了伪装我们的爬虫像个正常用户一样去浏览,防止反爬...但具体的,等我深入学习之后再来填坑吧

headers = {

"Connection": "keep-alive",

"Accept": "application/json, text/plain, */*",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36",

"Sec-Fetch-Site": "same-origin",

"Sec-Fetch-Mode": "cors",

"Sec-Fetch-Dest": "empty",

"Referer": "https://index.baidu.com/v2/index.html",

"Accept-Language": "zh-CN,zh;q=0.9",

'Cookie': '太长,随用随找吧'

}然后是解密函数,这个是重点啊!!!(原文作者用decrypt搜索全局,找到了解密函数,论函数名字的的重要性)

def decrypt(ptbk, index_data): ## ptbk='pm1r-DUTRjYaVX95801+27.36-,%49';index_data='r1rpXar11Xja9DrXammpma91rrammjpa9DpU'

n = len(ptbk)//2 ## 15

a = dict(zip(ptbk[:n], ptbk[n:])) ## {'p': '5', 'm': '8', '1': '0', 'r': '1', '-': '+', 'D': '2', 'U': '7', 'T': '.', 'R': '3', 'j': '6', 'Y': '-', 'a': ',', 'V': '%', 'X': '4', '9': '9'}

return "".join([a[s] for s in index_data])然后是正文部分,把百度指数的数值爬取出来,装到数组里面,然后返回结果。

def get_index_data(keys, start=None, end=None): ##keys=['大众','丰田','特斯拉']

words = [[{"name": key, "wordType": 1}] for key in keys]

words = str(words).replace(" ", "").replace("'", "\"") ##'[[{"name":"丰田","wordType":1}],[{"name":"特斯拉","wordType":1}],[{"name":"大众","wordType":1}]]'

today = date.today() ## 2021-11-28

if start is None:

start = str(today-timedelta(days=8)) ## '2021-11-20'

if end is None:

end = str(today-timedelta(days=2)) ## '2021-11-26'

url = f'http://index.baidu.com/api/SearchApi/index?area=0&word={words}&area=0&startDate={start}&endDate={end}' ## 'http://index.baidu.com/api/SearchApi/index?area=0&word=[[{"name":"丰田","wordType":1}],[{"name":"特斯拉","wordType":1}],[{"name":"大众","wordType":1}]]&area=0&startDate=2021-11-20&endDate=2021-11-26'

print(words, start, end)

res = requests.get(url, headers=headers) ## <Response [200]>

data = res.json()['data'] ## {'userIndexes': [ {'word': [{'name': '丰田', 'wordType': 1}], 'all': {'startDate': '2021-11-20', 'endDate': '2021-11-26', 'data': 'r1rpXar11Xja9DrXammpma91rrammjpa9DpU'}, 'pc': {'startDate': '2021-11-20', 'endDate': '2021-11-26', 'data': ''}, 'wise': {'startDate': '2021-11-20', 'endDate': '2021-11-26', 'data': 'x17-qcx17-qcRdq7cRMdRcR7MqcRq1-cRCx1'}, 'type': 'day'}], 'uniqid': '66e410feaedcfd44beeb5fd473c5a243'}

uniqid = data['uniqid'] ## 'uniqid': '66e410feaedcfd44beeb5fd473c5a243'

url = f'http://index.baidu.com/Interface/ptbk?uniqid={uniqid}'

res = requests.get(url, headers=headers)

ptbk = res.json()['data']

result = {}

result["startDate"] = start

result["endDate"] = end

for userIndexe in data['userIndexes']:

name = userIndexe['word'][0]['name'] ## '丰田'

tmp = {}

index_all = userIndexe['all']['data'] ## 'r1rpXar11Xja9DrXammpma91rrammjpa9DpU'

index_all_data = [int(e) for e in decrypt(ptbk, index_all).split(",")]

tmp["all"] = index_all_data ## [10154, 10046, 9214, 8858, 9011, 8865, 9257]

index_pc = userIndexe['pc']['data'] ## '9XRa9Xmarr9Uarr1parr1Uar1jDar1RD'

index_pc_data = [int(e) for e in decrypt(ptbk, index_pc).split(",")]

tmp["pc"] = index_pc_data ## [943, 948, 1197, 1105, 1107, 1062, 1032]

index_wise = userIndexe['wise']['data'] ## '9Drra919mam1rUaUUpRaU91XaUm1RamDDp'

index_wise_data = [int(e) for e in decrypt(ptbk, index_wise).split(",")] ## [9211, 9098, 8017, 7753, 7904, 7803, 8225]

tmp["wise"] = index_wise_data

result[name] = tmp ## {'startDate': '2021-11-20', 'endDate': '2021-11-26', '丰田': {'all': [10154, 10046, 9214, 8858, 9011, 8865, 9257], 'pc': [943, 948, 1197, 1105, 1107, 1062, 1032], 'wise': [9211, 9098, 8017, 7753, 7904, 7803, 8225]}}

return result整个爬虫部分的代码如下:

import requests

import json

from datetime import date, timedelta

headers = {

"Connection": "keep-alive",

"Accept": "application/json, text/plain, */*",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36",

"Sec-Fetch-Site": "same-origin",

"Sec-Fetch-Mode": "cors",

"Sec-Fetch-Dest": "empty",

"Referer": "https://index.baidu.com/v2/index.html",

"Accept-Language": "zh-CN,zh;q=0.9",

'Cookie': 'F12,随用随查'

}

def decrypt(ptbk, index_data):

n = len(ptbk)//2

a = dict(zip(ptbk[:n], ptbk[n:]))

return "".join([a[s] for s in index_data])

def get_index_data(keys, start=None, end=None):

words = [[{"name": key, "wordType": 1}] for key in keys]

words = str(words).replace(" ", "").replace("'", "\"")

today = date.today()

if start is None:

start = str(today-timedelta(days=8))

if end is None:

end = str(today-timedelta(days=2))

url = f'http://index.baidu.com/api/SearchApi/index?area=0&word={words}&area=0&startDate={start}&endDate={end}'

print(words, start, end)

res = requests.get(url, headers=headers)

data = res.json()['data']

uniqid = data['uniqid']

url = f'http://index.baidu.com/Interface/ptbk?uniqid={uniqid}'

res = requests.get(url, headers=headers)

ptbk = res.json()['data']

result = {}

result["startDate"] = start

result["endDate"] = end

for userIndexe in data['userIndexes']:

name = userIndexe['word'][0]['name']

tmp = {}

index_all = userIndexe['all']['data']

index_all_data = [int(e) for e in decrypt(ptbk, index_all).split(",")]

tmp["all"] = index_all_data

index_pc = userIndexe['pc']['data']

index_pc_data = [int(e) for e in decrypt(ptbk, index_pc).split(",")]

tmp["pc"] = index_pc_data

index_wise = userIndexe['wise']['data']

index_wise_data = [int(e)

for e in decrypt(ptbk, index_wise).split(",")]

tmp["wise"] = index_wise_data

result[name] = tmp

return result

result = get_index_data(["丰田","特斯拉","大众"])

print(result)

结果如下:

{'startDate': '2021-11-20',

'endDate': '2021-11-26',

'丰田':

{'all': [11413, 11358, 10983, 10576, 10627, 10655, 10950], ## PC+移动

'pc': [1049, 994, 1440, 1317, 1303, 1249, 1240], ## PC

'wise': [10364, 10364, 9543, 9259, 9324, 9406, 9710]}, ## 移动

'特斯拉':

{'all': [43718, 42110, 45562, 44401, 56719, 43826, 43400],

'pc': [5742, 5400, 10792, 9356, 11819, 8825, 8359],

'wise': [37976, 36710, 34770, 35045, 44900, 35001, 35041]},

'大众':

{'all': [13245, 12874, 12337, 11782, 12068, 11712, 12440],

'pc': [1148, 1016, 1688, 1595, 1540, 1419, 1474],

'wise': [12097, 11858, 10649, 10187, 10528, 10293, 10966]}}02.导入数据库

这一个步骤主要是将上面的字典当作输入方,导入到数据库中。

注意事项 & 知识点:

- 数据库连接用pymysql,方便好用

- sql执行,用的语句是 if 这个DB/表不存在 then 创建;完美避开执行两次,第二次要注释代码的尴尬局面

- 日期转换问题(太复杂了,需要专门开一篇文章去系统学习如何转换):

- 导入包? from datetime import datetime, timedelta, date

- 怎么把字符串转为格式化日期:date = datetime.strptime(str, '%Y-%m-%d')

- 怎么求得两个日期之间的差:上面的 date1-date2 即可,但返回值不好看,所以建议用(date1-date2).days() 得到纯数字

- 日期加一天/减一天:new_date = old_date.date()+timedelta(days=1/-1)

- 字典操作问题(也是好复杂啊....)

- 取所有字典的key值:dict.keys() -->dict_keys(['startDate', 'endDate', '丰田', '特斯拉', '大众'])

- 上面转换为列表:list(dict.keys())

- 取出字典键对应的值,因为字典是无序的,所以只能用键去取:dict['丰田']['all']-->[43718, 42110, 45562, 44401, 56719, 43826, 43400]

- SQL执行和提交问题

- 执行SQL有单条执行和批量执行,这里用的是单条执行。需要注意的是,可以先把values值给生成,再赋给sql语句

- sql执行:cursor.execute(sql);conn.commit()

- 云数据库的白名单问题。(放通了自己的IP,但好像不管用...可以先讲究用着0.0.0.0/0),千万要找到别的办法

import pymysql

from datetime import datetime, timedelta, date

def conn_mysql(dict): ## {'startDate': '2021-11-20', 'endDate': '2021-11-26', '丰田': {'all': [11413, 11358, 10983, 10576, 10627, 10655, 10950], 'pc': [1049, 994, 1440, 1317, 1303, 1249, 1240], 'wise': [10364, 10364, 9543, 9259, 9324, 9406, 9710]}, ...}

# 初始化一个DB

conn = pymysql.connect(host='这里填mysql的连接',user='root',password='这里填密码')

cursor = conn.cursor() ## 建立游标

cursor.execute('CREATE DATABASE IF NOT EXISTS testDB DEFAULT CHARSET utf8 COLLATE utf8_general_ci;') ## 如果没有这个DB,就建一个

conn.select_db('testDB') ## 然后选择这个DB

# 创建baiduIndex表

cursor.execute('drop table if exists baiduIndex')

sql = """CREATE TABLE IF NOT EXISTS `baiduIndex` (

`brand` varchar(255) NOT NULL,

`create_date` varchar(255) NOT NULL, ## 这里本来是想选date类型,但好像导不进去...

`all_pw` int(11) NOT NULL,

`pc` int(11) NOT NULL,

`wise` int(11) NOT NULL,

`load_date` varchar(255) NOT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8 AUTO_INCREMENT=0"""

cursor.execute(sql)

# 距离的天数,方便下面做循环(就是说,这样做是希望拿到all列表里有几个数值)

startdate = datetime.strptime(dict['startDate'], '%Y-%m-%d') ## 2021-11-20 00:00:00 因为dict['startDate']是str类型,所以转成日期型才好计算距离的天数,这个方法可笨了,让我之后看看有没有更好的方法

enddate = datetime.strptime(dict['endDate'], '%Y-%m-%d') ## 2021-11-26 00:00:00

diff = (enddate - startdate).days ## 如果不加.days()的话,输出来的格式是6 days, 0:00:00

# 想知道有几个品牌

brands = list(dict.keys()) ##['startDate', 'endDate', '丰田', '特斯拉', '大众']

brands_len = len(brands) - 2 ## 3,去掉'startDate', 'endDate'这两个固定字段

for i in range(brands_len):

for day in range(diff):

brand = brands[2 + i] ## '丰田'

createdate = startdate.date() + timedelta(days=day) ## 2021-11-20

all = dict[brand]['all'][day]

pc = dict[brand]['pc'][day]

wise = dict[brand]['wise'][day]

today = date.today()

data = '"' + brand + '","' + str(createdate) + '",' + str(all) + ',' + str(pc) + ',' + str(wise) + ',"' + str(today) + '"' ## '"丰田","2021-11-20",11413,1049,10364,"2021-11-28"'

sql = ''' insert into baiduIndex (brand, create_date, all_pw, pc, wise, load_date) values(%s);''' % data ## ' insert into baiduIndex (brand, create_date, all_pw, pc, wise, load_date) values("丰田","2021-11-20",11413,1049,10364,"2021-11-28");'

print(sql)

cursor.execute(sql)

conn.commit()

# 关闭游标,关闭DB连接

cursor.close()

conn.close()03.QuickBI展示

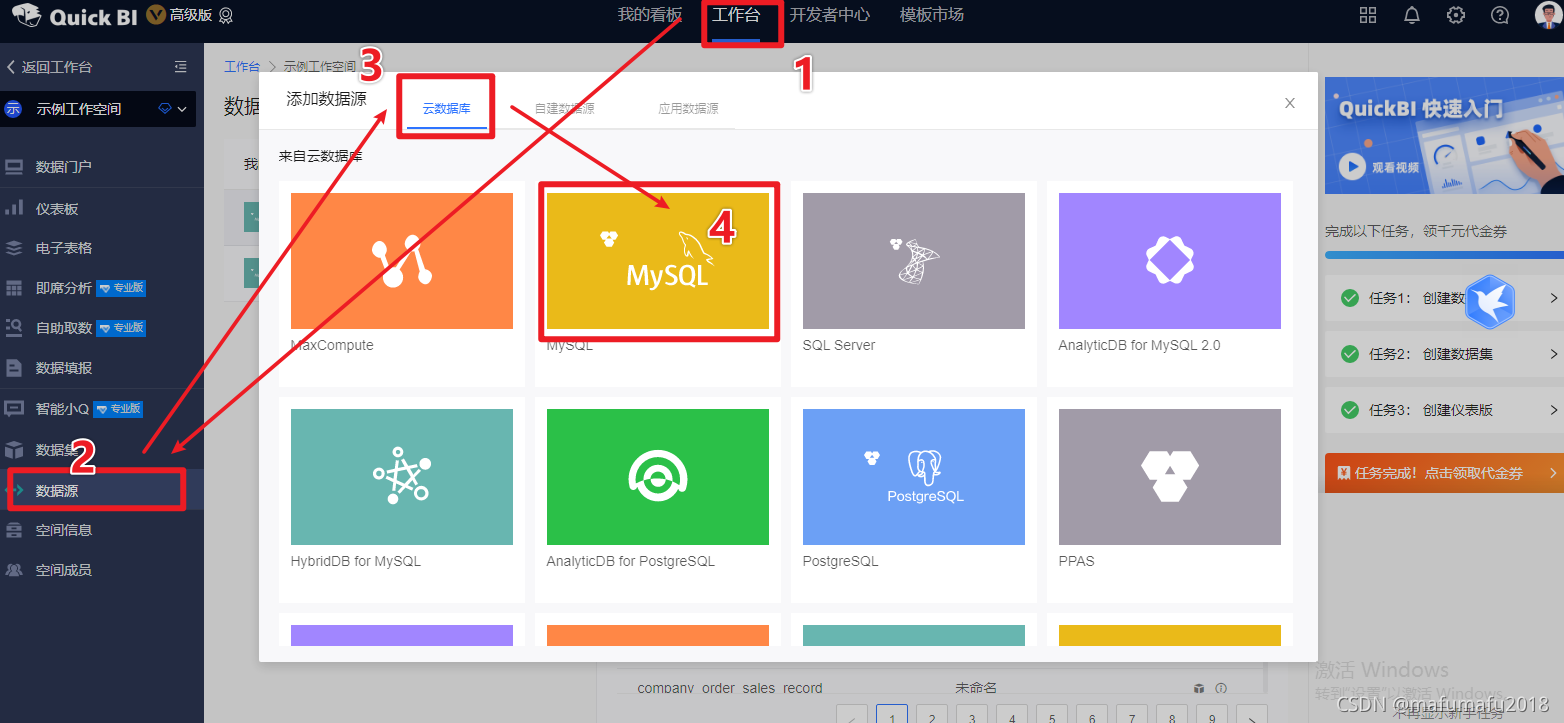

阿里云的BI可视化软件,挺符合中国人的使用习惯的,我觉得挺好用~(只有30天的免费试用期哦,且用且珍惜)链接:阿里云登录 - 欢迎登录阿里云,安全稳定的云计算服务平台

首先,创建数据源。

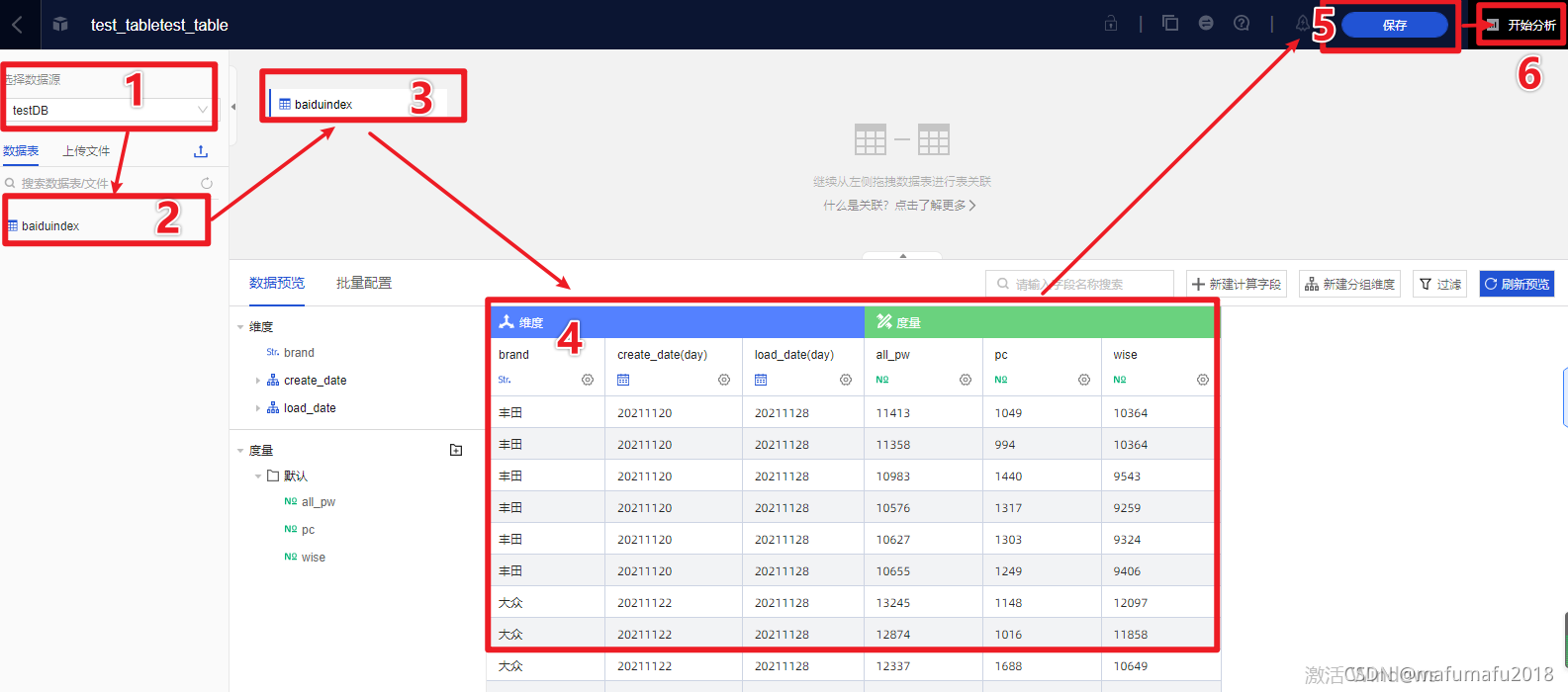

?然后,创建数据集。

Quick BI挺好用的,可以自动识别表里面的日期类型,所以上个步骤导入DB的时候不需要过分纠结一定要是date类型,varchar类型也可以。

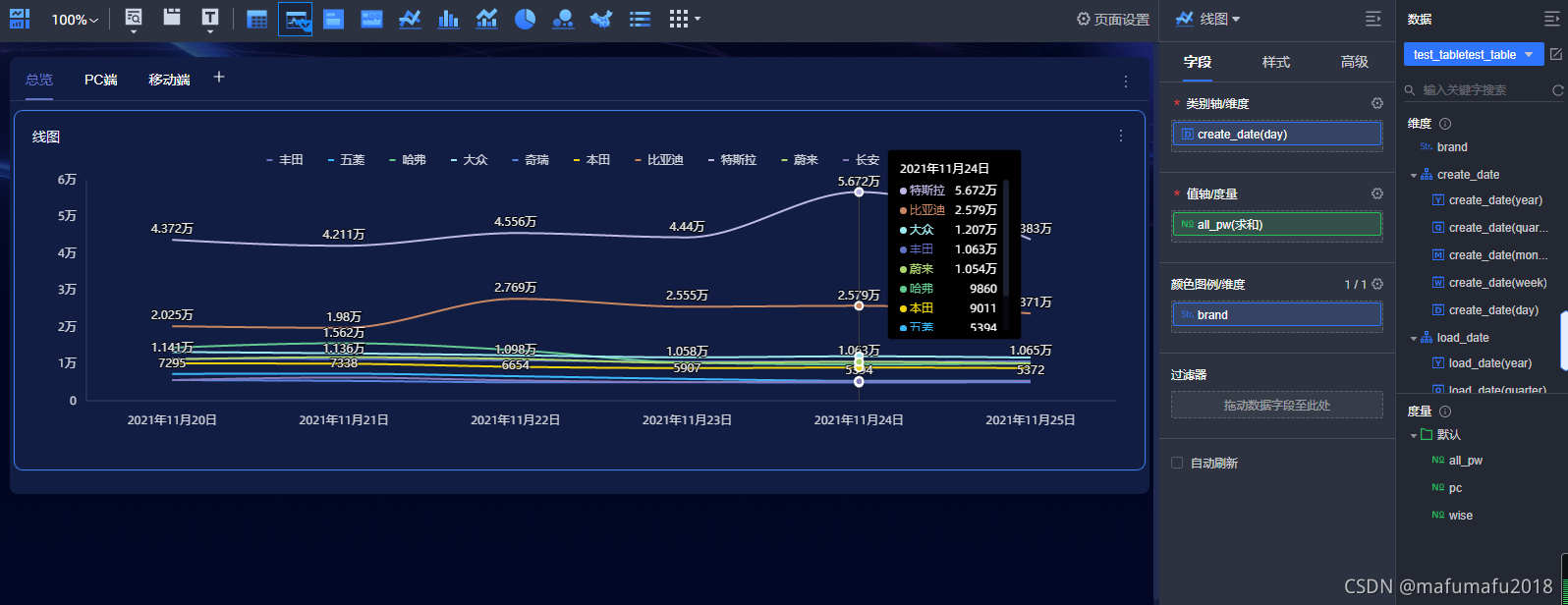

?开始进行创作(试用了一下Quick BI的Tab,好好用!!)?

故事线是:总览十大厂家的总声量-->细分PC端的声量及占比-->细分移动端的声量及占比

?哈弗在PC端的强势地位是我没想到的,这是发生了什么?

(可以通过继续抓取新闻资讯来继续深挖)

?04.结语

以下是全部代码,认识到还有很多不够优美的地方需要继续调整...加油!!!!

import requests

import json

from datetime import datetime, timedelta, date

headers = {

"Connection": "keep-alive",

"Accept": "application/json, text/plain, */*",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36",

"Sec-Fetch-Site": "same-origin",

"Sec-Fetch-Mode": "cors",

"Sec-Fetch-Dest": "empty",

"Referer": "https://index.baidu.com/v2/index.html",

"Accept-Language": "zh-CN,zh;q=0.9",

'Cookie': '啊啊啊啊啊自己填'

}

def decrypt(ptbk, index_data):

n = len(ptbk)//2

a = dict(zip(ptbk[:n], ptbk[n:]))

return "".join([a[s] for s in index_data])

def get_index_data(keys, start=None, end=None):

words = [[{"name": key, "wordType": 1}] for key in keys]

words = str(words).replace(" ", "").replace("'", "\"")

today = date.today()

if start is None:

start = str(today-timedelta(days=8))

if end is None:

end = str(today-timedelta(days=2))

url = f'http://index.baidu.com/api/SearchApi/index?area=0&word={words}&area=0&startDate={start}&endDate={end}'

print(words, start, end)

res = requests.get(url, headers=headers)

data = res.json()['data']

uniqid = data['uniqid']

url = f'http://index.baidu.com/Interface/ptbk?uniqid={uniqid}'

res = requests.get(url, headers=headers)

ptbk = res.json()['data']

result = {}

result["startDate"] = start

result["endDate"] = end

for userIndexe in data['userIndexes']:

name = userIndexe['word'][0]['name']

tmp = {}

index_all = userIndexe['all']['data']

index_all_data = [int(e) for e in decrypt(ptbk, index_all).split(",")]

tmp["all"] = index_all_data

index_pc = userIndexe['pc']['data']

index_pc_data = [int(e) for e in decrypt(ptbk, index_pc).split(",")]

tmp["pc"] = index_pc_data

index_wise = userIndexe['wise']['data']

index_wise_data = [int(e)

for e in decrypt(ptbk, index_wise).split(",")]

tmp["wise"] = index_wise_data

result[name] = tmp

return result

import pymysql

def conn_mysql(dict):

# 初始化一个DB

conn = pymysql.connect(host='啊啊啊啊啊自己填',user='root',password='啊啊啊啊啊自己填')

cursor = conn.cursor()

cursor.execute('CREATE DATABASE IF NOT EXISTS testDB DEFAULT CHARSET utf8 COLLATE utf8_general_ci;')

conn.select_db('testDB')

# 创建baiduIndex表

# cursor.execute('drop table if exists baiduIndex')

sql = """CREATE TABLE IF NOT EXISTS `baiduIndex` (

`brand` varchar(255) NOT NULL,

`create_date` date,

`all_pw` int(11) NOT NULL,

`pc` int(11) NOT NULL,

`wise` int(11) NOT NULL,

`load_date` date

) ENGINE=InnoDB DEFAULT CHARSET=utf8 AUTO_INCREMENT=0"""

cursor.execute(sql)

# 距离的天数

startdate = datetime.strptime(dict['startDate'], '%Y-%m-%d')

enddate = datetime.strptime(dict['endDate'], '%Y-%m-%d')

diff = (enddate - startdate).days

print(diff)

# 想知道有几个品牌

brands = list(dict.keys())

brands_len = len(brands) - 2

sql_list = []

for i in range(brands_len):

for day in range(diff):

brand = brands[2 + i]

createdate = startdate.date() + timedelta(days=day)

all = dict[brand]['all'][day]

pc = dict[brand]['pc'][day]

wise = dict[brand]['wise'][day]

today = date.today()

data = '"' + brand + '","' + str(createdate) + '",' + str(all) + ',' + str(pc) + ',' + str(wise) + ',"' + str(today) + '"'

sql = ''' insert into baiduIndex (brand, create_date, all_pw, pc, wise, load_date) values(%s);''' % data

print(sql)

cursor.execute(sql)

conn.commit()

# 关闭游标,关闭DB连接

cursor.close()

conn.close()

result = get_index_data(["比亚迪","长安","奇瑞","五菱","哈弗"])

conn_mysql(result)