This example is for learning purposes only and must not be used for anything else!

import os
import random

import requests
from lxml import etree

UA = [
    "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
    "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
    "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",
    "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",
    "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",
    "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
    "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",
    "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",
    "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
]

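m = 1  # running counter used to name the saved HTML files


def get_html(n):
    """Download listing page n and save it as 小吃网/<m>.html."""
    global m
    url = f'https://www.3158.cn/xiangmu/canyin/?pt=all&page={n}'
    # Pick a fresh User-Agent for every request rather than once at import time
    headers = {'user-agent': random.choice(UA)}
    # A timeout keeps a stalled connection from hanging the whole run
    resp = requests.get(url, headers=headers, timeout=10).text
    file = os.path.join('小吃网', str(m) + '.html')
    with open(file, 'w', encoding='utf-8') as f:
        f.write(resp)
    m += 1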

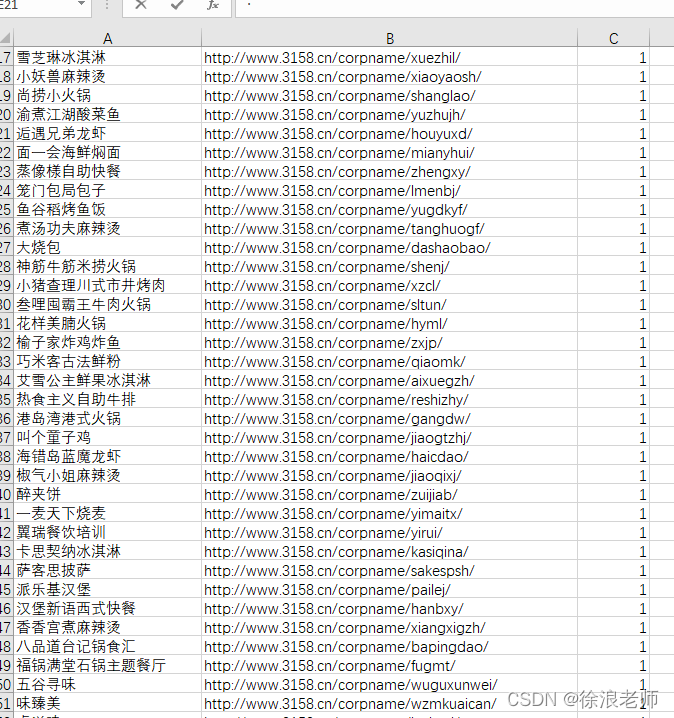

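def get_content(m):
    """Parse saved page m and append one CSV row per listed project."""
    # Read the saved HTML
    file = os.path.join('小吃网', str(m) + '.html')
    with open(file, 'r', encoding='utf-8') as f:
        resp = f.read()
    # Select the list items with XPath
    element = etree.HTML(resp)
    origin_li = element.xpath("//ul[contains(@class,'xm-list')]/li")
    for item in origin_li:
        data_name = item.xpath("./div[@class='top']/a/@title")[0]
        # The href is assumed to be protocol-relative ('//...'), hence the 'http:' prefix
        data_link = "http:" + item.xpath("./div[@class='top']/a/@href")[0]
        # Placeholders: cost, address and parameters are not extracted yet
        data_inve = 1
        data_address = 1
        data_bot = 1
        # Assemble the row in storage format
        data = f'{data_name},{data_link},{data_inve},{data_address},{data_bot}\n'
        print(data)
        save_csv(data)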
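
The `"http:" + href` concatenation above only works if every `href` starts with `//`. As a hedged alternative, the sketch below resolves links with `urllib.parse.urljoin`, which also copes with root-relative and already-absolute URLs. The helper name `normalize_link`, the `BASE_URL` constant and the sample `href` values are assumptions made for illustration; they are not part of the original script or taken from the real page. Note that a protocol-relative link inherits the base URL's scheme (`https` here) rather than the hard-coded `http`.

```python
from urllib.parse import urljoin

# Assumption: the listing page URL serves as the base for resolving links.
BASE_URL = "https://www.3158.cn/xiangmu/canyin/"


def normalize_link(href, base=BASE_URL):
    """Resolve an href found on the listing page into an absolute URL."""
    # urljoin handles '//host/path', '/path' and already-absolute URLs.
    return urljoin(base, href.strip())


if __name__ == "__main__":
    # Hypothetical href values, used only to show the three cases.
    for href in ("//www.3158.cn/demo/1.html", "/demo/2.html", "http://example.com/3"):
        print(normalize_link(href))
```

In `get_content`, the line that builds `data_link` could call such a helper instead of prepending `"http:"` by hand.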

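def save_csv(data):
    """Append one row to 小吃网.csv."""
    # 'ANSI' is an alias of the Windows-only 'mbcs' codec, so this runs on Windows only
    with open('小吃网.csv', 'a+', encoding='ANSI', errors='ignore') as f:
        f.write(data)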

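if __name__ == '__main__':
    # Create the output folder on the first run
    if os.path.exists('小吃网'):
        print('Folder already exists!')
    else:
        os.mkdir('小吃网')
    # Step 1 (uncomment for the first run): download all listing pages
    # for n in range(1, 130):
    #     get_html(n)
    # print('All pages saved!')
    # Step 2: write the CSV header, then parse every saved page
    with open('小吃网.csv', 'a+', encoding='ANSI', errors='ignore') as f:
        f.write('Name,Link,Cost,Address,Parameters\n')
    for m in range(1, 130):
        get_content(m)
        print(f'Page {m} saved!')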
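
The script builds each CSV row by joining fields with commas, so a project name that itself contains a comma would shift the columns. The following is a minimal sketch of one way around that, using the standard `csv` module, which quotes such fields automatically. The function name `rows_to_csv` and the sample row are hypothetical and only illustrate the idea; the sketch writes to an in-memory buffer rather than to `小吃网.csv`.

```python
import csv
import io


def rows_to_csv(rows, header):
    """Serialize rows to CSV text, quoting fields that contain commas."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical sample row; the name contains a comma on purpose.
    sample = [("Noodles, Dumplings and More", "http://example.com/a", 1, 1, 1)]
    print(rows_to_csv(sample, ["Name", "Link", "Cost", "Address", "Parameters"]))
```

When writing to a real file with `csv.writer`, the `csv` documentation recommends opening it with `newline=''` so that line endings are not translated twice.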