Getting basic information about the site

- Target URL: https://www.umei.cc/katongdongman/dongmanbizhi/index.htm

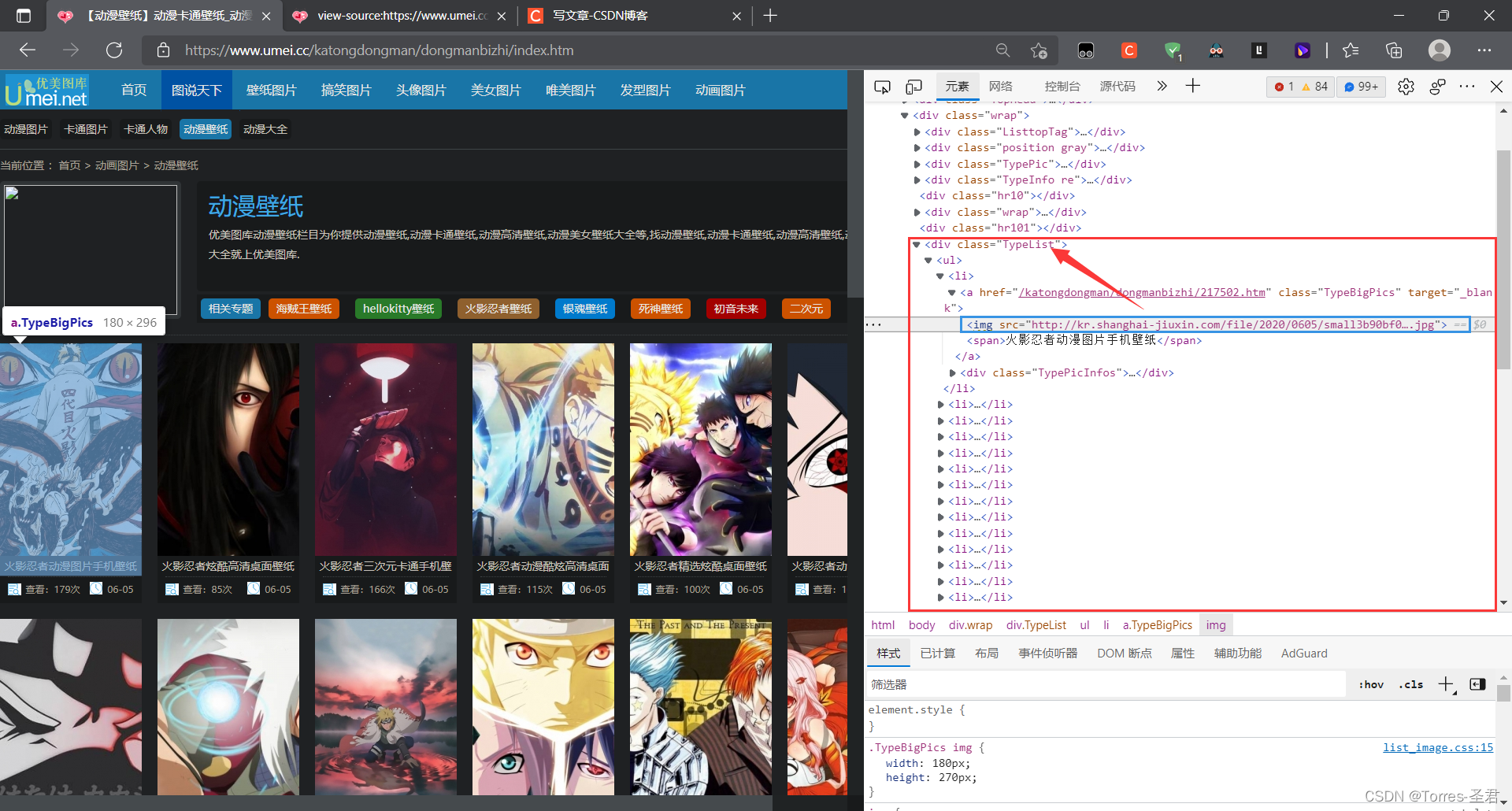

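- After opening the site, right-click anywhere on the page and choose View Page Source. The image links turn out to be directly available in the source, and opening one confirms that it is the image shown on the page, which makes the rest much easier.

- Send a request to the site with `requests`:

      import requests

      url = 'https://www.umei.cc/katongdongman/dongmanbizhi/index.htm'
      res = requests.get(url)
      print(res)

- Running the code prints `<Response [200]>`. The status code 200 means the request succeeded.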

获取页面上所有图片链接

-

右键页面点

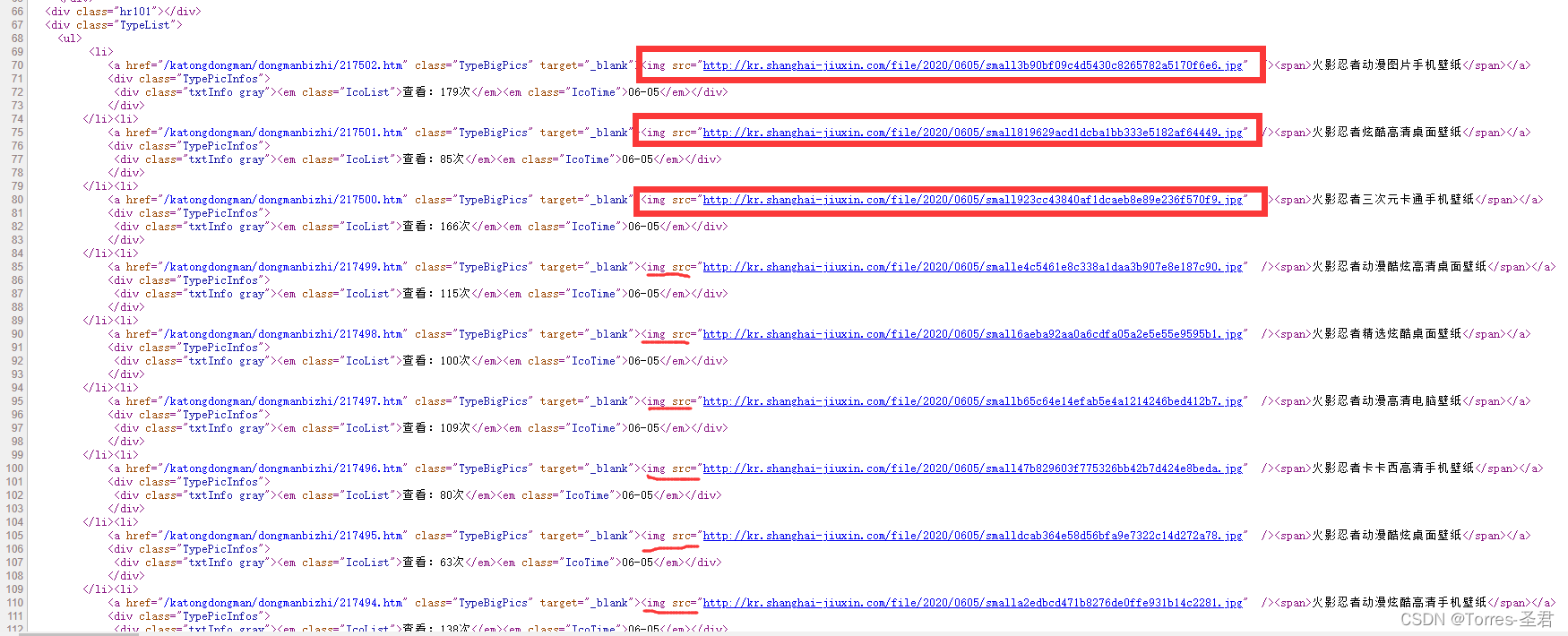

检查,通过对图片的定位,发现图片的链接在一个class="TypeList"的div标签内

-

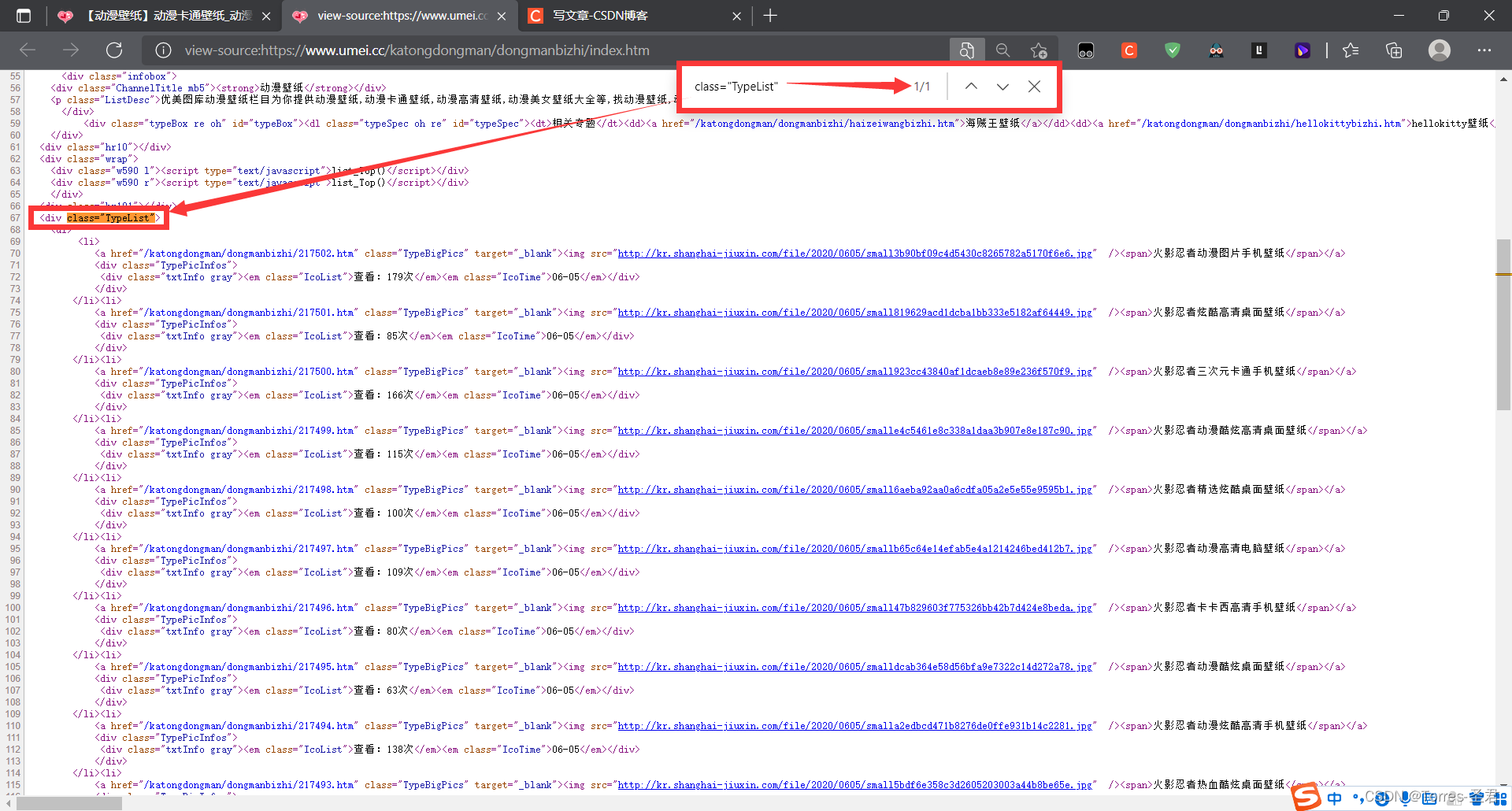

再次回到页面的源码,按

ctrl + f组合键对页面源码进行查找,查找的内容为class="TypeList",通过查找发现页面还恰好只有这一个,所以这里采用bs4来获取页面的元素

-

bs4的具体用法这里不在过多叙述,废话不多说,直接上代码:

import requests

from bs4 import BeautifulSoup

url = 'https://www.umei.cc/katongdongman/dongmanbizhi/index.htm'

res = requests.get(url)

soup = BeautifulSoup(res.text, "html.parser")

data = soup.find("div", class_="TypeList").find_all("img")

for image_urls in data:

img_url = image_urls.get("src")

print(img_url)

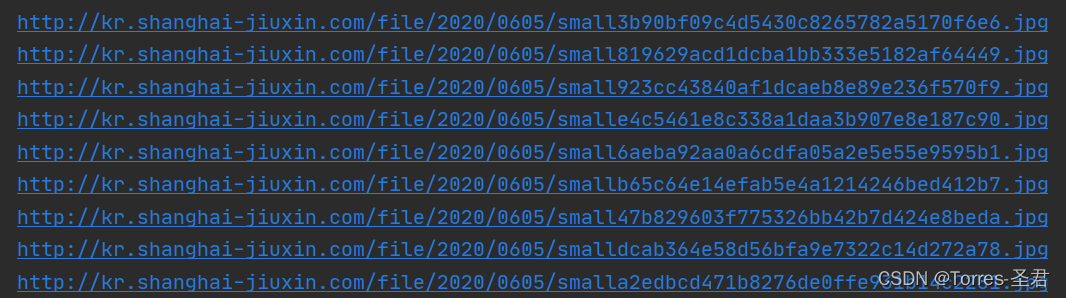

- 运行代码后即可得到页面上所以图片的链接

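Getting image links from all pages

- Clicking through to the next page shows how the page URL changes (for example on page 2 and page 3): only the number after `index_` changes, and that number matches the page number.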

- Building on the single-page code above, the URLs of all pages can be stored in a list and a request sent to each one. To make the requests look like they come from a browser and lower the chance of being blocked, a request header with a User-Agent is also set. The complete code:

      import requests
      from bs4 import BeautifulSoup

      def get_images_url():
          url_list = ['https://www.umei.cc/katongdongman/dongmanbizhi/index.htm']
          # Build the list of page URLs (pages 2 to 26)
          for i in range(2, 27):
              url_list.append(f'https://www.umei.cc/katongdongman/dongmanbizhi/index_{i}.htm')
          headers = {
              'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.55 Safari/537.36 Edg/96.0.1054.41'
          }
          for i in range(0, len(url_list)):
              print("Image links on page %d:" % (i+1))
              res = requests.get(url_list[i], headers=headers, timeout=5)
              res.encoding = "utf-8"
              con = BeautifulSoup(res.text, "html.parser")
              # Locate the tags holding the image links
              data = con.find("div", class_="TypeList").find_all("img")
              # Print every image link on the page
              for image_urls in data:
                  img_url = image_urls.get("src")
                  print(img_url)

      get_images_url()
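
The page-number pattern noted above can be wrapped in a small helper. This is only a minimal sketch: the name `build_page_urls` and its `pages` parameter are hypothetical, and it assumes what was observed on the site, namely that the first page is `index.htm` and page n is `index_n.htm`.

```python
BASE = "https://www.umei.cc/katongdongman/dongmanbizhi"


def build_page_urls(pages: int) -> list:
    """Return the listing URLs for pages 1..pages (hypothetical helper)."""
    if pages < 1:
        raise ValueError("pages must be at least 1")
    # Page 1 has no numeric suffix; later pages use index_<n>.htm
    urls = [f"{BASE}/index.htm"]
    urls.extend(f"{BASE}/index_{i}.htm" for i in range(2, pages + 1))
    return urls


if __name__ == "__main__":
    for url in build_page_urls(3):
        print(url)
```

Validating the page count up front keeps a bad value from silently producing a list with only the first page.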

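- The links returned by the program all point to cover thumbnails, which are low resolution. Comparing the image link on a sub-page with the cover link shows that the cover link differs only by an extra `small` or `rn`; removing those characters gives the sub-page image link.

- Links that point to `notimg.gif` have no sub-page image (the sub-page is empty because the original image has been deleted), so these links must be dropped as well.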

- Both requirements only need a small transformation of each image link. The links that remain are the valid sub-page image links. The complete code:

      import requests
      from bs4 import BeautifulSoup

      # Get the valid sub-page image links
      def get_images_url():
          url_list = ['https://www.umei.cc/katongdongman/dongmanbizhi/index.htm']
          # Build the list of page URLs (pages 2 to 26)
          for i in range(2, 27):
              url_list.append(f'https://www.umei.cc/katongdongman/dongmanbizhi/index_{i}.htm')
          headers = {
              'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.55 Safari/537.36 Edg/96.0.1054.41'
          }
          for i in range(0, len(url_list)):
              print("Image links on page %d:" % (i+1))
              res = requests.get(url_list[i], headers=headers, timeout=5)
              res.encoding = "utf-8"
              # Create the bs4 object
              con = BeautifulSoup(res.text, "html.parser")
              # Locate the tags holding the image links
              data = con.find("div", class_="TypeList").find_all("img")
              for image_urls in data:
                  # Derive the sub-page image link from the cover link
                  img_url = image_urls.get("src")
                  if "small" in img_url.split("/")[-1]:
                      new_img_url = img_url.replace("small", "")
                  elif "rn" in img_url.split("/")[-1]:
                      new_img_url = img_url.replace("rn", "")
                  elif "gif" in img_url.split(".")[-1]:
                      # notimg.gif placeholder: no sub-page image, so skip it
                      # instead of printing the previous link again
                      continue
                  else:
                      new_img_url = img_url
                  print(new_img_url)

      get_images_url()
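
One caveat with calling `str.replace` on the whole link is that it would also strip `small` or `rn` if they happened to appear in the host or directory part. The sketch below limits the change to the file name. The helper name `to_full_size_url` and the sample URLs are made up for illustration; the only assumption taken from the comparison above is that a cover file name carries an extra `small` or `rn`.

```python
from typing import Optional


def to_full_size_url(img_url: str) -> Optional[str]:
    """Map a cover link to the sub-page image link; None for placeholders."""
    directory, sep, file_name = img_url.rpartition("/")
    # Same order of checks as the article: small, then rn, then gif
    for marker in ("small", "rn"):
        if marker in file_name:
            # Touch only the file name, never the host or directories
            return directory + sep + file_name.replace(marker, "")
    if file_name.endswith(".gif"):
        # notimg.gif placeholder: the original image no longer exists
        return None
    return img_url


if __name__ == "__main__":
    samples = [
        "https://example.com/rn/2021/small123abc.jpg",  # hypothetical URLs
        "https://example.com/img/notimg.gif",
    ]
    for sample in samples:
        print(sample, "->", to_full_size_url(sample))
```

Returning `None` for placeholders lets the caller filter them out with a simple `if url is not None` check.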

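Downloading and saving images locally

- Since all sub-page image links have been collected, all that remains is to download each image and save it locally: send a request to the image link, read the body as bytes via `.content`, open a file in binary write mode, and write the bytes to it. The code:

      # Download an image and save it locally
      def download_images(url):
          headers = {
              'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.55 Safari/537.36 Edg/96.0.1054.41'
          }
          img_res = requests.get(url, headers=headers, timeout=5)
          # img_name is the file name of the image; change it as needed
          img_name = url.split("/")[-1]
          # The 动漫壁纸 (anime wallpapers) folder must already exist
          with open("动漫壁纸/" + img_name, "wb") as w:
              w.write(img_res.content)
          print(f"{img_name} downloaded")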
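
The `open` call above raises `FileNotFoundError` if the 动漫壁纸 folder does not exist yet. Here is a minimal sketch of the saving step that creates the folder first. `save_image` is a hypothetical helper, and it takes the bytes that were already downloaded (for example `img_res.content`), so it can be tried without network access.

```python
from pathlib import Path


def save_image(data: bytes, url: str, folder: str = "动漫壁纸") -> Path:
    """Write image bytes to <folder>/<last URL segment> and return the path."""
    target_dir = Path(folder)
    # Create the folder (and parents) if needed; no error if it exists
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / url.split("/")[-1]
    # write_bytes opens the file in binary write mode and closes it again
    target.write_bytes(data)
    return target
```

Inside `download_images` this could be used as `save_image(img_res.content, url)`.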

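- There is no need to wait for the program to finish on its own, since that takes a long time. Only part of the output is captured here (the order of the images in the folder is not the order in which they were downloaded).

- Downloading images one at a time like this is too slow, so the next section saves the images using asynchronous coroutines.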

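Batch downloading images asynchronously

- Install the asynchronous HTTP module: `pip install aiohttp`

- Use `aiohttp.ClientSession()` from aiohttp to send the requests, and catch the exception raised when a request times out so that a timeout does not crash the program:

      # Download images in bulk with async coroutines
      async def aiohttp_download(url):
          img_name = url.split("/")[-1]
          try:
              async with aiohttp.ClientSession() as session:
                  async with session.get(url, timeout=aiohttp.ClientTimeout(total=10)) as res:
                      with open('动漫壁纸/' + img_name, 'wb') as w:
                          # Reading the body is asynchronous, so it must be awaited
                          w.write(await res.content.read())
              print(f"{img_name} downloaded")
          except Exception:
              # Timeouts and any other request error end up here
              print(f"{img_name} request failed or timed out...")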

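- Build the main body of the asynchronous crawler. Running the program again with it enabled shows that a page which used to take several minutes to download now finishes in about 10 seconds:

      # Main body of the asynchronous crawler
      async def run():
          tasks = []
          for link in all_images:
              tasks.append(aiohttp_download(link))
          # gather accepts coroutines directly; asyncio.wait rejects them on Python 3.11+
          await asyncio.gather(*tasks)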
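
Starting one coroutine per link opens every connection at once, which may put a lot of load on the site or cause timeouts. A common way to cap this is `asyncio.Semaphore`. The sketch below is not the article's code: `fake_download` stands in for `aiohttp_download` and only sleeps, and the limit of 5 is an arbitrary assumption, so the example runs offline.

```python
import asyncio


async def fake_download(link: str, limit: asyncio.Semaphore) -> str:
    """Stand-in for aiohttp_download: waits briefly instead of fetching."""
    async with limit:  # only max_concurrent coroutines get past here at once
        await asyncio.sleep(0.01)
        return link.split("/")[-1]


async def run_limited(links, max_concurrent: int = 5):
    # Create the semaphore inside the running event loop
    limit = asyncio.Semaphore(max_concurrent)
    # gather runs the coroutines concurrently and keeps the input order
    return await asyncio.gather(*(fake_download(link, limit) for link in links))


if __name__ == "__main__":
    sample = [f"https://example.com/img/{i}.jpg" for i in range(20)]
    names = asyncio.run(run_limited(sample))
    print(len(names), "simulated downloads finished")
```

To apply the same idea to the real downloader, the `async with limit:` block would wrap the `session.get` call.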

Complete code

    import asyncio
    import os

    import aiohttp
    import requests
    from bs4 import BeautifulSoup

    # Get the valid sub-page image links
    def get_images_url():
        url_list = ['https://www.umei.cc/katongdongman/dongmanbizhi/index.htm']
        page = int(input("Number of pages to download: "))
        if page >= 2:
            # Build the list of page URLs
            for i in range(2, page+1):
                url_list.append(f'https://www.umei.cc/katongdongman/dongmanbizhi/index_{i}.htm')
        elif page == 1:
            pass
        else:
            # Stop here instead of downloading page 1 anyway
            print("Invalid input")
            return
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.55 Safari/537.36 Edg/96.0.1054.41'
        }
        for i in range(0, len(url_list)):
            res = requests.get(url_list[i], headers=headers, timeout=5)
            res.encoding = "utf-8"
            # Create the bs4 object
            con = BeautifulSoup(res.text, "html.parser")
            # Locate the tags holding the image links
            data = con.find("div", class_="TypeList").find_all("img")
            for image_urls in data:
                # Derive the sub-page image link from the cover link
                img_url = image_urls.get("src")
                if "small" in img_url.split("/")[-1]:
                    all_images.append(img_url.replace("small", ""))
                elif "rn" in img_url.split("/")[-1]:
                    all_images.append(img_url.replace("rn", ""))
                elif "gif" in img_url.split(".")[-1]:
                    # notimg.gif placeholder: no sub-page image, skip it
                    pass
                else:
                    all_images.append(img_url)
            print(f"<Page {i+1}> image links collected!")

    # Synchronous download, replaced by the async version below (can be deleted)
    def download_images(url):
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.55 Safari/537.36 Edg/96.0.1054.41'
        }
        img_res = requests.get(url, headers=headers, timeout=10)
        img_name = url.split("/")[-1]
        with open("动漫壁纸/" + img_name, "wb") as w:
            w.write(img_res.content)
        print(f"{img_name} downloaded")

    # Download images in bulk with async coroutines
    async def aiohttp_download(url):
        img_name = url.split("/")[-1]
        try:
            async with aiohttp.ClientSession() as session:
                async with session.get(url, timeout=aiohttp.ClientTimeout(total=10)) as res:
                    with open('动漫壁纸/' + img_name, 'wb') as w:
                        # Reading the body is asynchronous, so it must be awaited
                        w.write(await res.content.read())
            print(f"{img_name} downloaded")
        except Exception:
            # Timeouts and any other request error end up here
            print(f"{img_name} request failed or timed out...")

    # Main body of the asynchronous crawler
    async def run():
        tasks = []
        for link in all_images:
            tasks.append(aiohttp_download(link))
        # gather accepts coroutines directly; asyncio.wait rejects them on Python 3.11+
        await asyncio.gather(*tasks)

    # Holds the links of all images
    all_images = []
    # Create the 动漫壁纸 (anime wallpapers) folder in the current directory;
    # exist_ok avoids an error when the script is run a second time
    os.makedirs("动漫壁纸", exist_ok=True)
    # Collect the image links
    get_images_url()
    # Start the asynchronous download
    asyncio.run(run())
    print("All downloads finished...")