I. Warm-up exercises

1. Auto-fill the search keyword on the Baidu home page and run the search

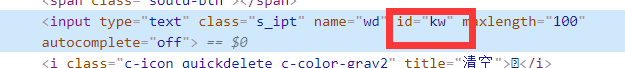

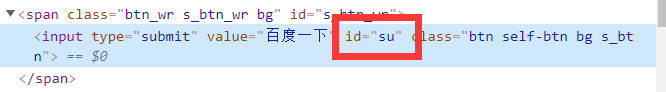

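Inspect the source of the Baidu page to find the id of the search box ("kw") and the id of the search button ("su").

Open the Baidu page

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

    # Selenium 4 takes the chromedriver path through a Service object; the raw string keeps the backslashes literal.
    driver = webdriver.Chrome(service=Service(r"E:\GoogleDownload\chromedriver_win32\chromedriver.exe"))
    driver.get("https://www.baidu.com/")

Fill in the search box

    search = driver.find_element(By.ID, "kw")
    search.send_keys("醉意丶千层梦")

Simulate the click

    send_button = driver.find_element(By.ID, "su")
    send_button.click()

Result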

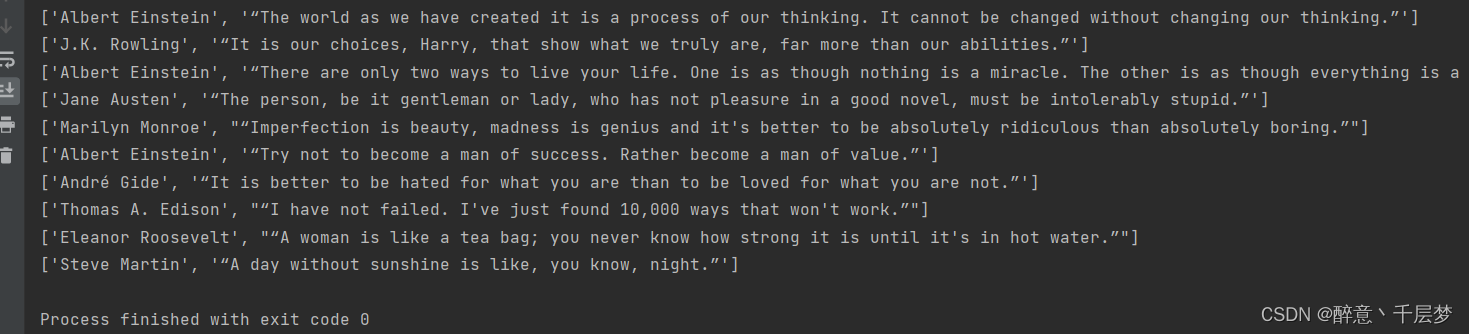

2. Scrape ten quotes from a given website

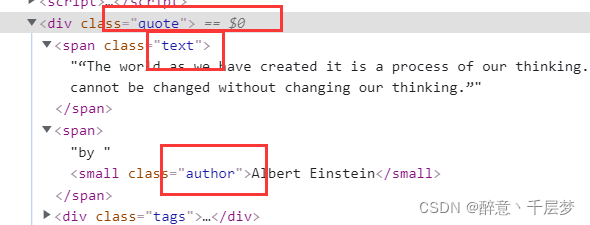

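Inspecting the page shows that every element with the class "quote" holds one quote.

Inside it, the element with the class "text" is the quote itself and the element with the class "author" is its author.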
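The class structure described above can be tried out without a browser. The following is a minimal sketch using only the standard library; `SAMPLE_HTML` is hypothetical markup written for this illustration (the real page may nest its elements differently), and `QuoteParser` and `extract_quotes` are names invented here.

```python
from html.parser import HTMLParser

# Hypothetical markup that mimics two rendered quote blocks; the real page may differ.
SAMPLE_HTML = """
<div class="quote">
  <span class="text">A sample quote.</span>
  <span>by <small class="author">Sample Author</small></span>
</div>
<div class="quote">
  <span class="text">Another sample quote.</span>
  <span>by <small class="author">Second Author</small></span>
</div>
"""


class QuoteParser(HTMLParser):
    """Collects [author, text] pairs from the elements inside class="quote"."""

    def __init__(self):
        super().__init__()
        self.rows = []       # finished [author, text] pairs
        self._field = None   # "text" or "author" while inside such an element
        self._current = {}   # fields collected for the quote being read

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "quote" in classes:
            self._current = {}          # a new quote block starts
        elif "text" in classes:
            self._field = "text"
        elif "author" in classes:
            self._field = "author"

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = self._current.get(self._field, "") + data

    def handle_endtag(self, tag):
        if self._field:
            self._field = None
            # Once both fields are present, the quote is complete.
            if "text" in self._current and "author" in self._current:
                self.rows.append([self._current["author"], self._current["text"]])
                self._current = {}


def extract_quotes(html):
    parser = QuoteParser()
    parser.feed(html)
    return parser.rows


quotes = extract_quotes(SAMPLE_HTML)
```

With Selenium the same lookup is done against the live DOM, which matters for this site because the quotes are rendered by JavaScript.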

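Implementation

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

    # Selenium 4 takes the chromedriver path through a Service object; the raw string keeps the backslashes literal.
    driver = webdriver.Chrome(service=Service(r"E:\GoogleDownload\chromedriver_win32\chromedriver.exe"))
    # Site that hosts the quotes
    driver.get("http://quotes.toscrape.com/js/")
    # CSV header row
    csvHeaders = ['Author', 'Quote']
    # All rows
    subjects = []
    # Every element with the class "quote"
    res_list = driver.find_elements(By.CLASS_NAME, "quote")
    # Pull the author and the text out of each quote
    for tmp in res_list:
        # One row: [author, quote text]
        subject = [
            tmp.find_element(By.CLASS_NAME, "author").text,
            tmp.find_element(By.CLASS_NAME, "text").text,
        ]
        print(subject)
        subjects.append(subject)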
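The snippet above builds a header list and a list of rows but stops at printing them. A minimal sketch of turning such rows into CSV follows; `quotes_to_csv` and `sample_rows` are hypothetical names and data used only for illustration, and the text is written to an in-memory buffer rather than to a file.

```python
import csv
import io


def quotes_to_csv(headers, rows):
    """Return CSV text: one header row followed by the data rows."""
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer)
    writer.writerow(headers)
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical rows standing in for the scraped `subjects` list.
sample_rows = [["Sample Author", "A quote, with a comma"]]
csv_text = quotes_to_csv(["Author", "Quote"], sample_rows)

# Reading the text back shows that the comma inside the quote survived the round trip.
parsed = list(csv.reader(io.StringIO(csv_text, newline="")))
```

To write to disk instead, the same writer calls can be pointed at a file opened with `newline=''`, which is the approach the JD example later in this post takes.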

Result

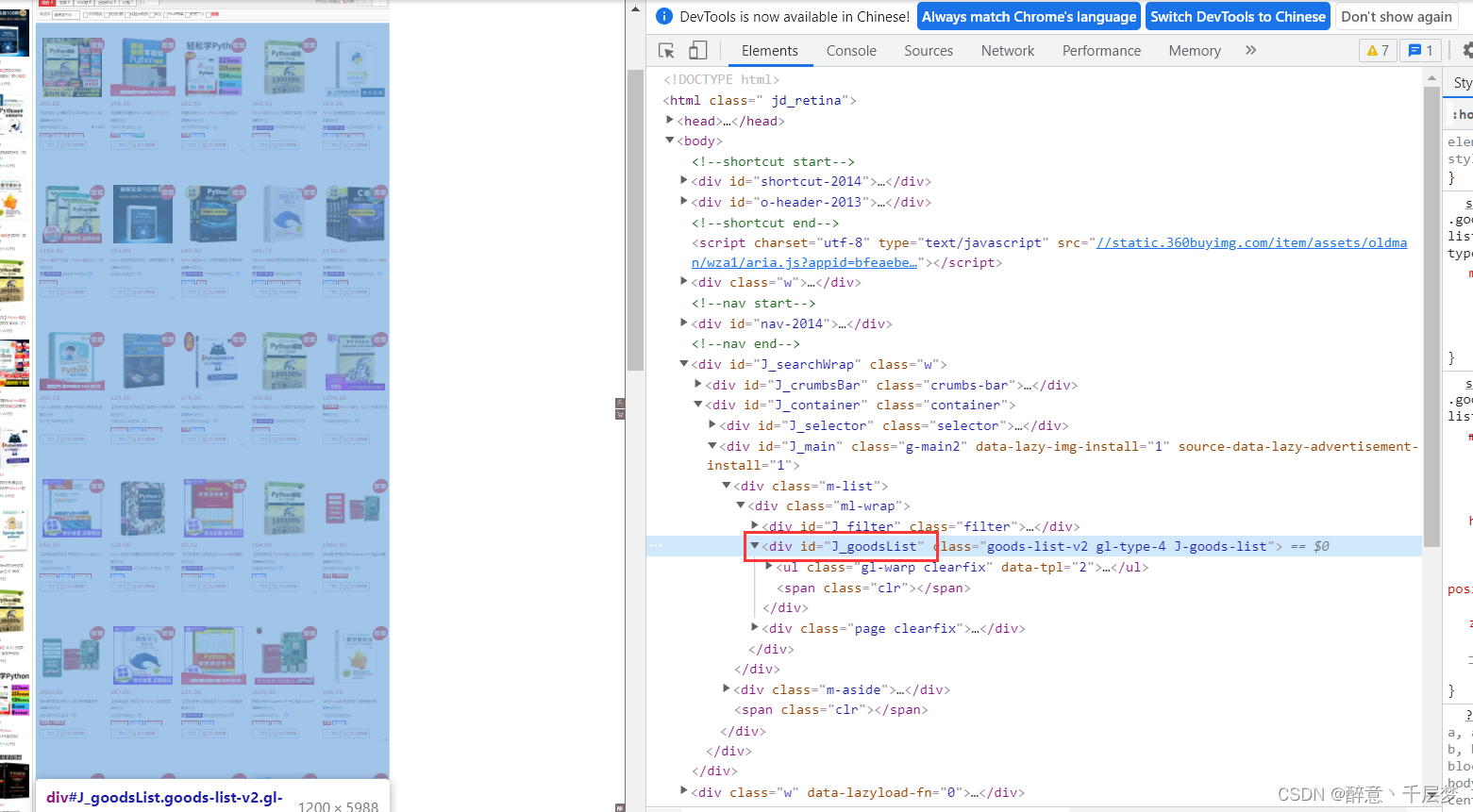

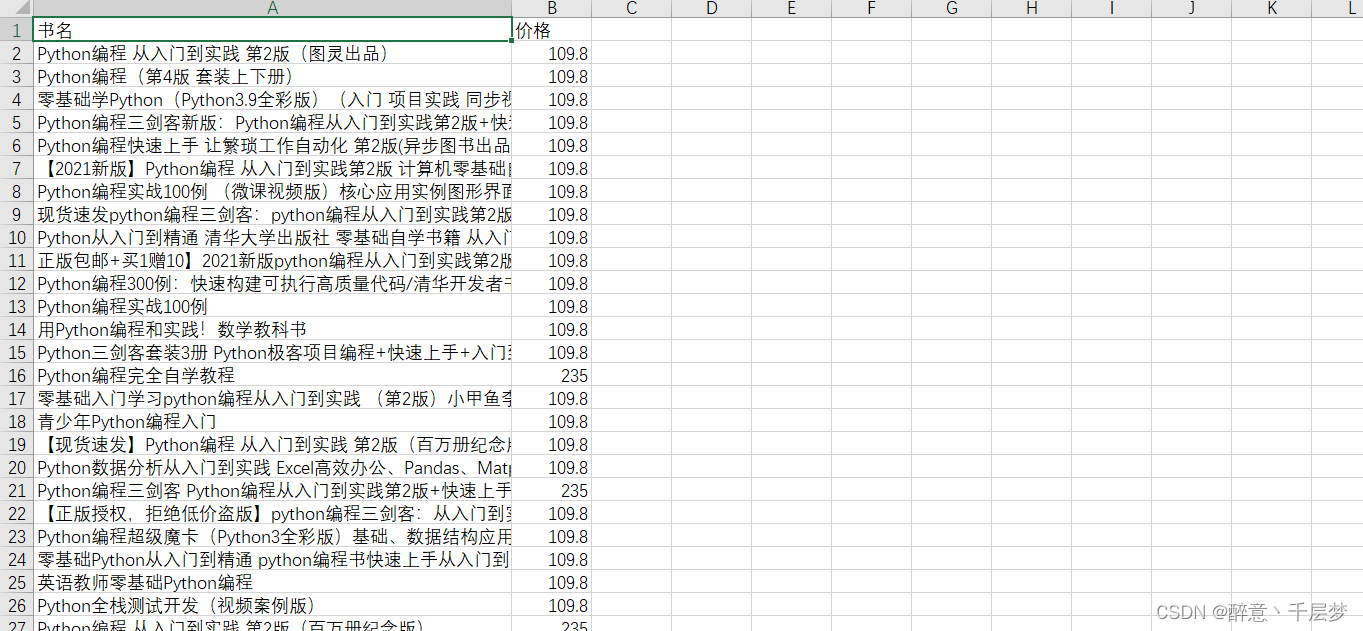

II. Scraping a specific kind of product from JD.com

1. Analyzing the page

Locate the search input box

Click the search button

Get the list that displays the books

Get each book in the list

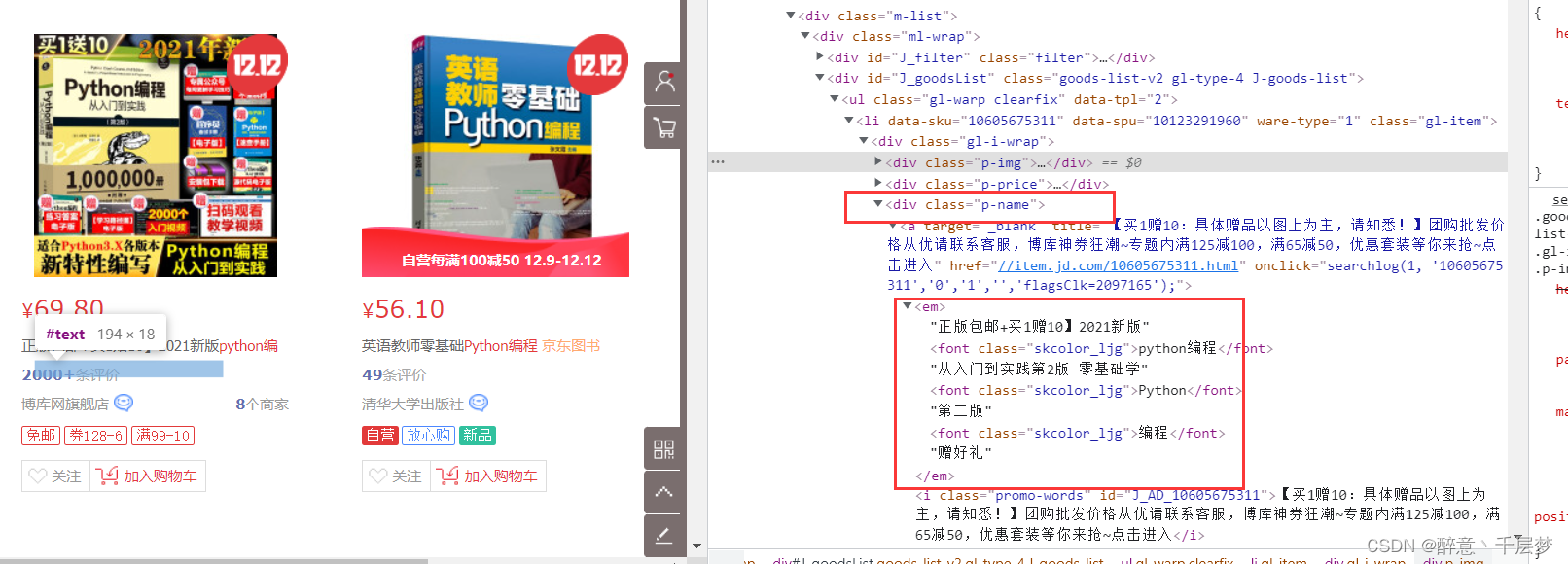

Book title

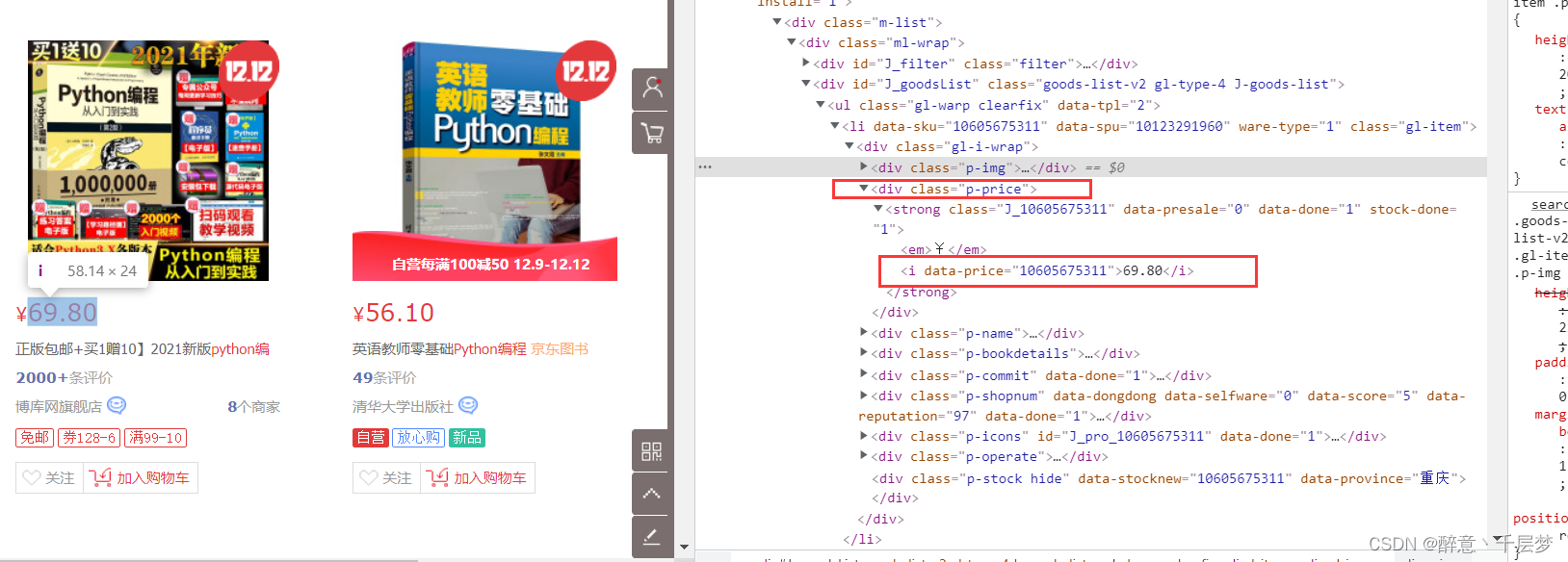

Price

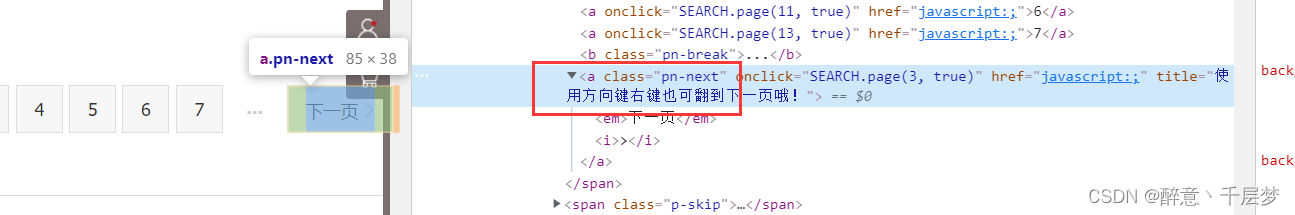

Go to the next page
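Taken together, the steps above describe a loop: read the books on the current page, record them, move to the next page, and stop once enough books have been collected. The sketch below shows only that control flow with plain Python data, assuming a dict keyed by title; `collect_books` and `fake_pages` are hypothetical and no browser is involved.

```python
def collect_books(pages, limit):
    """Merge (title, price) pairs page by page until `limit` distinct titles are stored."""
    books = {}
    for page in pages:                  # each page is a list of (title, price) pairs
        for title, price in page:
            books[title] = price        # a repeated title overwrites its earlier price
            if len(books) >= limit:
                return books            # enough books: do not visit further pages
    return books


# Hypothetical data standing in for two result pages.
fake_pages = [
    [("Book A", "59.00"), ("Book B", "79.00")],
    [("Book B", "75.00"), ("Book C", "99.00"), ("Book D", "45.00")],
]
result = collect_books(fake_pages, limit=3)
```

Keying the dict by title means a book that shows up on two pages is counted once, so the target count refers to distinct titles.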

2. Implementation

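    import csv
    import os
    import time

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

    # Selenium 4 takes the chromedriver path through a Service object; the raw string keeps the backslashes literal.
    driver = webdriver.Chrome(service=Service(r"E:\GoogleDownload\chromedriver_win32\chromedriver.exe"))
    driver.set_window_size(1920, 1080)
    # JD.com home page
    driver.get("https://www.jd.com/")
    # Type the search keyword ("python编程" means "Python programming")
    driver.find_element(By.ID, "key").send_keys("python编程")
    time.sleep(1)
    # Click the search button
    driver.find_element(By.CLASS_NAME, "button").click()
    time.sleep(1)
    # Get all windows and switch to the newest one
    windows = driver.window_handles
    driver.switch_to.window(windows[-1])
    time.sleep(1)
    # Height of the body, rounded down to a multiple of the 1000 px scroll step
    max_height = driver.execute_script('return document.body.scrollHeight')
    max_height = (int(max_height / 1000)) * 1000
    # All books: title -> price
    res_dict = {}
    # Number of books to collect
    num = 200
    while len(res_dict) < num:
        # Reset the scroll position counter after switching to a new page
        tmp_height = 1000
        while tmp_height < max_height and len(res_dict) < num:
            # Scroll down one step
            driver.execute_script("window.scrollBy(0,1000)")
            tmp_height += 1000
            # Book list
            J_goodsList = driver.find_element(By.ID, "J_goodsList")
            ul = J_goodsList.find_element(By.TAG_NAME, "ul")
            # Every book currently in the list
            res_list = ul.find_elements(By.TAG_NAME, "li")
            # Add the books that have not been recorded yet
            for res in res_list:
                # Title is the key, price is the value; the two fields are located in two different ways.
                # The XPath starts with "." so that it is evaluated relative to this <li>, not the whole page.
                title = res.find_element(By.CLASS_NAME, 'p-name').find_element(By.TAG_NAME, 'em').text
                res_dict[title] = res.find_element(By.XPATH, ".//div[@class='p-price']//i").text
                if len(res_dict) >= num:
                    break
            time.sleep(2)
        if len(res_dict) >= num:
            break
        # Parent element of the "next page" button
        J_bottomPage = driver.find_element(By.ID, "J_bottomPage")
        # Click the "next page" button
        J_bottomPage.find_element(By.CLASS_NAME, "pn-next").click()
        # Switch windows again
        windows = driver.window_handles
        driver.switch_to.window(windows[-1])
        time.sleep(3)
    # CSV header row
    csvHeaders = ['Title', 'Price']
    # Convert the dict into a list of [title, price] rows
    csvRows = [[key, value] for key, value in res_dict.items()]
    # Save the results; create the output directory first so that open() does not fail.
    # utf-8-sig writes a byte order mark, which helps spreadsheet tools detect the encoding.
    os.makedirs('./output', exist_ok=True)
    with open('./output/jd_books.csv', 'w', newline='', encoding='utf-8-sig') as file:
        fileWriter = csv.writer(file)
        fileWriter.writerow(csvHeaders)
        fileWriter.writerows(csvRows)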
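One detail of looking up a price per list item is easy to get wrong. In XPath, an expression that begins with `//` is evaluated against the whole document even when it is called on a single element, whereas `.//` is relative to that element. The sketch below illustrates the effect of the search scope with the standard-library `xml.etree.ElementTree` standing in for the browser DOM; `SAMPLE` is hypothetical, simplified markup, and the document-wide search is modeled by calling `find` on the root.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup standing in for the goods list.
SAMPLE = """
<ul>
  <li><div class="p-name"><em>Book A</em></div><div class="p-price"><strong><i>59.00</i></strong></div></li>
  <li><div class="p-name"><em>Book B</em></div><div class="p-price"><strong><i>79.00</i></strong></div></li>
</ul>
""".strip()

root = ET.fromstring(SAMPLE)
PRICE_PATH = ".//div[@class='p-price']//i"

# Searching from the document root returns the first price on the page every time.
from_root = [root.find(PRICE_PATH).text for _ in root.findall("li")]

# Searching from each <li> returns that item's own price.
per_item = {
    li.find(".//div[@class='p-name']/em").text: li.find(PRICE_PATH).text
    for li in root.findall("li")
}
```

If every scraped book ends up with the same price, a document-wide XPath is a likely cause.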

Result

The scraped data

III. Summary

Web scrapers are useful, and a scraping tool such as Selenium makes them easier still to write.