Preface

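Python crawler practice assignment: "Graduation is approaching and most students are under pressure to find a job, so finding a position they like quickly and sending off a résumé in time has become a real concern. Design and implement a crawler that scrapes job postings from a mainstream recruitment site (for example Lagou or 51job)." Am I hyping this too much?!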

I. Crawler overview

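Crawlers exist to gather information, and in the internet age they are everywhere. All right, let's get to the point; I can't keep the hype going.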

II. Project analysis

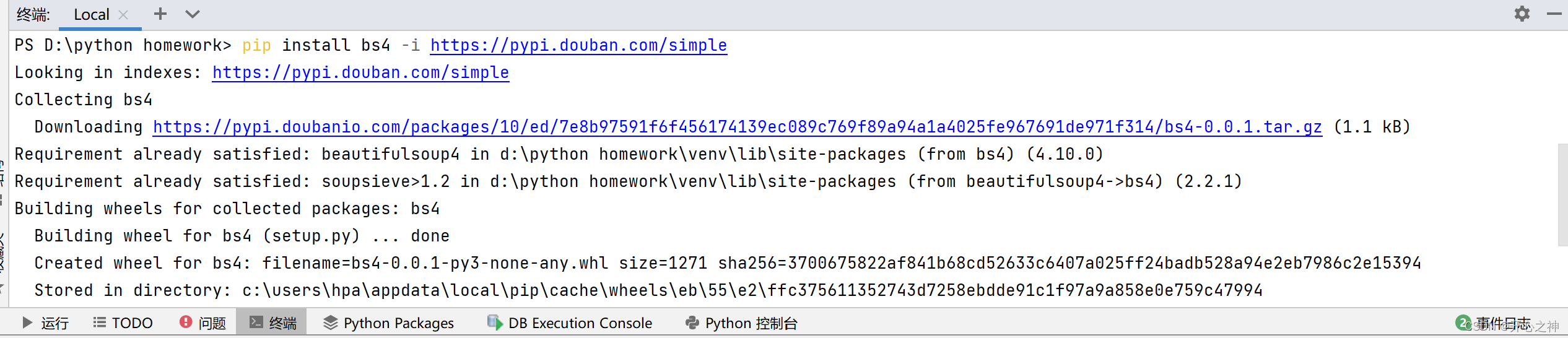

1. Importing the libraries

The code is as follows (example):

```python
from urllib import parse
import urllib.error
import urllib.request
import re
import random
import json
import xlwt
```

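Installing libraries: `pip install <package-name> -i https://pypi.douban.com/simple` (the `-i` option points pip at Douban's PyPI mirror). Of the imports above, only xlwt is a third-party package; urllib, re, random and json ship with Python.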

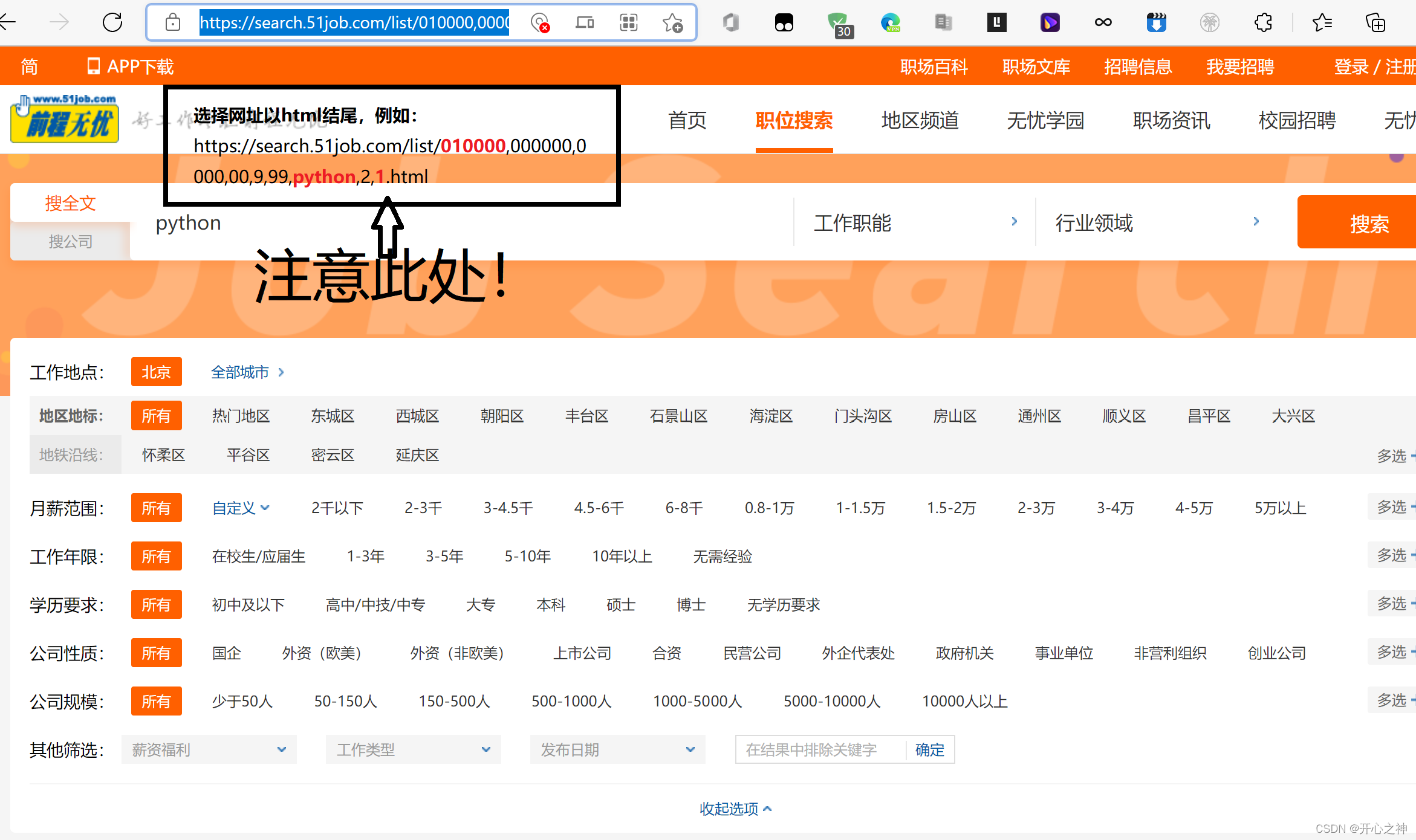

2. URL analysis

Target site: https://www.51job.com/

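Job search URL (the pattern used in the code of this post): https://search.51job.com/list/<area code>,000000,0000,00,9,99,<keyword>,2,<page>.html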

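In the figure above, at the spot marked "注意此处!" ("look here!"), the first red-highlighted part is the area code. Based on these codes we can write a function that looks up jobs in the area we want: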

```python
# Map a city name to its 51job area code
def add(p):
    # Keys are the Chinese city names the user types in; values are 51job area codes
    # ('珠三角' = Pearl River Delta, '全国' = nationwide)
    placelist = {'北京': '010000', '上海': '020000', '广州': '030200', '深圳': '040000', '武汉': '180200',
                 '西安': '200200', '杭州': '080200', '南京': '070200', '成都': '090200', '重庆': '060000',
                 '东莞': '030800', '大连': '230300', '沈阳': '230200', '苏州': '070300', '昆明': '250200',
                 '长沙': '190200', '合肥': '150200', '宁波': '080300', '郑州': '170200', '天津': '050000',
                 '青岛': '120300', '济南': '120200', '哈尔滨': '220200', '长春': '240200', '福州': '110200',
                 '珠三角': '01', '全国': '000000'}
    if p == "1":
        print("Searching jobs nationwide")
        return placelist['全国']
    if p in placelist:
        print("Searching jobs in %s" % p)
        return placelist[p]
    print("City not found; switched to a nationwide search")
    return placelist['全国']
```

The second part is the job keyword being searched for (it has to be URL-encoded twice with parse.quote()):

```python
keyword = parse.quote(parse.quote(kw))
```
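To see what the double encoding actually produces, here is a tiny check. `encode_keyword` is just a named wrapper (hypothetical, not in the original code) around the same `parse.quote(parse.quote(...))` call, and the sample keywords are arbitrary ("数据" means "data"). ASCII letters pass through unchanged, while Chinese characters and the "+" used for "all jobs" end up with every `%` from the first pass re-encoded as `%25`.

```python
from urllib import parse

def encode_keyword(kw: str) -> str:
    # First pass: UTF-8 percent-encoding ("数" -> "%E6%95%B0");
    # second pass: every "%" produced by the first pass becomes "%25"
    return parse.quote(parse.quote(kw))

print(encode_keyword("python"))  # python
print(encode_keyword("数据"))    # %25E6%2595%25B0%25E6%258D%25AE
print(encode_keyword("+"))       # %252B
```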

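The third part is the page number. We write a function that checks how many result pages exist for this area and keyword, which makes the crawl easier to plan: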

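```python
# Get the total number of result pages (place and keyword are globals set in __main__)
def ap():
    rlist = re.compile(r'window.__SEARCH_RESULT__ = (.*?)</script>', re.S)
    # Page 1 of the search results for the chosen area and keyword
    url = "https://search.51job.com/list/" + place + ",000000,0000,00,9,99," + keyword + ",2,1.html"
    html = askURL(url)
    rel = re.findall(rlist, html)
    if not rel:  # no embedded data: the request was blocked or the page layout changed
        return 0
    finddata = json.loads(rel[0])  # the JSON text captured by the regex
    allpage = finddata['total_page']
    return allpage
```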
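Once ap() has reported the page count, the pages can be visited one after another. The sketch below is one way to do that; `page_url` and `crawl_pages` are hypothetical helpers, `fetch` stands in for `askURL`, and the random 1 to 3 second pause between requests is an assumed politeness delay (the original code requests pages back to back). Note that `total_page` may come back from the JSON as a string, so convert it with `int()` first.

```python
import random
import time
from typing import Callable, Iterator, Tuple

def page_url(place_code: str, keyword: str, page: int) -> str:
    # Same URL pattern as ap()/get_link(); keyword must already be encoded twice
    return ("https://search.51job.com/list/" + place_code
            + ",000000,0000,00,9,99," + keyword + ",2," + str(page) + ".html")

def crawl_pages(fetch: Callable[[str], str], place_code: str, keyword: str,
                total_pages: int, delay: Tuple[float, float] = (1.0, 3.0)) -> Iterator[str]:
    # Visit pages 1..total_pages inclusive, sleeping a random interval between requests
    for page in range(1, total_pages + 1):
        if page > 1:
            time.sleep(random.uniform(*delay))
        yield fetch(page_url(place_code, keyword, page))

# Usage with the post's functions:
# for html in crawl_pages(askURL, place, keyword, int(ap())):
#     ...
```

Passing the fetch function in keeps the loop testable without network access: a stub that returns the URL it was given is enough to check which pages get requested.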

3. Crawling approach

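We build a GET request for the target URL with urllib.request.Request and send it with urllib.request.urlopen, which returns the response. 51job pages are encoded in GBK, so we decode the fetched source with 'gbk'. The decoded text is an ordinary string that regular expressions can search directly. If you need other content from the page you could parse it with BeautifulSoup, but regular expressions are enough here.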

```python
# Fetch the HTML source of a page
def askURL(url):
    # No proxy is configured: requests are sent from your own IP
    head = get_headers()  # random User-Agent
    request = urllib.request.Request(url, headers=head)
    html = ""
    try:
        response = urllib.request.urlopen(request)
        html = response.read().decode("gbk")  # 51job pages are GBK-encoded
    except urllib.error.URLError as e:
        if hasattr(e, "code"):    # HTTP status code, e.g. 403
            print(e.code)
        if hasattr(e, "reason"):
            print(e.reason)
    return html
```
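askURL() hard-codes GBK. A slightly more defensive variant, sketched here on the assumption that the server may announce its charset in the Content-Type header, reads that charset and falls back to GBK only when none is given. In askURL() the header value would come from `response.headers.get("Content-Type", "")`; `pick_charset` and `decode_page` are hypothetical helper names.

```python
from email.message import Message

def pick_charset(content_type: str, default: str = "gbk") -> str:
    # Parse the charset parameter out of a Content-Type header value (lower-cased)
    msg = Message()
    msg["Content-Type"] = content_type
    return msg.get_content_charset(default)

def decode_page(raw: bytes, content_type: str) -> str:
    # Decode with the announced charset, or GBK when none is announced;
    # errors="replace" keeps a few bad bytes from aborting the whole page
    return raw.decode(pick_charset(content_type), errors="replace")

print(decode_page("招聘".encode("gbk"), "text/html"))  # 招聘
```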

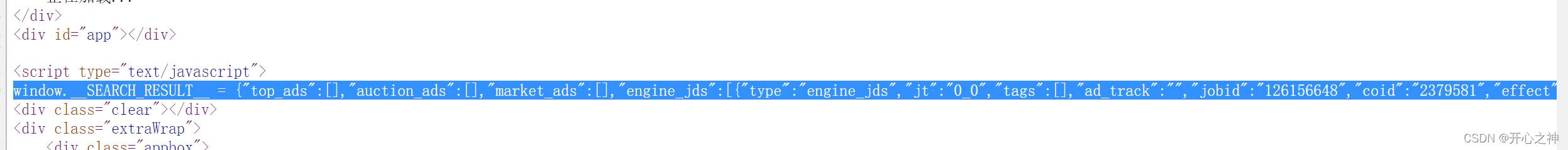

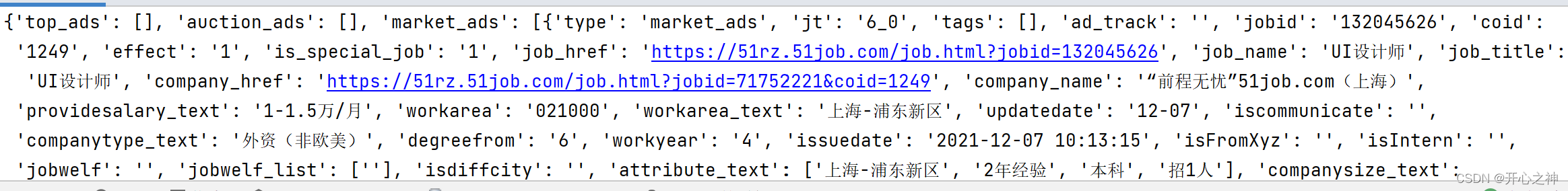

Viewing the page source shows that the data we need sits inside `window.__SEARCH_RESULT__`, so we extract it with a regular expression:

```python
rlist = re.compile(r'window.__SEARCH_RESULT__ = (.*?)</script>', re.S)
rel = re.findall(rlist, html)
```

Then we use json to turn the extracted text into a dictionary, so values can be looked up by key:

```python
finddata = json.loads(rel[0])  # rel[0] is the JSON text captured by the regex
```
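The extraction step can be tried offline on a made-up snippet. The sample HTML here is invented for illustration and only mimics the `window.__SEARCH_RESULT__ = {...}</script>` shape described above; real 51job pages carry many more fields, and the page layout may have changed since this post was written. `extract_search_result` is a hypothetical helper. Taking `rel[0]` rather than joining all matches avoids producing invalid JSON if the pattern ever matches twice.

```python
import json
import re

# Same pattern as in the post: capture everything between the assignment and </script>
rlist = re.compile(r'window.__SEARCH_RESULT__ = (.*?)</script>', re.S)

def extract_search_result(html: str) -> dict:
    rel = re.findall(rlist, html)
    if not rel:
        # No embedded data: the request was probably blocked or the layout changed
        return {}
    return json.loads(rel[0])

# Invented sample page, for illustration only
sample = ('<script type="text/javascript">\n'
          'window.__SEARCH_RESULT__ = {"total_page":"3","engine_jds":'
          '[{"job_name":"Python developer",'
          '"attribute_text":["Beijing","3-4 years","Bachelor","2 openings"]}]}'
          '</script>')
data = extract_search_result(sample)
print(data["total_page"])                 # 3
print(data["engine_jds"][0]["job_name"])  # Python developer
```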

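Next, look up the values you need by key (for example the job detail link, job title, salary, benefits, required experience, required education, company name, company location, expected headcount and posting date). Take whatever you want and store the values in a list so they are easy to save later.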

```python
# Pick out the fields we need: one 10-value row per job
Datalist = []
datalist = finddata["engine_jds"]
for i in datalist:
    # attribute_text: [location, experience, (education), headcount]
    attrs = i.get('attribute_text', [])
    data = [
        i.get('job_href') or " ",                    # job detail link
        i.get('job_name') or " ",                    # job title
        i.get('providesalary_text') or " ",          # salary
        i.get('jobwelf') or " ",                     # benefits
        attrs[1] if len(attrs) > 1 else " ",         # experience required
        attrs[2] if len(attrs) == 4 else " ",        # education required
        i.get('company_name') or " ",                # company name
        attrs[0] if attrs else " ",                  # company location
        attrs[-1] if len(attrs) in (3, 4) else " ",  # expected headcount
        i.get('updatedate') or " ",                  # posting date
    ]
    Datalist.append(data)  # a fresh row for every job
```
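The position-based handling of `attribute_text` is the fiddliest part. Judging by the code above, the list is assumed to hold [location, experience, education, headcount] when it has four entries, and [location, experience, headcount] when education is missing. The hypothetical helper `split_attributes` puts that rule in one place so it can be checked on its own; the sample lists are invented.

```python
from typing import List, Tuple

def split_attributes(attrs: List[str]) -> Tuple[str, str, str, str]:
    # Returns (location, experience, education, headcount); " " marks a missing value,
    # matching the placeholder used when building each row
    location = attrs[0] if len(attrs) > 0 else " "
    experience = attrs[1] if len(attrs) > 1 else " "
    education = attrs[2] if len(attrs) == 4 else " "
    headcount = attrs[-1] if len(attrs) in (3, 4) else " "
    return location, experience, education, headcount

print(split_attributes(["Beijing", "3-4 years", "Bachelor", "2 openings"]))
# ('Beijing', '3-4 years', 'Bachelor', '2 openings')
print(split_attributes(["Shanghai", "No experience", "5 openings"]))
# ('Shanghai', 'No experience', ' ', '5 openings')
```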

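Detail handling (so the host IP does not get banned): if we hit the same site many times, we can get blocked before we know it, so we lightly disguise the request headers to get past the anti-crawling checks. (Admittedly, against sites this big that is basically wasted effort, but it pads out the line count, so let's show off anyway!) Here is how we dress up: pick a random request header.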

```python
# Pick a random User-Agent header
def get_headers():
    user_agents = [
        "Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
        "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50",
        "Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36 Edg/95.0.1020.38",
        "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; en) Presto/2.8.131 Version/11.11",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    ]
    headers = {"User-Agent": random.choice(user_agents)}
    return headers
```
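Random headers are one half of the disguise; the other common measure is routing requests through a proxy. In urllib a proxy only takes effect when it is handed to `urllib.request.ProxyHandler` and built into an opener; a dict of proxy addresses that is never passed to urllib has no effect. A minimal sketch, assuming you have a working proxy: `make_opener` is a hypothetical helper and `127.0.0.1:8080` is a placeholder address. Free public proxies tend to be short-lived, so use one you actually control.

```python
import random
import urllib.request

USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36 Edg/95.0.1020.38",
    "Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11",
]

def make_opener(proxies: dict) -> urllib.request.OpenerDirector:
    # Hypothetical helper: every request made with this opener goes through `proxies`.
    # The User-Agent is chosen once per opener, not per request.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler(proxies))
    opener.addheaders = [("User-Agent", random.choice(USER_AGENTS))]
    return opener

# Placeholder proxy address; replace with one that actually works
opener = make_opener({"http": "http://127.0.0.1:8080", "https": "http://127.0.0.1:8080"})
# html = opener.open(url).read().decode("gbk")
```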

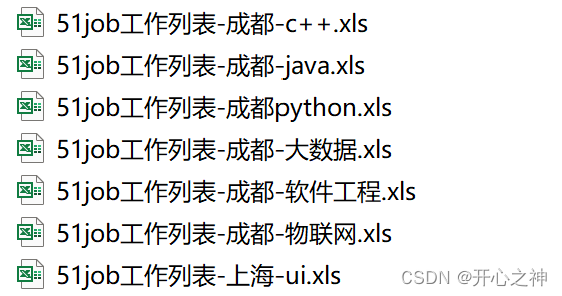

4. Saving the data

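Saving the data was a bit tricky. After piecing things together from various sources I finally got everything into an Excel sheet. My skills being limited, anything that would not write is simply caught as an exception and dropped. I also wanted to put the data into a database, but gave up on that because it was too hard.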

```python
# Save to an Excel sheet (.xls)
def saveData(datalist, savepath, n):
    print("Saving....")
    book = xlwt.Workbook(encoding="utf-8", style_compression=0)
    sheet = book.add_sheet('51job', cell_overwrite_ok=True)
    col = ("Job detail link", "Job title", "Salary", "Benefits", "Experience required",
           "Education required", "Company name", "Company location", "Expected headcount", "Posting date")
    for i in range(len(col)):
        sheet.write(0, i, col[i])  # header row
    for i in range(n):  # n is the number of rows in datalist
        print("Row %d" % (i + 1))
        data = datalist[i]
        for j in range(len(col)):
            try:
                sheet.write(i + 1, j, data[j])
            except Exception:
                pass  # skip any cell that cannot be written
    book.save(savepath)
```
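Since a database was on the wish list, here is how short that can be with Python's built-in `sqlite3` module. This is a sketch assuming each row is the 10-field list built earlier; the table name `jobs`, its column names and `save_to_sqlite` are made up for this example. Parameterized `?` placeholders let sqlite3 handle quoting, so titles containing quotes or commas cannot break the insert.

```python
import sqlite3
from typing import List, Sequence

COLUMNS = ("job_href", "job_name", "salary", "benefits", "experience",
           "education", "company_name", "workplace", "headcount", "update_date")

def save_to_sqlite(rows: List[Sequence[str]], db_path: str = "51job.db") -> int:
    # Create the table if needed, insert every row, and return the table's row count
    conn = sqlite3.connect(db_path)
    try:
        conn.execute("CREATE TABLE IF NOT EXISTS jobs (%s)"
                     % ", ".join(c + " TEXT" for c in COLUMNS))
        placeholders = ", ".join("?" * len(COLUMNS))
        # Pad or trim each row to exactly 10 values so a short row cannot fail the insert
        fixed = [(list(r) + [" "] * len(COLUMNS))[:len(COLUMNS)] for r in rows]
        conn.executemany("INSERT INTO jobs VALUES (%s)" % placeholders, fixed)
        conn.commit()
        return conn.execute("SELECT COUNT(*) FROM jobs").fetchone()[0]
    finally:
        conn.close()

# ":memory:" gives a throwaway database, handy for trying it out
print(save_to_sqlite([["link", "Python developer", "10k", " ", "1 year", "Bachelor",
                       "ACME", "Beijing", "2 openings", "11-22"]], ":memory:"))  # 1
```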

5. Results

A few of the Excel sheets produced by the crawler

6. Source code

```python
# -*- coding: utf-8 -*-
# @time : 2021/11/22 21:38
# @Author : 开心之神
# @File : test.py
# @Software: PyCharm

# Libraries used
from urllib import parse
import urllib.error
import urllib.request
import re
import random
import json
import xlwt


def main():
    datalist = get_link()
    n = len(datalist)
    # Save to Excel
    savepath = "51job_jobs-%s-%s.xls" % (pe, kw)
    saveData(datalist, savepath, n)


# Get the total number of result pages (place and keyword are globals set in __main__)
def ap():
    rlist = re.compile(r'window.__SEARCH_RESULT__ = (.*?)</script>', re.S)
    url = "https://search.51job.com/list/" + place + ",000000,0000,00,9,99," + keyword + ",2,1.html"
    html = askURL(url)
    rel = re.findall(rlist, html)
    if not rel:  # no embedded data: blocked, or the page layout changed
        return 0
    finddata = json.loads(rel[0])
    return finddata['total_page']


# Fetch the listings page by page and build one 10-field row per job
def get_link():
    Datalist = []
    seen = set()
    rlist = re.compile(r'window.__SEARCH_RESULT__ = (.*?)</script>', re.S)
    for page in range(1, pgg):
        url = ("https://search.51job.com/list/" + place + ",000000,0000,00,9,99,"
               + keyword + ",2," + str(page) + ".html")
        html = askURL(url)
        rel = re.findall(rlist, html)
        if not rel:
            continue  # skip pages that returned no search data
        finddata = json.loads(rel[0])
        for i in finddata["engine_jds"]:
            # attribute_text: [location, experience, (education), headcount]
            attrs = i.get('attribute_text', [])
            data = [
                i.get('job_href') or " ",                    # job detail link
                i.get('job_name') or " ",                    # job title
                i.get('providesalary_text') or " ",          # salary
                i.get('jobwelf') or " ",                     # benefits
                attrs[1] if len(attrs) > 1 else " ",         # experience required
                attrs[2] if len(attrs) == 4 else " ",        # education required
                i.get('company_name') or " ",                # company name
                attrs[0] if attrs else " ",                  # company location
                attrs[-1] if len(attrs) in (3, 4) else " ",  # expected headcount
                i.get('updatedate') or " ",                  # posting date
            ]
            # Drop duplicate postings while keeping the original order
            if tuple(data) not in seen:
                seen.add(tuple(data))
                Datalist.append(data)
    return Datalist


# Map a city name to its 51job area code
def add(p):
    # Keys are the Chinese city names the user types in; values are 51job area codes
    # ('珠三角' = Pearl River Delta, '全国' = nationwide)
    placelist = {'北京': '010000', '上海': '020000', '广州': '030200', '深圳': '040000', '武汉': '180200',
                 '西安': '200200', '杭州': '080200', '南京': '070200', '成都': '090200', '重庆': '060000',
                 '东莞': '030800', '大连': '230300', '沈阳': '230200', '苏州': '070300', '昆明': '250200',
                 '长沙': '190200', '合肥': '150200', '宁波': '080300', '郑州': '170200', '天津': '050000',
                 '青岛': '120300', '济南': '120200', '哈尔滨': '220200', '长春': '240200', '福州': '110200',
                 '珠三角': '01', '全国': '000000'}
    if p == "1":
        print("Searching jobs nationwide")
        return placelist['全国']
    if p in placelist:
        print("Searching jobs in %s" % p)
        return placelist[p]
    print("City not found; switched to a nationwide search")
    return placelist['全国']


# Pick a random User-Agent header
def get_headers():
    user_agents = [
        "Mozilla/5.0 (Windows NT 6.1; rv:2.0.1) Gecko/20100101 Firefox/4.0.1",
        "Mozilla/5.0 (Windows; U; Windows NT 6.1; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50",
        "Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36 Edg/95.0.1020.38",
        "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; en) Presto/2.8.131 Version/11.11",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    ]
    return {"User-Agent": random.choice(user_agents)}


# Fetch the HTML source of a page (no proxy: requests are sent from your own IP)
def askURL(url):
    request = urllib.request.Request(url, headers=get_headers())
    html = ""
    try:
        response = urllib.request.urlopen(request)
        html = response.read().decode("gbk")  # 51job pages are GBK-encoded
    except urllib.error.URLError as e:
        if hasattr(e, "code"):
            print(e.code)
        if hasattr(e, "reason"):
            print(e.reason)
    return html


# Save to an Excel sheet (.xls)
def saveData(datalist, savepath, n):
    print("Saving....")
    book = xlwt.Workbook(encoding="utf-8", style_compression=0)
    sheet = book.add_sheet('51job', cell_overwrite_ok=True)
    col = ("Job detail link", "Job title", "Salary", "Benefits", "Experience required",
           "Education required", "Company name", "Company location", "Expected headcount", "Posting date")
    for i in range(len(col)):
        sheet.write(0, i, col[i])  # header row
    for i in range(n):  # n is the number of rows in datalist
        print("Row %d" % (i + 1))
        data = datalist[i]
        for j in range(len(col)):
            try:
                sheet.write(i + 1, j, data[j])
            except Exception:
                pass  # skip any cell that cannot be written
    book.save(savepath)


if __name__ == "__main__":
    kw = input("Enter a job keyword to search for (enter + to list all jobs): ")
    keyword = parse.quote(parse.quote(kw))  # 51job expects the keyword URL-encoded twice
    pe = input("Enter the city to search, e.g. 北京 (enter 1 for nationwide): ")
    place = add(pe)
    print("%s pages in total" % ap())
    pgg = input("Enter the number of pages to crawl: ")
    pgg = int(pgg) + 1  # so that range(1, pgg) covers pages 1..N
    main()
    print("Crawling and saving finished!")
```

III. Summary

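I learned a lot from this project; exactly how much, I'm not telling. That's all for today. This post only briefly covers scraping job postings from 51job and does no data visualization: this was a practice project done in teams of two, so the harder part went to my teammate. If you want to see the data analysis, follow the link below. This is my first blog post and I don't know much about layout yet, so please bear with me. If anything in the post is wrong, please leave a comment and correct me. Thanks, everyone! (A newbie, peeking around timidly.)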