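Preface: lately, people who are new to Pyppeteer keep asking me questions, so since I had some free time today I wrote a demo for your reference.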

Installing Pyppeteer is skipped here; please refer to the relevant documentation.

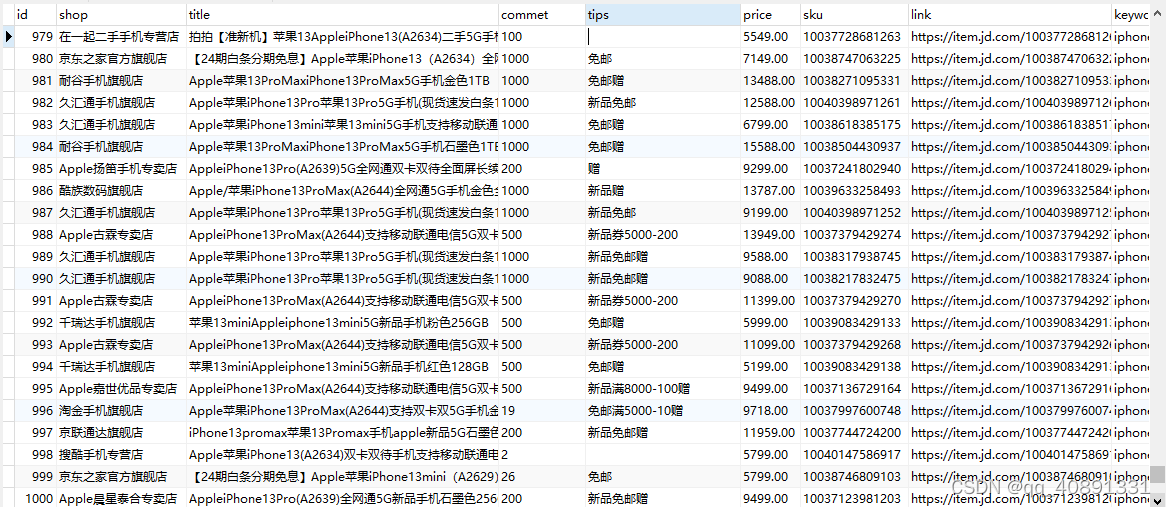

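Scenario for the code below: the user enters a keyword, and the script collects and stores the SKU, title, price, shop name, review count, promotions, and link of every product returned for that keyword.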
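Each search result therefore becomes one record. As a sketch that is not part of the original script, the fields can be grouped in a dataclass whose `as_row()` method returns them in the column order of the script's `insert into JDshoplist(title, shop, link, price, commet, tips, keyword)` statement. The class and method names are hypothetical, and prices and counts are kept as the raw page text.

```python
from dataclasses import dataclass


@dataclass
class Product:
    """One scraped search result (hypothetical container, not used by the script)."""
    sku: str
    title: str
    price: str
    shop: str
    comment: str   # review-count text as shown on the page
    tips: str      # promotion tags
    link: str
    keyword: str   # the search keyword that produced this result

    def as_row(self):
        """Values in the column order of the script's INSERT (SKU is not one of those columns)."""
        return (self.title, self.shop, self.link, self.price,
                self.comment, self.tips, self.keyword)


p = Product(sku="100012345", title="iPhone 13", price="5999.00", shop="Apple",
            comment="100+", tips="", link="//item.jd.com/100012345.html", keyword="iphone13")
print(p.as_row())
```

Keeping the row order in one method means the INSERT statement and the values passed to it cannot drift apart.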

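Note: review details are not collected. Once the script has the SKU, fetching the review details couldn't be easier, so I'll leave that out. (Of course, if a boss has some freelance work for me, I'm happy to help.)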
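Since the SKU is what identifies a product, any follow-up request (such as one for reviews) can start from it. A small sketch of converting between a SKU and its product page, assuming product links have the form `//item.jd.com/<sku>.html`; that link pattern and both helper names are assumptions, and no review endpoint is shown.

```python
import re
from typing import Optional

# Assumed link shape: optional "http:"/"https:", then //item.jd.com/<digits>.html
ITEM_LINK = re.compile(r"^(?:https?:)?//item\.jd\.com/(\d+)\.html")


def sku_from_link(link: str) -> Optional[str]:
    """Return the numeric SKU embedded in a product link, or None if the link has another shape."""
    match = ITEM_LINK.match(link)
    return match.group(1) if match else None


def link_from_sku(sku: str) -> str:
    """Build an absolute product-page URL for a SKU (hypothetical helper)."""
    return "https://item.jd.com/%s.html" % sku


print(sku_from_link("//item.jd.com/100012345.html"))  # 100012345
print(link_from_sku("100012345"))  # https://item.jd.com/100012345.html
```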

Code:

# coding: utf-8
import asyncio
import random
import tkinter

# Third-party packages: pip install pyppeteer pymysql beautifulsoup4 lxml
import pymysql
from bs4 import BeautifulSoup
from pyppeteer import launch

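def screen_size():
    # Use a throwaway Tk root window to read the screen resolution
    tk = tkinter.Tk()
    width = tk.winfo_screenwidth()
    height = tk.winfo_screenheight()
    tk.destroy()  # quit() only stops a mainloop; destroy() actually closes the window
    return width, height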

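async def awaiting(page):
    # Scroll down one viewport per second, six times, so that lazily loaded
    # items further down the result page get rendered
    for _ in range(6):
        await asyncio.sleep(1)
        await page.evaluate('window.scrollBy(0, window.innerHeight)')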

async def text_of(page, selector, prop="innerText"):
    # Return a DOM property (innerText by default) of the first element matching
    # selector, or "" if there is no such element, instead of raising in the page
    return await page.evaluate(
        "(sel, prop) => { const el = document.querySelector(sel); return el ? el[prop] : ''; }",
        selector, prop)


async def openPage(url, keyword):
    browser = await launch({
        "headless": False,
        "args": [
            "--disable-extensions",
            "--hide-scrollbars",
            "--disable-bundled-ppapi-flash",
            "--mute-audio",
            "--no-sandbox",
            "--start-maximized",
            "--disable-dev-shm-usage",
            "--disable-setuid-sandbox",
            "--disable-gpu",
            "--disable-infobars",
        ],
    })
    context = await browser.createIncognitoBrowserContext()
    print("Browser opened")
    page = await context.newPage()

    # Set viewport, user agent and the webdriver override before navigating;
    # evaluateOnNewDocument re-applies the override on every page load (e.g. the results page)
    width, height = screen_size()
    await page.setViewport({"width": width, "height": height})
    await page.setUserAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                            "(KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36")
    await page.evaluateOnNewDocument(
        "() => { Object.defineProperties(navigator, { webdriver: { get: () => false } }) }")
    await page.goto(url)
    # await page.screenshot({'path': str(random.randint(10000, 99999)) + ".png"})

    # Type the keyword with a random 50-100 ms delay per keystroke, then search
    await page.type("#key", keyword, {"delay": random.randint(50, 100)})
    await page.click(".button")
    await page.waitForSelector("#J_bottomPage")  # wait until the results page (with pagination) exists
    await asyncio.sleep(random.randint(1, 2))
    await awaiting(page)

    pageNumber = await page.evaluate(
        "document.querySelector('#J_bottomPage > span.p-skip > em:nth-child(1) > b').innerText")
    print("%s pages in total" % pageNumber)

    # Replace with your own MySQL credentials and database name
    db = pymysql.connect(host="127.0.0.1", port=3306, user="MySQL username",
                         password="MySQL password", database="database name", charset="utf8mb4")
    cursor = db.cursor()
    # Bound parameters: pymysql escapes each value, so quotes in a title cannot break the SQL
    sql = ("insert into JDshoplist(title, shop, link, price, commet, tips, keyword) "
           "values (%s, %s, %s, %s, %s, %s, %s)")
    try:
        pages = int(pageNumber)
        for i in range(pages):
            await awaiting(page)  # scroll so that lazily loaded items are rendered
            count = await page.evaluate("document.querySelector('.gl-warp.clearfix').childElementCount")
            print("%s items on this page" % count)

            for y in range(1, count + 1):  # :nth-child() is 1-based
                await asyncio.sleep(1)
                item = "#J_goodsList > ul > li:nth-child(%d) > div" % y

                # The <strong> in .p-price carries the SKU as a class name such as "J_100012345"
                price_html = await text_of(page, item + " > div.p-price", "innerHTML")
                strong = BeautifulSoup(price_html, "lxml").find("strong")
                classes = strong.get("class") if strong is not None else None
                if not classes:
                    continue  # no price block: skip this entry
                sku = classes[0]
                # Remove the literal "J_" prefix (strip("J_") would trim characters, not the prefix)
                sku = sku[len("J_"):] if sku.startswith("J_") else sku

                price = await text_of(page, item + " > div.p-price > strong > i")
                name_html = await text_of(page, item + " > div.p-name.p-name-type-2", "innerHTML")
                anchor = BeautifulSoup(name_html, "lxml").find("a")
                link = anchor.get("href", "") if anchor is not None else ""
                title = await text_of(page, item + " > div.p-name.p-name-type-2 > a > em")
                comment = await text_of(page, item + " > div.p-commit")
                shop = await text_of(page, item + " > div.p-shop > span > a")
                tips = await text_of(page, "#J_pro_%s" % sku)  # promotion tags, located by SKU

                cursor.execute(sql, (title, shop, link, price, comment, tips, keyword))
                db.commit()

            print("Finished page {} of {}: {} items on this page.".format(i + 1, pages, count))
            if i < pages - 1:  # the last page has no next page to click
                await page.click(".pn-next")
    finally:
        cursor.close()
        db.close()
        await browser.close()

if __name__ == '__main__':
    url = "https://www.jd.com/"
    keyword = "iphone13"
    loop = asyncio.get_event_loop()
    loop.run_until_complete(openPage(url, keyword))
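On the results page the SKU is read from a class name of the form `J_<sku>`. Note that `str.strip("J_")` does not remove the prefix `J_`: it removes every leading and trailing `J` or `_` character. That happens to work for purely numeric SKUs but is not prefix removal in general, as this small comparison shows. The helper name is illustrative; on Python 3.9+ `str.removeprefix` does the same job.

```python
def remove_prefix(text: str, prefix: str) -> str:
    """Drop prefix from the start of text once, if present (like str.removeprefix in 3.9+)."""
    return text[len(prefix):] if text.startswith(prefix) else text


# strip() treats its argument as a set of characters and trims them from both ends
print("J_J_2".strip("J_"))                 # 2
print(remove_prefix("J_J_2", "J_"))        # J_2
print(remove_prefix("J_100012345", "J_"))  # 100012345
```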

Database:
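A minimal stand-in for the `JDshoplist` table, assuming every column is stored as text (the real MySQL column types are an assumption here). The sketch uses an in-memory SQLite database from the standard library so it runs without a server, and inserts rows with bound parameters. SQLite uses `?` placeholders where pymysql uses `%s`, but the principle is the same: the driver escapes each value, so a title containing double quotes cannot break the statement the way a `%`-formatted SQL string can.

```python
import sqlite3

# Column names as written by the script; all types are assumed to be text
SCHEMA = """create table JDshoplist (
    title text, shop text, link text, price text,
    commet text, tips text, keyword text)"""

# sqlite3 uses "?" placeholders; pymysql uses "%s" for the same purpose
INSERT = ("insert into JDshoplist(title, shop, link, price, commet, tips, keyword) "
          "values (?, ?, ?, ?, ?, ?, ?)")


def save_rows(conn, rows):
    """Insert scraped rows with bound parameters so the driver handles quoting."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(INSERT, rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    tricky = 'iPhone 13 "Pro" 256GB'  # embedded quotes would break a %-formatted SQL string
    save_rows(conn, [(tricky, "Apple", "//item.jd.com/1.html", "5999.00", "100+", "", "iphone13")])
    print(conn.execute("select title from JDshoplist").fetchone()[0])  # iPhone 13 "Pro" 256GB
```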