1. Concepts

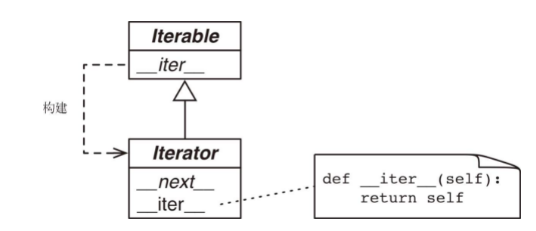

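An iterable is an object that can be iterated over. We obtain its iterator with the built-in `iter()` function; to support this, the iterable implements `__iter__`, which returns an associated iterator.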

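An iterator does the actual element-by-element traversal: its `__next__` method returns the associated data one element at a time. It also implements `__iter__` (returning itself) so that it can be used anywhere an iterable is expected.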

A generator is a kind of iterator that produces data lazily: each element is computed only when it is actually requested.
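The three roles can be checked with the abstract base classes in `collections.abc`. This is a minimal sketch; `gen_squares` is a hypothetical helper used only for illustration.

```python
from collections.abc import Generator, Iterable, Iterator


def gen_squares(n):
    """Hypothetical generator function: yields squares lazily."""
    for i in range(n):
        yield i * i


nums = [1, 2, 3]
it = iter(nums)       # the list is an iterable; iter() returns its iterator
g = gen_squares(3)    # calling a generator function returns a generator

print(isinstance(nums, Iterable), isinstance(nums, Iterator))  # True False
print(isinstance(it, Iterator), iter(it) is it)                # True True
print(isinstance(g, Generator), isinstance(g, Iterator))       # True True
print(next(it), next(g), next(g))                              # 1 0 1
```

The list is iterable but not an iterator. Its iterator, like the generator, returns itself from `iter()`.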

2. Iterability of Sequences

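Python's built-in sequences can all be iterated with `for`. For any object, the interpreter calls `iter()` to get an iterator. If the object does not implement `__iter__` but does implement `__getitem__`, `iter()` still succeeds: it creates an iterator that calls `__getitem__` with the indexes 0, 1, 2, … until `IndexError` is raised.

That is why the following class, which implements only `__getitem__`, can be iterated. The `dis` output at the end shows that the `for` loop compiles to `GET_ITER` (the bytecode equivalent of calling `iter()`) followed by repeated `FOR_ITER` instructions. The `while` loop makes the same calls explicitly through `iter()` and `next()`.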

```python
import re
from dis import dis


class WordAnalyzer:
    reg_word = re.compile(r'\w+')  # raw string: avoids the invalid "\w" escape warning

    def __init__(self, text):
        self.words = self.__class__.reg_word.findall(text)

    # Only __getitem__ is defined, no __iter__: iter() falls back to calling
    # __getitem__(0), __getitem__(1), ... until IndexError is raised.
    def __getitem__(self, index):
        return self.words[index]


def iter_word_analyzer():
    wa = WordAnalyzer('this is mango word analyzer')
    print('start for wa')
    for w in wa:
        print(w)

    # The same traversal written out by hand: iter() + next() + StopIteration
    print('start while wa_iter')
    wa_iter = iter(wa)
    while True:
        try:
            print(next(wa_iter))
        except StopIteration as e:
            break


iter_word_analyzer()
dis(iter_word_analyzer)

# Output. The dis listing was produced by CPython 3.9; other versions emit
# different bytecode, and the first column holds line numbers from the
# author's original source file.
#
# start for wa
# this
# is
# mango
# word
# analyzer
# start while wa_iter
# this
# is
# mango
# word
# analyzer
#  15           0 LOAD_GLOBAL              0 (WordAnalyzer)
#               2 LOAD_CONST               1 ('this is mango word analyzer')
#               4 CALL_FUNCTION            1
#               6 STORE_FAST               0 (wa)
#
#  16           8 LOAD_GLOBAL              1 (print)
#              10 LOAD_CONST               2 ('start for wa')
#              12 CALL_FUNCTION            1
#              14 POP_TOP
#
#  17          16 LOAD_FAST                0 (wa)
#              18 GET_ITER
#         >>   20 FOR_ITER                12 (to 34)
#              22 STORE_FAST               1 (w)
#
#  18          24 LOAD_GLOBAL              1 (print)
#              26 LOAD_FAST                1 (w)
#              28 CALL_FUNCTION            1
#              30 POP_TOP
#              32 JUMP_ABSOLUTE           20
#
#  20     >>   34 LOAD_GLOBAL              1 (print)
#              36 LOAD_CONST               3 ('start while wa_iter')
#              38 CALL_FUNCTION            1
#              40 POP_TOP
#
#  21          42 LOAD_GLOBAL              2 (iter)
#              44 LOAD_FAST                0 (wa)
#              46 CALL_FUNCTION            1
#              48 STORE_FAST               2 (wa_iter)
#
#  23     >>   50 SETUP_FINALLY           16 (to 68)
#
#  24          52 LOAD_GLOBAL              1 (print)
#              54 LOAD_GLOBAL              3 (next)
#              56 LOAD_FAST                2 (wa_iter)
#              58 CALL_FUNCTION            1
#              60 CALL_FUNCTION            1
#              62 POP_TOP
#              64 POP_BLOCK
#              66 JUMP_ABSOLUTE           50
#
#  25     >>   68 DUP_TOP
#              70 LOAD_GLOBAL              4 (StopIteration)
#              72 JUMP_IF_NOT_EXC_MATCH   114
#              74 POP_TOP
#              76 STORE_FAST               3 (e)
#              78 POP_TOP
#              80 SETUP_FINALLY           24 (to 106)
#
#  26          82 POP_BLOCK
#              84 POP_EXCEPT
#              86 LOAD_CONST               0 (None)
#              88 STORE_FAST               3 (e)
#              90 DELETE_FAST              3 (e)
#              92 JUMP_ABSOLUTE          118
#              94 POP_BLOCK
#              96 POP_EXCEPT
#              98 LOAD_CONST               0 (None)
#             100 STORE_FAST               3 (e)
#             102 DELETE_FAST              3 (e)
#             104 JUMP_ABSOLUTE           50
#         >>  106 LOAD_CONST               0 (None)
#             108 STORE_FAST               3 (e)
#             110 DELETE_FAST              3 (e)
#             112 RERAISE
#         >>  114 RERAISE
#             116 JUMP_ABSOLUTE           50
#         >>  118 LOAD_CONST               0 (None)
#             120 RETURN_VALUE
```
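The `__getitem__` fallback has one practical consequence. `isinstance(obj, collections.abc.Iterable)` only checks for `__iter__`, so it reports such classes as non-iterable even though `iter()` accepts them. The `collections.abc` documentation recommends calling `iter()` as the reliable check. Here is a minimal sketch with a hypothetical `Squares` class and a hypothetical `is_iterable` helper:

```python
from collections.abc import Iterable


class Squares:
    """Hypothetical sequence-like class: only __getitem__, no __iter__."""

    def __getitem__(self, index):
        if index >= 5:
            raise IndexError(index)  # tells the fallback iterator to stop
        return index * index


def is_iterable(obj):
    """Hypothetical helper: ask iter() itself, which honors the fallback."""
    try:
        iter(obj)
    except TypeError:
        return False
    return True


sq = Squares()
print(list(sq))                   # [0, 1, 4, 9, 16]
print(isinstance(sq, Iterable))   # False: the ABC only looks for __iter__
print(is_iterable(sq), is_iterable(42))  # True False
```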

3. The Classic Iterator Pattern

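A standard iterator implements two interface methods: `__next__`, which returns the next element, and `__iter__`, which returns `self`.

Once all elements have been consumed, the iterator raises `StopIteration`. Python's `for` loops, list comprehensions, tuple unpacking and similar constructs handle this exception automatically.

Implementing `__iter__` on the iterator (returning `self`) lets an iterator be used wherever an iterable is expected, for example directly in a `for` loop.

An iterator can be traversed only once. To iterate again, call `iter()` on the iterable again to get a new iterator. Each iterator therefore has to keep its own internal state, such as its current position. This is why an iterable should not act as its own iterator (it should not implement `__next__`): otherwise it could only be traversed once and could not support several independent traversals.

In terms of the classic object-oriented design pattern, the iterable can create a new associated iterator at any time, while the iterator handles the actual traversal of the elements.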

```python
import re


class WordAnalyzer:
    """Iterable: hands out a fresh iterator on every call to iter()."""
    reg_word = re.compile(r'\w+')

    def __init__(self, text):
        self.words = self.__class__.reg_word.findall(text)

    def __iter__(self):
        return WordAnalyzerIterator(self.words)


class WordAnalyzerIterator:
    """Iterator: keeps its own position in the word list."""

    def __init__(self, words):
        self.words = words
        self.index = 0

    def __iter__(self):
        return self

    def __next__(self):
        try:
            word = self.words[self.index]
        except IndexError:
            raise StopIteration()
        self.index += 1
        return word


def iter_word_analyzer():
    wa = WordAnalyzer('this is mango word analyzer')
    print('start for wa')
    for w in wa:
        print(w)

    print('start while wa_iter')
    wa_iter = iter(wa)
    while True:
        try:
            print(next(wa_iter))
        except StopIteration:
            break


iter_word_analyzer()

# Output:
# start for wa
# this
# is
# mango
# word
# analyzer
# start while wa_iter
# this
# is
# mango
# word
# analyzer
```
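To see why the iterable and the iterator are kept separate, consider the opposite design: a class whose `__iter__` returns `self`. The sketch below uses a hypothetical `OneShot` class to illustrate this anti-pattern. It is not from the original code.

```python
class OneShot:
    """Hypothetical anti-pattern: an iterable that is also its own iterator."""

    def __init__(self, items):
        self.items = items
        self.index = 0           # one shared position for every loop

    def __iter__(self):
        return self              # hands out the same object every time

    def __next__(self):
        if self.index >= len(self.items):
            raise StopIteration
        item = self.items[self.index]
        self.index += 1
        return item


one = OneShot(['a', 'b'])
print(list(one), list(one))      # ['a', 'b'] []: the second pass finds it exhausted

words = ['a', 'b']               # a list creates a fresh iterator per iter() call
print(list(words), list(words))  # ['a', 'b'] ['a', 'b']

o = OneShot([1, 2])
print([(x, y) for x in o for y in o])  # [(1, 2)]: both loops share one position
```

With a separate iterator class, as in `WordAnalyzer` above, nested or repeated loops each get their own position.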

4. Generators Are Iterators Too

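A generator is created by calling a generator function, that is, any function whose body contains the `yield` keyword. Such a function acts as a factory for generators.

A generator is itself an iterator. You can advance it with `next()`, and once it is exhausted it raises `StopIteration`.

A running generator pauses at each `yield` and hands the value of the expression to the right of `yield` to the caller. The next call to `next()` resumes execution right after that `yield`.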

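```python
def gen_func():
    print('first yield')
    yield 'first'         # pauses here and hands 'first' to the caller
    print('second yield')
    yield 'second'


print(gen_func)           # a plain function object
g = gen_func()            # calling it creates a generator; no body code runs yet
print(g)
for val in g:             # the for loop calls next() and handles StopIteration
    print(val)

g = gen_func()            # a new generator, since the previous one is exhausted
print(next(g))
print(next(g))
print(next(g))            # no more yields: raises StopIteration (not caught here)

# Output:
# <function gen_func at 0x7f1198175040>
# <generator object gen_func at 0x7f1197fb6cf0>
# first yield
# first
# second yield
# second
# first yield
# first
# second yield
# second
# StopIteration  (raised by the third next(g); traceback omitted)
```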
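The pause-and-resume behavior can be observed with `inspect.getgeneratorstate()` from the standard library. This is a minimal sketch; `countdown` is a hypothetical generator function. It also uses the two-argument form `next(iterator, default)`, which returns the default instead of raising `StopIteration`.

```python
import inspect


def countdown(n):
    """Hypothetical generator function used for illustration."""
    while n > 0:
        yield n
        n -= 1


g = countdown(2)
print(inspect.getgeneratorstate(g))  # GEN_CREATED: the body has not started
print(next(g))                       # 2
print(inspect.getgeneratorstate(g))  # GEN_SUSPENDED: paused at the yield
print(next(g))                       # 1
print(next(g, 'done'))               # done: default instead of StopIteration
print(inspect.getgeneratorstate(g))  # GEN_CLOSED: the function has returned
print(iter(g) is g)                  # True: a generator is its own iterator
```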

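Because a generator is an iterator, we can write `__iter__` itself as a generator function. Each call to `iter(wa)` then returns a new generator object, so no separate iterator class is needed: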

```python
import re


class WordAnalyzer:
    reg_word = re.compile(r'\w+')

    def __init__(self, text):
        self.words = self.__class__.reg_word.findall(text)

    def __iter__(self):
        # generator function: every call returns a new generator
        for word in self.words:
            yield word


def iter_word_analyzer():
    wa = WordAnalyzer('this is mango word analyzer')
    print('start for wa')
    for w in wa:
        print(w)

    print('start while wa_iter')
    wa_iter = iter(wa)
    while True:
        try:
            print(next(wa_iter))
        except StopIteration:
            break


iter_word_analyzer()

# Output:
# start for wa
# this
# is
# mango
# word
# analyzer
# start while wa_iter
# this
# is
# mango
# word
# analyzer
```
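Each call to a generator-based `__iter__` creates a new generator object, so every `iter(wa)` keeps its own position. This is the "fresh iterator per call" behavior the classic pattern requires. The minimal sketch below swaps the explicit loop for `yield from` (available since Python 3.3). That is an alternative spelling, not the author's code.

```python
import re


class WordAnalyzer:
    reg_word = re.compile(r'\w+')

    def __init__(self, text):
        self.words = self.reg_word.findall(text)

    def __iter__(self):
        # `yield from` delegates to the list's own iterator; it behaves
        # like the explicit for/yield loop
        yield from self.words


wa = WordAnalyzer('this is mango')
it1, it2 = iter(wa), iter(wa)            # two independent generators
print(next(it1), next(it1), next(it2))   # this is this
```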

5. Implementing a Lazy Iterator

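A major strength of iterators is that `__next__` delivers elements one at a time. This makes it possible to traverse very large data sources without loading everything into memory first.

The previous implementations call `re.findall` in `__init__`, which builds the complete list of words up front. That is not ideal. With `re.finditer`, matches are found lazily, only as the iteration asks for them: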

```python
import re


class WordAnalyzer:
    reg_word = re.compile(r'\w+')

    def __init__(self, text):
        # keep only the text; words are extracted lazily in __iter__
        self.text = text

    def __iter__(self):
        # finditer returns an iterator that finds the matches one at a time
        g = self.__class__.reg_word.finditer(self.text)
        print(g)
        for match in g:
            yield match.group()


def iter_word_analyzer():
    wa = WordAnalyzer('this is mango word analyzer')
    print('start for wa')
    for w in wa:
        print(w)

    print('start while wa_iter')
    wa_iter = iter(wa)
    # A second, independent generator. Its body (including print(g)) only
    # runs once next() is called on it, so it prints nothing here.
    wa_iter1 = iter(wa)
    while True:
        try:
            print(next(wa_iter))
        except StopIteration:
            break


iter_word_analyzer()

# Output:
# start for wa
# <callable_iterator object at 0x7feed103e040>
# this
# is
# mango
# word
# analyzer
# start while wa_iter
# <callable_iterator object at 0x7feed103e040>
# this
# is
# mango
# word
# analyzer
```
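The benefit of laziness is clearest with a source that could never fit in memory. The sketch below uses a hypothetical `iter_words` helper that extracts words from any iterable of text lines. Fed an endless stream built with `itertools.repeat`, it still returns the first few words immediately, whereas a `findall`-based version would never finish.

```python
import itertools
import re

WORD = re.compile(r'\w+')


def iter_words(lines):
    """Hypothetical helper: yield words lazily from an iterable of text lines."""
    for line in lines:
        for match in WORD.finditer(line):  # finditer also finds matches lazily
            yield match.group()


lines = itertools.repeat('this is mango\n')  # an endless source of lines
print(list(itertools.islice(iter_words(lines), 5)))
# ['this', 'is', 'mango', 'this', 'is']
```

File objects also yield one line at a time, so passing an open text file (for a hypothetical `path`) to `iter_words` would stream a large file the same way.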

6. Simplifying the Lazy Iterator with Generator Expressions

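A generator expression is a declarative way to define a generator. Its syntax resembles a list comprehension (with parentheses instead of brackets), but its elements are produced lazily. The output below shows the difference. The list comprehension runs `gen_func()` to completion before the loop starts, so both "yield" messages appear first. The generator expression pulls one value at a time, so the messages interleave with the printed values.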

```python
def gen_func():
    print('first yield')
    yield 'first'
    print('second yield')
    yield 'second'


# list comprehension: consumes the whole generator immediately
items = [x for x in gen_func()]
for x in items:
    print(x)

print()

# generator expression: pulls values from gen_func() only on demand
ge = (x for x in gen_func())
print(ge)
for x in ge:
    print(x)

# Output:
# first yield
# second yield
# first
# second
#
# <generator object <genexpr> at 0x7f78ff5dfd60>
# first yield
# first
# second yield
# second
```
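The practical difference in a short sketch: a list comprehension builds all elements up front and can be traversed any number of times. A generator expression computes elements on demand and, like any iterator, is exhausted after one pass. When a generator expression is the only argument of a call, its surrounding parentheses can be omitted.

```python
squares_list = [x * x for x in range(5)]   # built eagerly, reusable
squares_gen = (x * x for x in range(5))    # built lazily, single use

print(sum(squares_list), sum(squares_list))  # 30 30
print(sum(squares_gen), sum(squares_gen))    # 30 0: the second pass finds it exhausted
print(sum(x * x for x in range(5)))          # 30: sole argument, no extra parentheses
```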

Implementing `WordAnalyzer` with a generator expression:

```python
import re


class WordAnalyzer:
    reg_word = re.compile(r'\w+')

    def __init__(self, text):
        self.text = text

    def __iter__(self):
        # Same behavior as the generator-function version in section 5, written
        # as a generator expression; a new generator is returned on every call.
        ge = (match.group() for match in self.__class__.reg_word.finditer(self.text))
        print(ge)
        return ge


def iter_word_analyzer():
    wa = WordAnalyzer('this is mango word analyzer')
    print('start for wa')
    for w in wa:
        print(w)

    print('start while wa_iter')
    wa_iter = iter(wa)
    while True:
        try:
            print(next(wa_iter))
        except StopIteration:
            break


iter_word_analyzer()

# Output:
# start for wa
# <generator object WordAnalyzer.__iter__.<locals>.<genexpr> at 0x7f4178189200>
# this
# is
# mango
# word
# analyzer
# start while wa_iter
# <generator object WordAnalyzer.__iter__.<locals>.<genexpr> at 0x7f4178189200>
# this
# is
# mango
# word
# analyzer
```
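One subtlety of generator expressions: per the Python language reference, the iterable in the leftmost `for` clause is evaluated immediately when the expression is created, and only the rest is deferred. In the `__iter__` above, `finditer(self.text)` therefore runs as soon as `__iter__` is called, while `match.group()` runs once per element. Here is a minimal sketch with a hypothetical `source()` function that records when it is called:

```python
calls = []


def source():
    """Hypothetical source that records when it is called."""
    calls.append('source')
    return iter(['a', 'b'])


ge = (x.upper() for x in source())  # source() runs here, at creation time
calls.append('created')
print(calls)      # ['source', 'created']
print(next(ge))   # A: x.upper() only runs when an element is requested
```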