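1. Use the get method of the requests module to fetch the whole page, filter the page text with a regular expression to extract the data you need, and then save that data to disk.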
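To make the filtering step concrete, here is a minimal offline sketch of how the regular expression used in the full script behaves. The HTML fragment and the `pic.example.com` host are made up for illustration and are only an assumption about what the real page markup looks like; the pattern itself is the one from the script.

```python
import re

# Same pattern as in the full script
ex = '<div class="thumb">.*?<img src="(.*?)" alt.*?</div>'

# Made-up HTML fragment (assumption: the real page has a similar layout)
sample_html = """
<div class="thumb">
<a href="/article/1" target="_blank">
<img src="//pic.example.com/a/one.jpg" alt="first">
</a>
</div>
<div class="thumb">
<a href="/article/2" target="_blank">
<img src="//pic.example.com/b/two.jpg" alt="second">
</a>
</div>
"""

# re.S lets "." match newlines, so the lazy ".*?" can span several lines
img_src_list = re.findall(ex, sample_html, re.S)
print(img_src_list)

# Without re.S the pattern cannot cross a line break and finds nothing
print(re.findall(ex, sample_html))
```

The first call returns the two `src` values and the second returns an empty list, which is why the script passes `re.S` to `re.findall`.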

2. Full code

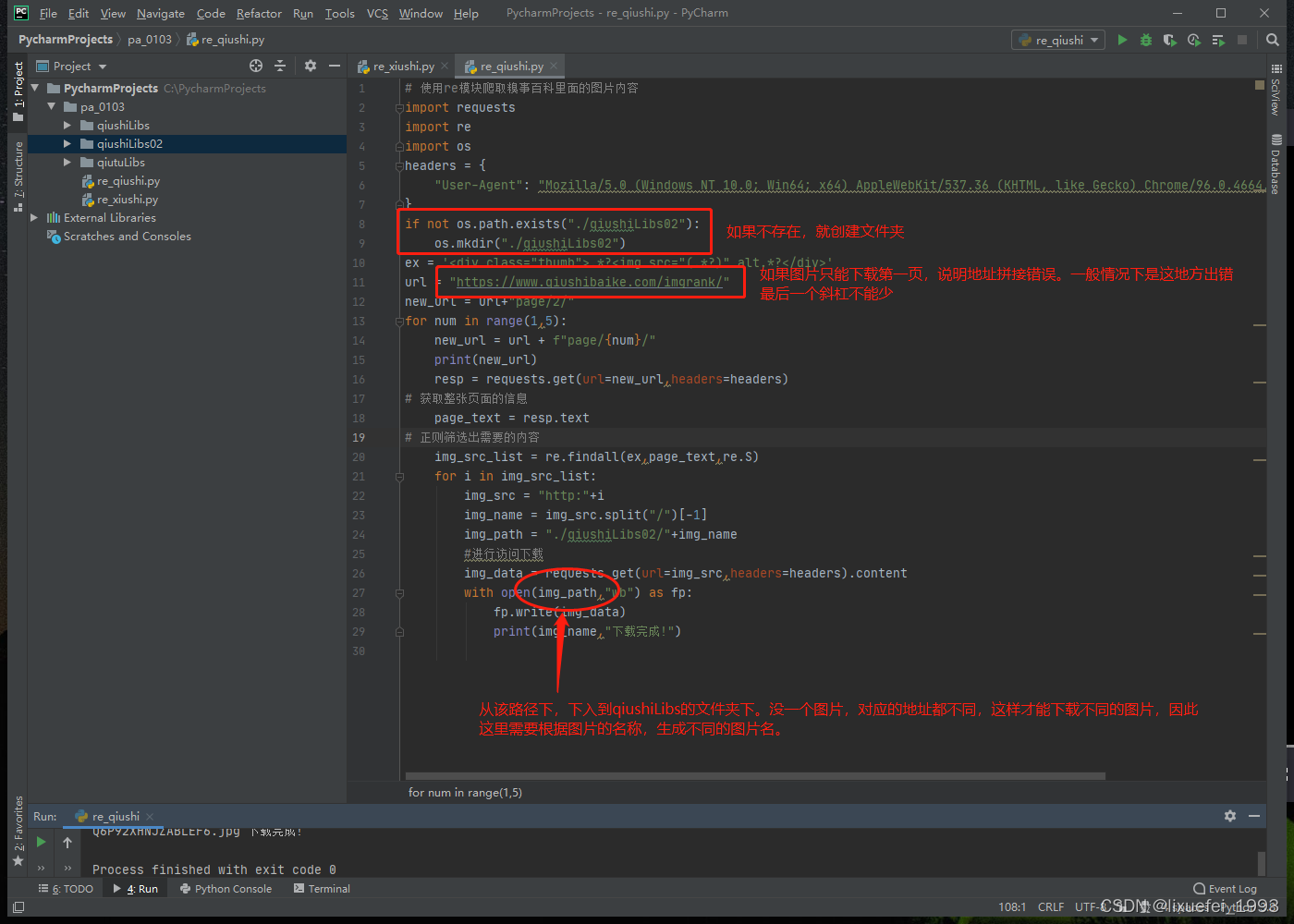

    # Use the re module to scrape the images from Qiushibaike
    import requests
    import re
    import os

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36"
    }

    # Create the output directory if it does not exist yet
    if not os.path.exists("./qiushiLibs02"):
        os.mkdir("./qiushiLibs02")

    ex = '<div class="thumb">.*?<img src="(.*?)" alt.*?</div>'
    url = "https://www.qiushibaike.com/imgrank/"

    # Crawl pages 1 to 4
    for num in range(1, 5):
        new_url = url + f"page/{num}/"
        print(new_url)
        resp = requests.get(url=new_url, headers=headers)
        # Get the text of the whole page
        page_text = resp.text
        # Use the regular expression to pick out the image addresses
        img_src_list = re.findall(ex, page_text, re.S)
        for i in img_src_list:
            img_src = "http:" + i
            img_name = img_src.split("/")[-1]
            img_path = "./qiushiLibs02/" + img_name
            # Request the image and save it to disk
            img_data = requests.get(url=img_src, headers=headers).content
            with open(img_path, "wb") as fp:
                fp.write(img_data)
            print(img_name, "downloaded!")

3. Notes

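One detail of the download loop is easy to miss: the script prepends `http:` to every matched `src`, which suggests that the page uses protocol-relative addresses starting with `//`. The sketch below isolates that step in a small helper. The name `build_image_target` and the `pic.example.com` host are hypothetical and do not appear in the original code; the check for a leading `//` is an added assumption, not something the original script does.

```python
def build_image_target(src, save_dir="./qiushiLibs02"):
    """Return (full_url, local_path) for one matched src value."""
    # Assumption: src is usually protocol-relative, e.g. "//host/path/name.jpg";
    # anything else is passed through unchanged.
    img_src = "http:" + src if src.startswith("//") else src
    # The file name is the last path segment, as in the original script
    img_name = img_src.split("/")[-1]
    img_path = f"{save_dir}/{img_name}"
    return img_src, img_path


url, path = build_image_target("//pic.example.com/a/one.jpg")
print(url)
print(path)
```

Keeping the URL and path logic in one function makes it possible to check the result without sending any request.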