bs4 (BeautifulSoup) finds the text or attribute values you want by searching a page's tags and their attributes.
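Here is a minimal illustration of that idea. It runs on a made-up HTML snippet; the tag names, class, and links are invented for the example:

- `find` returns the first matching tag.
- `find_all` returns every match.
- `.text` gives a tag's text.
- `.get()` reads one of its attributes.

```python
from bs4 import BeautifulSoup

# A tiny made-up HTML snippet, used only for illustration.
html = '''
<div class="price">
  <a href="/price/t1">Cabbage</a>
  <a href="/price/t2">Carrot</a>
</div>
'''

soup = BeautifulSoup(html, 'html.parser')
div = soup.find('div', attrs={'class': 'price'})  # locate a tag by name + attribute
links = div.find_all('a')                          # every <a> tag inside that div
names = [a.text for a in links]                    # the text inside each tag
hrefs = [a.get('href') for a in links]             # the value of each href attribute
print(names)  # ['Cabbage', 'Carrot']
print(hrefs)  # ['/price/t1', '/price/t2']
```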

1. Scraping vegetable prices from a website with bs4

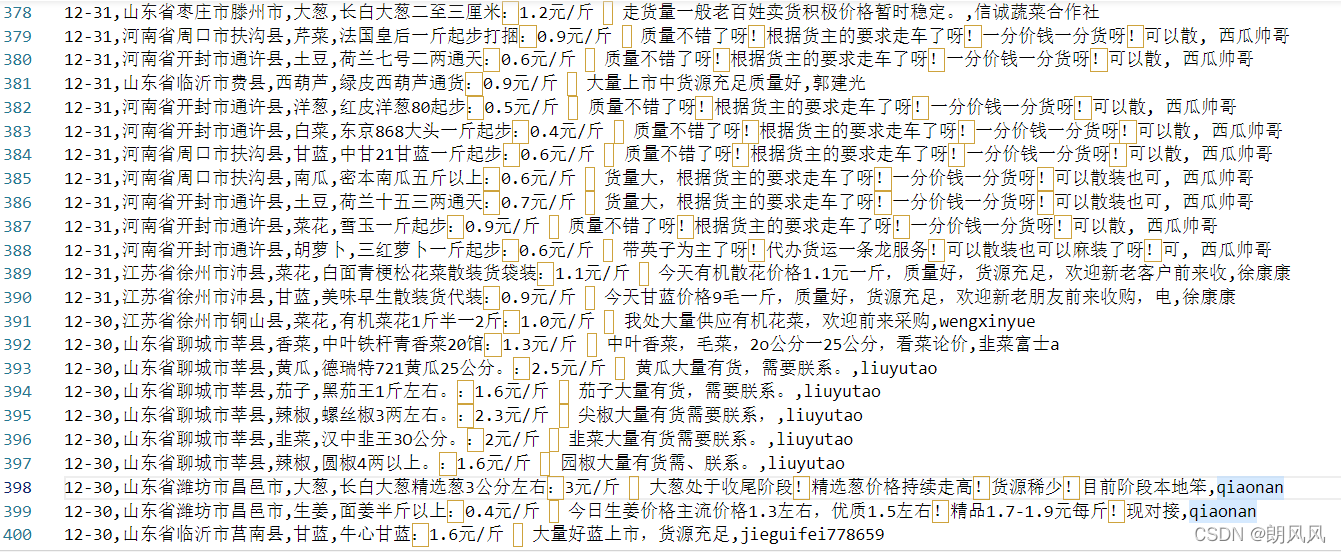

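The site used in the original tutorial has since been redesigned. The prices are now fetched with a POST request and come back directly as data, so there is nothing left to dig out of the HTML. That is too simple to practice bs4 on, so I found a different site instead.

The new site: 蔬菜商情网 (shucai123.com)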

```python
import csv

import requests
from bs4 import BeautifulSoup


def geturl(url):
    """Download a page and return its HTML as text."""
    resp = requests.get(url)
    resp.encoding = 'utf-8'
    pagecontent = resp.text
    resp.close()
    return pagecontent


url = 'http://www.shucai123.com/price/t'  # page n is url + str(n), e.g. .../price/t1

with open('菜价.csv', mode='w', newline='', encoding='utf-8') as f:
    csvwriter = csv.writer(f)
    for i in range(20):  # pages 1 to 20
        pagecontent = geturl(url + str(i + 1))
        page = BeautifulSoup(pagecontent, 'html.parser')  # use Python's built-in HTML parser
        table = page.find('table', attrs={'class': 'bjtbl'})  # the price table
        trs = table.find_all('tr')[1:]  # skip the first (header) row
        for tr in trs:
            tds = tr.find_all('td')
            # write every cell of the row except the last column
            csvwriter.writerow([td.text for td in tds[:-1]])
        print('%d over!!' % (i + 1))
```
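You can try the parsing step offline, without requesting the site. Below is a minimal sketch that runs the same loop on a made-up table. The table only mimics the structure the script expects: a `bjtbl` table with a header row and a last column that gets dropped. The column names and values are invented, and an in-memory buffer stands in for the CSV file.

```python
import csv
import io

from bs4 import BeautifulSoup

# Made-up sample shaped like the assumed bjtbl layout; the real columns may differ.
html = '''
<table class="bjtbl">
  <tr><th>Name</th><th>Market</th><th>Price</th><th>Detail</th></tr>
  <tr><td>Cabbage</td><td>Market A</td><td>1.20</td><td>more</td></tr>
  <tr><td>Carrot</td><td>Market B</td><td>2.50</td><td>more</td></tr>
</table>
'''

table = BeautifulSoup(html, 'html.parser').find('table', attrs={'class': 'bjtbl'})
out = io.StringIO()  # in-memory stand-in for the CSV file
writer = csv.writer(out)
for tr in table.find_all('tr')[1:]:                # skip the header row
    tds = tr.find_all('td')
    writer.writerow([td.text for td in tds[:-1]])  # drop the last column

print(out.getvalue())
```

If the site changes its markup, `find` returns `None` and the `find_all` call fails. Checking `table is None` before the inner loop makes that failure easier to spot.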

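2. Downloading images from a picture site with bs4

Download the high-resolution full-size images linked from the homepage of the following site:

唯美图片 (umei.cc)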

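The job has three parts:

1. Open the homepage and find the URLs of the pages each image links to.

2. Open each sub-page and find the image link.

3. Save the image.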
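Steps 1 and 2 can be checked offline, since they are pure parsing. Step 3 needs the network. Below is a minimal sketch on made-up HTML that only reuses the class names the script searches for (`PicListTxt`, `ImageBody`, `ArticleTitle`). The link paths, image URL, and title are invented. `urljoin` is used here to turn the relative `href` into a full URL.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE = 'https://www.umei.cc/'

# Made-up snippets that only mimic the class names the script searches for.
home_html = '''
<div class="PicListTxt">
  <span><a href="/a/1.htm">Pic 1</a></span>
  <span><a href="/a/2.htm">Pic 2</a></span>
</div>
'''
sub_html = '''
<div class="ArticleTitle">Sunset</div>
<div class="ImageBody"><img src="https://img.example.com/sunset.jpg"></div>
'''

# Step 1: collect sub-page links from the homepage, resolved against the base URL.
home = BeautifulSoup(home_html, 'html.parser')
sub_urls = [urljoin(BASE, span.a.get('href'))
            for div in home.find_all('div', attrs={'class': 'PicListTxt'})
            for span in div.find_all('span')]

# Step 2: on a sub-page, read the image URL and the title.
sub = BeautifulSoup(sub_html, 'html.parser')
img_src = sub.find('div', attrs={'class': 'ImageBody'}).img['src']
title = sub.find('div', attrs={'class': 'ArticleTitle'}).text.strip()

print(sub_urls)
print(img_src, title)
```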

```python
# Image download
import os
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


def getpage(url):  # fetch the page source
    resp = requests.get(url)
    resp.encoding = 'utf-8'
    pagecontent = resp.text
    resp.close()
    return pagecontent


def requestdownload(ImageUrl, name):  # save one image into the pic folder
    resp = requests.get(ImageUrl)
    with open(os.path.join(FilePath, '%s.png' % name), 'wb') as f:
        f.write(resp.content)  # write the raw image bytes to the file
    resp.close()


url = "https://www.umei.cc/"
pagecontent = getpage(url)

FilePath = os.path.join(os.getcwd(), 'pic')  # "pic" folder under the current directory
os.makedirs(FilePath, exist_ok=True)  # create the folder if it does not exist yet

page = BeautifulSoup(pagecontent, 'html.parser')
divs = page.find_all('div', attrs={'class': 'PicListTxt'})

counter = 1
for div in divs:
    spans = div.find_all('span')
    for span in spans:
        urlnew = urljoin(url, span.a.get('href'))  # turn the relative link into a full URL
        pagectnew = getpage(urlnew)  # open the sub-page
        pagenew = BeautifulSoup(pagectnew, 'html.parser')
        imgurl = pagenew.find('div', attrs={'class': 'ImageBody'})  # div holding the full-size image
        names = pagenew.find('div', attrs={'class': 'ArticleTitle'})  # div holding the title
        requestdownload(imgurl.img['src'], names.text.strip())  # save the image, named after the title
        print('%d over!!' % counter)
        counter += 1
```
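The saved file is named after the page title, which causes two problems:

- Titles can contain characters such as `:` or `?` that Windows forbids in file names.
- Every file gets a `.png` extension, whatever the image's real format is.

Below is a minimal sketch of a hypothetical `safe_filename` helper that addresses both. It assumes the image URL path ends in the real extension. It could replace the hard-coded `.png` file name in `requestdownload`.

```python
import os
import re
from urllib.parse import urlparse


def safe_filename(title, img_url, default_ext='.png'):
    """Hypothetical helper: build a file name from a page title and the image URL."""
    # Replace characters that Windows does not allow in file names.
    name = re.sub(r'[\\/:*?"<>|]', '_', title).strip() or 'untitled'
    # Take the extension from the URL path, ignoring any query string;
    # fall back to default_ext when the path has no extension.
    ext = os.path.splitext(urlparse(img_url).path)[1] or default_ext
    return name + ext


print(safe_filename('Sunset: 1/2', 'https://img.example.com/a/sunset.jpg?x=1'))
print(safe_filename('Sea', 'https://img.example.com/img'))
```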

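3. Summary

I recently had a three-day break and spent all of it busy with things at home, so I haven't updated these posts. I have still done LeetCode problems every day, just not many.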