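This hands-on project uses the requests, pandas, and bs4 (BeautifulSoup) modules. The code also passes "lxml" as the BeautifulSoup parser, so the lxml package must be installed as well.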

1. Source Code

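```python
import requests
import pandas as pd
from bs4 import BeautifulSoup

# Columns collected for each book: rank, title, author, price
data_info = {
    '图书排名': [],
    '图书名称': [],
    '图书作者': [],
    '图书价格': []
}


def parse_html(soup):
    # Each <li> under .bang_list is one book in the ranking
    li_list = soup.select(".bang_list li")
    for li in li_list:
        # Rank text looks like "1."; strip the dot
        data_info['图书排名'].append(li.select(".list_num")[0].text.replace(".", ""))
        data_info['图书名称'].append(li.select(".name a")[0].text)
        # Take the first link inside .publisher_info as the author
        data_info['图书作者'].append(li.select(".publisher_info a")[0].text)
        # Remove the currency sign and convert the price to a float
        data_info['图书价格'].append(float(li.select(".price .price_n")[0].text.replace("¥", "")))


headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36 Edg/97.0.1072.76"
}

# Request ranking pages 1 to 25
for i in range(1, 26):
    url = f"http://bang.dangdang.com/books/childrensbooks/01.41.00.00.00.00-recent30-0-0-1-{i}-bestsell"
    # Pass headers by keyword: a positional second argument is treated as query params
    response = requests.get(url, headers=headers).text
    soup = BeautifulSoup(response, "lxml")
    parse_html(soup)
    print(f"Page {i} scraped")

book_info = pd.DataFrame(data_info)

# Inspect missing values and duplicate rows
print(book_info.isnull())
print(book_info.duplicated())

# Discard books priced above 100: mark their price as missing with .loc
# (the chained form book_info['图书价格'][mask] = None may not modify book_info),
# then drop every row that contains a missing value
book_info.loc[book_info['图书价格'] > 100, '图书价格'] = None
book_info = book_info.dropna()

book_info.to_csv('图书销售排行.csv', encoding='utf-8', index=False)
```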
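One easy-to-miss detail in the request line: the second positional parameter of `requests.get` is `params`, not `headers`. Written as `requests.get(url, headers)`, the User-Agent dictionary ends up in the URL's query string, and the server sees requests' default `python-requests/...` User-Agent instead. The sketch below shows the difference without any network traffic by building prepared requests with `requests.Request(...).prepare()`; the URL and User-Agent value are placeholders, not the ones from the scraper.

```python
import requests

# Placeholder values; any URL and User-Agent string behave the same way
url = "http://example.com/list"
headers = {"User-Agent": "Mozilla/5.0"}

# Equivalent of requests.get(url, headers): the dict is used as query params
as_params = requests.Request("GET", url, params=headers).prepare()

# Equivalent of requests.get(url, headers=headers): the dict becomes HTTP headers
as_headers = requests.Request("GET", url, headers=headers).prepare()

print(as_params.url)                    # User-Agent appears in the query string
print(dict(as_params.headers))          # no User-Agent header was set
print(as_headers.url)                   # URL is unchanged
print(as_headers.headers["User-Agent"]) # header carries the User-Agent value
```

Passing `headers=` by keyword is the form the requests documentation uses for custom headers, and it also makes the call read unambiguously.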

2. Problems You May Run Into While Learning

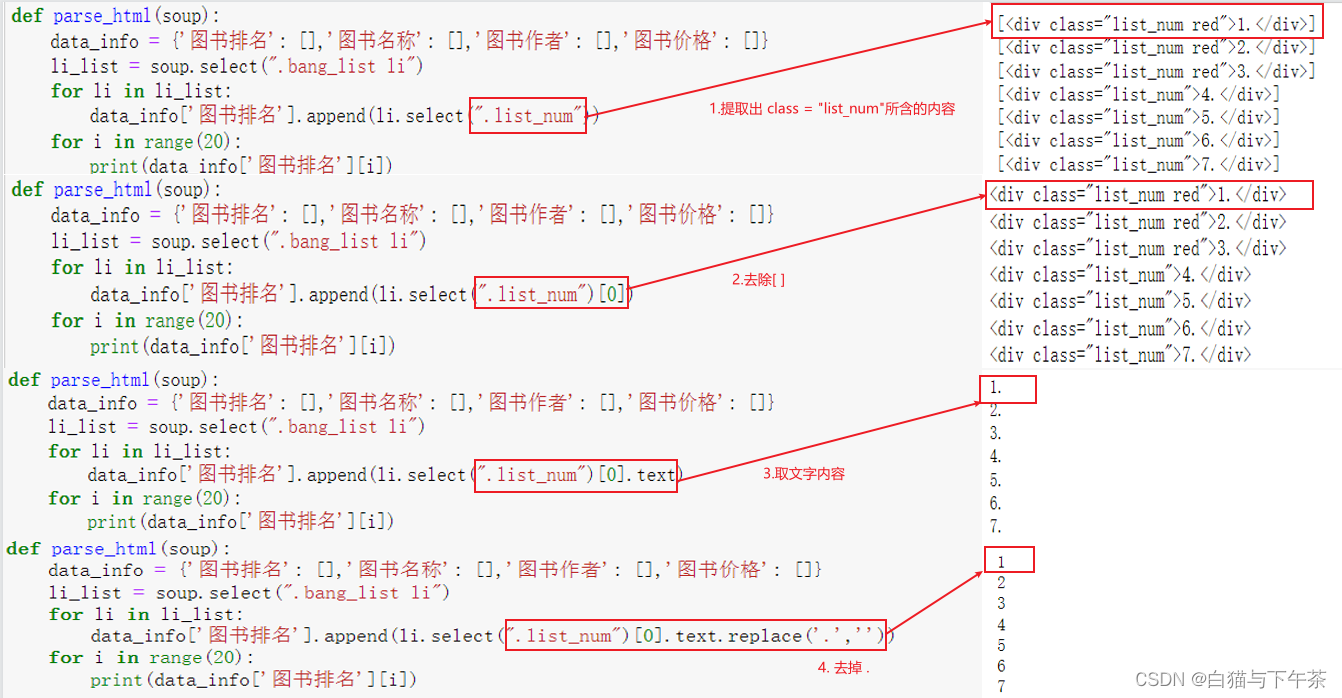

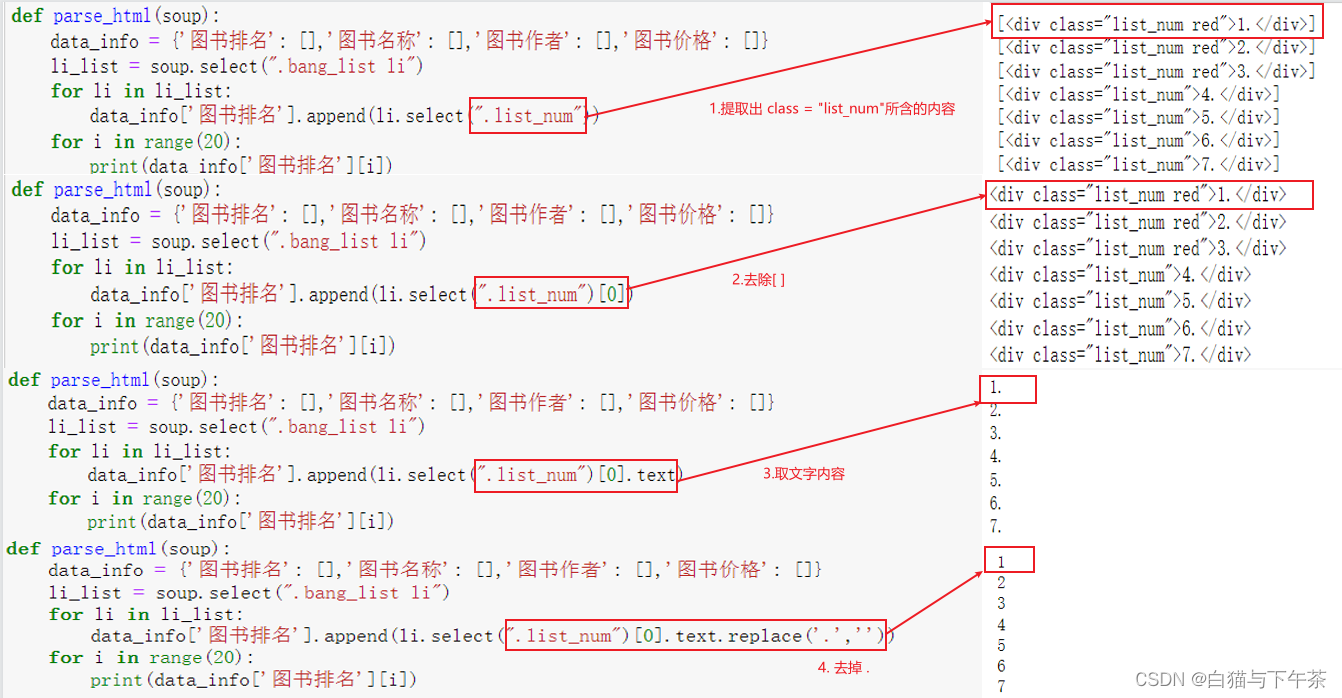

Problem 1: Not understanding what the code in the parse_html function actually does
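Running the same CSS selectors against a tiny, made-up page can make each line of parse_html easier to follow. The HTML here is a hypothetical, heavily simplified stand-in that only mimics the class names the scraper selects on (the real Dangdang page is much larger). This variant also takes `data_info` as a parameter instead of appending to a global, and it uses Python's built-in `"html.parser"` so it runs without lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical markup that only imitates the class names parse_html relies on
sample_html = """
<ul class="bang_list">
  <li>
    <div class="list_num">1.</div>
    <div class="name"><a href="#">Book A</a></div>
    <div class="publisher_info"><a href="#">Author A</a></div>
    <div class="price"><span class="price_n">¥25.80</span></div>
  </li>
  <li>
    <div class="list_num">2.</div>
    <div class="name"><a href="#">Book B</a></div>
    <div class="publisher_info"><a href="#">Author B</a></div>
    <div class="price"><span class="price_n">¥39.90</span></div>
  </li>
</ul>
"""


def parse_html(soup, data_info):
    # ".bang_list li": every <li> nested inside the element with class "bang_list"
    for li in soup.select(".bang_list li"):
        # select() always returns a list of matches; [0] takes the first one
        # and .text gives its text content, e.g. "1." for the rank
        rank = li.select(".list_num")[0].text
        data_info['图书排名'].append(rank.replace(".", ""))  # "1." -> "1"
        # ".name a": an <a> anywhere inside an element with class "name"
        data_info['图书名称'].append(li.select(".name a")[0].text)
        data_info['图书作者'].append(li.select(".publisher_info a")[0].text)
        # Remove the currency sign, then convert e.g. "25.80" to a float
        price = li.select(".price .price_n")[0].text
        data_info['图书价格'].append(float(price.replace("¥", "")))


data_info = {'图书排名': [], '图书名称': [], '图书作者': [], '图书价格': []}
parse_html(BeautifulSoup(sample_html, "html.parser"), data_info)
print(data_info)
```

Each `<li>` contributes exactly one value to each of the four lists, which is why the lists stay aligned and can later be turned into DataFrame columns. If a selector matches nothing on some `<li>`, `select(...)` returns an empty list and `[0]` raises `IndexError`.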
