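Tutorial source

The tutorial uses a model of car fuel efficiency (MPG) as its example, with the number of cylinders, displacement, horsepower and weight as variables.

Getting the data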

```python
import pandas as pd
from tensorflow import keras

# Unlike keras.datasets.<some dataset>, get_file only downloads the file (once)
# and returns its local path, which pandas reads below
dataset_path = keras.utils.get_file("auto-mpg.data", "http://archive.ics.uci.edu/ml/machine-learning-databases/auto-mpg/auto-mpg.data")
print(dataset_path)  # e.g. C:\Users\WQuiet\.keras\datasets\auto-mpg.data

# Column names (the file itself has no header row)
column_names = ['MPG', 'Cylinders', 'Displacement', 'Horsepower', 'Weight',
                'Acceleration', 'Model Year', 'Origin']
raw_dataset = pd.read_csv(dataset_path, names=column_names,
                          na_values="?", comment='\t',
                          sep=" ", skipinitialspace=True)
```

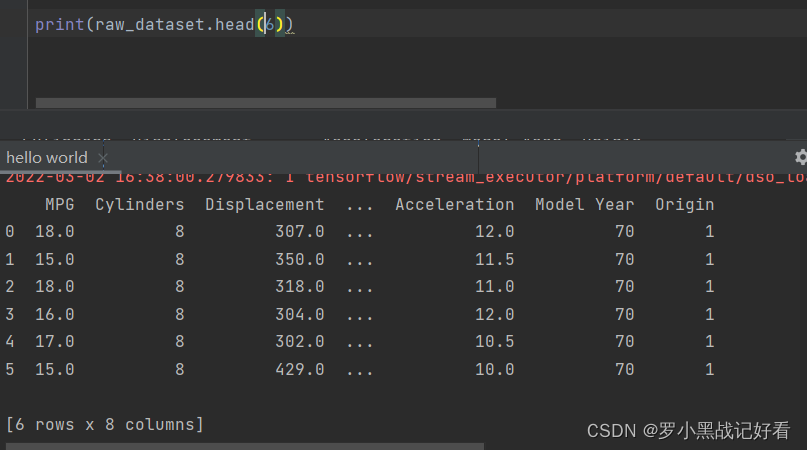

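Reading the data is the first step of any data processing with pandas. Data can come from many sources, and CSV files are one of them. pandas' read_csv supports CSV files very well and has some forty to fifty parameters.

- Input path: a file path, a URL, or any object that implements a read() method.
- names: the column names (header), e.g. ["ID", "Name", "Address"].
- na_values: values to treat as missing; here every "?" becomes NaN. It also accepts a dict that maps a column to the values to replace in that column, e.g. {"Horsepower": ["?", "n/a"]}.
- sep: the delimiter used when reading the file; the default is a comma. The delimiter you specify must match the one actually used in the file.
- comment: everything on a line after this character is ignored. In this file the car name at the end of each line follows a tab, so comment='\t' skips it.
- skipinitialspace: skip the spaces right after each delimiter, so the runs of spaces between columns behave like a single separator.

Reference: pandas read_csv explained in detail

The figure shows the raw data that was loaded.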
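To make the read_csv options above concrete, here is a minimal stdlib sketch that applies them by hand to one line. It assumes a hypothetical SAMPLE_LINE written in the file's layout: space-separated numbers, then a tab and the car name. parse_line is an illustrative helper, not part of pandas. It stores a missing value as None where pandas would store NaN.

```python
# Stdlib sketch of what the read_csv options do to ONE line of auto-mpg.data.
# ASSUMPTION: SAMPLE_LINE is a hypothetical line in the file's layout
# (space-separated numbers, a tab, then the quoted car name), not copied from the file.
SAMPLE_LINE = '25.0   4   98.00      ?          2046.      19.0   71  1\t"ford pinto"'

COLUMN_NAMES = ['MPG', 'Cylinders', 'Displacement', 'Horsepower', 'Weight',
                'Acceleration', 'Model Year', 'Origin']


def parse_line(line, na_value="?", comment="\t"):
    """Mimic the comment, sep/skipinitialspace and na_values options by hand."""
    # comment: drop everything from the comment character onward (the car name)
    line = line.split(comment, 1)[0]
    # sep=" " + skipinitialspace=True: runs of spaces act as one separator
    fields = line.split()
    # na_values="?": the placeholder becomes a missing value (None here, NaN in pandas)
    values = [None if f == na_value else float(f) for f in fields]
    return dict(zip(COLUMN_NAMES, values))


row = parse_line(SAMPLE_LINE)
print(row)
```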

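Data processing

```python
dataset = raw_dataset.copy()     # work on a copy and keep the raw data intact
print(dataset.isna().sum())      # number of missing values in each column
dataset = dataset.dropna()       # drop the rows that contain missing values

origin = dataset.pop('Origin')
# The "Origin" column encodes the country: the three countries are numbered 1, 2, 3.
# Such numbers are not an intuitive way to represent categories, so split them into
# 3 columns where only one country is set to 1 (one-hot encoding)
dataset['USA'] = (origin == 1)*1.0
dataset['Europe'] = (origin == 2)*1.0
dataset['Japan'] = (origin == 3)*1.0
```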
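Here is a small plain-Python sketch of the one-hot step. It assumes the 1 = USA, 2 = Europe, 3 = Japan mapping used in the code. Separate 0/1 columns avoid implying that Japan (3) is somehow "larger" than the USA (1). one_hot_origin is a hypothetical helper for illustration.

```python
# Stdlib sketch of the one-hot step: Origin code 1/2/3 -> three 0.0/1.0 columns.
ORIGIN_NAMES = {1: 'USA', 2: 'Europe', 3: 'Japan'}


def one_hot_origin(origin):
    """Return the USA/Europe/Japan columns for one Origin code (hypothetical helper)."""
    # (origin == k)*1.0 in the pandas code gives 1.0 for a match and 0.0 otherwise
    return {name: float(origin == code) for code, name in ORIGIN_NAMES.items()}


for code in (1, 2, 3):
    print(code, one_hot_origin(code))
```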

```python
# Split into training and test sets
train_dataset = dataset.sample(frac=0.8, random_state=0)
test_dataset = dataset.drop(train_dataset.index)
```

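DataFrame.sample(n=None, frac=None, replace=False, weights=None, random_state=None, axis=None)

- n: the number of rows to draw
- frac: the fraction of rows to draw (e.g. frac=0.8 draws 80% of them); use either n or frac, not both
- replace: whether to sample with replacement, i.e. whether the same row may be drawn more than once (default False)
- weights: optional per-row sampling weights (by default every row is equally likely)
- random_state: the random seed; a fixed value makes the split reproducible
- axis: whether to sample rows or columns

Reference: pandas.DataFrame.sample, randomly selecting rows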
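The sample/drop split can be sketched with the standard library. The procedure is: draw a fixed fraction of rows without replacement using a seed, then take the remaining rows as the test set. split_by_fraction is a hypothetical helper. Because it uses Python's random module, it will not pick the same rows as pandas with random_state=0; only the procedure is the same.

```python
import random


def split_by_fraction(rows, frac=0.8, seed=0):
    """Sketch of sample(frac=..., random_state=...) followed by drop(train.index)."""
    rng = random.Random(seed)                           # fixed seed -> reproducible split
    n_train = round(frac * len(rows))                   # number of rows to draw
    train_idx = rng.sample(range(len(rows)), n_train)   # without replacement
    chosen = set(train_idx)
    test_idx = [i for i in range(len(rows)) if i not in chosen]  # everything else
    return [rows[i] for i in train_idx], [rows[i] for i in test_idx]


rows = list(range(10))
train, test = split_by_fraction(rows)
print(train, test)
```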

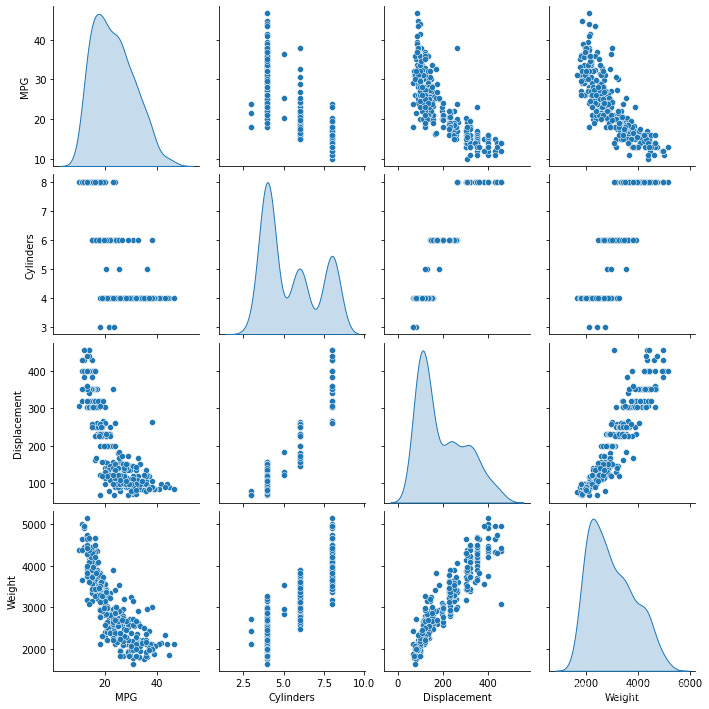

Inspecting the data with plots

```python
sns.pairplot(train_dataset[["MPG", "Cylinders", "Displacement", "Weight"]], diag_kind="kde")
```

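Seaborn is a Python visualization library built on matplotlib. It provides a high-level interface for drawing attractive statistical graphics.

pairplot shows the pairwise relationships between variables.

```python
sns.pairplot(data, kind="reg", diag_kind="kde")
```

kind: controls the type of the off-diagonal plots, e.g. "scatter" or "reg" (newer seaborn versions also accept "kde" and "hist").

diag_kind: controls the type of the plots on the diagonal, e.g. "hist" or "kde".

Reference: Python visualization | Seaborn 5-minute introduction (7): pairplot

If the plot does not appear, add plt.show() after the call (this needs import matplotlib.pyplot as plt).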

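Inspecting the data as a table

```python
train_stats = train_dataset.describe()
train_stats.pop("MPG")                  # remove the label column from the statistics
train_stats = train_stats.transpose()   # transpose: swap rows and columns, one row per feature
print(train_stats)
```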

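describe() shows summary statistics for the data in a DataFrame: count, mean, std, min, quartiles and max.

Reference: An introduction to the describe function

Reference: pandas describe parameters explained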
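This sketch recomputes the describe() numbers for a single column with the statistics module, to show where they come from. As far as I know, pandas reports the sample standard deviation (ddof=1) and linearly interpolated percentiles. statistics.stdev and statistics.quantiles(method='inclusive') also use those. describe_column is a hypothetical helper, and the input values are made up.

```python
import statistics


def describe_column(values):
    """Stdlib approximation of the per-column numbers pandas describe() reports."""
    # 'inclusive' = linear interpolation between data points
    q1, median, q3 = statistics.quantiles(values, n=4, method='inclusive')
    return {
        'count': len(values),
        'mean': statistics.mean(values),
        'std': statistics.stdev(values),   # sample std: divides by n - 1
        'min': min(values),
        '25%': q1,
        '50%': median,
        '75%': q3,
        'max': max(values),
    }


stats = describe_column([4, 4, 6, 8, 8])
print(stats)
```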

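```python
# Keep the labels (the MPG values to predict) separately
train_labels = train_dataset.pop('MPG')
test_labels = test_dataset.pop('MPG')

# Normalize the data
def norm(x):
    # train_stats was transposed, so train_stats['mean'] is a column: a Series
    # indexed by feature name. pandas aligns it with x's column labels, so each
    # feature is normalized with its own mean and std
    return (x - train_stats['mean']) / train_stats['std']

normed_train_data = norm(train_dataset)
normed_test_data = norm(test_dataset)
```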
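The normalization step is a per-column z-score, (x - mean) / std. The mean and std come from the training set only and are reused unchanged for the test set. Here is a plain-Python sketch with hypothetical toy columns; the dict-of-lists layout stands in for a DataFrame.

```python
import statistics

# Hypothetical toy data: two feature columns, split into train and test rows.
train = {'Weight': [2000.0, 3000.0, 4000.0], 'Cylinders': [4.0, 6.0, 8.0]}
test = {'Weight': [3500.0], 'Cylinders': [4.0]}

# Statistics come from the TRAINING set only: one (mean, std) pair per column,
# like train_stats['mean'] and train_stats['std'] after the transpose.
stats = {col: (statistics.mean(v), statistics.stdev(v)) for col, v in train.items()}


def norm(columns):
    """z-score each column with the training-set mean/std of the same column."""
    return {col: [(x - stats[col][0]) / stats[col][1] for x in values]
            for col, values in columns.items()}


normed_train = norm(train)
normed_test = norm(test)   # the test data reuses the training statistics
print(normed_train, normed_test)
```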

Building the model

```python
def build_model():
    model = keras.Sequential([
        layers.Dense(64, activation='relu', input_shape=[len(train_dataset.keys())]),
        layers.Dense(64, activation='relu'),
        layers.Dense(1)
    ])

    optimizer = tf.keras.optimizers.RMSprop(0.001)

    model.compile(loss='mse',
                  optimizer=optimizer,
                  metrics=['mae', 'mse'])
    return model

model = build_model()  # build the model
model.summary()        # print the model's details
```
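As a sanity check on model.summary(), each Dense layer has inputs × units weights plus units biases. The sketch assumes 9 input features after preprocessing: the 6 remaining numeric columns plus the 3 one-hot country columns. If that assumption holds, the totals should match the Param # column. dense_params is a hypothetical helper.

```python
def dense_params(n_inputs, units):
    """Trainable parameters of a Dense layer: a weight per input per unit, plus a bias per unit."""
    return n_inputs * units + units


# ASSUMPTION: 9 input features (Cylinders, Displacement, Horsepower, Weight,
# Acceleration, Model Year, USA, Europe, Japan), then layers of 64, 64 and 1 units.
layer_sizes = [9, 64, 64, 1]
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(per_layer, sum(per_layer))
```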

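Training the model

Suppose you have a dataset of 200 samples (rows of data) and choose a batch size of 5 and 1,000 epochs.

This means the dataset is split into 40 batches of 5 samples each, and the model weights are updated after each batch of five samples.

It also means that one epoch consists of 40 batches, i.e. 40 model updates.

With 1,000 epochs, the model sees (passes through) the entire dataset 1,000 times, for a total of 40,000 batches over the whole training run.

Reference: What is the difference between a batch and an epoch in a neural network?

wq: So, to train faster, use a larger batch size or fewer epochs (possibly at some cost in model quality).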
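Here is the batch/epoch arithmetic above in code. training_updates is a hypothetical helper. It rounds up because a final, smaller batch still triggers an update. For comparison it also uses Keras's default batch size of 32, which fit() applies when batch_size is not given.

```python
import math


def training_updates(n_samples, batch_size, epochs):
    """Return (batches per epoch, total weight updates over all epochs)."""
    # The last batch may be smaller than batch_size, so round up
    batches_per_epoch = math.ceil(n_samples / batch_size)
    return batches_per_epoch, batches_per_epoch * epochs


print(training_updates(200, 5, 1000))    # the example from the text
print(training_updates(200, 32, 1000))   # Keras's default batch size
```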

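Try it out first

```python
example_batch = normed_train_data[:10]
example_result = model.predict(example_batch)
```

predict() generates output predictions for the input samples. Here it runs the network just built, still with its initial (untrained) weights, on the first 10 training samples.

Reference: the Keras documentation (Chinese edition) covers model.predict and model.fit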

```python
# Display training progress by printing a single dot for each completed epoch
class PrintDot(keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        if epoch % 100 == 0: print('')
        print('.', end='')

EPOCHS = 1000

history = model.fit(
    normed_train_data, train_labels,
    epochs=EPOCHS, validation_split=0.2, verbose=0,
    callbacks=[PrintDot()])
```

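A callback is an object that Keras calls at various points during training, for example at the start and end of each epoch or batch. It has access to the model's state and can act on it: interrupt training, save the model, load different weights, or otherwise change the model's state.

Although we call it a callback "function", a Keras callback is actually a class. A custom callback must subclass keras.callbacks.Callback.

The callback methods receive a dictionary, logs, that holds information about the current batch or epoch.

According to the (older) Keras documentation, fit() records the following in logs:

- At the end of each epoch (on_epoch_end): the training loss and accuracy, loss and acc. If validation data is used, logs also hold the validation loss and accuracy, val_loss and val_acc. The accuracy entries appear only if metrics=['accuracy'] is passed to .compile(). In general each compiled metric appears under its own name, which is why this tutorial's logs contain mae, mse, val_mae and val_mse.
- At the start of each batch (on_batch_begin): size, the number of samples in the current batch.
- At the end of each batch (on_batch_end): loss, plus acc if accuracy is enabled.

Reference: Keras callbacks explained in detail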
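Here is a toy sketch of how a training loop drives callbacks. The loop owns the epochs and, after each one, calls on_epoch_end(epoch, logs) on every callback object. Callback and toy_fit are hypothetical stand-ins written for illustration, not the real keras.callbacks.Callback or Model.fit. The loss values are made up.

```python
class Callback:
    """Hypothetical minimal stand-in for keras.callbacks.Callback (not the real class)."""

    def on_epoch_end(self, epoch, logs=None):
        pass


class PrintDot(Callback):
    """Same idea as the tutorial's PrintDot, but collects output instead of printing."""

    def __init__(self):
        self.output = []

    def on_epoch_end(self, epoch, logs=None):
        if epoch % 100 == 0:
            self.output.append('\n')   # start a new line every 100 epochs
        self.output.append('.')        # one dot per finished epoch


def toy_fit(epochs, callbacks):
    """Hypothetical training loop: only shows WHEN callbacks are invoked."""
    for epoch in range(epochs):
        logs = {'loss': 1.0 / (epoch + 1)}   # made-up loss standing in for a real one
        for cb in callbacks:
            cb.on_epoch_end(epoch, logs)


dots = PrintDot()
toy_fit(250, [dots])
print(''.join(dots.output))
```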

```python
# Called the same way as above; this callback body is just for experimenting
class PrintDot(keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        if epoch % 10 == 0: print('a')
        print('n', end='b')
        print(logs)

    def on_batch_begin(self, batch, logs=None):
        if batch % 5 == 0:
            print('')
            print('2')
        print('3', end='c')
```

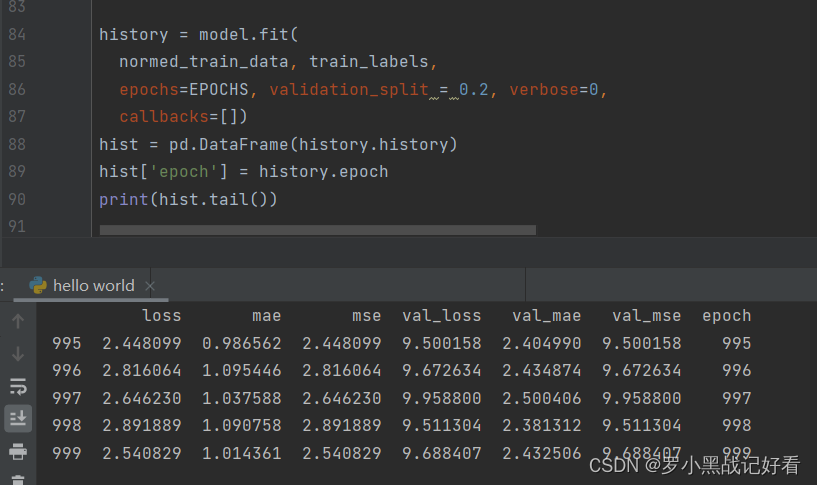

Inspecting the training results

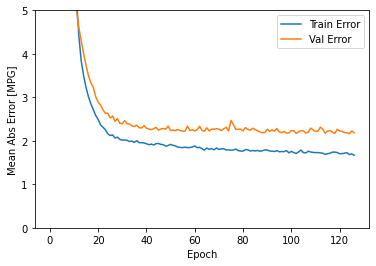

```python
def plot_history(history):
    # Put the recorded metrics into a DataFrame, with the epoch number as a column
    hist = pd.DataFrame(history.history)
    hist['epoch'] = history.epoch

    plt.figure()  # new figure
    plt.xlabel('Epoch')
    plt.ylabel('Mean Abs Error [MPG]')
    plt.plot(hist['epoch'], hist['mae'],      # draw a line from the data
             label='Train Error')
    plt.plot(hist['epoch'], hist['val_mae'],  # matplotlib picks a new color automatically
             label='Val Error')
    plt.ylim([0, 5])
    plt.legend()  # add the legend

    plt.figure()
    plt.xlabel('Epoch')
    plt.ylabel('Mean Square Error [$MPG^2$]')
    plt.plot(hist['epoch'], hist['mse'],
             label='Train Error')
    plt.plot(hist['epoch'], hist['val_mse'],
             label='Val Error')
    plt.ylim([0, 20])
    plt.legend()
    plt.show()

plot_history(history)
```

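The plots show the training error falling steadily while the validation error grows. Most likely the model is overfitting.

Fixing the model

The fix is simply to add one more callback that stops training once the validation loss stops improving.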

```python
model = build_model()

# patience: the number of epochs without improvement after which training stops
early_stop = keras.callbacks.EarlyStopping(monitor='val_loss', patience=10)

history = model.fit(normed_train_data, train_labels, epochs=EPOCHS,
                    validation_split=0.2, verbose=0, callbacks=[early_stop, PrintDot()])
```

```python
early_stop = keras.callbacks.EarlyStopping(monitor='val_loss', patience=50)
```

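monitor: the quantity to watch. It must be one of the keys Keras writes into logs, so the available names depend on what was passed to compile(). Common ones:

- acc (accuracy): accuracy on the training set
- loss: loss (error) on the training set
- val_acc (val_accuracy): accuracy on the validation set
- val_loss: loss (error) on the validation set. This is the most commonly monitored quantity. The training loss usually keeps falling even while the model overfits, so monitoring it says little, whereas the validation loss shows how well the model generalizes.

With metrics=['mae', 'mse'] as in this tutorial, the available keys are loss, mae, mse, val_loss, val_mae and val_mse.

patience: how many epochs without improvement in the monitored quantity to tolerate. It should not be too small, or early fluctuations may stop training prematurely. It should not be too large either, or EarlyStopping loses its purpose.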
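The patience rule works like a counter of consecutive epochs without improvement. This sketch is a simplified approximation of EarlyStopping with its defaults (mode 'min', min_delta=0), not Keras's actual source. early_stopping_epoch and the loss values are hypothetical. With the default restore_best_weights=False, Keras keeps the weights from the last epoch, not from the best one.

```python
def early_stopping_epoch(val_losses, patience):
    """Return the epoch index at which training would stop, or None if it never stops."""
    best = float('inf')
    wait = 0                        # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best:             # improvement: remember it and reset the counter
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:    # `patience` epochs in a row without improvement
                return epoch
    return None


# Made-up validation losses: improve for 4 epochs, then get worse.
losses = [5.0, 4.0, 3.5, 3.2, 3.3, 3.4, 3.6, 3.8]
print(early_stopping_epoch(losses, patience=3))
```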

Next, check how the model performs on the test set:

```python
loss, mae, mse = model.evaluate(normed_test_data, test_labels, verbose=2)
print("Testing set Mean Abs Error: {:5.2f} MPG".format(mae))
```

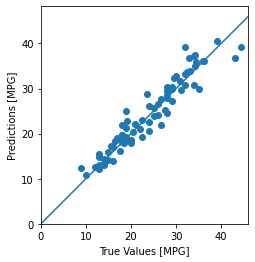
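The two metrics reported by evaluate() are simple averages over the test samples. MAE averages |prediction - truth| (in MPG), and MSE averages (prediction - truth)² (in MPG²). evaluate() returns the loss first, then the compiled metrics in order. Since loss='mse', the loss and mse values should be essentially the same. Here is a plain-Python sketch with made-up values.

```python
def mae(y_true, y_pred):
    """Mean absolute error: average of |prediction - truth|, in MPG."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error: average of (prediction - truth)^2, in MPG^2."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up true/predicted MPG values, just to show the arithmetic.
y_true = [20.0, 30.0, 25.0, 15.0]
y_pred = [22.0, 27.0, 25.0, 16.0]
print(mae(y_true, y_pred), mse(y_true, y_pred))
```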

Making predictions

```python
test_predictions = model.predict(normed_test_data).flatten()

plt.scatter(test_labels, test_predictions)
plt.xlabel('True Values [MPG]')
plt.ylabel('Predictions [MPG]')
plt.axis('equal')
plt.axis('square')
plt.xlim([0, plt.xlim()[1]])
plt.ylim([0, plt.ylim()[1]])
_ = plt.plot([-100, 100], [-100, 100])
```

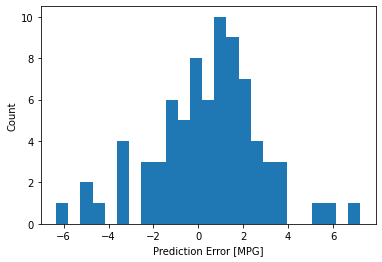

```python
# Look at the error distribution
error = test_predictions - test_labels
plt.hist(error, bins=25)
plt.xlabel("Prediction Error [MPG]")
_ = plt.ylabel("Count")
```

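flatten() collapses multi-dimensional data. For example, take data a of shape (2, 3, 4). flatten() is the same as flatten(0): flattening from dimension 0 gives (2 × 3 × 4), i.e. shape (24,). flatten(1) flattens from dimension 1 and gives (2, 3 × 4), i.e. (2, 12). This start-dimension form is PyTorch's torch.flatten. model.predict returns a NumPy array, and NumPy's ndarray.flatten() always returns a 1-D copy; its argument is the memory order, not a start dimension. Here it turns the (n, 1) predictions into shape (n,).

Reference: flatten() parameters explained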
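The shape rule from the flatten example can be written as a small function. flattened_shape is a hypothetical helper that mirrors torch.flatten(x, start_dim) with its default end_dim=-1. NumPy's ndarray.flatten() always flattens everything, so only the start_dim=0 case applies to it.

```python
import math


def flattened_shape(shape, start_dim=0):
    """Shape after merging every dimension from start_dim onward into one."""
    merged = math.prod(shape[start_dim:])
    return tuple(shape[:start_dim]) + (merged,)


print(flattened_shape((2, 3, 4), 0))   # like flatten() / flatten(0)
print(flattened_shape((2, 3, 4), 1))   # like flatten(1)
print(flattened_shape((5, 1)))         # predictions of shape (n, 1) -> (n,)
```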

Code backup

The overfitting case

```python
import pathlib

import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

dataset_path = keras.utils.get_file("auto-mpg.data", "http://archive.ics.uci.edu/ml/machine-learning-databases/auto-mpg/auto-mpg.data")
column_names = ['MPG', 'Cylinders', 'Displacement', 'Horsepower', 'Weight',
                'Acceleration', 'Model Year', 'Origin']
raw_dataset = pd.read_csv(dataset_path, names=column_names,
                          na_values="?", comment='\t',
                          sep=" ", skipinitialspace=True)

dataset = raw_dataset.copy()
dataset = dataset.dropna()
# print(dataset.isna().sum())

origin = dataset.pop('Origin')
# The "Origin" column encodes the country. Such codes are not intuitive as categories,
# so split them into 3 columns where only one country is set to 1 (one-hot encoding)
dataset['USA'] = (origin == 1)*1.0
dataset['Europe'] = (origin == 2)*1.0
dataset['Japan'] = (origin == 3)*1.0

# Split into training and test sets
train_dataset = dataset.sample(frac=0.8, random_state=0)
test_dataset = dataset.drop(train_dataset.index)

# sns.pairplot(train_dataset[["MPG", "Cylinders", "Displacement", "Weight"]], diag_kind="kde")
# plt.show()

train_stats = train_dataset.describe()
train_stats.pop("MPG")                 # remove this column
train_stats = train_stats.transpose()  # transpose: swap rows and columns
# print(train_stats)

train_labels = train_dataset.pop('MPG')
test_labels = test_dataset.pop('MPG')

def norm(x):
    return (x - train_stats['mean']) / train_stats['std']

normed_train_data = norm(train_dataset)
normed_test_data = norm(test_dataset)

def build_model():
    model = keras.Sequential([
        layers.Dense(64, activation='relu', input_shape=[len(train_dataset.keys())]),
        layers.Dense(64, activation='relu'),
        layers.Dense(1)
    ])
    optimizer = tf.keras.optimizers.RMSprop(0.001)
    model.compile(loss='mse',
                  optimizer=optimizer,
                  metrics=['mae', 'mse'])
    return model

model = build_model()

# example_batch = normed_train_data[:10]
# example_result = model.predict(example_batch)
# print(example_result)

# Display training progress (this version prints 'a' every 10 epochs and the logs of every epoch)
class PrintDot(keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        if epoch % 10 == 0: print('a')
        print(logs)

    # def on_batch_begin(self, batch, logs=None):
    #     if batch % 5 == 0:
    #         print('')
    #         print('2')
    #     print('3', end='c')

    # on_epoch_begin: called automatically at the start of every epoch
    # on_batch_begin: called at the start of every batch
    # on_train_begin: called at the start of training
    # on_train_end: called at the end of training

EPOCHS = 100

history = model.fit(
    normed_train_data, train_labels,
    epochs=EPOCHS, validation_split=0.2, verbose=0,
    callbacks=[PrintDot()])

hist = pd.DataFrame(history.history)
hist['epoch'] = history.epoch
print(hist.tail())

def plot_history(history):
    # Put the recorded metrics into a DataFrame, with the epoch number as a column
    hist = pd.DataFrame(history.history)
    hist['epoch'] = history.epoch

    plt.figure()  # new figure
    plt.xlabel('Epoch')
    plt.ylabel('Mean Abs Error [MPG]')
    plt.plot(hist['epoch'], hist['mae'],      # draw a line from the data
             label='Train Error')
    plt.plot(hist['epoch'], hist['val_mae'],  # matplotlib picks a new color automatically
             label='Val Error')
    plt.ylim([0, 5])
    plt.legend()  # add the legend

    plt.figure()
    plt.xlabel('Epoch')
    plt.ylabel('Mean Square Error [$MPG^2$]')
    plt.plot(hist['epoch'], hist['mse'],
             label='Train Error')
    plt.plot(hist['epoch'], hist['val_mse'],
             label='Val Error')
    plt.ylim([0, 20])
    plt.legend()
    plt.show()

plot_history(history)
```

The cleaned-up code

```python
import pathlib

import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

# Build the model
def build_model():
    model = keras.Sequential([
        layers.Dense(64, activation='relu', input_shape=[len(train_dataset.keys())]),
        layers.Dense(64, activation='relu'),
        layers.Dense(1)
    ])
    optimizer = tf.keras.optimizers.RMSprop(0.001)
    model.compile(loss='mse',
                  optimizer=optimizer,
                  metrics=['mae', 'mse'])
    return model

# Callback
# Display training progress (prints 'a' every 10 epochs and the logs of every epoch)
class PrintDot(keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        if epoch % 10 == 0: print('a')
        print(logs)

    # def on_batch_begin(self, batch, logs=None):
    #     if batch % 5 == 0:
    #         print('')
    #         print('2')
    #     print('3', end='c')

    # on_epoch_begin: called automatically at the start of every epoch
    # on_batch_begin: called at the start of every batch
    # on_train_begin: called at the start of training
    # on_train_end: called at the end of training

# Plot how the error changes over the epochs
def plot_history(history):
    # Put the recorded metrics into a DataFrame, with the epoch number as a column
    hist = pd.DataFrame(history.history)
    hist['epoch'] = history.epoch

    plt.figure()  # new figure
    plt.xlabel('Epoch')
    plt.ylabel('Mean Abs Error [MPG]')
    plt.plot(hist['epoch'], hist['mae'],      # draw a line from the data
             label='Train Error')
    plt.plot(hist['epoch'], hist['val_mae'],  # matplotlib picks a new color automatically
             label='Val Error')
    plt.ylim([0, 5])
    plt.legend()  # add the legend

    plt.figure()
    plt.xlabel('Epoch')
    plt.ylabel('Mean Square Error [$MPG^2$]')
    plt.plot(hist['epoch'], hist['mse'],
             label='Train Error')
    plt.plot(hist['epoch'], hist['val_mse'],
             label='Val Error')
    plt.ylim([0, 20])
    plt.legend()
    plt.show()

# Download the data (once) and get its local path
dataset_path = keras.utils.get_file("auto-mpg.data", "http://archive.ics.uci.edu/ml/machine-learning-databases/auto-mpg/auto-mpg.data")

# Column names that give each field its meaning
column_names = ['MPG', 'Cylinders', 'Displacement', 'Horsepower', 'Weight',
                'Acceleration', 'Model Year', 'Origin']

# Load the data
raw_dataset = pd.read_csv(dataset_path, names=column_names,
                          na_values="?", comment='\t',
                          sep=" ", skipinitialspace=True)

# Work on a copy of the data
dataset = raw_dataset.copy()
dataset = dataset.dropna()  # drop the rows with missing values
# print(dataset.isna().sum())

origin = dataset.pop('Origin')
# The "Origin" column encodes the country. Such codes are not intuitive as categories,
# so split them into 3 columns where only one country is set to 1 (one-hot encoding)
dataset['USA'] = (origin == 1)*1.0
dataset['Europe'] = (origin == 2)*1.0
dataset['Japan'] = (origin == 3)*1.0

# Split into training and test sets
train_dataset = dataset.sample(frac=0.8, random_state=0)  # randomly take 80% of the rows, without repeats
test_dataset = dataset.drop(train_dataset.index)          # test set = full dataset - training set

# sns.pairplot(train_dataset[["MPG", "Cylinders", "Displacement", "Weight"]], diag_kind="kde")
# plt.show()

train_stats = train_dataset.describe()  # summary statistics
train_stats.pop("MPG")                  # remove this column
train_stats = train_stats.transpose()   # transpose: swap rows and columns
# print(train_stats)

train_labels = train_dataset.pop('MPG')  # move this column out of train_dataset into train_labels
test_labels = test_dataset.pop('MPG')

# Normalize the data
def norm(x):
    return (x - train_stats['mean']) / train_stats['std']

normed_train_data = norm(train_dataset)
normed_test_data = norm(test_dataset)

# Build the model
model = build_model()
# model.summary()  # inspect the model

# Trial prediction
# example_batch = normed_train_data[:10]
# example_result = model.predict(example_batch)
# print(example_result)

# Train for up to EPOCHS epochs, i.e. pass over the training data EPOCHS times
EPOCHS = 100

# Monitor the validation loss; stop if it has not improved for `patience` consecutive epochs
early_stop = keras.callbacks.EarlyStopping(monitor='val_loss', patience=50)

# Train and keep the history
history = model.fit(
    normed_train_data, train_labels,
    epochs=EPOCHS, validation_split=0.2, verbose=0,
    callbacks=[early_stop, PrintDot()])

# Show loss, mae, mse, val_loss, val_mae, val_mse and epoch as a table
# hist = pd.DataFrame(history.history)
# hist['epoch'] = history.epoch  # add the epoch number as a column
# print(hist.tail())

# Plot error vs. epoch
# plot_history(history)

# Evaluate the model on the test set
loss, mae, mse = model.evaluate(normed_test_data, test_labels, verbose=2)
print("Testing set Mean Abs Error: {:5.2f} MPG".format(mae))  # MPG: miles per gallon, the US fuel-economy unit

# Predict, then flatten the (n, 1) output into a 1-D array
test_predictions = model.predict(normed_test_data).flatten()
# flatten() collapses multi-dimensional data into one dimension

# Compare the predicted MPG values for the test set with the true values
plt.scatter(test_labels, test_predictions)
plt.xlabel('True Values [MPG]')
plt.ylabel('Predictions [MPG]')
plt.axis('equal')
plt.axis('square')
plt.xlim([0, plt.xlim()[1]])
plt.ylim([0, plt.ylim()[1]])
_ = plt.plot([-100, 100], [-100, 100])
plt.show()

# Look at the error distribution
error = test_predictions - test_labels
plt.hist(error, bins=25)
plt.xlabel("Prediction Error [MPG]")
_ = plt.ylabel("Count")
plt.show()
```