Getting the Project

① The project code has been uploaded to 【是云开源】; reply with 【豆瓣爬虫】 to get it (free and open source).

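Project Overview

The spider crawls Douban Books, collects the first 10 books listed under each book tag, and saves them to a database. The project is for learning and reference only; do not use it for malicious attacks!

Target URL: https://book.douban.com/tag/?view=type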
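The spider's `start_urls` comes from a `get_tags()` helper that the excerpt does not show. As a rough sketch of what such a helper might do, the snippet below pulls tag names out of links of the form `/tag/NAME` and percent-encodes them into tag-page URLs. The function names, the regular expression, and the sample HTML are assumptions for illustration, not the project's actual code.

```python
import re
from urllib.parse import quote

BASE_URL = "https://book.douban.com/tag/"


def extract_tag_names(html):
    """Pull tag names out of links shaped like <a href="/tag/NAME">."""
    # Excluding '?' skips links such as /tag/?view=type that carry no tag name.
    return re.findall(r'href="/tag/([^"?]+)"', html)


def build_tag_urls(tag_names):
    """Percent-encode each tag name (UTF-8) and append it to the base URL."""
    return [BASE_URL + quote(name) for name in tag_names]


# Hypothetical fragment standing in for the real tag index page.
sample_html = (
    '<td><a href="/tag/推理">推理</a></td>'
    '<td><a href="/tag/小说">小说</a></td>'
)

tag_urls = build_tag_urls(extract_tag_names(sample_html))
print(tag_urls[0])  # https://book.douban.com/tag/%E6%8E%A8%E7%90%86
```

The first URL matches the commented-out `start_urls` example in the spider code, which is the percent-encoded form of the tag 推理.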

Technology Stack

Python, Scrapy framework

Code Excerpt

Core spider code:
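The write-up says the scraped books are saved to a database but does not show how. In Scrapy this kind of persistence usually lives in an item pipeline, so here is a minimal sketch of one. It assumes SQLite, a table named `books`, and the class name `SQLitePipeline`; the original project may well use a different database and schema. Only the field names are taken from the spider code.

```python
import sqlite3


class SQLitePipeline:
    """Hypothetical Scrapy item pipeline that stores each book in SQLite."""

    # Field names match the keys the spider sets on each item.
    FIELDS = (
        "book_name", "book_star", "book_pl", "book_author",
        "book_publish", "book_date", "book_price",
    )

    def __init__(self, db_path="books.db"):
        self.db_path = db_path  # assumed file name, not from the project

    def open_spider(self, spider):
        # Called once when the spider starts: open the DB and ensure the table exists.
        self.conn = sqlite3.connect(self.db_path)
        columns = ", ".join(f"{name} TEXT" for name in self.FIELDS)
        self.conn.execute(f"CREATE TABLE IF NOT EXISTS books ({columns})")

    def process_item(self, item, spider):
        # Called for every yielded item; missing fields are stored as NULL.
        placeholders = ", ".join("?" for _ in self.FIELDS)
        values = [item.get(name) for name in self.FIELDS]
        self.conn.execute(f"INSERT INTO books VALUES ({placeholders})", values)
        self.conn.commit()
        return item

    def close_spider(self, spider):
        # Called once when the spider finishes.
        self.conn.close()
```

A pipeline like this would be enabled through the `ITEM_PIPELINES` setting in the project's `settings.py`, keyed by the class's import path, which depends on the project layout.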

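    import scrapy
    from scrapy.selector import Selector

    # get_tags() and DbbooksItem are defined elsewhere in the project (imports not shown).


    class DBBookspiderSpider(scrapy.Spider):
        name = "dbbookspider"
        allowed_domains = ["book.douban.com"]
        start_urls = get_tags()
        # start_urls = ['https://book.douban.com/tag/%E6%8E%A8%E7%90%86']

        def parse(self, response):
            sel = Selector(response)
            book_list = sel.css('#subject_list > ul > li')
            # Keep only the first 10 books listed under each tag.
            for book in book_list[:10]:
                item = DbbooksItem()
                try:
                    # strip() removes the given characters (whitespace by default) from both ends of a string
                    item['book_name'] = book.xpath('div[@class="info"]/h2/a/text()').extract()[0].strip()
                    item['book_star'] = book.xpath(
                        "div[@class='info']/div[2]/span[@class='rating_nums']/text()").extract()[0].strip()
                    item['book_pl'] = book.xpath("div[@class='info']/div[2]/span[@class='pl']/text()").extract()[0].strip()
                    # Split the pub line on '/' and pop price, date, and publisher from the right;
                    # whatever remains is the author field.
                    pub = book.xpath('div[@class="info"]/div[@class="pub"]/text()').extract()[0].strip().split('/')
                    item['book_price'] = pub.pop()
                    item['book_date'] = pub.pop()
                    item['book_publish'] = pub.pop()
                    item['book_author'] = '/'.join(pub)
                except IndexError:
                    # Skip entries that are missing any of the expected fields.
                    continue
                # Yield outside the try block so generator shutdown is never swallowed.
                yield item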
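The least obvious step in `parse` is how the publication line is taken apart: it is split on `/`, the last three pieces are popped off as price, date, and publisher, and everything left over is rejoined as the author field (so translators stay attached to the author). The standalone sketch below isolates that logic. The function name `parse_pub` and the sample string are made up for illustration, and unlike the spider it also strips whitespace around each piece.

```python
def parse_pub(pub_text):
    """Split an 'author / ... / publisher / date / price' line from the right."""
    parts = [part.strip() for part in pub_text.strip().split("/")]
    if len(parts) < 3:
        raise ValueError(f"expected at least 3 '/'-separated fields, got {len(parts)}")
    # Pop from the end: the rightmost fields have fixed meaning,
    # while the number of author/translator fields on the left varies.
    price = parts.pop()
    date = parts.pop()
    publisher = parts.pop()
    return {
        "book_author": "/".join(parts),
        "book_publish": publisher,
        "book_date": date,
        "book_price": price,
    }


# Hypothetical publication line in the same shape the spider expects.
info = parse_pub("Author A / Translator B / Example Press / 2020-1 / 39.00元")
print(info["book_author"])   # Author A/Translator B
print(info["book_publish"])  # Example Press
```

Popping from the right is what keeps this robust when a book lists several authors or translators; indexing from the left would shift every field.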

Project Results

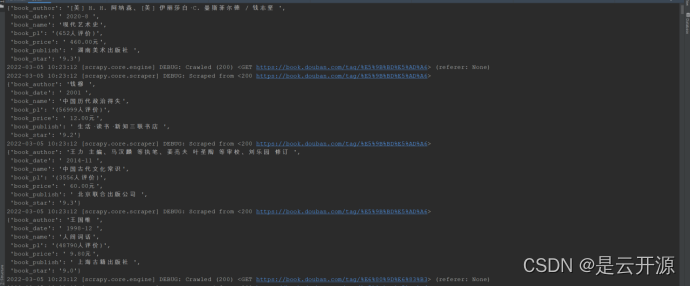

PyCharm run output:

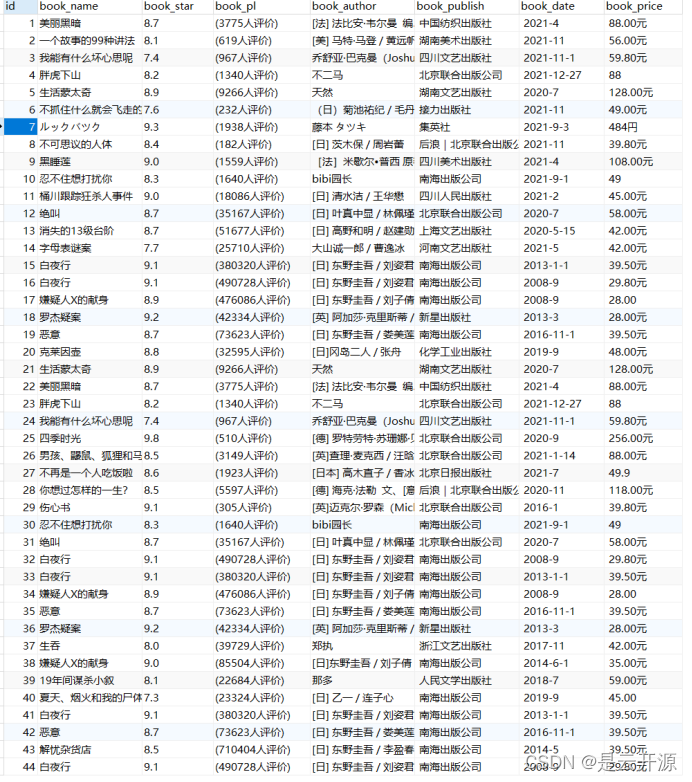

Data saved in the database: