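These are my notes from studying another author's blog post and Bilibili video walkthrough. Before reading this author's project, it is worth watching this uploader's introductory deep-learning videos.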

Project source code: https://github.com/bubbliiiing/GAN-keras

This post uses the code in gan.py.

1.Generator

```python
def build_generator(self):
    # --------------------------------- #
    #   Generator: takes a vector of random numbers as input
    # --------------------------------- #
    model = Sequential()

    model.add(Dense(256, input_dim=self.latent_dim))  # fully connect the 100-dim noise to 256 units
    model.add(LeakyReLU(alpha=0.2))                   # activation
    model.add(BatchNormalization(momentum=0.8))       # batch normalization

    model.add(Dense(512))                             # map the 256 units to 512 units
    model.add(LeakyReLU(alpha=0.2))                   # activation
    model.add(BatchNormalization(momentum=0.8))       # batch normalization

    model.add(Dense(1024))                            # map the 512 units to 1024 units
    model.add(LeakyReLU(alpha=0.2))                   # activation
    model.add(BatchNormalization(momentum=0.8))       # batch normalization

    # np.prod(self.img_shape) == 28*28*1 == 784: map the 1024 units to 784 units;
    # tanh keeps every output pixel in [-1, 1]
    model.add(Dense(np.prod(self.img_shape), activation='tanh'))
    model.add(Reshape(self.img_shape))                # reshape to 28x28x1

    noise = Input(shape=(self.latent_dim,))           # n-dim noise input, e.g. 100-dim
    img = model(noise)                                # the model turns the noise into an image

    return Model(noise, img)
```
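To make the layer sizes concrete, here is a small pure-Python sketch (not part of the original project) that tallies the generator's parameters layer by layer. It assumes the usual Keras accounting: a Dense layer stores `n_in * n_out` weights plus `n_out` biases, BatchNormalization keeps four values per unit (trainable gamma and beta, non-trainable moving mean and moving variance), and LeakyReLU and Reshape have no weights. The helper names are hypothetical.

```python
# Hypothetical helpers: count the parameters of the MLP generator above.
LATENT_DIM = 100
IMG_SHAPE = (28, 28, 1)


def dense_params(n_in, n_out):
    """Weight matrix (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out


def bn_params(units):
    """gamma, beta (trainable) + moving mean, moving variance (non-trainable)."""
    return 4 * units


def generator_param_count(latent_dim=LATENT_DIM, img_shape=IMG_SHAPE):
    """Return (total_params, non_trainable_params) for Dense 256/512/1024 + BN, then Dense 784."""
    out_units = 1
    for d in img_shape:
        out_units *= d  # same as np.prod(img_shape) == 784

    total, non_trainable = 0, 0
    n_in = latent_dim
    for units in [256, 512, 1024]:
        total += dense_params(n_in, units) + bn_params(units)
        non_trainable += 2 * units  # moving mean + moving variance
        n_in = units

    total += dense_params(n_in, out_units)  # final tanh Dense layer
    return total, non_trainable


total, non_trainable = generator_param_count()
print(total, non_trainable, total - non_trainable)  # 1493520 3584 1489936
```

Under those assumptions the totals should match what `model.summary()` reports for this generator. Most of the roughly 1.5M parameters sit in the last two Dense layers.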

2.Discriminator

```python
def build_discriminator(self):
    # ----------------------------------- #
    #   Discriminator: scores the input image
    # ----------------------------------- #
    model = Sequential()

    # Input: one image
    model.add(Flatten(input_shape=self.img_shape))  # flatten the 28x28x1 image into a 784-dim vector
    model.add(Dense(512))                           # map the 784 values to 512 units
    model.add(LeakyReLU(alpha=0.2))                 # activation; alpha is the slope for negative inputs, not a learning rate
    model.add(Dense(256))                           # map the 512 units to 256 units
    model.add(LeakyReLU(alpha=0.2))                 # activation

    # Real or fake?
    model.add(Dense(1, activation='sigmoid'))       # a single output: probability that the image is real

    img = Input(shape=self.img_shape)
    validity = model(img)

    return Model(img, validity)
```
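The `alpha` argument of `LeakyReLU` is a common point of confusion: it is the slope applied to negative inputs, not a learning rate. The NumPy sketch below (an illustration, not code from the repository) runs the same Flatten → 512 → 256 → 1 stack with random, untrained weights to show the tensor shapes and that the sigmoid output is a probability in (0, 1). The weight initialisation is an arbitrary assumption, not Keras's default initializer.

```python
import numpy as np


def leaky_relu(x, alpha=0.2):
    """LeakyReLU: x for x > 0, alpha * x otherwise. alpha is a slope, not a learning rate."""
    return np.where(x > 0, x, alpha * x)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def discriminator_forward(imgs, weights):
    """Forward pass of the Flatten -> 512 -> 256 -> 1 discriminator with the given weights."""
    h = imgs.reshape(imgs.shape[0], -1)  # Flatten: (batch, 28, 28, 1) -> (batch, 784)
    (w1, b1), (w2, b2), (w3, b3) = weights
    h = leaky_relu(h @ w1 + b1)          # (batch, 512)
    h = leaky_relu(h @ w2 + b2)          # (batch, 256)
    return sigmoid(h @ w3 + b3)          # (batch, 1): probability "real"


rng = np.random.default_rng(0)
sizes = [784, 512, 256, 1]
# Random weights for illustration only (untrained, not Keras's initializer).
weights = [(rng.normal(0, 0.05, (m, n)), np.zeros(n)) for m, n in zip(sizes[:-1], sizes[1:])]

imgs = rng.uniform(-1, 1, (8, 28, 28, 1))  # a batch already scaled to [-1, 1]
probs = discriminator_forward(imgs, weights)
print(probs.shape)  # (8, 1)
print(leaky_relu(np.array([-10.0, 0.0, 3.0])))
```

A negative pre-activation of -10 comes out as -2 rather than 0, which is the point of LeakyReLU: negative inputs still pass a small gradient back to the generator through the discriminator.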

3.Full code

```python
from __future__ import print_function, division

from keras.datasets import mnist
from keras.layers import Input, Dense, Reshape, Flatten, Dropout
from keras.layers import BatchNormalization, Activation, ZeroPadding2D
from keras.layers.advanced_activations import LeakyReLU
from keras.layers.convolutional import UpSampling2D, Conv2D
from keras.models import Sequential, Model
from keras.optimizers import Adam

import matplotlib.pyplot as plt

import sys
import os

import numpy as np


class GAN():
    def __init__(self):
        # --------------------------------- #
        #   28 rows, 28 columns: the MNIST image shape
        # --------------------------------- #
        self.img_rows = 28
        self.img_cols = 28
        self.channels = 1
        # (28, 28, 1)
        self.img_shape = (self.img_rows, self.img_cols, self.channels)
        self.latent_dim = 100

        # Adam optimizer: learning rate 0.0002, beta_1 = 0.5
        optimizer = Adam(0.0002, 0.5)

        self.discriminator = self.build_discriminator()
        self.discriminator.compile(loss='binary_crossentropy',
                                   optimizer=optimizer,
                                   metrics=['accuracy'])

        self.generator = self.build_generator()      # build the generator model

        gan_input = Input(shape=(self.latent_dim,))  # noise input
        img = self.generator(gan_input)              # generate an image

        # Do not update the discriminator while training the generator
        self.discriminator.trainable = False

        # Let the discriminator judge the generated (fake) image
        validity = self.discriminator(img)

        # Stack generator + frozen discriminator; training this model updates only the generator
        self.combined = Model(gan_input, validity)
        self.combined.compile(loss='binary_crossentropy', optimizer=optimizer)

    def build_generator(self):
        # --------------------------------- #
        #   Generator: takes a vector of random numbers as input
        # --------------------------------- #
        model = Sequential()
        model.add(Dense(256, input_dim=self.latent_dim))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization(momentum=0.8))
        model.add(Dense(512))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization(momentum=0.8))
        model.add(Dense(1024))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization(momentum=0.8))
        model.add(Dense(np.prod(self.img_shape), activation='tanh'))
        model.add(Reshape(self.img_shape))

        noise = Input(shape=(self.latent_dim,))
        img = model(noise)
        return Model(noise, img)

    def build_discriminator(self):
        # ----------------------------------- #
        #   Discriminator: scores the input image
        # ----------------------------------- #
        model = Sequential()
        # Input: one image
        model.add(Flatten(input_shape=self.img_shape))
        model.add(Dense(512))
        model.add(LeakyReLU(alpha=0.2))
        model.add(Dense(256))
        model.add(LeakyReLU(alpha=0.2))
        # Real or fake?
        model.add(Dense(1, activation='sigmoid'))

        img = Input(shape=self.img_shape)
        validity = model(img)
        return Model(img, validity)

    def train(self, epochs, batch_size=128, sample_interval=50):
        # Load the data
        (X_train, _), (_, _) = mnist.load_data()

        # Scale pixels from [0, 255] to [-1, 1] to match the generator's tanh output
        X_train = X_train / 127.5 - 1.
        # (28, 28) -> (28, 28, 1)
        X_train = np.expand_dims(X_train, axis=3)

        # Create the labels
        valid = np.ones((batch_size, 1))   # real images are labelled 1
        fake = np.zeros((batch_size, 1))   # fake images are labelled 0

        # Note: each "epoch" here is one batch update, not a full pass over the dataset
        for epoch in range(epochs):
            # --------------------------- #
            #   Randomly pick batch_size images
            #   and train the discriminator
            # --------------------------- #
            idx = np.random.randint(0, X_train.shape[0], batch_size)  # indices of random real images
            imgs = X_train[idx]

            noise = np.random.normal(0, 1, (batch_size, self.latent_dim))  # a batch of noise vectors
            gen_imgs = self.generator.predict(noise)  # the generator turns the noise into fake images

            # train_on_batch runs one update on a single batch and returns [loss, accuracy]
            d_loss_real = self.discriminator.train_on_batch(imgs, valid)     # real images vs. label 1
            d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)  # fake images vs. label 0
            d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

            # --------------------------- #
            #   Train the generator
            # --------------------------- #
            noise = np.random.normal(0, 1, (batch_size, self.latent_dim))  # a fresh batch of noise
            # Label the fakes as real (1) so the generator learns to fool the discriminator
            g_loss = self.combined.train_on_batch(noise, valid)

            print("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100 * d_loss[1], g_loss))

            if epoch % sample_interval == 0:
                self.sample_images(epoch)

    def sample_images(self, epoch):
        r, c = 5, 5
        noise = np.random.normal(0, 1, (r * c, self.latent_dim))
        gen_imgs = self.generator.predict(noise)

        # Rescale from [-1, 1] back to [0, 1] for display
        gen_imgs = 0.5 * gen_imgs + 0.5

        fig, axs = plt.subplots(r, c)
        cnt = 0
        for i in range(r):
            for j in range(c):
                axs[i, j].imshow(gen_imgs[cnt, :, :, 0], cmap='gray')
                axs[i, j].axis('off')
                cnt += 1
        fig.savefig("images/%d.png" % epoch)
        plt.close()


if __name__ == '__main__':
    if not os.path.exists("./images"):
        os.makedirs("./images")
    gan = GAN()
    gan.train(epochs=30000, batch_size=256, sample_interval=200)
```
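The numeric bookkeeping inside `train()` can be reproduced with plain NumPy. The sketch below is a simplified re-implementation, not the Keras code path: it assumes mean binary cross-entropy with predictions clipped to `[1e-7, 1 - 1e-7]` (close to what Keras does), and the discriminator outputs are made-up numbers. It shows the pixel scaling, why `d_loss` averages both the loss and the accuracy, and why the generator's loss is computed against the `valid` labels.

```python
import numpy as np

EPS = 1e-7  # assumed clipping constant, similar to Keras's default epsilon


def binary_crossentropy(y_true, y_pred):
    """Mean binary cross-entropy over a batch (a sketch, not Keras's own code)."""
    y_pred = np.clip(y_pred, EPS, 1.0 - EPS)
    return float(np.mean(-(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))))


def accuracy(y_true, y_pred):
    """Fraction of predictions on the correct side of 0.5."""
    return float(np.mean((y_pred > 0.5) == (y_true > 0.5)))


def scale_pixels(x):
    """[0, 255] -> [-1, 1], as train() does before feeding the discriminator."""
    return x / 127.5 - 1.0


def to_display(x):
    """[-1, 1] -> [0, 1], as sample_images() does before plotting."""
    return 0.5 * x + 0.5


batch_size = 4
valid = np.ones((batch_size, 1))   # label 1 = real
fake = np.zeros((batch_size, 1))   # label 0 = fake

# Made-up discriminator outputs, for illustration only.
d_on_real = np.array([[0.9], [0.8], [0.6], [0.4]])
d_on_fake = np.array([[0.1], [0.3], [0.2], [0.7]])

d_loss_real = [binary_crossentropy(valid, d_on_real), accuracy(valid, d_on_real)]
d_loss_fake = [binary_crossentropy(fake, d_on_fake), accuracy(fake, d_on_fake)]
d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)  # averages the loss AND the accuracy

# Generator step: fakes scored against label 1. For simplicity we reuse d_on_fake;
# train() actually draws fresh noise for this step.
g_loss = binary_crossentropy(valid, d_on_fake)

print("[D loss: %f, acc.: %.2f%%] [G loss: %f]" % (d_loss[0], 100 * d_loss[1], g_loss))
```

With these made-up numbers the discriminator is right on three of the four fakes, so `g_loss` (about 1.37) is much larger than the discriminator's fake-image loss (about 0.47): the generator is penalised exactly where the discriminator wins.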

4.Running the project

4.1 Download the project

Project URL: https://github.com/bubbliiiing/GAN-keras

4.2 Environment setup

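Create a virtual environment in Anaconda and install:

tensorflow==1.13.1

keras==2.1.5

TensorFlow 1.13.1 only provides packages for Python 3.7 and earlier, so create the environment with an older Python version.

The author says tensorflow-gpu is required, but this project is small (a few fully connected layers trained on 28×28 MNIST images), so it also runs on a CPU.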

4.3 Run the code

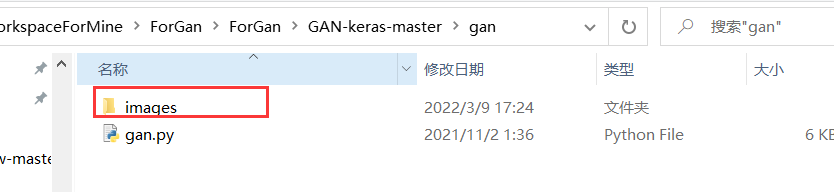

Change into the gan directory and run the Python file directly:

python gan.py

4.4 Check the results

Results after 30,000 training iterations:
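Because `train()` calls `sample_images` only when `epoch % sample_interval == 0`, and `range(epochs)` stops at `epochs - 1`, you can predict which grids end up in `images/`. This tiny pure-Python sketch (the helper name is hypothetical) mirrors that check for the settings used in `__main__`:

```python
def saved_sample_files(epochs=30000, sample_interval=200):
    """Mirror of the `if epoch % sample_interval == 0` check in train()."""
    return ["images/%d.png" % epoch for epoch in range(epochs) if epoch % sample_interval == 0]


files = saved_sample_files()
print(len(files), files[0], files[-1])  # 150 images/0.png images/29800.png
```

So with `epochs=30000` the newest grid on disk is `images/29800.png`, and each grid holds 5 × 5 = 25 generated digits.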