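Preface

A friend (I'll call him Ma) crawled my blog data for me, but the code was rough, so I am refactoring it here.

Along the way, I'll document the whole process of analyzing, designing, and coding the crawler.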

Requirements

For each of my blog posts, crawl the following fields: ['title', 'reads', 'likes', 'dislikes', 'comments', 'favorites', 'link']

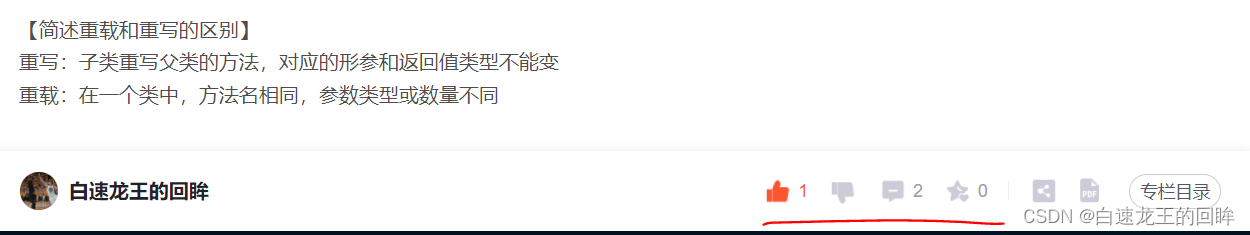

Open a post and inspect it. Let's check, one by one, which tag each field lives in.

title:

    title = soup.find('h1', class_='title-article', id='articleContentId').text.strip()

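With the code above, the title is easy to get. The read count is obtained the same way (from the span with class read-count), so I won't go through it here.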

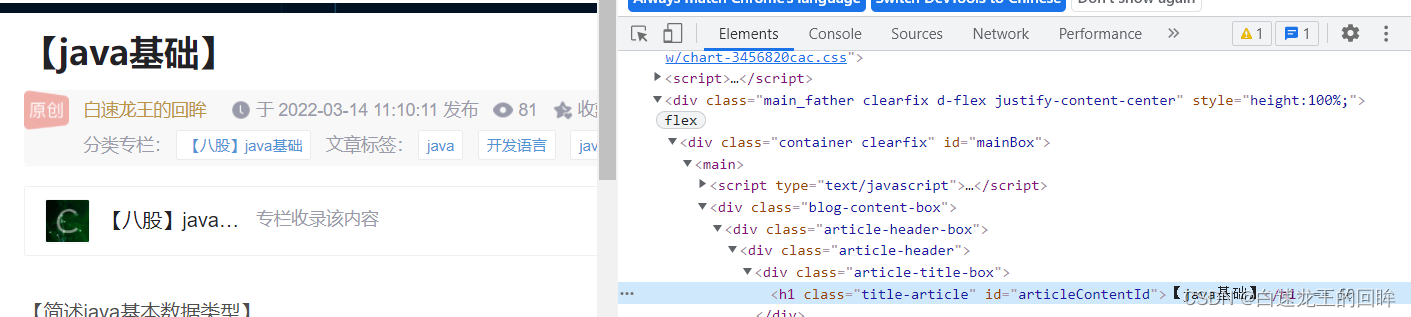

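However, likes, dislikes, comments, and favorites all sit in span elements that share the same class, count, so they are hard to tell apart by selector. We simply collect every span with class count and then work out which position holds which value: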

    count_items = soup.find_all('span', class_='count')
    # Positions in count_items: 0 = likes, 1 = dislikes, 2 = comments, 3 = favorites
    # An empty span means the count is zero
    like = int(count_items[0].text.strip() or '0')
    dislike = int(count_items[1].text.strip() or '0')
    review = int(count_items[2].text.strip() or '0')
    collect = int(count_items[3].text.strip() or '0')
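The four assignments above differ only in the index, so they could be folded into a small helper. The sketch below is one possible way to do that, not part of the original crawler: parse_count, parse_counts, and COUNT_FIELDS are names made up for illustration, and the helper works on plain strings (the .text of each span) so it can be tried without fetching a page.

```python
# Hypothetical helper names; the field order follows the pattern found above:
# 0 = likes, 1 = dislikes, 2 = comments, 3 = favorites.
COUNT_FIELDS = ('like', 'dislike', 'review', 'collect')


def parse_count(text):
    """Convert the text of a count span to an int, treating blank text as 0."""
    text = text.strip()
    return int(text) if text else 0


def parse_counts(texts):
    """Map the first four count texts to named fields."""
    if len(texts) < len(COUNT_FIELDS):
        raise ValueError('expected at least {} count values'.format(len(COUNT_FIELDS)))
    return {name: parse_count(texts[i]) for i, name in enumerate(COUNT_FIELDS)}


# Example with made-up values; an empty string stands for a span with no number.
sample = parse_counts(['12', '', ' 3 ', '40'])
print(sample)
```

With BeautifulSoup, the input would be something like [item.text for item in count_items].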

OK, these fields are all fairly easy to get. The real question is:

How do we get the URL of every post?

That calls for dynamic crawling.

Dynamic crawling: getting the URLs of all my posts

First, all of my posts are listed on my home page:

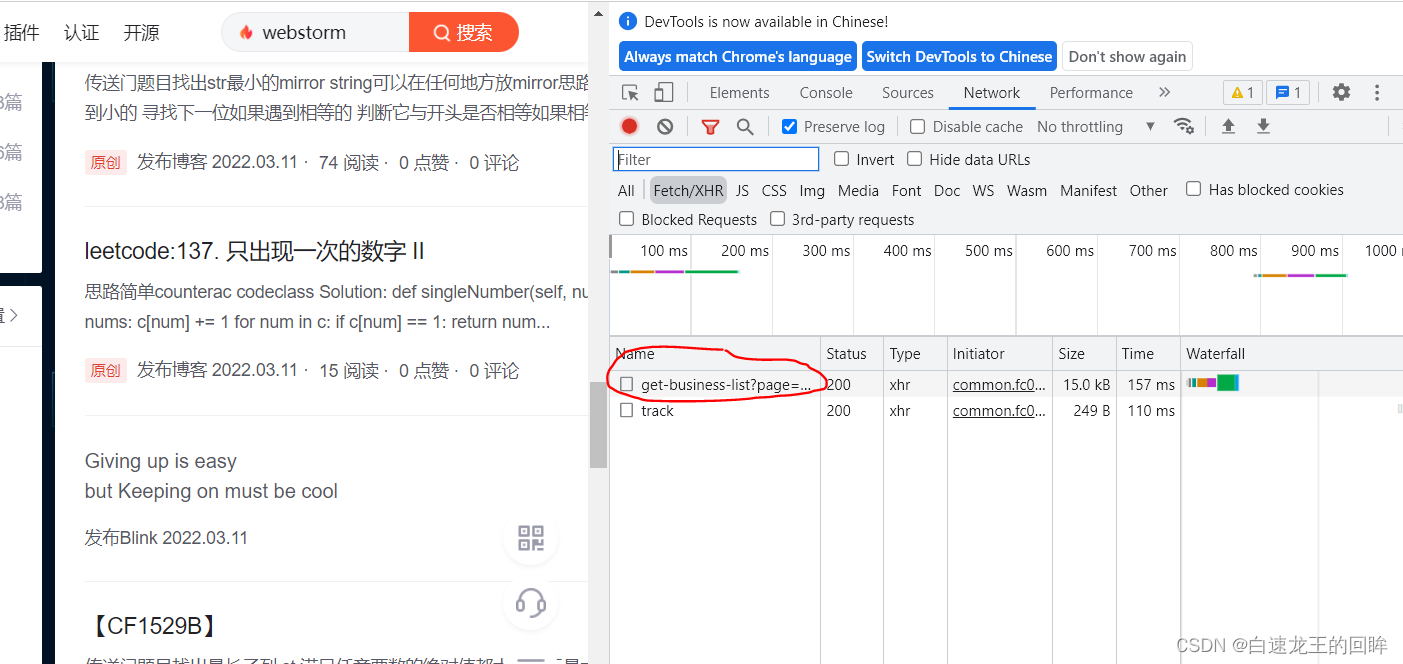

However, the home page does not load every post at once; it loads more posts step by step as you scroll down.

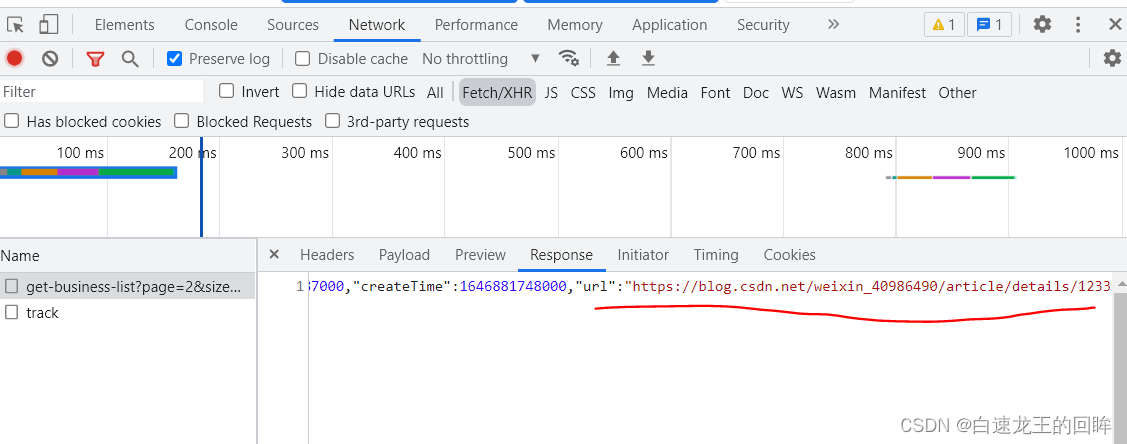

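So we need to watch the XHR requests in the Network tab of the browser's developer tools to see which API the page calls:

Scroll down the page while watching the XHR list change: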

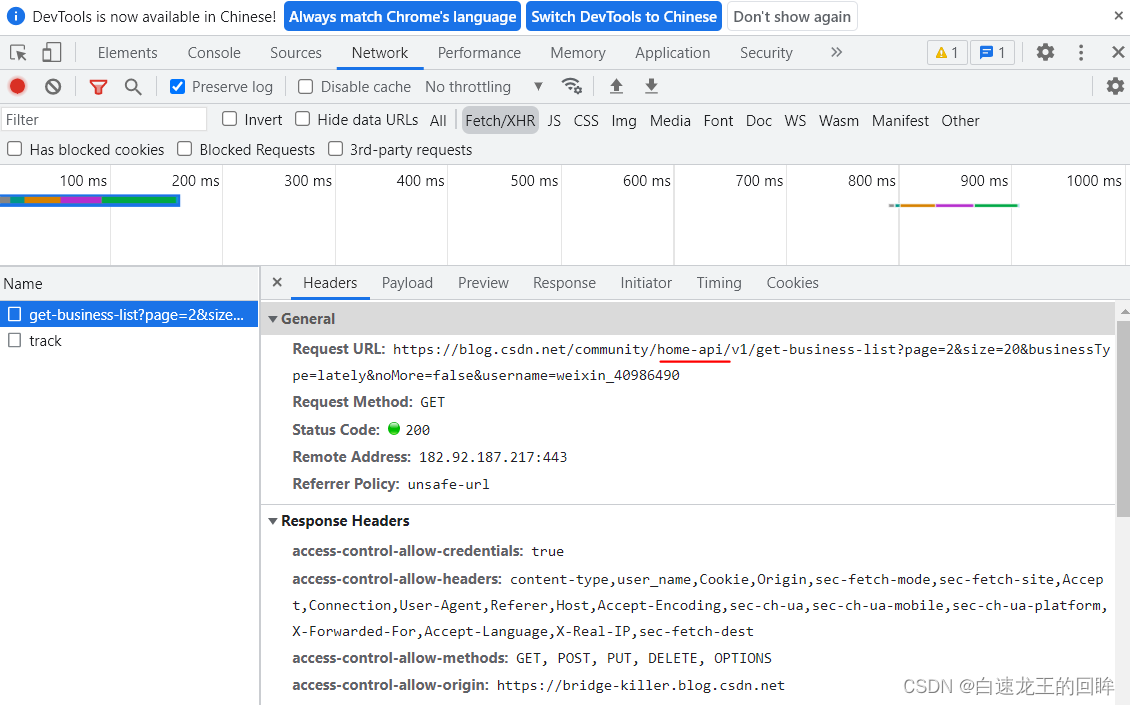

There it is. Once we scroll far enough, this request shows up, and its URL is the one we are looking for.
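Successive requests of this kind differ only in the page parameter. As a rough sketch, the URL can be assembled from its query parameters instead of being kept as one long string. The endpoint and parameter values are copied from the request URL used in the code later in this post; build_menu_url is a name introduced here for illustration.

```python
from urllib.parse import urlencode

# Endpoint and parameters as they appear in the request URL used in this post.
API_BASE = 'https://blog.csdn.net/community/home-api/v1/get-business-list'


def build_menu_url(page, username='weixin_40986490', size=20):
    """Build the list-API URL for one page (hypothetical helper)."""
    params = {
        'page': page,
        'size': size,
        'businessType': 'lately',
        'noMore': 'false',
        'username': username,
    }
    return API_BASE + '?' + urlencode(params)


print(build_menu_url(1))
print(build_menu_url(2))
```

Keeping the parameters in a dict makes it easier to see what changes from page to page, and to swap in a different username.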

Sure enough, it is an API. Let's look at its response:

To my surprise, it returns the URL of every post!
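To make the shape of that response concrete, here is a small sketch that pulls the URLs out of a payload. Only the data -> list -> url nesting is taken from the code in this post; the sample JSON, its example.com links, and the extract_urls name are made up for illustration, and the real response carries more fields than shown.

```python
import json

# Made-up sample payload: only the data -> list -> url nesting mirrors the real API.
SAMPLE_RESPONSE = '''
{
  "data": {
    "list": [
      {"url": "https://example.com/post/1"},
      {"url": "https://example.com/post/2"}
    ]
  }
}
'''


def extract_urls(payload):
    """Return the url of every entry in payload['data']['list']."""
    return [article['url'] for article in payload['data']['list']]


urls = extract_urls(json.loads(SAMPLE_RESPONSE))
for url in urls:
    print(url)
```

In the crawler itself, the payload would come from the JSON body of the API response rather than from a string.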

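At this point the basic logic is clear.

Our approach

Following the API's paging pattern, iterate over the pages to collect the URL of every post, then visit each URL to extract the information.

Finally, write all the collected information to an Excel file using openpyxl's Workbook.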
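The first half of that plan, walking the pages until the API runs out of posts, can be sketched independently of the network. In the sketch below, fetch_page is a stand-in (an assumption, not part of the original code) for a function that requests one page of the API and returns its list of entries; iter_article_urls and the fake data are likewise invented for illustration.

```python
def iter_article_urls(fetch_page, max_page, page_size=20):
    """Yield post URLs page by page until a short page or max_page is reached.

    fetch_page(page) is assumed to return the list of entries for that page,
    each entry being a dict with a 'url' key.
    """
    for page in range(1, max_page + 1):
        entries = fetch_page(page)
        for entry in entries:
            yield entry['url']
        # A page with fewer than page_size entries is the last one.
        if len(entries) < page_size:
            break


# Fake data source standing in for the real API: 45 posts, 20 per page.
FAKE_POSTS = [{'url': 'https://example.com/post/{}'.format(i)} for i in range(45)]


def fake_fetch_page(page, size=20):
    """Return one page of the fake posts, mimicking the paged API."""
    start = (page - 1) * size
    return FAKE_POSTS[start:start + size]


all_urls = list(iter_article_urls(fake_fetch_page, max_page=50))
print(len(all_urls))
```

In the real crawler, fetch_page would wrap the requests call to the list API, with a short pause between pages.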

My code

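    import requests
    import time
    from bs4 import BeautifulSoup
    from openpyxl import Workbook


    class myCrawl:
        def __init__(self, max_page):
            # Collected rows: [title, read, like, dislike, review, collect, article_url]
            self.article_info = []
            # Number of posts parsed successfully
            self.total_article = 0
            # Upper bound on the number of list pages to request
            self.max_page = max_page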

        def worker(self):
            # Step 1: the home page loads posts dynamically as you scroll down;
            # this is the list API it calls. 20 posts per page, {} is the page number.
            menu_url = "https://blog.csdn.net/community/home-api/v1/get-business-list?page={}&size=20&businessType=lately&noMore=false&username=weixin_40986490"
            header = {
                'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36',
                'referer': 'https://bridge-killer.blog.csdn.net/'
            }
            # 20 posts per page and roughly 800 posts in total, so about 40 pages.
            # The + 1 makes max_page inclusive.
            for page in range(1, self.max_page + 1):
                r1 = requests.get(menu_url.format(page), headers=header)
                # print(r1.status_code)
                # Parse the JSON once and reuse it below
                articles = r1.json()['data']['list']
                for article in articles:
                    article_url = article['url']
                    print("we are dealing with " + article_url)
                    r2 = requests.get(article_url, headers=header)
                    soup = BeautifulSoup(r2.text, 'html.parser')
                    # Step 2: extract the fields from the post page
                    try:
                        title = soup.find('h1', class_='title-article', id='articleContentId').text.strip()
                        read = int(soup.find('span', class_='read-count').text.strip())
                        count_items = soup.find_all('span', class_='count')
                        # Positions: 0 = likes, 1 = dislikes, 2 = comments, 3 = favorites
                        like = int(count_items[0].text.strip() or '0')
                        dislike = int(count_items[1].text.strip() or '0')
                        review = int(count_items[2].text.strip() or '0')
                        collect = int(count_items[3].text.strip() or '0')
                        self.article_info.append([title, read, like, dislike, review, collect, article_url])
                        self.total_article += 1
                    except (AttributeError, IndexError, ValueError):
                        # Some entries may be blinks rather than regular posts; skip them
                        pass
                # Fewer than 20 entries means we have reached the last page
                if len(articles) < 20:
                    break
                # Pause between pages to avoid triggering anti-crawling measures
                time.sleep(1)

        def save(self):
            # Row layout: [title, read, like, dislike, review, collect, article_url]
            # Create a new workbook
            wb = Workbook()
            # Select the default worksheet
            sheet = wb.active
            # Rename the worksheet
            sheet.title = 'My blog data'
            # Header row
            sheet.append(['Title', 'Reads', 'Likes', 'Dislikes', 'Comments', 'Favorites', 'Link'])
            # Write the data row by row
            for row in self.article_info:
                sheet.append(row)
            # Save the file
            wb.save('my_blog_data.xlsx')
            print("Collected data for {} blog posts in this run".format(self.total_article))

    if __name__ == '__main__':
        # 50 is an upper bound on pages; the crawl stops earlier once a page has fewer than 20 entries
        spider = myCrawl(50)
        spider.worker()
        spider.save()

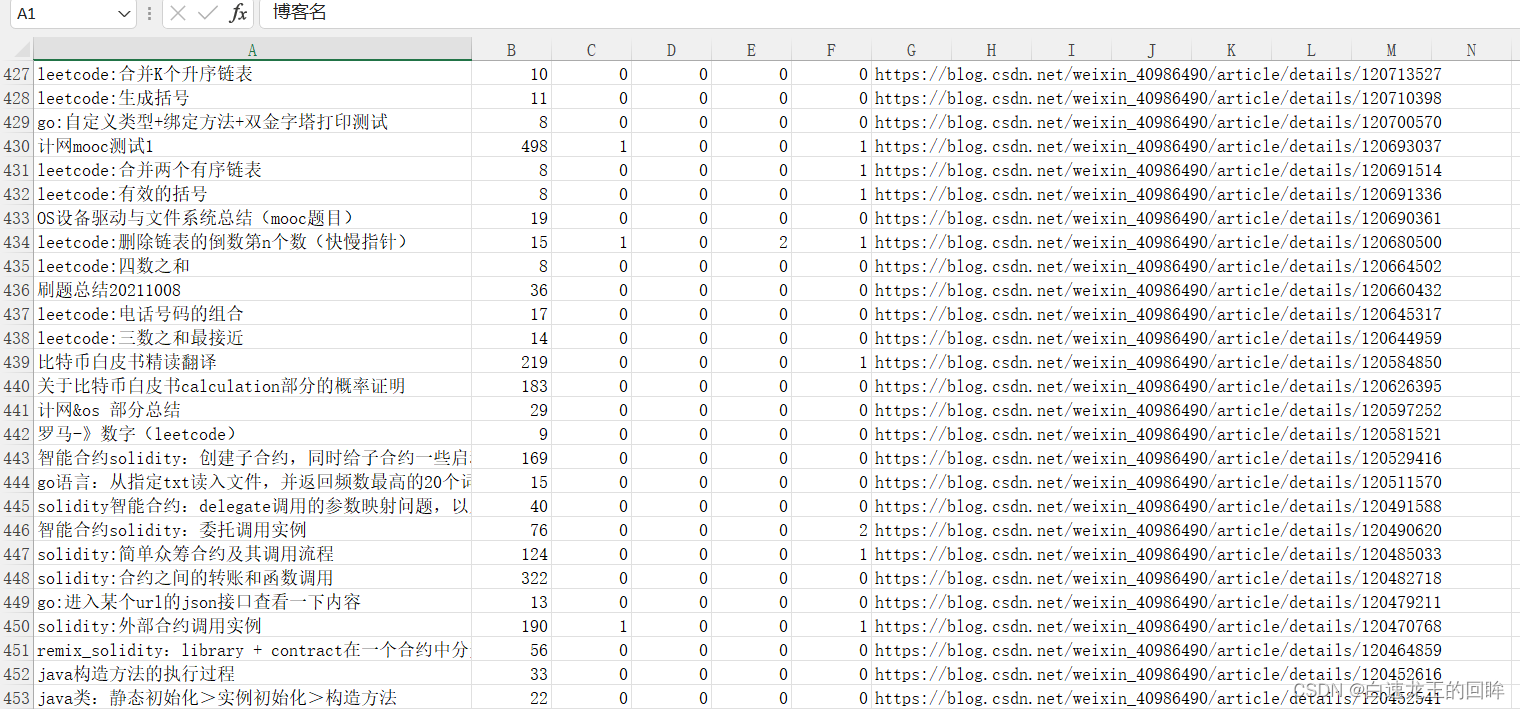

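Crawler results

Summary:

I rewrote the code in object-oriented form, which makes it more readable.

Later I plan to refactor it again with the Scrapy framework, partly to get familiar with Scrapy.

The key to dynamic crawling is finding the API behind the dynamic loading and working out its pattern,

and then getting the actual post URLs from it.

After that, extracting information from each post is ordinary static crawling.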