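Requirements

Based on Part 1 of the course (natural language processing), pick a novel you like and analyze it with the techniques taught in class, without being limited to them. Examples: word segmentation, word frequency, part-of-speech tags, a ranking of characters by number of appearances, a ranking of the foods mentioned in the novel (this one is required, I like to eat), character relationships, and so on.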
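As a rough illustration of what "word frequency with part-of-speech tags" means in practice, the sketch below counts `(word, flag)` pairs with the same filter the script in section 2 applies (drop stop words, single characters, and pure digits). The function name `count_tokens`, the sample tokens, and their flags are hand-written assumptions standing in for the output of a segmenter; they are not taken from a real run.

```python
from collections import Counter


def count_tokens(tagged_tokens, stopwords):
    """Count (word, flag) pairs, skipping stop words, single characters and pure digits."""
    counts = Counter()
    for word, flag in tagged_tokens:
        if word in stopwords or len(word) < 2 or word.isdigit():
            continue
        counts[(word, flag)] += 1
    return counts


# Hand-written sample standing in for segmenter output (assumed, not real data)
sample = [
    ("叶修", "nr"), ("的", "uj"), ("荣耀", "n"),
    ("叶修", "nr"), ("2022", "m"), ("然后", "c"),
]
counts = count_tokens(sample, stopwords={"然后"})

# Print the pairs from most to least frequent
for (word, flag), n in counts.most_common():
    print(word, flag, n)
```

Keying the counter on the `(word, flag)` pair rather than on the word alone keeps the part-of-speech information available for later steps, such as picking out person names.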

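1. Preparation

1.1 Import the libraries

1.2 The novel, user dictionary, food list, stop-word list and other txt files, the font simfang.ttf, and the image used for the word cloud

Download these materials yourself (for example via a Baidu search) or compile them yourself.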
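Because the script reads several input files by relative path, it can help to check that they are all in place before running it. The following is a minimal sketch under that assumption: the file names are the ones the script in section 2 opens, while `REQUIRED_FILES` and `missing_files` are hypothetical helpers introduced only for this illustration.

```python
from pathlib import Path

# File names as they appear in the script in section 2
REQUIRED_FILES = [
    "全职高手.txt",          # the novel
    "全职高手用户字典.txt",   # jieba user dictionary
    "全职高手食物.txt",       # food list, one item per line
    "全职高手人物.txt",       # character list, one name per line
    "stopword.txt",          # stop-word list
    "simfang.ttf",           # Chinese font used by the word cloud
    "动漫.jpg",              # mask image for the word cloud
]


def missing_files(folder=".", names=REQUIRED_FILES):
    """Return the required file names that are not present in `folder`."""
    root = Path(folder)
    return [name for name in names if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All input files found.")
```

Run it from the folder that holds the script; an empty result means every file the script expects is present.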

2. Source code

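'''
Author: 何邦渊
DateTime: 2022/3/20 21:24
IDE: PyCharm
Function: Based on Part 1 of the course (natural language processing), pick a novel you like
and analyze it with the techniques taught in class, without being limited to them. Examples:
word segmentation, word frequency, part-of-speech tags, a ranking of characters by number of
appearances, a ranking of the foods in the novel (required, I like to eat), character relationships, etc.
Requirements:
1. Upload the code as a .py attachment, with functional comments and ordinary comments.
2. The feature description and screenshots of the results may go in the assignment write-up.
3. Store the novel as a txt file.
4. Grading depends on how complete the functionality is.
'''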

import random
import networkx as nx
from imageio import imread
from wordcloud import WordCloud, ImageColorGenerator
import jieba
import jieba.posseg as pseg  # segmentation with part-of-speech tags
from collections import Counter
import matplotlib.pyplot as plt
import numpy as np
from matplotlib.font_manager import FontProperties

# Words that jieba tags as 'nr' (person name) but that are not character names

excludes = ['乐章','小姑娘','荣耀','易拉灌','易容术','明白','全明星','蓝溪阁','季后赛','本赛季','砰砰','和兴欣','上赛季','华丽','司仪',

'西风','连胜','银武','周旋','马踏','安静','大屏幕','和嘉世','修正','了兴欣','卫星','谢谢','呼啸山庄','马甲','明星','英勇',

'真是太','冷不丁','小精灵','高潮','太久','布阵','祝福','段时间','格斗','高水平','言语','别提','冷笑','晓枪','白痴','赛中',

'顾忌','越来越近','封锁','小镇','贡献度','高阶','嘉世']

# Let matplotlib display Chinese text instead of garbled characters
plt.rcParams['font.sans-serif'] = ['SimHei']
# Keep the minus sign readable when a Chinese font is active
plt.rcParams['axes.unicode_minus'] = False
font = FontProperties(fname=r"C:\Python\src\python与数据分析\simfang.ttf", size=14)

# Read a text file and return its lines as a list (surrounding whitespace stripped)
def open_text(path):
    with open(path, 'r', encoding='utf-8') as f:
        return [line.strip() for line in f]

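# Segment the novel, count (word, POS flag) frequencies, and write out a copy of the
# text with stop words, punctuation, and other filtered tokens removed
def seg_depart(path, total):
    # Words that survive the filter; joined at the end to form the cleaned text
    kept_words = []
    # Load the stop words into a set for fast membership tests
    stopwords = set(open_text('./stopword.txt'))
    # Segment every line of the novel
    with open(path, 'r', encoding='utf-8') as text:
        for line in text:
            for word, flag in pseg.cut(line.strip()):
                # Keep words of at least two characters that are neither stop words nor pure digits
                if word not in stopwords and len(word) >= 2 and not word.isdigit():
                    total[(word, flag)] = total.get((word, flag), 0) + 1
                    kept_words.append(word)
    # One line per entry: word,flag,count
    with open('./全职高手分词词频词性.txt', 'w', encoding='utf-8') as text1:
        for (word, flag), count in total.items():
            text1.write('%s,%s,%d\n' % (word, flag, count))
    # Cleaned text, used later for the word cloud
    with open('./纯净版全职高手.txt', 'w', encoding='utf-8') as text2:
        text2.write(''.join(kept_words))
    return total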

# Rank characters by number of appearances
def character_sequence(total):
    # Map each alternate name (in-game account name) to its character so the counts are merged
    aliases = {
        '君莫笑': '叶修', '沐雨橙风': '苏沐橙', '海无量': '方锐', '寒烟柔': '唐柔',
        '一寸灰': '乔一帆', '包子入侵': '包荣兴', '昧光': '罗辑', '毁人不倦': '莫凡',
        '小手冰凉': '安文逸', '逐烟霞': '陈果', '迎风布阵': '魏琛', '一叶知秋': '孙翔',
        '大漠孤烟': '韩文清', '索克萨尔': '喻文州', '夜雨声烦': '黄少天', '王不留行': '王杰希',
    }
    sequence = {}
    for (word, flag), count in total.items():
        # 'nr' is the part-of-speech tag for person names
        if flag == 'nr':
            name = aliases.get(word, word)
            # dict.get returns the current count for name, or 0 if the key is absent
            sequence[name] = sequence.get(name, 0) + count
    # Drop frequent words that were tagged 'nr' but are not person names
    for word in excludes:
        sequence.pop(word, None)
    # Sort by count, descending
    sequence_new = sorted(sequence.items(), key=lambda x: x[1], reverse=True)
    with open('./全职高手人物出场次数排序.txt', 'w', encoding='utf-8') as f:
        for name, num in sequence_new:
            f.write('%s,%d\n' % (name, num))

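# Rank the foods mentioned in the novel
def food_sequence(total):
    sequence = {}
    food = set(open_text('./全职高手食物.txt'))
    for (word, flag), count in total.items():
        if word in food:
            # Sum over POS flags, since the same word can be counted under several tags
            sequence[word] = sequence.get(word, 0) + count
    # Write the foods sorted by count, descending
    with open('./全职高手食物排序.txt', 'w', encoding='utf-8') as f:
        for word, count in sorted(sequence.items(), key=lambda x: x[1], reverse=True):
            f.write('%s,%d\n' % (word, count))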

# Number of colors to generate: one per character in the character list
colorNum = len(open_text('./全职高手人物.txt'))

# Build a random hex color string such as "#3FA2C7"
def randomcolor():
    colorArr = ['1', '2', '3', '4', '5', '6', '7', '8', '9', 'A', 'B', 'C', 'D', 'E', 'F']
    return "#" + "".join(random.choice(colorArr) for _ in range(6))

# Build the list of random colors
def color_list():
    return [randomcolor() for _ in range(colorNum)]

# Draw the character relationship graph
def creat_relationship(path):
    # Character names, one per line; skip empty lines, which would match every paragraph
    Names = [name for name in open_text('./全职高手人物.txt') if name]
    relations = {}
    # Split by paragraph; characters that appear in the same paragraph are assumed to co-occur
    lst_para = open_text(path)  # one entry per paragraph
    for text in lst_para:
        for name_0 in Names:
            if name_0 in text:
                for name_1 in Names:
                    # Count each unordered pair once, under the key that was created first
                    if name_1 in text and name_0 != name_1 and (name_1, name_0) not in relations:
                        relations[(name_0, name_1)] = relations.get((name_0, name_1), 0) + 1
    # Normalize the counts by the largest co-occurrence count
    maxRela = max(relations.values())
    relations = {k: v / maxRela for k, v in relations.items()}
    plt.figure(figsize=(15, 15))
    # Undirected graph without parallel edges
    G = nx.Graph()
    for k, v in relations.items():
        G.add_edge(k[0], k[1], weight=v)
    # One random color per node actually in the graph; characters that never
    # co-occur with anyone are not nodes, so the name list may be longer
    colors = [randomcolor() for _ in G.nodes]
    # Edges with weight above 0.6
    elarge = [(u, v) for (u, v, d) in G.edges(data=True) if d['weight'] > 0.6]
    # Edges with weight above 0.3 and at most 0.6
    emidle = [(u, v) for (u, v, d) in G.edges(data=True) if 0.3 < d['weight'] <= 0.6]
    # Edges with weight of at most 0.3
    esmall = [(u, v) for (u, v, d) in G.edges(data=True) if d['weight'] <= 0.3]
    # Lay out the nodes with the Fruchterman-Reingold algorithm (roughly radial, several centers)
    pos = nx.spring_layout(G)
    # Node style
    nx.draw_networkx_nodes(G, pos, alpha=0.8, node_size=1300, node_color=colors)
    # Strong edges (> 0.6)
    nx.draw_networkx_edges(G, pos, edgelist=elarge, width=2.5, alpha=0.9, edge_color='g')
    # Medium edges (0.3 to 0.6)
    nx.draw_networkx_edges(G, pos, edgelist=emidle, width=1.5, alpha=0.6, edge_color='y')
    # Weak edges (<= 0.3)
    nx.draw_networkx_edges(G, pos, edgelist=esmall, width=1, alpha=0.4, edge_color='b', style='dashed')
    nx.draw_networkx_labels(G, pos, font_size=14)
    plt.title("《全职高手》主要人物社交关系网络图")
    # Hide the axes
    plt.axis('off')
    # Save the figure
    plt.savefig('./全职高手人物关系图.png', bbox_inches='tight')
    plt.show()

# Generate the word cloud
def GetWordCloud():
    path_txt = './纯净版全职高手.txt'
    path_img = './动漫.jpg'
    with open(path_txt, 'r', encoding='utf-8') as f:
        text = f.read()
    background_image = imread(path_img)
    # Alternative: background_image = np.array(Image.open(path_img))  (needs `from PIL import Image`)
    cut_text = " ".join(jieba.cut(text))
    # Word cloud parameters
    wordcloud = WordCloud(
        background_color="white",  # background color
        mask=background_image,  # mask image that gives the cloud its shape
        max_words=400,  # maximum number of words drawn
        width=600,  # width and height are ignored when a mask is supplied
        height=800,
        # A Chinese font is required: the default font (DroidSansMono) has no Chinese
        # glyphs, so without it the words render as empty boxes
        font_path="./simfang.ttf",
        max_font_size=50,  # largest font size
        min_font_size=10,  # smallest font size
        random_state=30,  # random seed, so the layout and colors are reproducible
        margin=2,
    )
    # Build the word cloud
    wc = wordcloud.generate(cut_text)
    # Recolor the words with colors sampled from the mask image
    image_colors = ImageColorGenerator(background_image)
    plt.imshow(wc.recolor(color_func=image_colors), interpolation="bilinear")
    # Hide the axes
    plt.axis("off")
    plt.show()
    # Save the image
    wc.to_file('./wordcloud.jpg')

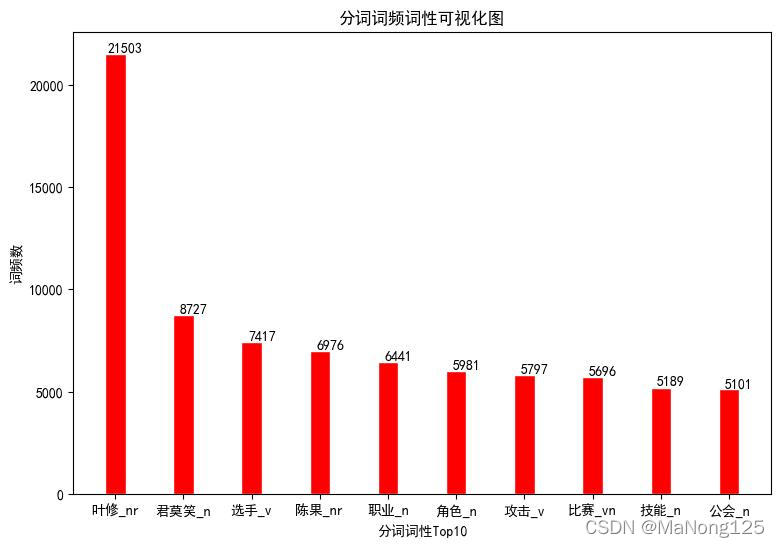

# Bar chart of the ten most frequent (word, POS flag) pairs
def create_wordPhotograph(total):
    # figsize sets the figure width and height in inches (matplotlib's default is 100 dpi)
    plt.figure(figsize=(9, 6))
    Y = []
    sign = []
    # Bar heights and x-axis labels
    for (word, flag), num in Counter(total).most_common(10):
        Y.append(num)
        sign.append(word + "_" + flag)
    X = np.arange(len(Y))
    plt.bar(X, Y, width=0.3, facecolor='red', edgecolor='white')
    plt.xticks(X, sign)
    # Print the count above each bar
    for x, y in zip(X, Y):
        plt.text(x, y + 0.1, '%d' % y, ha='center', va='bottom')
    # x-axis label
    plt.xlabel(u"分词词性Top10")
    # y-axis label
    plt.ylabel(u"词频数")
    # Title
    plt.title(u"分词词频词性可视化图")
    # Save the figure
    plt.savefig('./分词词频词性可视化图.jpg', bbox_inches='tight')
    plt.show()

# Bar chart of the ten characters with the most appearances
def create_CharacterPhotograph():
    # figsize sets the figure width and height in inches (matplotlib's default is 100 dpi)
    plt.figure(figsize=(9, 6))
    Y = []
    sign = []
    text = open_text('./全职高手人物出场次数排序.txt')
    # The file is already sorted, so the first ten lines are the top ten
    for t in text[:10]:
        name, num = t.rsplit(',', 1)
        Y.append(int(num))
        sign.append(name)
    X = np.arange(len(Y))
    plt.bar(X, Y, width=0.3, facecolor='red', edgecolor='white')
    plt.xticks(X, sign)
    # Print the count above each bar
    for x, y in zip(X, Y):
        plt.text(x, y + 0.1, '%d' % y, ha='center', va='bottom')
    # x-axis label
    plt.xlabel(u"出场人物Top10")
    # y-axis label
    plt.ylabel(u"出场次数")
    # Title
    plt.title(u"人物出场次序排序可视化图")
    # Save the figure
    plt.savefig('./人物出场次序排序可视化图.jpg', bbox_inches='tight')
    plt.show()

# Bar chart of the food ranking

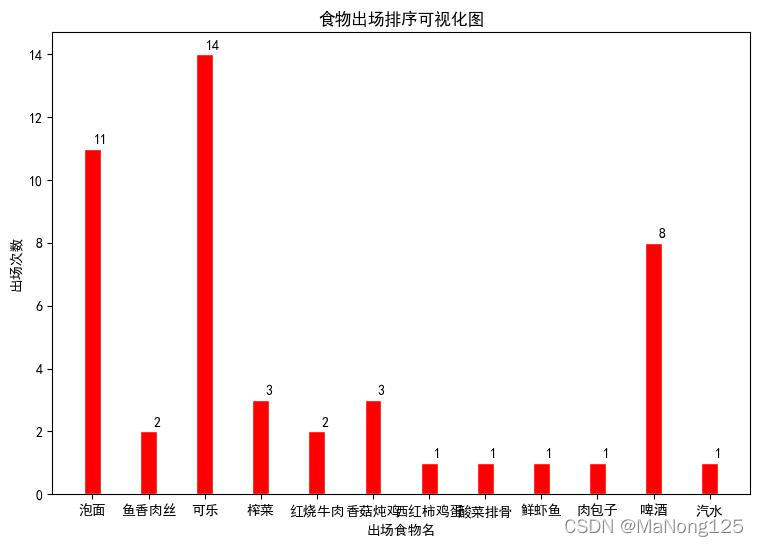

def create_foodPhotograph():
    # figsize sets the figure width and height in inches (matplotlib's default is 100 dpi)
    plt.figure(figsize=(9, 6))
    Y = []
    sign = []
    text = open_text('./全职高手食物排序.txt')
    # Every food in the file gets a bar
    for t in text:
        name, num = t.rsplit(',', 1)
        Y.append(int(num))
        sign.append(name)
    X = np.arange(len(Y))
    plt.bar(X, Y, width=0.3, facecolor='red', edgecolor='white')
    plt.xticks(X, sign)
    # Print the count above each bar
    for x, y in zip(X, Y):
        plt.text(x, y + 0.1, '%d' % y, ha='center', va='bottom')
    # x-axis label
    plt.xlabel(u"出场食物名")
    # y-axis label
    plt.ylabel(u"出场次数")
    # Title
    plt.title(u"食物出场排序可视化图")
    # Save the figure
    plt.savefig('./食物出场排序可视化图.jpg', bbox_inches='tight')
    plt.show()

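def main():
    total = {}
    # Load the user dictionary so jieba recognizes the novel's names and terms
    jieba.load_userdict("./全职高手用户字典.txt")
    # Segment the novel and store the counts in the dictionary
    total = seg_depart("./全职高手.txt", total)
    # Rank characters by appearances and write the ranking to a txt file
    character_sequence(total)
    # Rank foods by appearances and write the ranking to a txt file
    food_sequence(total)
    # Draw the relationship graph of the main characters
    creat_relationship("./全职高手.txt")
    # Generate the word cloud
    GetWordCloud()
    # Bar chart of word frequency and part of speech
    create_wordPhotograph(total)
    # Bar chart of character appearances
    create_CharacterPhotograph()
    # Bar chart of food appearances
    create_foodPhotograph()

if __name__ == '__main__':
    main()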

3. Results

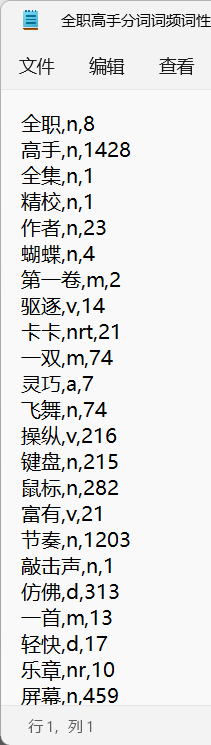

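3.1 全职高手分词词频词性.txt (segmentation, word frequency, and part of speech)

3.2 全职高手人物出场次数排序.txt (characters ranked by appearances)

3.3 全职高手食物排序.txt (food ranking)

3.4 全职高手人物关系图.png (character relationship graph)

3.5 Word cloud

3.6 分词词频词性可视化图.jpg (bar chart of word frequency and part of speech)

3.7 人物出场次序排序可视化图.jpg (bar chart of character appearances)

3.8 食物出场排序可视化图.jpg (bar chart of food appearances)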
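The relationship graph in 3.4 rests on a simple idea: two characters are linked whenever their names appear in the same paragraph, and each link is weighted by how often that happens relative to the most frequent pair. The sketch below shows that idea on a toy input, without drawing anything. The helper names `cooccurrence` and `normalize`, the sample paragraphs, and the placeholder names are all made up for this illustration; it restates the approach with `itertools.combinations` rather than reproducing the script's nested loops.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(paragraphs, names):
    """Count, for each unordered pair of names, the paragraphs that mention both."""
    pairs = Counter()
    for text in paragraphs:
        # Names present in this paragraph, sorted so each pair has one canonical key
        present = sorted(name for name in names if name in text)
        for a, b in combinations(present, 2):
            pairs[(a, b)] += 1
    return pairs


def normalize(pairs):
    """Scale the counts so that the most frequent pair has weight 1.0."""
    top = max(pairs.values(), default=0)
    if top == 0:
        return {}
    return {pair: n / top for pair, n in pairs.items()}


# Made-up sample data, not taken from the novel
paragraphs = ["Ann met Bob and Cy.", "Bob talked to Ann.", "Cy left."]
names = ["Ann", "Bob", "Cy"]

weights = normalize(cooccurrence(paragraphs, names))
for pair, weight in sorted(weights.items()):
    print(pair, weight)
```

The normalized weights can then be split into the same three bands the script uses (above 0.6, between 0.3 and 0.6, at most 0.3) to decide how each edge is drawn.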