1. Task requirements

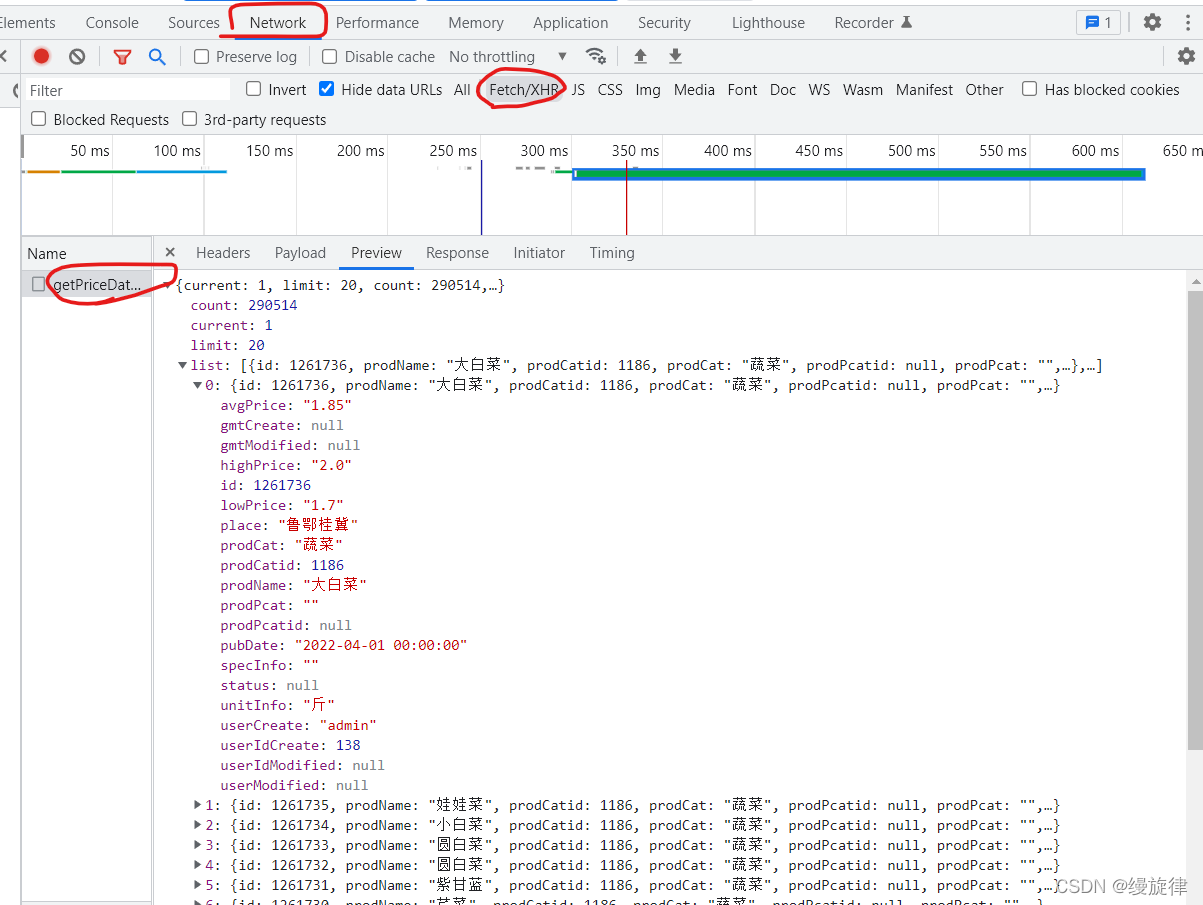

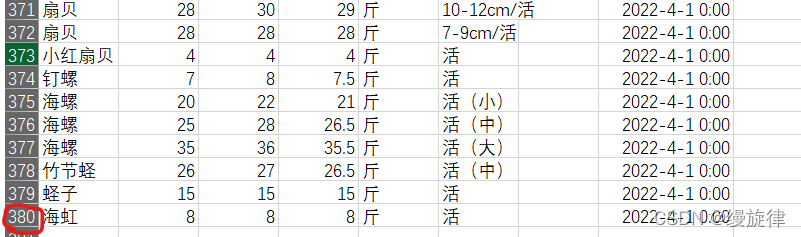

Fetch vegetable price information from multiple pages of the Beijing Xinfadi market website (the figure below shows a single page):

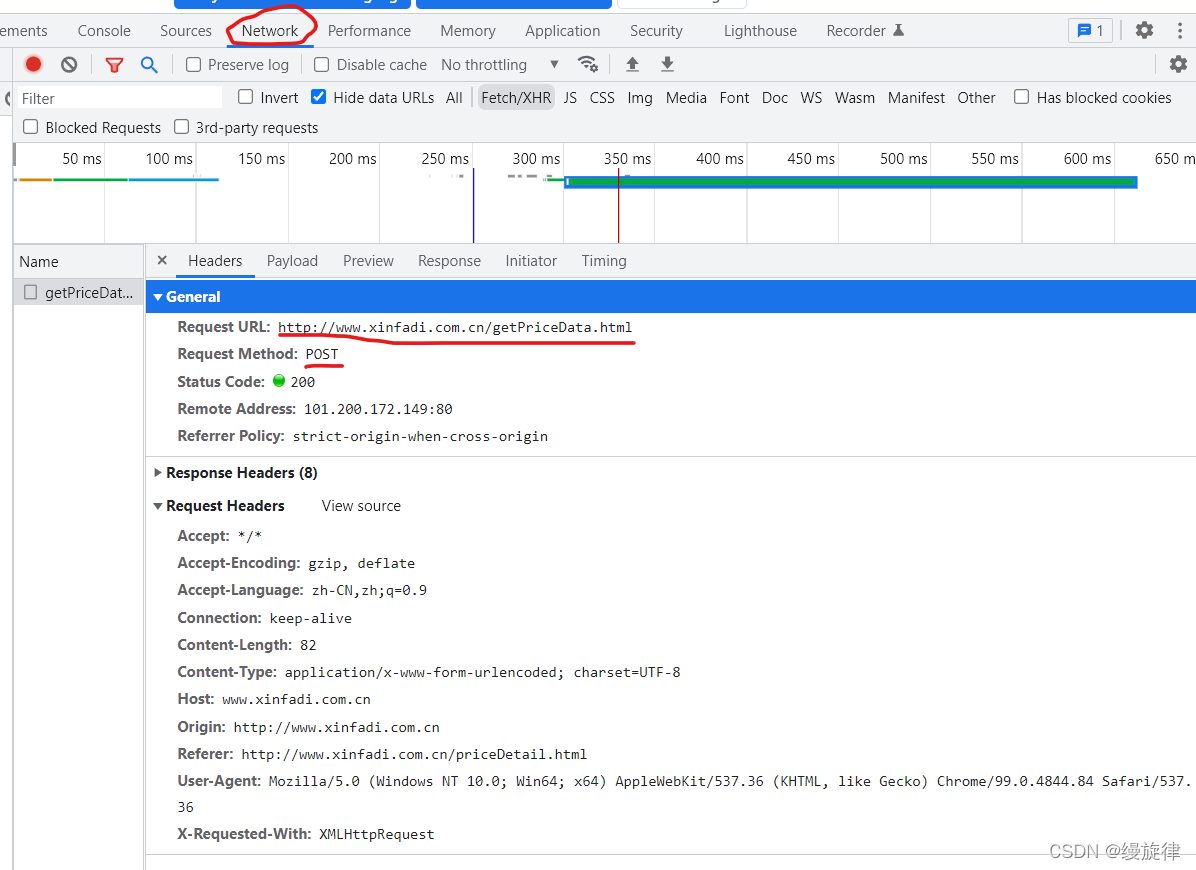

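Viewing the page source shows that the data is not contained in it, so we use a packet-capture tool to find the request that actually delivers it:

The corresponding URL is found here: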

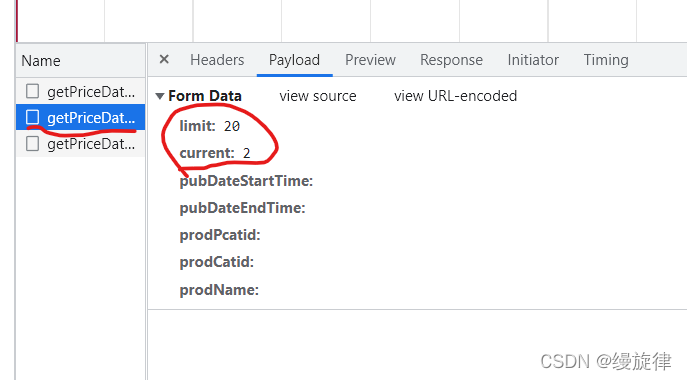

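Comparing requests shows that every page uses the same URL; the only thing that changes is the current field in the form data. Changing current is therefore all it takes to fetch the vegetable information for multiple pages.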
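
The paging behaviour described above can be sketched as follows. This is only an illustration: it builds the POST requests without sending them, the helper name build_page_request is hypothetical, and the limit value of 20 is taken from the form data used in the code of section 2.1.

```python
from urllib.parse import urlencode
from urllib.request import Request

URL = 'http://www.xinfadi.com.cn/getPriceData.html'


def build_page_request(current, limit=20):
    """Build (but do not send) the POST request for one page.

    Hypothetical helper: only the 'current' form field differs between pages.
    """
    form = {'limit': limit, 'current': current}
    body = urlencode(form).encode('ascii')
    return Request(URL, data=body, method='POST')


# Same URL for every page; only the form body changes.
requests_for_pages = [build_page_request(page) for page in range(1, 4)]
for req in requests_for_pages:
    print(req.get_method(), req.full_url, req.data.decode('ascii'))
```

The sketch uses the standard library so that it runs offline; the code in section 2.1 sends the same form data with requests.post.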

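If we treat extracting the data from each page as one task, we can use multiple threads (think of them as multiple workers) to process these tasks. Compared with the single-threaded approach, where each task must finish before the next one starts, multithreading is often more efficient for work like this, because a thread that is waiting for a network response does not hold up the others.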
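
To make the "multiple workers" idea concrete, here is a minimal sketch that replaces the real download with a short sleep. The function names and the 0.05 s delay are assumptions chosen for illustration, not measurements from the site.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_page_task(page):
    """Stand-in for one page download: sleeps instead of waiting on the network."""
    time.sleep(0.05)
    return page


def run_sequential(pages):
    """Process the pages one after another in the calling thread."""
    start = time.perf_counter()
    results = [fake_page_task(p) for p in pages]
    return results, time.perf_counter() - start


def run_threaded(pages, workers=4):
    """Process the pages with a pool of worker threads."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(fake_page_task, pages))
    return results, time.perf_counter() - start


pages = list(range(1, 9))
seq_results, seq_time = run_sequential(pages)
thr_results, thr_time = run_threaded(pages)
print('sequential: {:.2f}s, threaded: {:.2f}s'.format(seq_time, thr_time))
```

Because the tasks spend their time waiting rather than computing, the threaded run should finish in a fraction of the sequential time.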

2. Code implementation

2.1 Multithreading

    import csv
    import json
    import threading
    import time
    from concurrent.futures import ThreadPoolExecutor

    import requests

    # utf-8 so that Chinese product names are written the same way on every platform
    f = open('veg_info.csv', mode='w', newline='', encoding='utf-8')
    csvwriter = csv.writer(f)
    write_lock = threading.Lock()  # the writer is shared by all worker threads
    url = 'http://www.xinfadi.com.cn/getPriceData.html'

    # Fields copied from each record, in CSV column order
    FIELDS = ('prodName', 'lowPrice', 'highPrice', 'avgPrice',
              'unitInfo', 'specInfo', 'place', 'pubDate')


    def single_page(current):
        """Fetch one page of price data and append its rows to the CSV file."""
        data = {
            'limit': 20,
            'current': current
        }
        res = requests.post(url, data=data)
        dic = json.loads(res.text)
        veg_info = dic['list']
        rows = [[item[key] for key in FIELDS] for item in veg_info]
        with write_lock:  # only one thread writes at a time
            csvwriter.writerows(rows)
        print('page {} done!'.format(current))


    if __name__ == '__main__':
        start_time = time.time()
        with ThreadPoolExecutor(5) as t_pool:  # 5 means a pool of 5 threads
            for i in range(1, 20):  # fetch pages 1 to 19
                t_pool.submit(single_page, current=i)
        # leaving the with block waits for every submitted task to finish
        end_time = time.time()
        f.close()
        print('download over! took {}s'.format(end_time - start_time))  # took 1.6847822666168213s

Output:

    page 2 done!
    page 3 done!
    page 4 done!
    page 1 done!
    page 5 done!
    page 7 done!
    page 8 done!
    page 9 done!
    page 6 done!
    page 10 done!
    page 12 done!
    page 11 done!
    page 13 done!
    page 14 done!
    page 15 done!
    page 17 done!
    page 16 done!
    page 18 done!
    page 19 done!
    download over! took 1.6847822666168213s

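As the output shows, the threads do not process the pages in order from the first to the last. With 19 pages of 20 records each, 380 records were fetched in a total of 1.68 seconds.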
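
Since pages finish in an arbitrary order, the rows in the CSV file may not be sorted by page either. If page order matters, one possible variation (not the approach used in this post) is to have each task return its rows and let the main thread write them. The sketch below assumes a hypothetical fake_single_page that simulates the download, and an in-memory buffer in place of veg_info.csv.

```python
import csv
import io
import random
import time
from concurrent.futures import ThreadPoolExecutor


def fake_single_page(current):
    """Hypothetical stand-in for single_page: returns rows instead of writing them."""
    time.sleep(random.uniform(0.0, 0.02))  # pages finish in arbitrary order
    return [(current, 'item{}'.format(n)) for n in range(3)]


buffer = io.StringIO()  # in-memory stand-in for veg_info.csv
writer = csv.writer(buffer)

with ThreadPoolExecutor(5) as pool:
    # map() yields results in the order of its inputs, not in completion order
    for rows in pool.map(fake_single_page, range(1, 20)):
        writer.writerows(rows)  # only the main thread touches the writer

pages_written = [int(line.split(',')[0]) for line in buffer.getvalue().splitlines()]
print(pages_written == sorted(pages_written))
```

The downloads still overlap, but all writing happens in one thread, so no lock is needed and the rows come out in page order.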

2.2 Single thread (for comparison)

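    # Replaces the __main__ block of section 2.1; single_page and f are defined there.
    start_time = time.time()
    for i in range(1, 20):  # fetch pages 1 to 19, one after another
        single_page(i)
    end_time = time.time()
    f.close()
    print('download over! took {}s'.format(end_time - start_time))  # took 7.073571681976318s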

The total time is 7.07 seconds, roughly 4.2 times as long as the multithreaded run.