PyTorch Basic Syntax and Usage

- Version notes

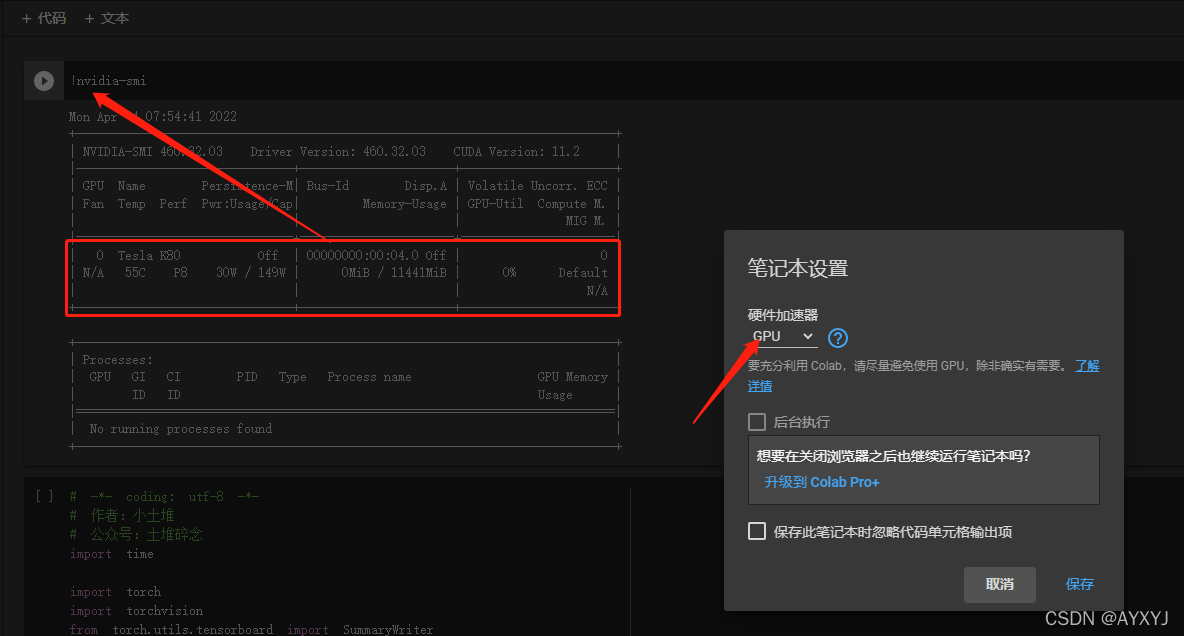

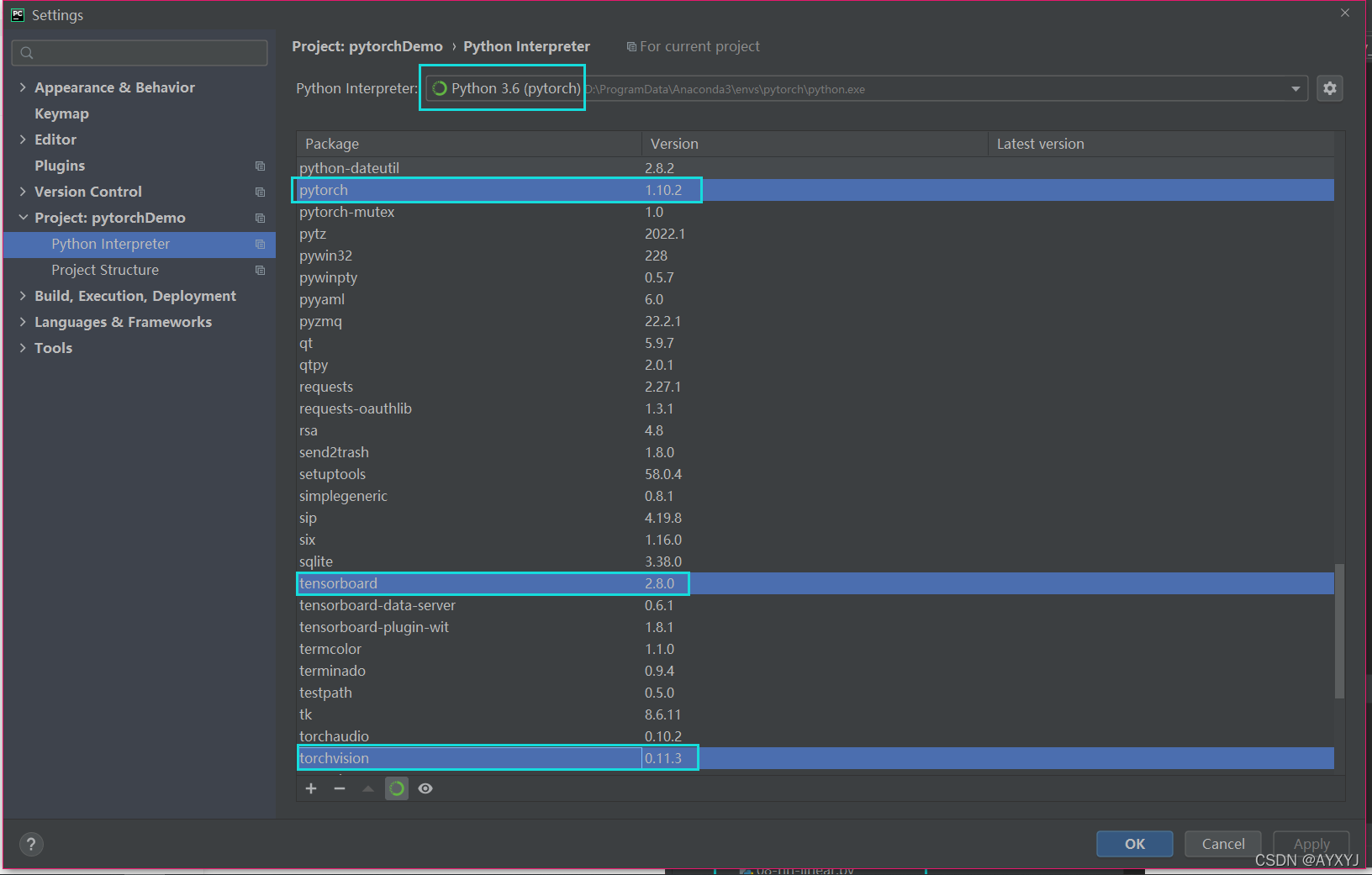

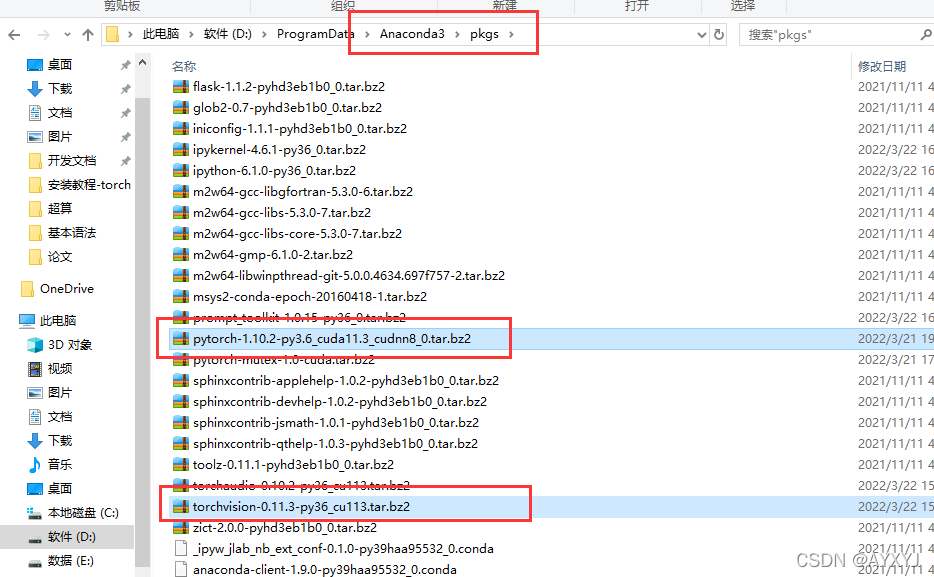

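python==3.6
pytorch==1.10.2      # must match the torchvision version; downloading the package manually and then installing it is recommended
torchvision==0.11.3  # must match the torch version; downloading the package manually and then installing it is recommended
tensorboard==2.8.0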

- Download the resource packages and put them in the ./anaconda/pkgs directory. Python packages saved in wheel format can be installed directly with pip install <file name>.

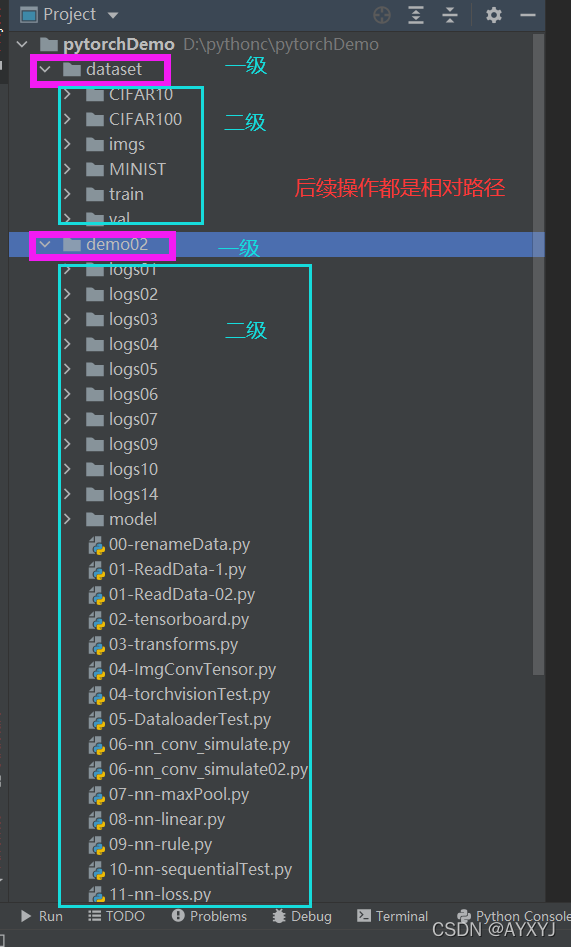

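- File directory layout; all of the following code is based on it. Cloud drive link, password: zzus

- It is recommended to learn the basics of neural networks before writing PyTorch or TensorFlow code.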

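- NN: neural networks
  - Fully connected layers
  - Neurons
  - Learning rate
  - Activation functions
  - Optimizers / optimization algorithms
  - Forward propagation and backpropagation
- CNN: convolutional neural networks
  - Convolution
  - Pooling
  - Linear layers
  - Activation layers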

00-renameData.py

- Dataset preprocessing: read each image's label and store it in a .txt file for later use

import os

root_dir = "../dataset/train"
ants_label_dir = "ants"
bees_label_dir = "bees"

# Read the file names and generate a matching label .txt for each image
img_path = os.listdir(os.path.join(root_dir, bees_label_dir))
out_dir = "bees_label"
label_path = os.path.join(root_dir, out_dir)
if not os.path.lexists(label_path):
    os.makedirs(label_path)

for i in img_path:
    # "0013035.jpg" -> "0013035"
    file_name = i.split(".jpg")[0]
    with open(os.path.join(label_path, "{}.txt".format(file_name)), 'w') as f:
        f.write(bees_label_dir)
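A self-contained sketch of the same idea that runs without the real dataset: it creates a few empty placeholder files (hypothetical names) in a temporary directory and writes one label file per .jpg. The helper name write_label_files is made up for illustration; it uses os.path.splitext instead of split(".jpg") so that files that are not .jpg images are skipped instead of getting a label file.

```python
import os
import tempfile

def write_label_files(image_dir, label_dir, label):
    """Write <stem>.txt containing `label` for every .jpg in image_dir; return the stems."""
    os.makedirs(label_dir, exist_ok=True)
    stems = []
    for name in sorted(os.listdir(image_dir)):
        stem, ext = os.path.splitext(name)
        if ext.lower() != ".jpg":
            continue  # skip non-image files
        with open(os.path.join(label_dir, stem + ".txt"), "w") as f:
            f.write(label)
        stems.append(stem)
    return stems

with tempfile.TemporaryDirectory() as root:
    image_dir = os.path.join(root, "bees")
    os.makedirs(image_dir)
    for name in ["a.jpg", "b.jpg", "notes.md"]:  # hypothetical file names
        open(os.path.join(image_dir, name), "w").close()
    stems = write_label_files(image_dir, os.path.join(root, "bees_label"), "bees")
    with open(os.path.join(root, "bees_label", "a.txt")) as f:
        first_label = f.read()
    print(stems, first_label)  # ['a', 'b'] bees
```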

01-ReadData-01.py

- Dataset preprocessing: a custom Dataset that loads the images and their labels (the DataLoader() that consumes such a Dataset is covered later)

# Imports
from torch.utils.data import Dataset
from PIL import Image
import os

"""
Read the names of all files in a folder
"""

class MyData(Dataset):
    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        self.path = os.path.join(self.root_dir, self.label_dir)
        if not os.path.lexists(self.path):
            os.makedirs(self.path)
        self.img_path = os.listdir(self.path)

    def __getitem__(self, idx):
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        label = self.label_dir  # the folder name is the label
        return img, label

    def __len__(self):
        return len(self.img_path)

root_dir = "../dataset/train"
ants_label_dir = "ants"
bees_label_dir = "bees"
ants_dataset = MyData(root_dir, ants_label_dir)
bees_dataset = MyData(root_dir, bees_label_dir)

ant_img, ant_label = ants_dataset[0]
bee_img, bee_label = bees_dataset[0]
print(ant_label)
ant_img.show()
print(len(ants_dataset))

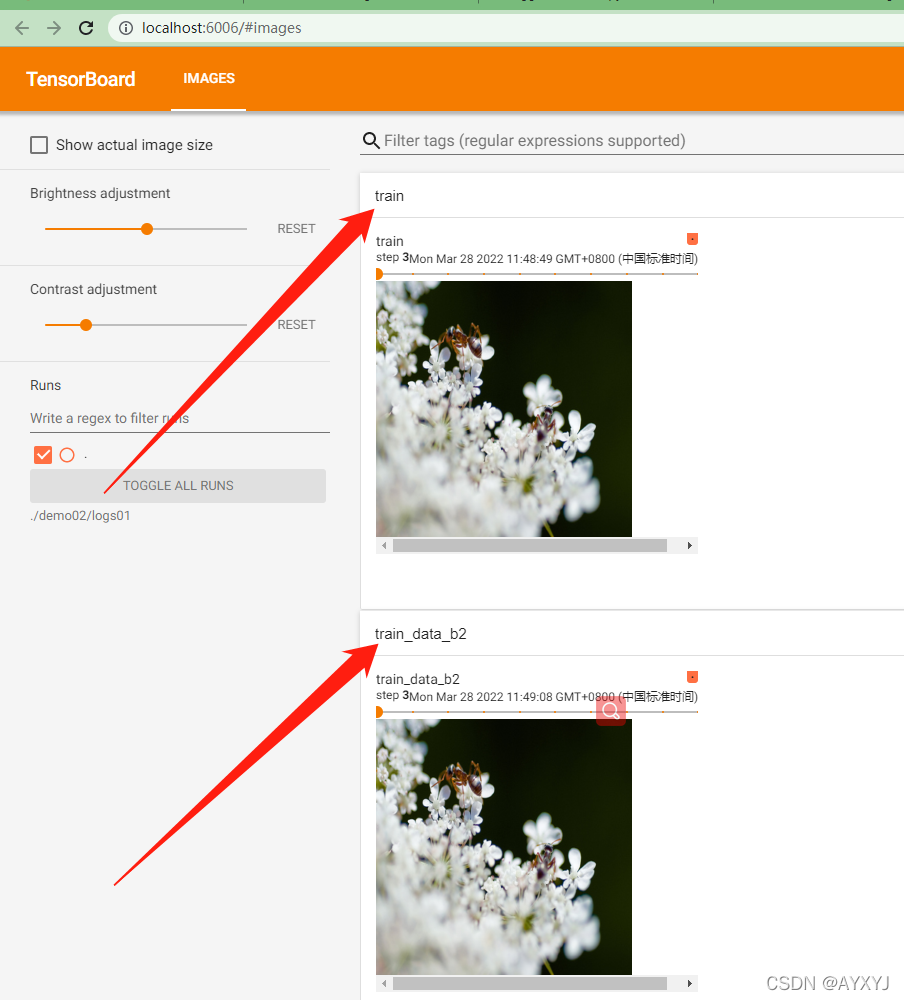

01-ReadData-02.py

- After loading the data, display it with TensorBoard (skip this part for now; it will make sense once you have learned TensorBoard)

import os
from PIL import Image
from torch.utils.data import DataLoader, Dataset
from torch.utils.tensorboard import SummaryWriter
from torchvision.transforms import transforms
from torchvision.utils import make_grid

"""
Read the data with torch and visualize it with TensorBoard
"""

class MyData(Dataset):
    # Initialize: list of all file names in the folder, the label, and the root path
    # root_dir: root directory; label_dir: the label, which is also the folder name
    def __init__(self, root_dir, label_dir, transform):
        self.root_dir = root_dir
        self.label_dir = label_dir
        self.transform = transform  # PIL -> Tensor
        self.path = os.path.join(self.root_dir, self.label_dir)
        if not os.path.lexists(self.path):
            os.makedirs(self.path)
        self.imgs_list = os.listdir(self.path)

    # Return the image and its label
    def __getitem__(self, item):
        img_name = self.imgs_list[item]
        img_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_path)
        img = self.transform(img)
        label = self.label_dir
        sample = {'image': img, 'target': label}  # tensor image and its label
        return sample

    def __len__(self):
        return len(self.imgs_list)

if __name__ == '__main__':
    root_dir = "../dataset/train/"
    ants_label_dir = "ants"
    bees_label_dir = "bees"
    # Format conversion: resize, then PIL -> Tensor
    transform = transforms.Compose([transforms.Resize((256, 256)), transforms.ToTensor()])
    ants_dataset = MyData(root_dir, ants_label_dir, transform=transform)
    ant_sample = ants_dataset[0]  # a dict: {'image': ..., 'target': ...}
    bees_dataset = MyData(root_dir, bees_label_dir, transform=transform)
    bee_sample = bees_dataset[0]

    # Training set: "+" concatenates the two datasets (ants first, then bees)
    train_dataset = ants_dataset + bees_dataset
    dataloader = DataLoader(train_dataset, batch_size=1, num_workers=2)  # data loader

    # Check some samples
    print(train_dataset[243]['target'])
    print(train_dataset[0]['image'].shape)

    writer = SummaryWriter("logs01")  # TensorBoard
    step = 0
    for data in train_dataset:
        writer.add_image("train", data['image'], global_step=step)
        print(step)
        step += 1

    for i, j in enumerate(dataloader):
        # The default collate function batches a dict key by key
        imgs, labels = j['image'], j['target']
        print(type(j))
        print(i, imgs.shape)
        writer.add_image("train_data_b2", make_grid(imgs), i)
    writer.close()

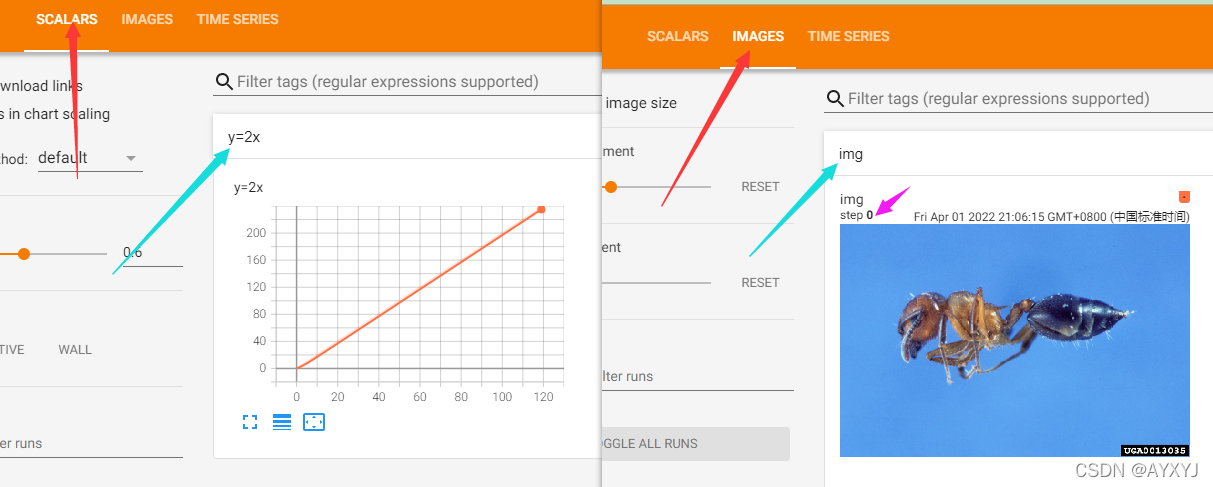
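ants_dataset + bees_dataset returns a torch.utils.data.ConcatDataset, which turns a global index into (which dataset, local index) by searching the cumulative dataset sizes. The pure-Python sketch below shows that lookup. The function name locate is hypothetical, and the sizes 124 and 121 are placeholders; use len() on your own datasets.

```python
import bisect
from itertools import accumulate

def locate(index, sizes):
    """Map a global index into concatenated datasets to (dataset_index, local_index)."""
    cumulative = list(accumulate(sizes))  # e.g. [124, 245]
    if not 0 <= index < cumulative[-1]:
        raise IndexError(index)
    dataset_idx = bisect.bisect_right(cumulative, index)
    start = cumulative[dataset_idx - 1] if dataset_idx > 0 else 0
    return dataset_idx, index - start

sizes = [124, 121]  # hypothetical sizes: ants first, then bees
print(locate(0, sizes))    # (0, 0)   -> ants_dataset[0]
print(locate(123, sizes))  # (0, 123) -> the last ant image
print(locate(243, sizes))  # (1, 119) -> bees_dataset[119]
```

This is also why train_dataset[243] prints a bees label when the ants folder has fewer than 244 images.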

02-tensorboard.py

- Basic TensorBoard API usage; once you know the basics, go back to the visualization in 01-ReadData-02.py

from PIL import Image
from torch.utils.tensorboard import SummaryWriter
import numpy as np

"""
Common TensorBoard visualization APIs
- add_scalar: add a scalar series
- add_image: add one image; if the argument is a numpy array, dataformats must be specified; a step can also be passed
- add_images: add a batch of images
- add_graph: add a network model; the arguments are the model and an input batch
"""

writer = SummaryWriter("logs02")
image_path = "../dataset/train/ants/0013035.jpg"
img = Image.open(image_path)
# img.show()
img_array = np.array(img)
print(img_array.shape)

# Write one image
# img_array is a numpy array in HWC layout, so pass dataformats="HWC" (H: height, W: width, C: channel)
# A Tensor in the default CHW layout needs no dataformats
writer.add_image("img", img_array, dataformats="HWC")

# y = 2x
for i in range(120):
    # Write a scalar series: tag, value, step
    writer.add_scalar("y=2x", 2 * i, i)

# Close the writer
writer.close()

# View in TensorBoard:
# tensorboard --logdir=demo02/logs02

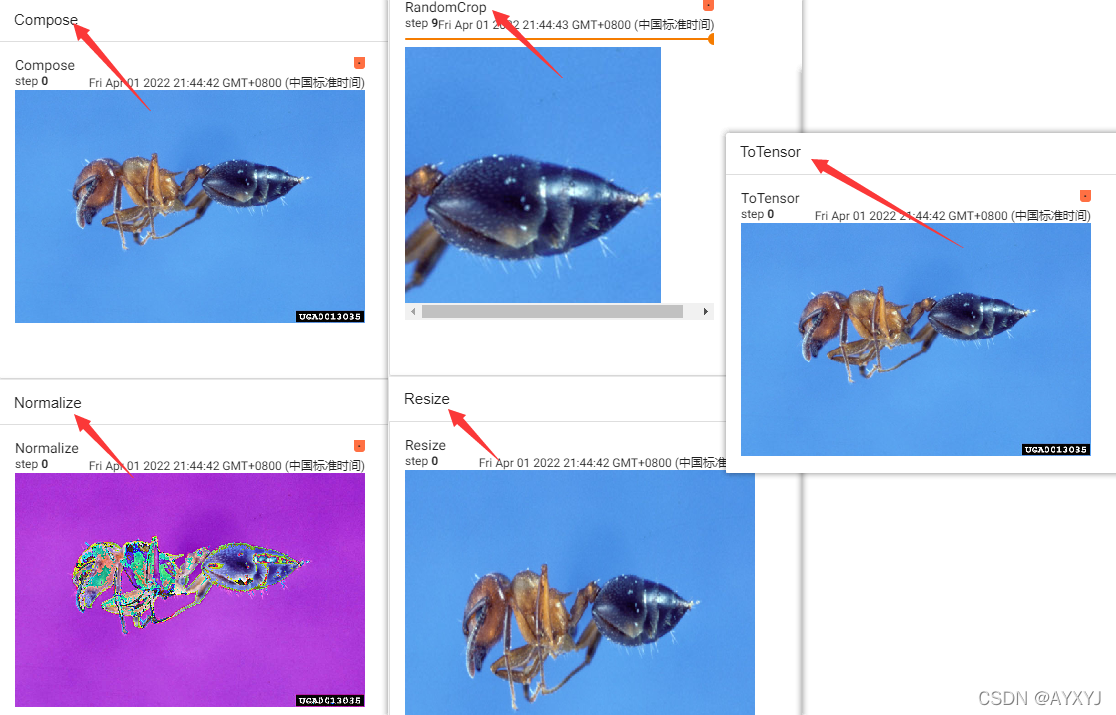

03-transforms.py

- Used for data processing. "transforms" means conversions, typically between tensor and PIL.Image, shape changes, and so on

from PIL import Image
import numpy as np
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

"""
transforms: conversion tools used for data preprocessing
"""

img = Image.open("../dataset/train/ants/0013035.jpg")
writer = SummaryWriter("logs03")

# numpy: convert the image to an array (HWC)
img_array = np.array(img)
print(img_array.shape)

# ToTensor: convert the image to a Tensor (CHW, values scaled to [0, 1])
print(type(img))
transforms_toTensor = transforms.ToTensor()
img_tensor = transforms_toTensor(img)
print(type(img_tensor))
print(img_tensor)
writer.add_image("ToTensor", img_tensor)

# Normalize: given a per-channel mean (R, G, B) and standard deviation (R, G, B),
# normalizes the Tensor: normalized_image = (image - mean) / std
transforms_Normalize = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
img_tensorToNorm = transforms_Normalize(img_tensor)
print(img_tensorToNorm)
writer.add_image("Normalize", img_tensorToNorm)

# Resize: change the image size
transforms_Resize = transforms.Resize((512, 512))
img_resize = transforms_Resize(img)
print(type(img_resize))
print(img_resize.size)
# A PIL.Image converted with np.array is HWC, so dataformats is required
writer.add_image("Resize", np.array(img_resize), dataformats="HWC")

# Compose: chain several transforms
transforms01_toTensor = transforms.ToTensor()
transforms02_Resize = transforms.Resize(512)  # an int resizes the shorter side to 512, keeping the aspect ratio
transforms_Compose = transforms.Compose([transforms01_toTensor, transforms02_Resize])
img_Compose = transforms_Compose(img)
print(type(img_Compose))
print(img_Compose.shape)
writer.add_image("Compose", img_Compose)

# RandomCrop: random crop
transforms01_RandomCrop = transforms.RandomCrop(256)
transforms02_ToTensor = transforms.ToTensor()
transform_RandomCropAndToTensor = transforms.Compose([transforms01_RandomCrop, transforms02_ToTensor])
step = 0
for i in range(10):
    writer.add_image("RandomCrop", transform_RandomCropAndToTensor(img), global_step=step)
    step += 1
writer.close()
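To make the two numeric transforms concrete, here is a pure-Python sketch (no torch needed) of what ToTensor and Normalize do to pixel values. It works on a hypothetical 1x2 RGB image stored as nested lists. ToTensor reorders HWC to CHW and divides uint8 values by 255. Normalize then computes (value - mean) / std per channel, so mean = std = 0.5 maps [0, 1] to [-1, 1]. The helper names to_tensor and normalize are made up for illustration.

```python
def to_tensor(hwc):
    """Mimic ToTensor on a nested-list image: HWC uint8 -> CHW float in [0, 1]."""
    h, w, c = len(hwc), len(hwc[0]), len(hwc[0][0])
    return [[[hwc[y][x][ch] / 255.0 for x in range(w)] for y in range(h)] for ch in range(c)]

def normalize(chw, mean, std):
    """Mimic Normalize: (value - mean[c]) / std[c] for every channel c."""
    return [[[(v - mean[ch]) / std[ch] for v in row] for row in chw[ch]] for ch in range(len(chw))]

# A hypothetical image with height 1 and width 2: two RGB pixels
image_hwc = [[[0, 255, 51], [255, 0, 102]]]
chw = to_tensor(image_hwc)
norm = normalize(chw, [0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
print(chw)  # [[[0.0, 1.0]], [[1.0, 0.0]], [[0.2, 0.4]]]
print(norm)
```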

04-ImgConvTensor.py

- Common conversion helpers

from PIL import Image
from torchvision import transforms
import torch
import os
import matplotlib.pyplot as plt

# loader uses the transforms provided by torchvision
loader = transforms.Compose([
    transforms.ToTensor()])
unloader = transforms.ToPILImage()
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Input: path of an image
# Returns: a tensor
def image_loader(image_name):
    image = Image.open(image_name).convert('RGB')
    image = loader(image).unsqueeze(0)  # add a batch dimension
    return image.to(device, torch.float)

# Input: a PIL image
# Returns: a tensor
def PIL_to_tensor(image):
    image = loader(image).unsqueeze(0)
    return image.to(device, torch.float)

# Input: a tensor
# Returns: a PIL image
def tensor_to_PIL(tensor):
    image = tensor.cpu().clone()
    image = image.squeeze(0)  # remove the batch dimension
    image = unloader(image)
    return image

# Show a tensor image directly
def imshow(tensor, title=None):
    image = tensor.cpu().clone()  # we clone the tensor to not do changes on it
    image = image.squeeze(0)  # remove the fake batch dimension
    image = unloader(image)
    plt.imshow(image)
    if title is not None:
        plt.title(title)
    plt.pause(0.001)  # pause a bit so that plots are updated

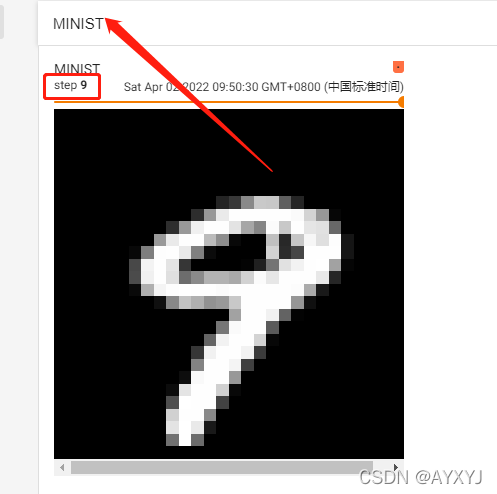

04-torchvisionTest.py

- Test the datasets bundled with torchvision

import torchvision
from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

"""
torchvision: dataset downloads and a range of other tools
"""

# Type conversion
transform = transforms.Compose([
    transforms.ToTensor()
])

# TensorBoard
writer = SummaryWriter("logs05")

# Get the datasets
train_set = torchvision.datasets.MNIST("../dataset/MINIST", transform=transform, download=True, train=True)
test_set = torchvision.datasets.MNIST("../dataset/MINIST", transform=transform, download=True, train=False)

# img.show() needs a PIL image; because ToTensor is applied, convert the tensor back to PIL first
img, target = test_set[0]
print(test_set.classes)
print(img)
print(target)
print(type(img))
img = img.squeeze(0)
uploader = transforms.ToPILImage()
img = uploader(img)
print(type(img))
img.show()

# Take the first ten images of the dataset
for i in range(10):
    img, target = test_set[i]
    print(target)
    writer.add_image("MINIST", img, i)
writer.close()

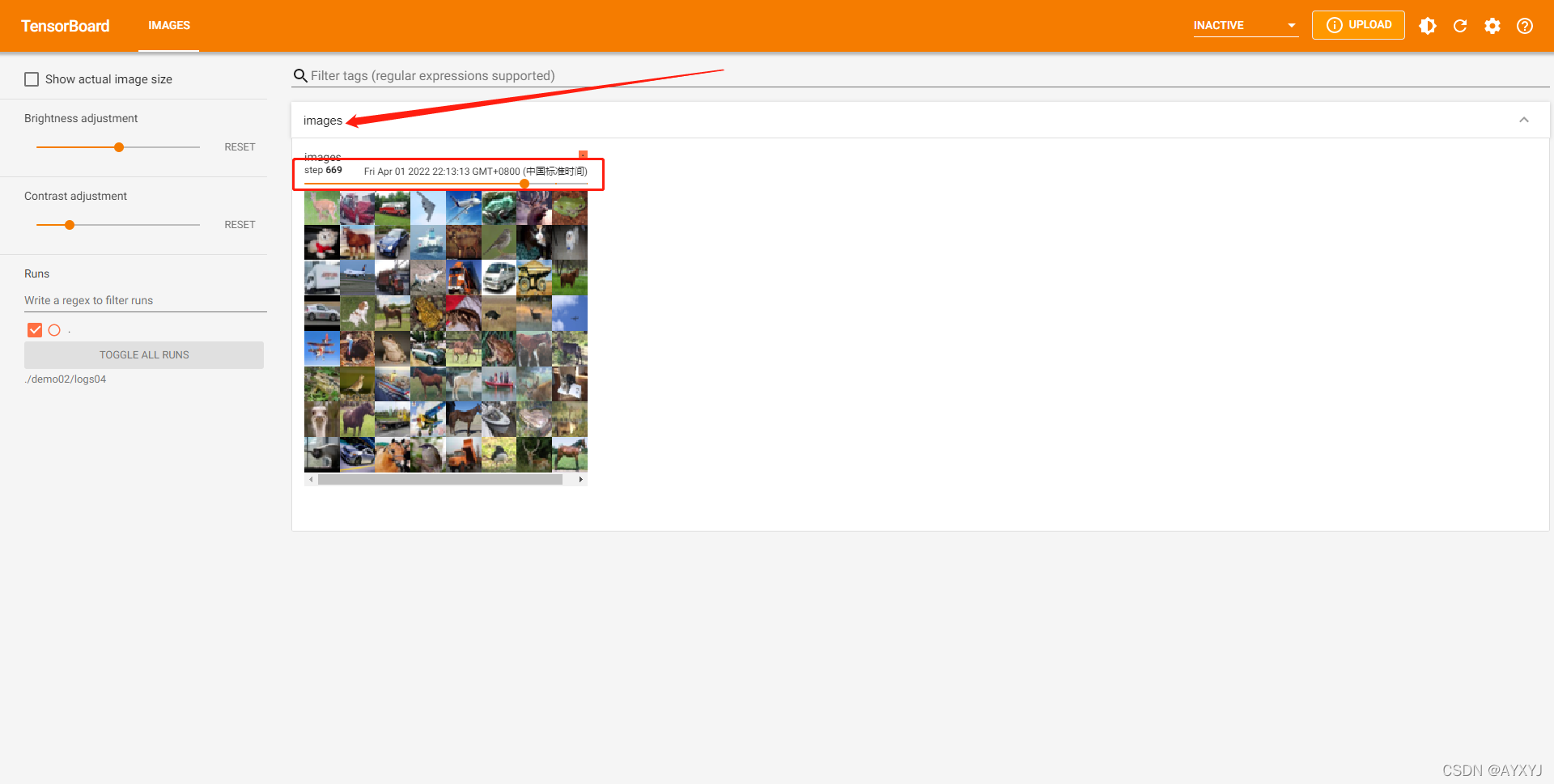

05-DataloaderTest.py

- Loading datasets; after learning this, go back to 01-ReadData-01.py

from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
import torchvision
from torchvision import transforms

"""
Use a torchvision dataset and load it with DataLoader
"""

# The dataset returns PIL.Image images; convert them to Tensors
transform = transforms.Compose([
    transforms.ToTensor()
])

# TensorBoard panel
writer = SummaryWriter("logs04")

# Training set and test set
trainSet = torchvision.datasets.CIFAR10("../dataset/CIFAR10", transform=transform, train=True, download=True)
#testSet = torchvision.datasets.CIFAR10("../dataset/CIFAR10", transform=transform, train=False, download=True)

# First image of the training set
img, target = trainSet[0]
print(img.shape)
print(target)
print(trainSet.classes[target])

"""
dataset      the dataset
batch_size   batch size
shuffle      whether to shuffle; if True, samples are not taken in order (reshuffled every epoch)
drop_last    whether to drop the last batch when it has fewer than batch_size samples
num_workers  number of worker subprocesses used to load the data (0 = load in the main process)
"""
dataloader = DataLoader(dataset=trainSet, batch_size=64, shuffle=True, drop_last=False, num_workers=0)
print(dataloader)

step = 1
for data in dataloader:
    imgs, target = data
    writer.add_images("images", imgs, global_step=step)
    step += 1

# Close the writer
writer.close()

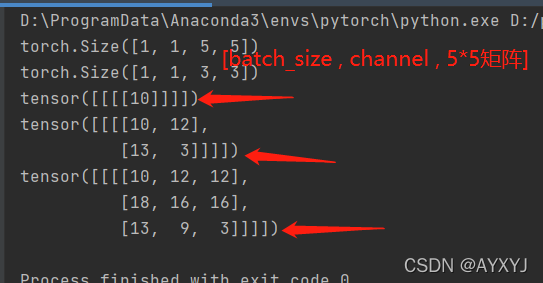
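The effect of batch_size, shuffle and drop_last can be seen without torch. The generator below is a simplified pure-Python sketch of how a DataLoader groups indices into batches. It is not the real DataLoader: there is no collation into tensors and no worker processes. The name batches and the 10-sample dataset are hypothetical.

```python
import random

def batches(dataset, batch_size, shuffle=False, drop_last=False, seed=None):
    """Yield lists of samples, mimicking DataLoader's batch_size/shuffle/drop_last."""
    indices = list(range(len(dataset)))
    if shuffle:
        random.Random(seed).shuffle(indices)  # new order, same samples
    for start in range(0, len(indices), batch_size):
        batch = [dataset[i] for i in indices[start:start + batch_size]]
        if drop_last and len(batch) < batch_size:
            break  # the incomplete final batch is discarded
        yield batch

data = list(range(10))  # hypothetical dataset of 10 samples
print([len(b) for b in batches(data, 4)])                  # [4, 4, 2]
print([len(b) for b in batches(data, 4, drop_last=True)])  # [4, 4]
```

With CIFAR10's 50,000 training images and batch_size=64, the same arithmetic gives 781 full batches plus one batch of 16 images when drop_last=False.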

06-nn_conv_simulate.py

- Simulate the convolution operation

"""
Simulate the convolution operation
"""
import torch
from torch.nn.functional import conv2d

"""
In short: torch.nn.Conv2d creates and learns its own weight; when you want to supply the weight yourself, use torch.nn.functional.conv2d.

torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros')
in_channels   number of input channels
out_channels  number of output channels
kernel_size   kernel size
stride        stride
padding       amount of zero padding added to each side of the input

torch.nn.functional.conv2d(input, weight, bias=None, stride=1, padding=0, dilation=1, groups=1)
input   input tensor of shape (minibatch, in_channels, iH, iW)
weight  weight tensor of shape (out_channels, in_channels / groups, kH, kW)
"""

# float32: conv2d may not be implemented for integer tensors on the CPU in some PyTorch versions
input = torch.tensor([
    [1, 2, 0, 3, 1], [0, 1, 2, 3, 1], [1, 2, 1, 0, 0], [5, 2, 3, 1, 1], [2, 1, 0, 1, 1]
], dtype=torch.float32)
kernel = torch.tensor([
    [1, 2, 1], [0, 1, 0], [2, 1, 0]
], dtype=torch.float32)

# Reshape to (batch, channels, H, W); the shape can be a torch.Size, List[int] or Tuple[int, ...]
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
print(input.shape)
print(kernel.shape)

# Output size = floor((in - kernel + 2*padding) / stride) + 1
output = conv2d(input, kernel, stride=3)
print(output)  # floor((5-3)/3) + 1 = 1 -> 1x1
output = conv2d(input, kernel, stride=2)
print(output)  # floor((5-3)/2) + 1 = 2 -> 2x2
output = conv2d(input, kernel, stride=1)
print(output)  # floor((5-3)/1) + 1 = 3 -> 3x3
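To see what conv2d computes, here is a pure-Python sketch (no torch needed) of the same single-channel case. It slides the kernel over the input with the given stride and sums the element-wise products at each position. Like PyTorch's conv2d, it does not flip the kernel (strictly speaking, this is cross-correlation). The names out_size and conv2d_manual are hypothetical, and the sketch assumes a square input and leaves out padding, channels and batches.

```python
def out_size(in_size, kernel_size, stride=1, padding=0):
    """floor((in + 2*padding - kernel) / stride) + 1"""
    return (in_size + 2 * padding - kernel_size) // stride + 1

def conv2d_manual(image, kernel, stride=1):
    """Single-channel 2D convolution without padding on square nested lists."""
    k = len(kernel)
    n = out_size(len(image), k, stride)
    out = []
    for i in range(n):
        row = []
        for j in range(n):
            top, left = i * stride, j * stride
            row.append(sum(image[top + a][left + b] * kernel[a][b]
                           for a in range(k) for b in range(k)))
        out.append(row)
    return out

image = [[1, 2, 0, 3, 1], [0, 1, 2, 3, 1], [1, 2, 1, 0, 0], [5, 2, 3, 1, 1], [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1], [0, 1, 0], [2, 1, 0]]
for s in (1, 2, 3):
    print(s, conv2d_manual(image, kernel, stride=s))
# 1 [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
# 2 [[10, 12], [13, 3]]
# 3 [[10]]
```

A stride of 2 simply keeps every other window of the stride-1 result, which is why the 2x2 output's values also appear in the 3x3 output.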

06-nn_conv_simulate02.py

- Subclass nn.Module to define a network model with one convolution layer, and run a forward pass

"""
Build a neural network with nn.Module, compute a convolution with it,
and work out the output size after the convolution
"""
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Dataset and data loading
trainSet = torchvision.datasets.CIFAR100(root="../dataset/CIFAR100/", download=True, transform=torchvision.transforms.ToTensor(), train=True)
testSet = torchvision.datasets.CIFAR100(root="../dataset/CIFAR100/", download=True, transform=torchvision.transforms.ToTensor(), train=False)
dataloader = DataLoader(dataset=testSet, batch_size=64, drop_last=False, shuffle=True)

# TensorBoard
writer = SummaryWriter("logs06")

# A network model with a single convolution
class Model(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        # 32x32 input: (32 - 3 + 1) / 1 = 30, so each output image is 6 x 30 x 30
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, tensor):
        x = self.conv1(tensor)
        return x

# Create the model
model = Model()

# Iterate over the dataset
step = 0
for data in dataloader:
    imgs, target = data
    writer.add_images("CIFAR100", imgs, global_step=step)
    output = model(imgs)  # [64, 6, 30, 30]
    # add_images cannot display 6-channel images, so split them into 3-channel ones (the batch doubles)
    output = torch.reshape(output, (-1, 3, 30, 30))
    print(output.shape)
    writer.add_images("train_CIFAR100", output, global_step=step)
    step += 1

# Close the writer
writer.close()

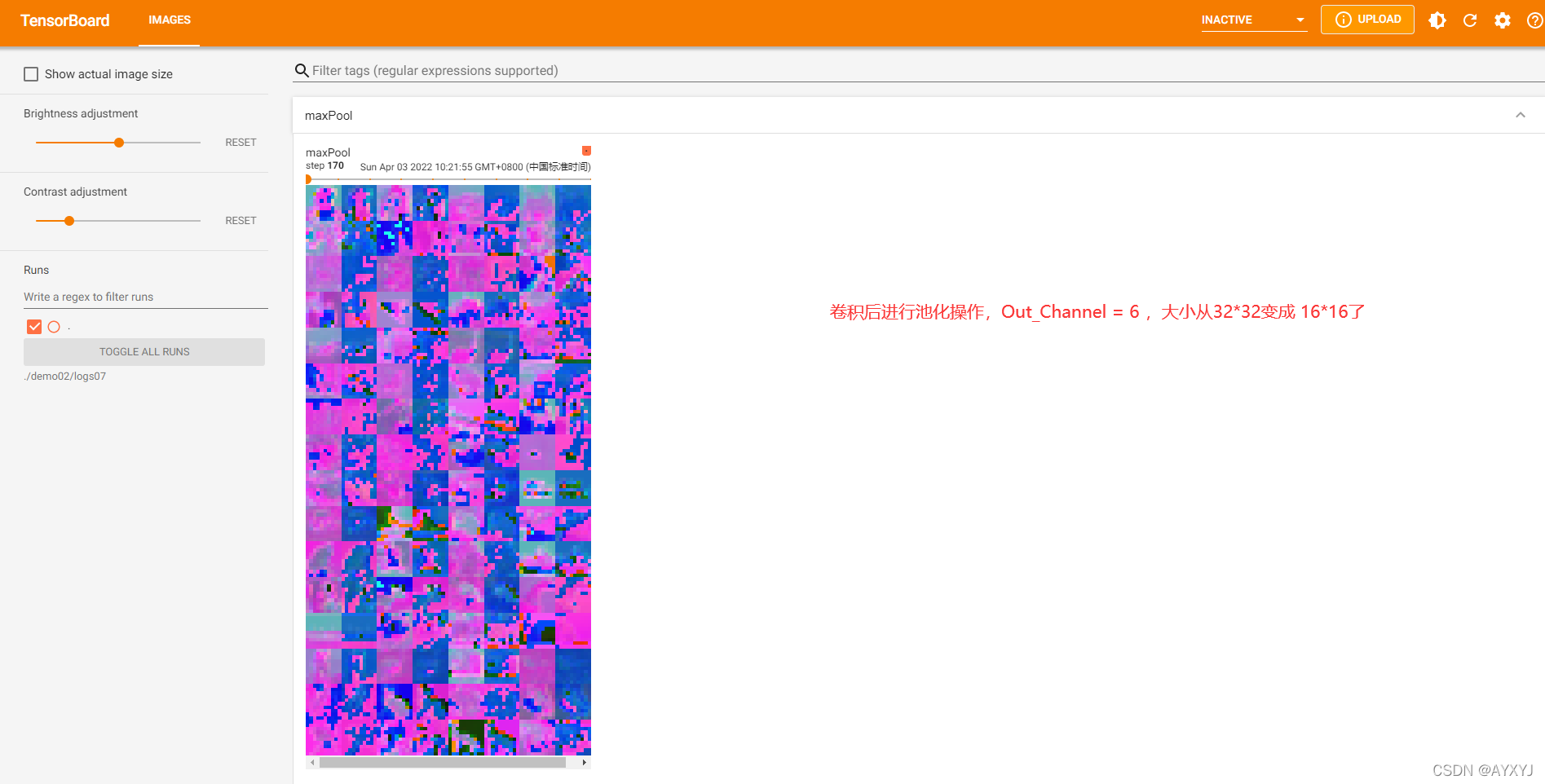

07-nn-maxPool.py

- Demonstrates max pooling; look up average pooling on your own

"""
Max pooling, including the output size after pooling
"""
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Load the data (train=False: the CIFAR10 test set)
test_set = torchvision.datasets.CIFAR10("../dataset/CIFAR10", download=True, train=False,
                                        transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset=test_set, batch_size=64)

# TensorBoard
writer = SummaryWriter("logs07")

# Define the network model
class Model(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.conv01 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)  # (32-3+1)/1 = 30
        # stride defaults to kernel_size; with ceil_mode=True: ceil((30-3)/3) + 1 = 10, channels = 6
        self.maxPool = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.conv01(input)
        output = self.maxPool(output)
        return output

# Create the model
model = Model()

# Iterate over the dataset
step = 0
for data in dataloader:
    imgs, target = data
    imgs = model(imgs)
    imgs = torch.reshape(imgs, (-1, 3, 10, 10))  # 6 channels -> 3 channels, so batch_size 64 -> 128
    writer.add_images("maxPool", imgs, global_step=step)
    step += 1

# Close the writer
writer.close()

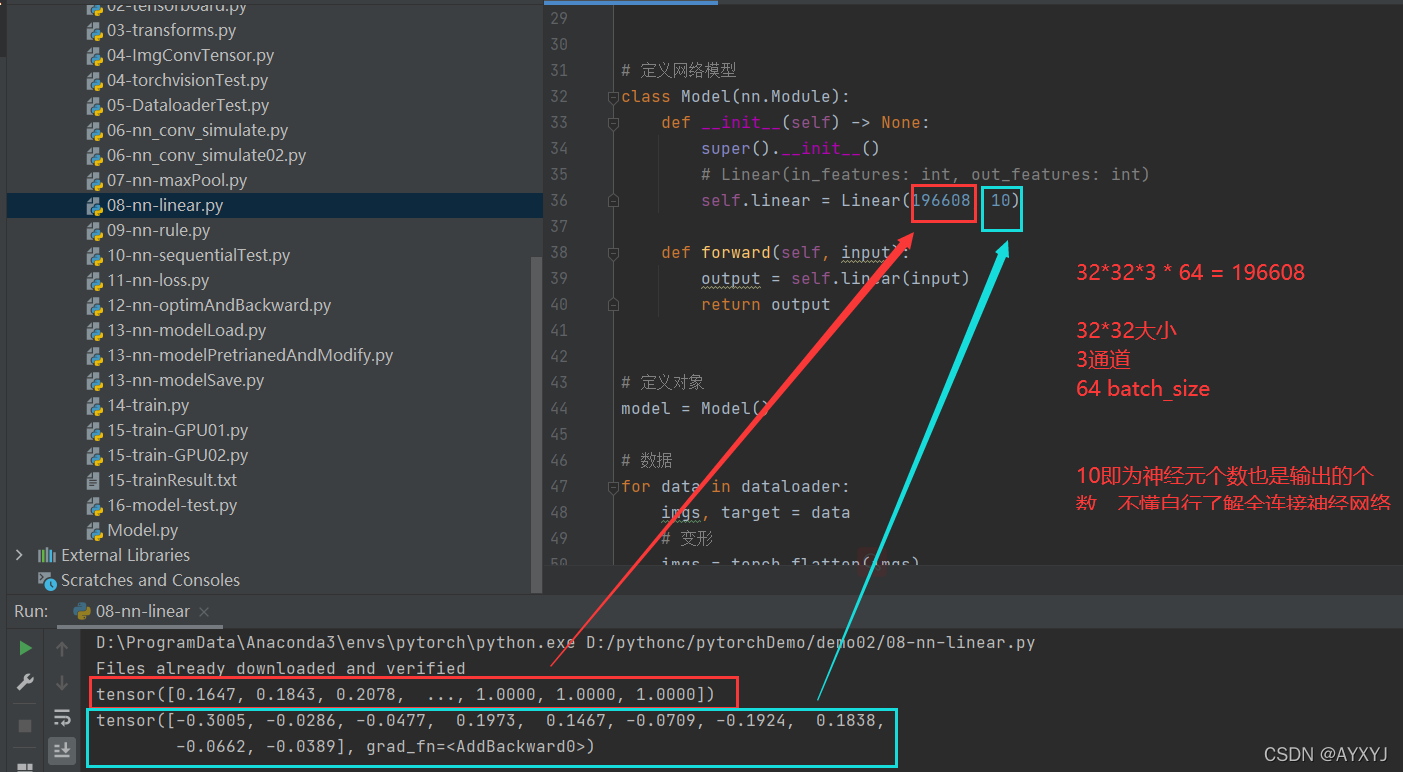
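The difference between ceil_mode=True and the default floor mode is easiest to see on a small input. This pure-Python sketch (no torch needed) max-pools the 5x5 input from 06-nn_conv_simulate.py with a 3x3 window. The stride equals the kernel size, which is MaxPool2d's default, and there is no padding. In ceil mode, the partial windows at the right and bottom borders are kept; in floor mode, they are dropped. The names pool_out_size and max_pool_manual are hypothetical.

```python
import math

def pool_out_size(in_size, kernel_size, ceil_mode=False):
    """Output size of max pooling with stride = kernel_size and no padding."""
    steps = (in_size - kernel_size) / kernel_size
    return (math.ceil(steps) if ceil_mode else math.floor(steps)) + 1

def max_pool_manual(image, kernel_size, ceil_mode=False):
    """Max pooling on a square nested list; border windows may be partial in ceil mode."""
    size = len(image)
    n = pool_out_size(size, kernel_size, ceil_mode)
    out = []
    for i in range(n):
        row = []
        for j in range(n):
            top, left = i * kernel_size, j * kernel_size
            window = [image[r][c]
                      for r in range(top, min(top + kernel_size, size))
                      for c in range(left, min(left + kernel_size, size))]
            row.append(max(window))
        out.append(row)
    return out

image = [[1, 2, 0, 3, 1], [0, 1, 2, 3, 1], [1, 2, 1, 0, 0], [5, 2, 3, 1, 1], [2, 1, 0, 1, 1]]
print(max_pool_manual(image, 3, ceil_mode=True))  # [[2, 3], [5, 1]]
print(max_pool_manual(image, 3))                  # [[2]]
print(pool_out_size(30, 3, ceil_mode=True))       # 10, as in the model above
```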

08-nn-linear.py

- Linear layer, i.e. a fully connected layer

"""
Linear (fully connected) layer
Arguments: number of input features, number of output features
PyTorch's nn.Linear() sets up a fully connected layer in a network.
Note: in 2D image tasks, the input and output of a fully connected layer are usually 2D tensors
of shape [batch_size, size], unlike convolution layers, which take and return 4D tensors.
"""
import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

# Load the dataset
test_set = torchvision.datasets.CIFAR10("../dataset/CIFAR10", download=True,
                                        transform=torchvision.transforms.ToTensor(), train=False)
dataloader = DataLoader(test_set, batch_size=64, shuffle=True, drop_last=False)

# Define the network model
class Model(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        # Linear(in_features: int, out_features: int)
        # 196608 = 64 * 3 * 32 * 32: the whole batch flattened into one vector
        self.linear = Linear(196608, 10)

    def forward(self, input):
        output = self.linear(input)
        return output

# Create the model
model = Model()

for data in dataloader:
    imgs, target = data
    # Flatten the whole batch into a 1D tensor (matches in_features only for a full batch of 64)
    imgs = torch.flatten(imgs)
    print(imgs)
    output = model(imgs)
    print(output)
    break

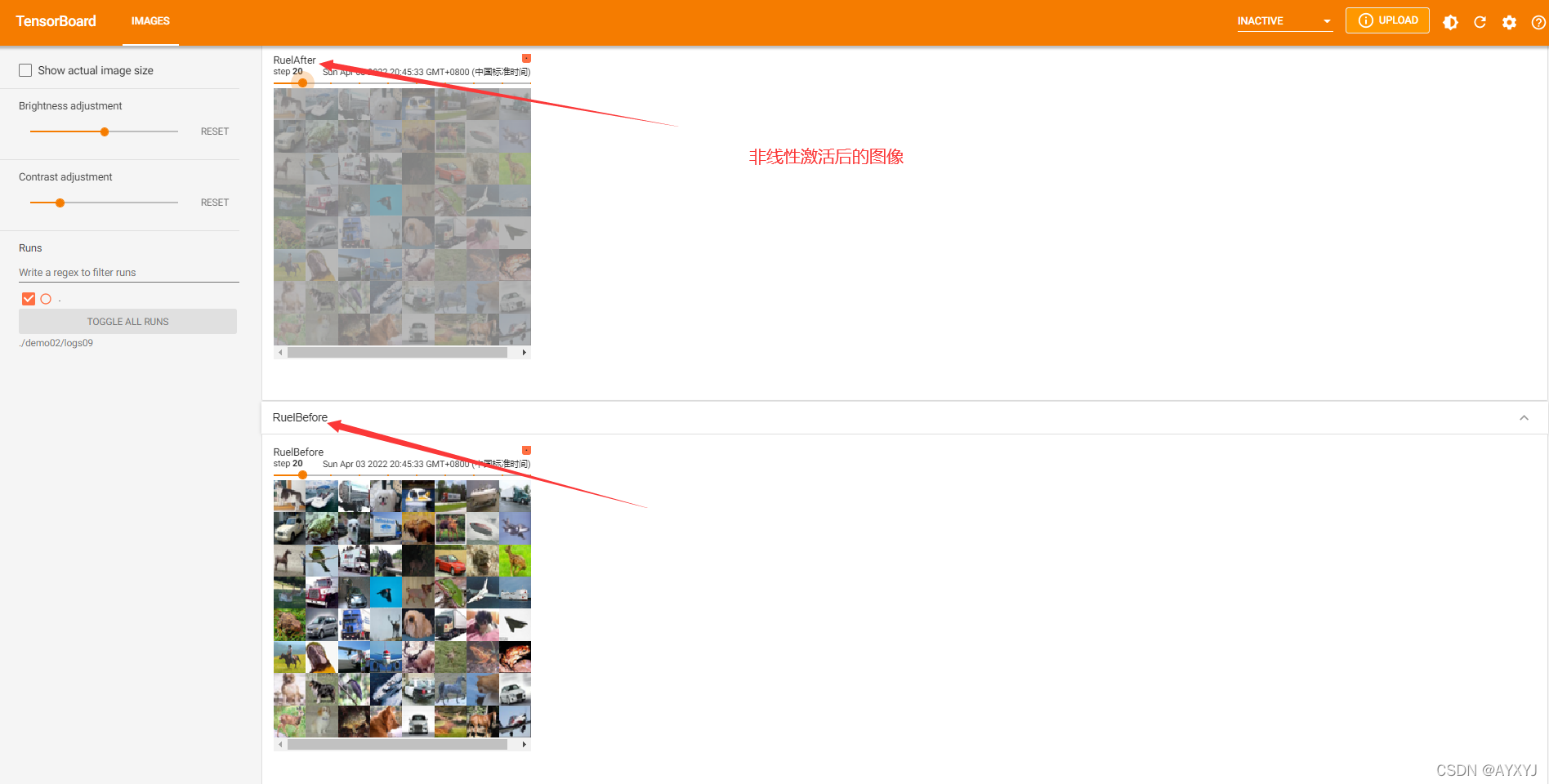
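The pure-Python sketch below shows what Linear(in_features, out_features) computes for one input vector: y[j] = sum_i W[j][i] * x[i] + b[j]. The weights are made up for illustration. It also shows where 196608 comes from. torch.flatten(imgs) flattens the whole batch, while flattening per sample (torch.flatten(imgs, start_dim=1), or nn.Flatten()) keeps the batch dimension and gives 3*32*32 = 3072 features per image. The helper names linear and numel are hypothetical.

```python
from functools import reduce
from operator import mul

def linear(x, weight, bias):
    """y = W x + b for one sample; weight has shape (out_features, in_features)."""
    return [sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j
            for row, b_j in zip(weight, bias)]

def numel(shape):
    """Number of elements in a tensor of the given shape."""
    return reduce(mul, shape, 1)

# Hypothetical layer with 3 inputs and 2 outputs
x = [1.0, 2.0, 3.0]
weight = [[0.5, 0.0, -1.0],
          [1.0, 1.0, 1.0]]
bias = [0.0, 1.0]
print(linear(x, weight, bias))  # [-2.5, 7.0]

batch_shape = (64, 3, 32, 32)
print(numel(batch_shape))       # 196608: the whole batch flattened into one vector
print(numel(batch_shape[1:]))   # 3072: features per image when the batch dimension is kept
```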

09-nn-rule.py

- Nonlinear activation; study neural-network basics to see why it is needed.

- The code shows ReLU and Sigmoid; other common activation functions include tanh and softmax

"""
Nonlinear activation functions
"""
import torchvision
import torch
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Load the data
testSet = torchvision.datasets.CIFAR10("../dataset/CIFAR10", download=True, train=False, transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(testSet, batch_size=64)

# Model
class Model(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.relu = ReLU()
        self.sigmoid = Sigmoid()

    def forward(self, input):
        output = self.relu(input)
        output = self.sigmoid(output)
        return output

def train():
    # Define the model
    model = Model()
    # Iterate over the data and log to TensorBoard
    writer = SummaryWriter("logs09")
    step = 0
    for data in dataloader:
        imgs, target = data
        writer.add_images("ReLUBefore", imgs, global_step=step)
        output = model(imgs)
        writer.add_images("ReLUAfter", output, global_step=step)
        step += 1
    # Close the writer
    writer.close()

if __name__ == '__main__':
    test = torch.tensor([
        [1, -0.5], [-1, 3]
    ])
    print(test.shape)
    test = torch.reshape(test, (-1, 1, 2, 2))
    print(test.shape)
    """
    torch.Size([2, 2])
    torch.Size([1, 1, 2, 2])
    """
    # Write the before/after images to TensorBoard
    train()

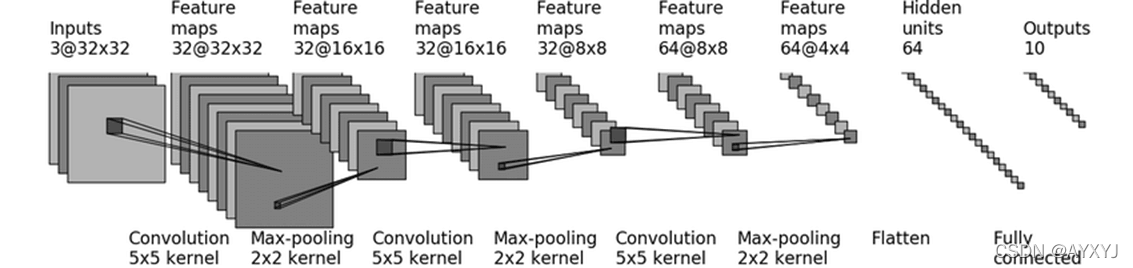

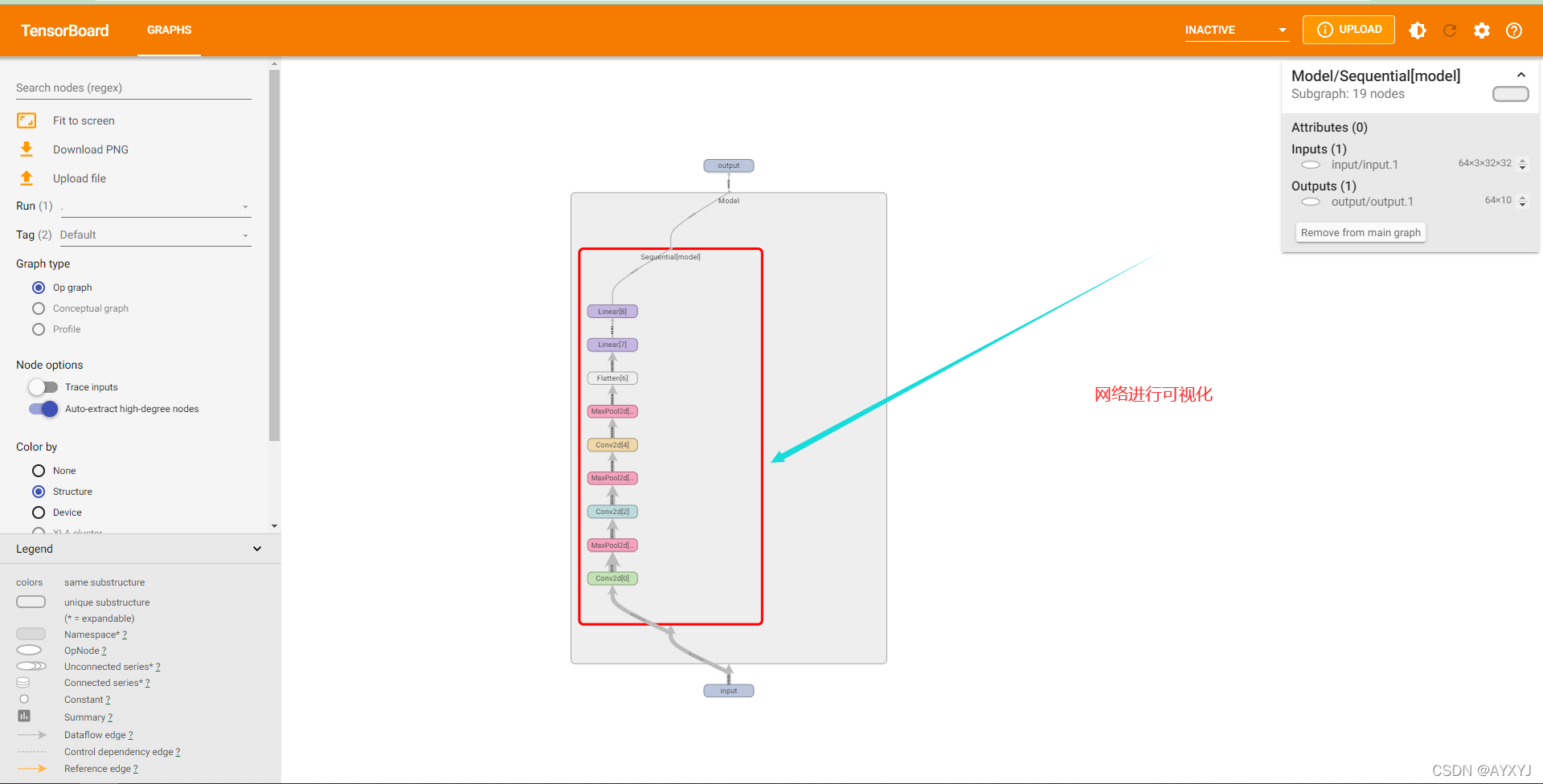
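The pure-Python sketch below (no torch needed) applies ReLU and then Sigmoid, in the same order as the model above, to the 2x2 test tensor from the __main__ block. The values are rounded to 4 decimals for readability.

```python
import math

def relu(v):
    return max(0.0, float(v))

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

test = [[1, -0.5], [-1, 3]]
after_relu = [[relu(v) for v in row] for row in test]
after_both = [[round(sigmoid(v), 4) for v in row] for row in after_relu]
print(after_relu)  # [[1.0, 0.0], [0.0, 3.0]]
print(after_both)  # [[0.7311, 0.5], [0.5, 0.9526]]
```

ToTensor scales pixels to [0, 1], so ReLU leaves the CIFAR10 images unchanged. The visible change in the TensorBoard images comes from Sigmoid, which maps [0, 1] into roughly [0.5, 0.73].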

10-nn-sequentialTest.py

- Sequential container; compare it with ModuleList([])

- Later training builds on this model; the VGG16 used there comes from torchvision

"""
A few basic PyTorch concepts matter when building networks, such as nn.Module, nn.ModuleList and nn.Sequential. These classes are called containers, because modules can be added to them.
"""
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from torchvision.datasets import CIFAR10
from torchvision.transforms import ToTensor

class Model(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.model = Sequential(
            # out_size = (in_size - kernel_size + 2*padding + stride) / stride, rounded down
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),  # 3x32x32 -> 32x32x32
            # stride defaults to kernel_size. ceil_mode keeps the leftover border that is smaller
            # than the window and pools it separately; floor mode (the default) simply drops it.
            MaxPool2d(kernel_size=2),      # -> 32x16x16
            Conv2d(32, 32, 5, padding=2),  # -> 32x16x16
            MaxPool2d(2),                  # -> 32x8x8
            Conv2d(32, 64, 5, padding=2),  # -> 64x8x8
            MaxPool2d(2),                  # -> 64x4x4
            # Reshape to a 2D tensor [batch_size, size]: [64, 64*4*4] = [64, 1024]
            Flatten(),
            # Fully connected: 64*4*4 = 1024 inputs; out_features is also the number of neurons
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, input):
        output = self.model(input)
        return output

# "Training" here: build the model, log its graph and run one forward pass
def train(writer, input):
    model = Model()
    writer.add_graph(model, input)
    # Print the model
    print(model)
    output = model(input)
    print(output.shape)

if __name__ == '__main__':
    testSet = CIFAR10("../dataset/CIFAR10", download=True, train=False, transform=ToTensor())
    dataloader = DataLoader(testSet, batch_size=64)
    # TensorBoard
    writer = SummaryWriter("logs10")
    for data in dataloader:
        imgs, target = data
        train(writer, imgs)
        break
    writer.close()

"""

Model(

(model): Sequential(

(0): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(6): Flatten(start_dim=1, end_dim=-1)

(7): Linear(in_features=1024, out_features=64, bias=True)

(8): Linear(in_features=64, out_features=10, bias=True)

)

)

torch.Size([64, 10])

"""

11-nn-loss.py

- Loss functions measure the gap between the prediction y' and the target y; common ones are L1Loss, MSELoss and CrossEntropyLoss

"""
Loss functions
L1Loss
MSELoss
CrossEntropyLoss
"""
import torch
from torch.nn import L1Loss, MSELoss, CrossEntropyLoss

input = torch.tensor([1, 2, 3], dtype=torch.float32)
target = torch.tensor([1, 2, 5], dtype=torch.float32)
input = torch.reshape(input, (1, 1, 1, 3))
target = torch.reshape(target, (1, 1, 1, 3))

# Test L1Loss
def testL1Loss():
    loss = L1Loss(reduction='sum')  # with reduction='mean' (the default): (0 + 0 + 2) / 3 -> tensor(0.6667)
    result_loss = loss(input, target)  # (0 + 0 + 2) -> tensor(2.)
    print(result_loss)

def testMSELoss():
    loss = MSELoss()
    result_loss = loss(input, target)
    print(result_loss)  # (0 + 0 + 2*2) / 3 -> tensor(1.3333)

def testCrossEntropyLoss():
    x = torch.tensor([0.1, 0.2, 0.3])  # raw scores (logits) for 3 classes
    y = torch.tensor([1])  # index of the true class
    x = torch.reshape(x, (1, 3))  # (batch_size, num_classes)
    loss_cross = CrossEntropyLoss()
    result_loss = loss_cross(x, y)
    print(result_loss)  # tensor(1.1019): -x[1] + log(exp(0.1) + exp(0.2) + exp(0.3))

if __name__ == '__main__':
    testCrossEntropyLoss()

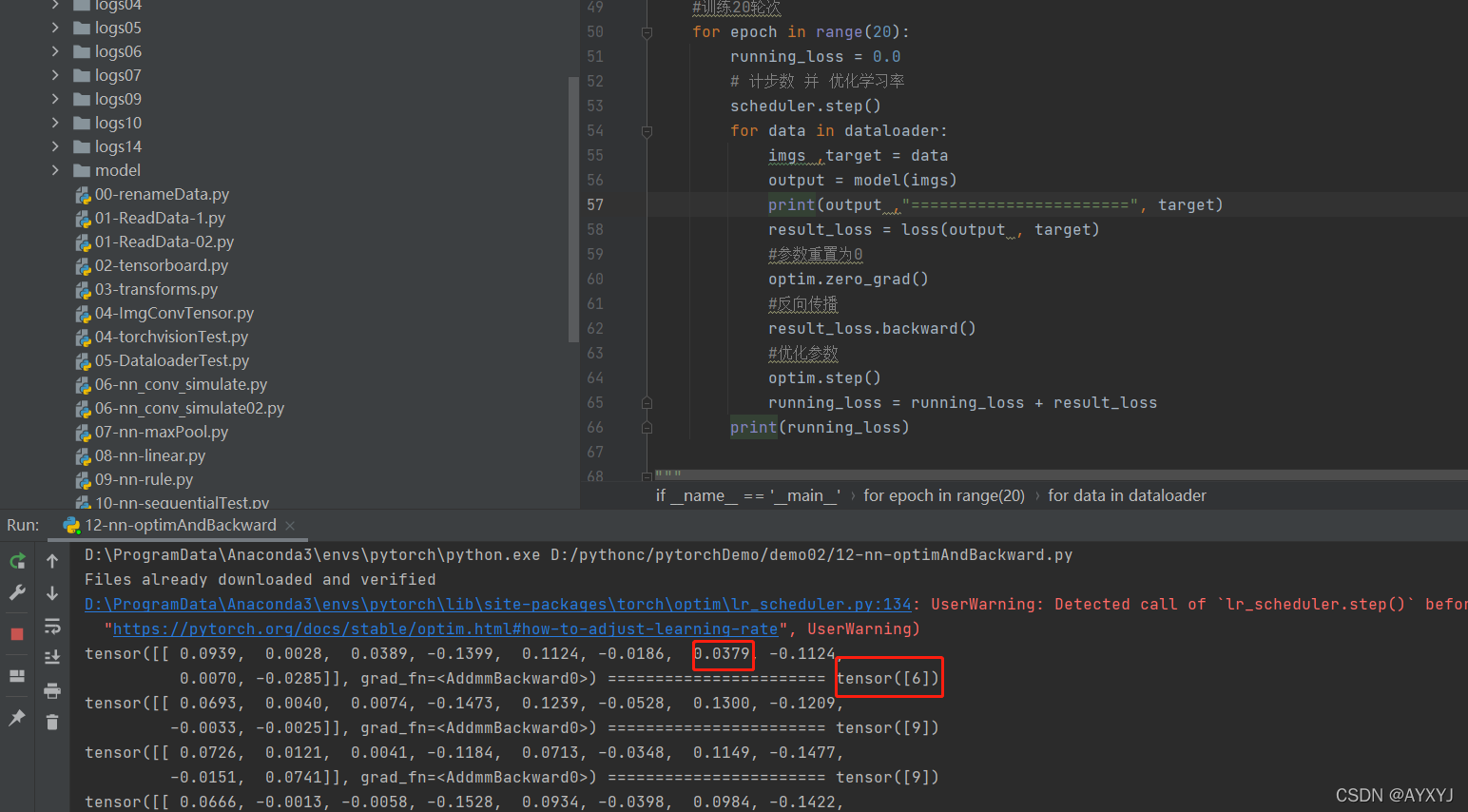
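The values in the comments above can be reproduced by hand. The pure-Python sketch below uses the same inputs. The cross-entropy formula is the standard -log(softmax(x)[class]), written as -x[class] + log(sum(exp(x))).

```python
import math

pred = [1.0, 2.0, 3.0]
target = [1.0, 2.0, 5.0]
diffs = [p - t for p, t in zip(pred, target)]

l1_sum = sum(abs(d) for d in diffs)          # L1Loss(reduction='sum')
l1_mean = l1_sum / len(diffs)                # L1Loss(), default reduction='mean'
mse = sum(d * d for d in diffs) / len(diffs) # MSELoss()

def cross_entropy(logits, cls):
    """-log(softmax(logits)[cls]) = -logits[cls] + log(sum(exp(logits)))"""
    return -logits[cls] + math.log(sum(math.exp(v) for v in logits))

ce = cross_entropy([0.1, 0.2, 0.3], 1)
print(l1_sum, round(l1_mean, 4), round(mse, 4), round(ce, 4))  # 2.0 0.6667 1.3333 1.1019
```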

12-nn-optimAndBackward.py

- Optimizer: backpropagation computes the gradients, and the optimizer uses them to update the parameters. This is fundamental!

"""
1. Backpropagation computes the gradients used to update the parameters
2. The SGD optimizer
"""
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, CrossEntropyLoss
from torch.utils.data import DataLoader

# Define the network model
class Model(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.model = nn.Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2, stride=2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, input):
        output = self.model(input)
        return output

if __name__ == '__main__':
    train_set = torchvision.datasets.CIFAR10("../dataset/CIFAR10", download=True, train=True, transform=torchvision.transforms.ToTensor())
    dataloader = DataLoader(train_set, batch_size=1)
    loss = CrossEntropyLoss()
    model = Model()
    # Define the optimizer: stochastic gradient descent
    optim = torch.optim.SGD(model.parameters(), lr=0.01)
    # Step-wise schedule: every step_size calls to scheduler.step() (here: epochs), multiply
    # the learning rate by gamma (default 0.1, i.e. divide it by 10)
    scheduler = torch.optim.lr_scheduler.StepLR(optim, step_size=5, gamma=0.1)
    # Train for 20 epochs
    for epoch in range(20):
        running_loss = 0.0
        for data in dataloader:
            imgs, target = data
            output = model(imgs)
            #print(output, "=======================", target)
            result_loss = loss(output, target)
            # Reset the gradients to zero
            optim.zero_grad()
            # Backpropagation: compute the gradients
            result_loss.backward()
            # Update the parameters using the gradients
            optim.step()
            # .item() takes the Python number, so the computation graph is not kept around
            running_loss = running_loss + result_loss.item()
        # Count one epoch and update the learning rate
        scheduler.step()
        print(running_loss)

"""

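PyTorch implements learning-rate schedules in torch.optim.lr_scheduler. They fall into three groups:

Ordered schedules: decay at fixed intervals (StepLR), decay at chosen milestones (MultiStepLR), exponential decay (ExponentialLR) and cosine annealing (CosineAnnealingLR).

Adaptive schedule: ReduceLROnPlateau adapts the learning rate to a monitored metric.

Custom schedule: LambdaLR applies a user-defined function.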

"""

13-nn-modelPretrianedAndModify.py

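- Pretrained model parameters. Benefit: an existing network is not trained from scratch; training starts from the parameters provided with the network and updates them

- Uses networks provided by torchvision; many more are listed on the official website

"""
Loading pretrained parameters and modifying an existing network structure
"""
import torch
import torchvision

# Pretrained models
vgg16_true = torchvision.models.vgg16(pretrained=False)  # pretrained=True would download the weights; here they are loaded manually below
vgg16_false = torchvision.models.vgg16(pretrained=False)
print(vgg16_true)
print(vgg16_false)

# Load the manually downloaded pretrained parameters into the model
modelParam = torch.load("./model/vgg16-pretrain.pth")
vgg16_true.load_state_dict(modelParam)
#print(vgg16_true)

# Modify an existing network: add a layer
vgg16_true.classifier.add_module("add_liner", torch.nn.Linear(1000, 10))
print(vgg16_true)

# Modify an existing network: replace a layer
vgg16_false.classifier[6] = torch.nn.Linear(4096, 10)
print(vgg16_false)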

"""

# Output 2

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

......

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

......

(6): Linear(in_features=4096, out_features=1000, bias=True)

)

)

# Output 3

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

......

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

......

(6): Linear(in_features=4096, out_features=1000, bias=True)

(add_liner): Linear(in_features=1000, out_features=10, bias=True)

)

)

# Output 4

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

......

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

......

(6): Linear(in_features=4096, out_features=10, bias=True)

)

)

"""

13-nn-modelSave.py

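- There are two ways to save a model; the second is recommended

"""
1. Saving a model
2. Using pretrained parameters
"""
import torch
import torchvision

# Load the network model provided by torchvision with pretrained=False, i.e. training starts from scratch:
# the initial parameters are random, so the model does not perform well yet
vgg16 = torchvision.models.vgg16(pretrained=False)

# Save method 1: the whole model (structure + parameters)
torch.save(vgg16, "./model/vgg16_method01.pth")

# Save method 2 (officially recommended): only the parameters, as a dict (state_dict)
torch.save(vgg16.state_dict(), "./model/vgg16_method02.pth")
print(vgg16)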

"""

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=1000, bias=True)

)

)

"""

13-nn-modelLoad.py

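- Loading a model; the loading method must match the saving method

"""
1. Loading a model
2. Pretrained parameters
"""
import torch
import torchvision

# Load a model saved with method 1
model = torch.load("./model/vgg16_method01.pth")
print(model)

# Load a model saved with method 2
# Build the torchvision network with pretrained=False (random initial parameters), then fill in the saved parameters
vgg16 = torchvision.models.vgg16(pretrained=False)
modelParam = torch.load("./model/vgg16_method02.pth")
vgg16.load_state_dict(modelParam)
print(vgg16)

# When loading your own model saved with method 1, make sure its class definition can be found, e.g. from Model import *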

14-train.py

1. A complete basic template for training a network. Let's go!

# -*- coding: utf-8 -*-
# @Time : 2022/4/4 11:35
# @Author : zzuxyj
# @File : 14-train.py
"""
Complete model training
"""
import torch
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Load the datasets
trainSet = torchvision.datasets.CIFAR10("../dataset/CIFAR10", train=True, transform=torchvision.transforms.ToTensor(),
                                        download=True)
testSet = torchvision.datasets.CIFAR10("../dataset/CIFAR10", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)

# Dataset sizes
print("Training set size: {}".format(len(trainSet)))
print("Test set size: {}".format(len(testSet)))

# Data loaders
trainSetLoad = DataLoader(trainSet, batch_size=64)
testSetLoad = DataLoader(testSet, batch_size=64)

# Create the network model: VGG16
vgg16Param = torch.load("./model/vgg16-pretrain.pth")
vgg16 = torchvision.models.vgg16(pretrained=False)
vgg16.load_state_dict(vgg16Param)
vgg16.classifier[6] = torch.nn.Linear(4096, 10)  # modify the model for 10 classes

# Loss function and optimizer
loss_fn = torch.nn.CrossEntropyLoss()
# Learning rate
learning_rate = 1e-2
optimizer = torch.optim.SGD(vgg16.parameters(), lr=learning_rate)
# Learning-rate schedule: stepped once per batch below, so step_size counts batches
schduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5000, gamma=0.1)

# Training bookkeeping
# Number of training steps (batches)
total_train_step = 0
# Number of epochs
train_epoch = 20
# Number of evaluations
test_train_step = 0
# TensorBoard
writer = SummaryWriter("logs14")

# Start training
for epoch in range(train_epoch):
    print("----------- Epoch {} training starts -------------".format(epoch + 1))
    # Training phase
    vgg16.train()
    # One epoch of training
    for data in trainSetLoad:
        imgs, target = data
        output = vgg16(imgs)
        result_loss = loss_fn(output, target)
        # Optimize the model: zero the gradients, backpropagate, update the parameters
        optimizer.zero_grad()
        result_loss.backward()
        optimizer.step()
        schduler.step()
        total_train_step += 1
        if total_train_step % 10 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, result_loss.item()))
            writer.add_scalar("train_loss", result_loss.item(), total_train_step)

    # Evaluation phase
    vgg16.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():  # no gradients are tracked during evaluation
        for data in testSetLoad:
            imgs, target = data
            output = vgg16(imgs)
            test_loss = loss_fn(output, target)
            total_test_loss = total_test_loss + test_loss.item()
            accuracy = (output.argmax(1) == target).sum()  # correct predictions in this batch
            total_accuracy = total_accuracy + accuracy.item()
    test_accuracy = total_accuracy / len(testSet)
    print("Loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(test_accuracy))
    writer.add_scalar("test_loss", total_test_loss, test_train_step)
    writer.add_scalar("test_accuracy", test_accuracy, test_train_step)
    test_train_step += 1
    # Compare the accuracy ratio, not the raw count of correct predictions
    if test_accuracy > 0.8:
        torch.save(vgg16.state_dict(), "vgg16-model{}-CIFAR10.pth".format(epoch))
        print("Model saved")

# Close the writer
writer.close()
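The accuracy line (output.argmax(1) == target).sum() counts, for each row of scores, whether the highest-scoring class equals the target. Here is a pure-Python sketch of the same computation on hypothetical scores for 2 classes. The helper names argmax and accuracy are made up for illustration.

```python
def argmax(row):
    """Index of the largest score in a row."""
    return max(range(len(row)), key=lambda i: row[i])

def accuracy(outputs, targets):
    """Fraction of rows whose highest score is at the target index."""
    correct = sum(1 for row, t in zip(outputs, targets) if argmax(row) == t)
    return correct / len(targets)

outputs = [[0.1, 0.9], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]]  # hypothetical scores
targets = [1, 0, 0, 0]
print(accuracy(outputs, targets))  # 0.75
```

A threshold such as 0.8 only makes sense when it is compared with this ratio (0.75 here) and not with the count of correct predictions (3 here).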

15-train-GPU01.py

- GPU使用方式1 ,推荐第二种

"""

完整实现模型训练 在GPU上训练

1.模型设置

2.损失函数设置

3.数据设置

"""

import torch

import torchvision

import time

# 加载数据集

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

trainSet = torchvision.datasets.CIFAR10("../dataset/CIFAR10", train=True, transform=torchvision.transforms.ToTensor(),

download=True)

testSet = torchvision.datasets.CIFAR10("../dataset/CIFAR10", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

# 计算长度

print("训练集长度:{}".format(len(trainSet)))

print("测试集长度:{}".format(len(testSet)))

# 加载数据集合

trainSetLoad = DataLoader(trainSet, batch_size=64)

testSetLoad = DataLoader(testSet, batch_size=64)

# 创建网络模型 vgg16

vgg16Param = torch.load("./model/vgg16-pretrain.pth")

vgg16 = torchvision.models.vgg16(pretrained=False)

vgg16.load_state_dict(vgg16Param)
vgg16.classifier[6] = torch.nn.Linear(4096, 10)  # 10 classes: replace the last layer of the network

if torch.cuda.is_available():
    vgg16 = vgg16.cuda()

# Loss function and optimizer
loss_fn = torch.nn.CrossEntropyLoss()
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()

# Learning rate
learning_rate = 1e-2
optimizer = torch.optim.SGD(vgg16.parameters(), lr=learning_rate)

# Learning-rate schedule: multiply lr by gamma every step_size calls to scheduler.step()
# Note: step() is called once per epoch below, so with step_size=5000
# the learning rate is not reduced during the 20 epochs
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5000, gamma=0.1)

# Training bookkeeping
total_train_step = 0  # number of training iterations (batches)
train_epoch = 20      # number of epochs
test_train_step = 0   # number of evaluation rounds

# TensorBoard
writer = SummaryWriter("logs14")

# Start training
for epoch in range(train_epoch):
    print("----------- Epoch {} training begins -----------".format(epoch + 1))

    # Training phase
    vgg16.train()
    start_time = time.time()
    for data in trainSetLoad:
        imgs, target = data
        if torch.cuda.is_available():
            imgs = imgs.cuda()
            target = target.cuda()
        output = vgg16(imgs)
        result_loss = loss_fn(output, target)

        # Update the model parameters
        optimizer.zero_grad()
        result_loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 10 == 0:
            end_time = time.time()
            print("Elapsed training time: {}".format(end_time - start_time))
            print("Iteration: {}, Loss: {}".format(total_train_step, result_loss.item()))
            writer.add_scalar("train_loss", result_loss.item(), total_train_step)

    # Update the learning rate once per epoch, after the optimizer updates
    # (since PyTorch 1.1, scheduler.step() should follow optimizer.step())
    scheduler.step()

    # Evaluation phase
    vgg16.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():  # disable gradient tracking while evaluating
        for data in testSetLoad:
            imgs, target = data
            if torch.cuda.is_available():
                imgs = imgs.cuda()
                target = target.cuda()
            output = vgg16(imgs)
            test_loss = loss_fn(output, target)
            total_test_loss += test_loss.item()
            total_accuracy += (output.argmax(1) == target).sum().item()

    test_accuracy = total_accuracy / len(testSet)
    print("Loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(test_accuracy))
    writer.add_scalar("test_loss", total_test_loss, test_train_step)
    writer.add_scalar("test_accuracy", test_accuracy, test_train_step)
    test_train_step += 1

    if test_accuracy > 0.8:
        torch.save(vgg16.state_dict(), "vgg16-model{}-CIFAR10.pth".format(epoch))
        print("Model saved")

# Close the writer
writer.close()
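StepLR multiplies the learning rate by gamma once every step_size calls to scheduler.step(), so what step_size means depends entirely on where step() is called. The pure-Python sketch below models that rule; the helper step_lr is hypothetical (not a PyTorch API), and it assumes the settings used above: base lr 1e-2, gamma=0.1, step_size=5000, 20 epochs, and CIFAR-10's 50,000 training images in batches of 64.

```python
import math


def step_lr(base_lr, gamma, step_size, num_steps):
    """Hypothetical helper: learning rate after scheduler.step() was called num_steps times.

    Models the closed form StepLR follows: the rate is multiplied
    by gamma once every step_size calls.
    """
    return base_lr * gamma ** (num_steps // step_size)


base_lr, gamma, step_size, epochs = 1e-2, 0.1, 5000, 20

# Case 1: scheduler.step() once per epoch -> 20 calls in total
lr_per_epoch = step_lr(base_lr, gamma, step_size, epochs)

# Case 2: scheduler.step() once per batch
# 50000 training images with batch_size=64; the last batch is partial
batches_per_epoch = math.ceil(50000 / 64)
lr_per_batch = step_lr(base_lr, gamma, step_size, epochs * batches_per_epoch)

print(f"per-epoch stepping, final lr: {lr_per_epoch:g}")
print(f"per-batch stepping, final lr: {lr_per_batch:g}")
```

With one call per epoch the rate stays at 1e-2 for all 20 epochs; with one call per batch (782 batches per epoch, 15,640 calls in total, as in 15-train-GPU02.py) it is divided by 10 three times, ending at 1e-5.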

15-train-GPU02.py

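- More flexible: choose the device once with torch.device and move the model, the loss function, and each batch of data to it with .to(device)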

"""
Complete model training on the GPU (the commonly used, device-agnostic style)
"""
import time

import torch
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Load the datasets
trainSet = torchvision.datasets.CIFAR10("../dataset/CIFAR10", train=True,
                                        transform=torchvision.transforms.ToTensor(), download=True)
testSet = torchvision.datasets.CIFAR10("../dataset/CIFAR10", train=False,
                                       transform=torchvision.transforms.ToTensor(), download=True)

# Dataset sizes
print("Training set size: {}".format(len(trainSet)))
print("Test set size: {}".format(len(testSet)))

# Wrap the datasets in DataLoaders
trainSetLoad = DataLoader(trainSet, batch_size=64)
testSetLoad = DataLoader(testSet, batch_size=64)

# Build VGG16 from locally saved pretrained weights
vgg16Param = torch.load("./model/vgg16-pretrain.pth")
vgg16 = torchvision.models.vgg16(pretrained=False)
vgg16.load_state_dict(vgg16Param)
vgg16.classifier[6] = torch.nn.Linear(4096, 10)  # 10 classes: replace the last layer of the network

# Choose the compute device once; everything else is moved with .to(device)
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
vgg16.to(device)

# Loss function and optimizer
loss_fn = torch.nn.CrossEntropyLoss()
loss_fn.to(device)

learning_rate = 1e-2
optimizer = torch.optim.SGD(vgg16.parameters(), lr=learning_rate)

# Learning-rate schedule: stepped once per batch below,
# so the lr is multiplied by 0.1 every 5000 iterations
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5000, gamma=0.1)

# Training bookkeeping
total_train_step = 0  # number of training iterations (batches)
train_epoch = 20      # number of epochs
test_train_step = 0   # number of evaluation rounds

# TensorBoard
writer = SummaryWriter("logs14")

# Start training
for epoch in range(train_epoch):
    print("----------- Epoch {} training begins -----------".format(epoch + 1))

    # Training phase
    vgg16.train()
    start_time = time.time()
    for data in trainSetLoad:
        imgs, target = data
        imgs = imgs.to(device)
        target = target.to(device)
        output = vgg16(imgs)
        result_loss = loss_fn(output, target)

        # Update the model parameters
        optimizer.zero_grad()
        result_loss.backward()
        optimizer.step()  # apply the gradient update to the weights

        total_train_step += 1
        if total_train_step % 100 == 0:
            end_time = time.time()
            print("Elapsed training time: {}".format(end_time - start_time))
            print("Iteration: {}, Loss: {}".format(total_train_step, result_loss.item()))
            writer.add_scalar("train_loss", result_loss.item(), total_train_step)

        # Update the learning rate once per batch, after optimizer.step()
        scheduler.step()

    # Evaluation phase
    vgg16.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():  # disable gradient tracking while evaluating
        for data in testSetLoad:
            imgs, target = data
            imgs = imgs.to(device)
            target = target.to(device)
            output = vgg16(imgs)
            test_loss = loss_fn(output, target)
            total_test_loss += test_loss.item()
            total_accuracy += (output.argmax(1) == target).sum().item()

    test_accuracy = total_accuracy / len(testSet)
    print("Loss on the test set: {}".format(total_test_loss))
    print("Accuracy on the test set: {}".format(test_accuracy))
    writer.add_scalar("test_loss", total_test_loss, test_train_step)
    writer.add_scalar("test_accuracy", test_accuracy, test_train_step)
    test_train_step += 1

    if test_accuracy > 0.8:
        torch.save(vgg16.state_dict(), "./model/vgg16-model{}-CIFAR10.pth".format(epoch))
        print("Model saved")

# Close the writer
writer.close()
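Both training scripts evaluate by summing the per-batch test losses and counting the samples where output.argmax(1) == target, then dividing that count by len(testSet). Because CrossEntropyLoss averages over the samples of a batch by default, the summed loss grows with the number of batches; dividing it by the number of batches gives a per-batch average that stays comparable across test sets of different sizes. The pure-Python sketch below mirrors this bookkeeping with made-up logits and losses; the helpers argmax and evaluate are hypothetical, not part of PyTorch.

```python
def argmax(row):
    """Index of the largest value in a list (the first one on ties)."""
    return max(range(len(row)), key=lambda i: row[i])


def evaluate(batches):
    """Hypothetical helper mirroring the test loop.

    batches: list of (logits, targets, batch_loss) tuples, where logits holds
    one row of class scores per sample, targets the true class indices and
    batch_loss the mean loss of that batch.
    Returns (average loss per batch, accuracy over all samples).
    """
    total_loss = 0.0
    total_correct = 0
    total_samples = 0
    for logits, targets, batch_loss in batches:
        total_loss += batch_loss
        preds = [argmax(row) for row in logits]
        total_correct += sum(p == t for p, t in zip(preds, targets))
        total_samples += len(targets)
    return total_loss / len(batches), total_correct / total_samples


# Two toy batches with 3 classes (made-up numbers for illustration)
batches = [
    ([[2.0, 0.1, 0.3], [0.2, 1.5, 0.1]], [0, 2], 0.9),
    ([[0.1, 0.2, 3.0]], [2], 0.3),
]
avg_loss, acc = evaluate(batches)
print(avg_loss, acc)
```

Here total_samples plays the role of len(testSet) in the scripts: two of the three toy samples are classified correctly, so the accuracy is 2/3.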

16-model-test.py

- Load the trained model and use it for inference. That wraps things up!
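The test script resizes the image to 32×32, and the training scripts feed CIFAR-10's 32×32 images to VGG16, which was designed for 224×224 inputs. This still runs because in torchvision's VGG16 every 3×3 convolution uses padding 1 (keeping the spatial size) and only the five 2×2 max-pooling layers halve it; an AdaptiveAvgPool2d((7, 7)) then brings the feature map to 7×7 before the classifier, whose last layer, classifier[6], is the Linear(4096, 1000) that the scripts replace with Linear(4096, 10). A small pure-Python sketch of the feature-map size (the helper feature_map_shape is hypothetical):

```python
# torchvision's VGG16 feature extractor (configuration "D"):
# numbers are the output channels of 3x3 convolutions with padding 1,
# "M" marks a 2x2 max-pooling layer with stride 2.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def feature_map_shape(height, width, cfg=VGG16_CFG, in_channels=3):
    """Return (channels, height, width) after the VGG feature layers."""
    channels = in_channels
    for layer in cfg:
        if layer == "M":
            # MaxPool2d(kernel_size=2, stride=2) halves the size, flooring odd sizes
            height, width = height // 2, width // 2
        else:
            # Conv2d(kernel_size=3, padding=1) keeps height and width
            channels = layer
    return channels, height, width


print(feature_map_shape(224, 224))  # ImageNet-sized input -> (512, 7, 7)
print(feature_map_shape(32, 32))    # CIFAR-10-sized input -> (512, 1, 1)

# AdaptiveAvgPool2d((7, 7)) always outputs 7x7, so the first classifier layer
# receives 512 * 7 * 7 features whatever the input size.
flattened = 512 * 7 * 7
print(flattened)
```

For a 32×32 image the pooled feature map is only 1×1, so the adaptive pooling simply repeats that single value over the 7×7 grid; the network runs, but it sees far less spatial detail than with 224×224 inputs.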

import torch
import torchvision
from PIL import Image

"""
Test the trained model
"""

# Load the image
img_path = "../dataset/imgs/horse.png"
img = Image.open(img_path)
# PNG images may carry an alpha channel (RGBA); convert to 3-channel RGB
img = img.convert("RGB")
print(img)
# img.show()

# Resize to the 32x32 CIFAR-10 input size and convert to a tensor
transform = torchvision.transforms.Compose([
    torchvision.transforms.Resize((32, 32)),
    torchvision.transforms.ToTensor()
])
img = transform(img)
# print(img.shape)  # torch.Size([3, 32, 32])

# Use the GPU if available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Load the trained model: rebuild the same architecture, then load the weights
model = torchvision.models.vgg16(pretrained=False)
model.classifier[6] = torch.nn.Linear(4096, 10)
modelParam = torch.load("./model/vgg16-model19-CIFAR10.pth", map_location=device)
model.load_state_dict(modelParam)
model.to(device)
# print(model)

# Add a batch dimension: (3, 32, 32) -> (1, 3, 32, 32)
img = torch.reshape(img, (1, 3, 32, 32))
img = img.to(device)

# CIFAR-10 class names, in label-index order (English and Chinese)
target = ["airplane", "automobile", "bird", "cat", "deer", "dog", "frog", "horse", "ship", "truck"]
target_ZH_CN = ["飞机", "汽车", "鸟", "猫", "鹿", "狗", "蛙", "马", "船", "卡车"]

# Inference
model.eval()
with torch.no_grad():
    output = model(img)
pred = output.argmax(1).item()  # index of the highest-scoring class
print(target[pred], target_ZH_CN[pred])
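The script prints only the class with the largest logit. To also report how confident the model is, the logits can be passed through softmax (in PyTorch, torch.softmax(output, dim=1)) and the most likely classes listed. A pure-Python sketch with made-up logits; the helpers softmax and top_k are hypothetical:

```python
import math

CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
           "dog", "frog", "horse", "ship", "truck"]


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, k=3):
    """Return the k most likely (class name, probability) pairs."""
    probs = softmax(logits)
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(CLASSES[i], probs[i]) for i in order[:k]]


# Made-up logits for one image, standing in for model(img)[0]
logits = [0.1, -1.2, 0.5, 1.0, 2.2, 1.1, -0.3, 3.4, -0.8, 0.0]
for name, p in top_k(logits):
    print(f"{name}: {p:.1%}")
```

Softmax preserves the ordering of the logits, so the top class is always the same one argmax(1) picks; the probabilities only add a confidence estimate.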

17-Recommendation: a free GPU