文章目录

MADDPG运行MPE

背景介绍就不讲了,MADDPG的代码从openai的github下载的,需要的自取;MPE的代码同样。

Tip: 由于我是在服务器上跑的,所以不能用environment的render()函数查看图形化界面。否则会报错Cannot connect to “None” from pyglet.gl import *;也可以解决,不过比较麻烦,建议自行百度

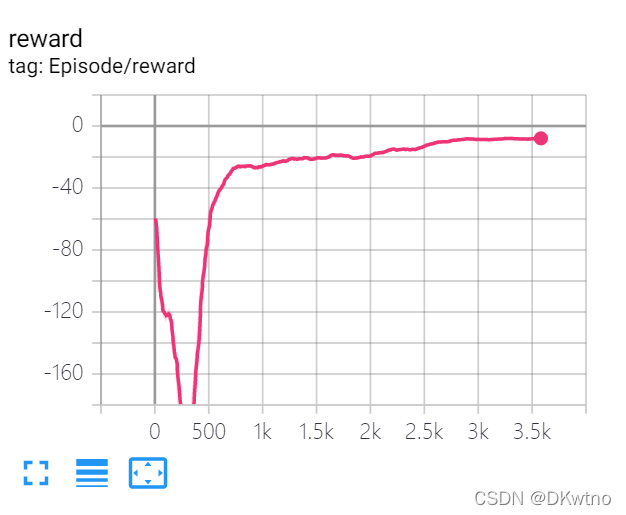

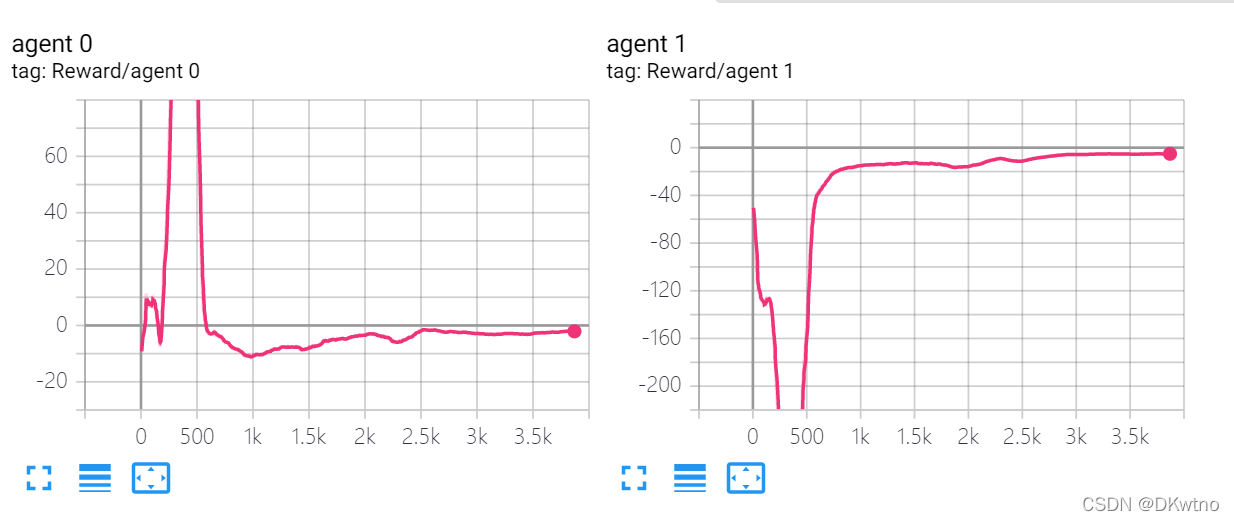

运行结果

分别展示global reward和agent reward。通过tensorboard展示的。可以看出来学得很快。

代码

虽然觉得应该没什么人有需要……贴上来了主部分的代码,其他的replay_buffer之类的在openai的github

import make_env

import numpy as np

from collections import deque

import time

from environment_curve import SimEnv

import numpy as np

import os

import time

import torch

import numpy as np

import torch.nn as nn

import torch.optim as optim

from parameter import parse_args

from replay_buffer import ReplayBuffer

from model_maddpg_curve import openai_actor, openai_critic_not_att

from torch.utils.tensorboard import SummaryWriter

project_name = 'maddpg_curve_na_bb' # not attention

writer = SummaryWriter(project_name)

TRUTH_V = 0

def local_make_env(arglist):

"""

create the environment from script

"""

env = make_env.make_env('simple_push')

return env

def get_trainers(env, obs_shape_n, action_shape_n, arglist):

"""

init the trainers or load the old model

"""

nagents = len(env.agents)

actors_cur = [None for _ in range(nagents)]

critics_cur = [None for _ in range(nagents)]

critics_tar = [None for _ in range(nagents)]

optimizers_c = [None for _ in range(nagents)]

actors_tar = [None for _ in range(nagents)]

optimizers_a = [None for _ in range(nagents)]

for i in range(nagents):

actors_cur[i] = openai_actor(obs_shape_n[i], env.action_space[0].n, arglist).to(arglist.device)

actors_tar[i] = openai_actor(obs_shape_n[i], env.action_space[0].n, arglist).to(arglist.device)

critics_tar[i] = openai_critic_not_att((obs_shape_n), (action_shape_n), arglist).to(arglist.device)

critics_cur[i] = openai_critic_not_att((obs_shape_n), (action_shape_n), arglist).to(arglist.device)

optimizers_c[i] = optim.Adam(critics_cur[i].parameters(), arglist.lr_c)

optimizers_a[i] = optim.Adam(actors_cur[i].parameters(), arglist.lr_a)

actors_tar = update_trainers(actors_cur, actors_tar, 1.0) # update the target par using the cur

critics_tar = update_trainers(critics_cur, critics_tar, 1.0) # update the target par using the cur

return actors_cur, critics_cur, actors_tar, critics_tar, optimizers_a, optimizers_c

def update_trainers(agents_cur, agents_tar, tao):

"""

update the trainers_tar par using the trainers_cur

This way is not the same as copy_, but the result is the same

out:

|agents_tar: the agents with new par updated towards agents_current

"""

for agent_c, agent_t in zip(agents_cur, agents_tar):

key_list = list(agent_c.state_dict().keys())

state_dict_t = agent_t.state_dict()

state_dict_c = agent_c.state_dict()

for key in key_list:

state_dict_t[key] = state_dict_c[key]*tao + \

(1-tao)*state_dict_t[key]

agent_t.load_state_dict(state_dict_t)

return agents_tar

# 重点代码!

def agents_train(arglist, game_step, update_cnt, memory, obs_size, action_size, \

actors_cur, actors_tar, critics_cur, critics_tar, optimizers_a, optimizers_c, activated_agents, env):

"""

use this func to make the "main" func clean

par:

|input: the data for training

|output: the data for next update

"""

# update all trainers, if not in display or benchmark mode

if game_step > arglist.learning_start_step and \

(game_step - arglist.learning_start_step) % arglist.learning_fre == 0:

if update_cnt == 0: print('\r=start training ...'+' '*100)

# update the target par using the cur

update_cnt += 1

for agent_idx, (actor_c, actor_t, critic_c, critic_t, opt_a, opt_c) in \

enumerate(zip(actors_cur, actors_tar, critics_cur, critics_tar, optimizers_a, optimizers_c)):

if agent_idx >= activated_agents or agent_idx < TRUTH_V: continue

if opt_c == None: continue # jump to the next model update

# sample the experience

_obs_n_o, _action_n, _rew_n, _obs_n_n, _done_n, activated_agents_n = memory.sample(arglist.batch_size, agent_idx) # --use the date to update the CRITIC

rew = torch.tensor(_rew_n, device=arglist.device, dtype=torch.float) # set the rew to gpu

done_n = torch.tensor(~_done_n, dtype=torch.float, device=arglist.device) # set the rew to gpu

action_cur_o = torch.from_numpy(_action_n).to(arglist.device, torch.float)

obs_n_o = torch.from_numpy(_obs_n_o).to(arglist.device, torch.float)

obs_n_n = torch.from_numpy(_obs_n_n).to(arglist.device, torch.float)

with torch.no_grad():

# 根据observation返回前x个truth的行为

action_tar = torch.cat([a_t(obs_n_n[:, obs_size[idx][0]:obs_size[idx][1]]).detach().cpu().to(arglist.device, torch.float) for idx, a_t in enumerate(actors_tar)], dim=1)

q_ = critic_t(obs_n_n, action_tar, agent_idx, activated_agents_n).reshape(-1)

tar_value = (q_*arglist.gamma*done_n + rew).detach() # q_*gamma*done + reward

q = critic_c(obs_n_o, action_cur_o, agent_idx, activated_agents_n).reshape(-1) # q

# q2 = critic_c_2(obs_n_o, action_cur_o, agent_idx, activated_agents).reshape(-1) # q

loss_c = torch.nn.MSELoss()(q, tar_value)

writer.add_scalar('Loss/C', loss_c, update_cnt)

opt_c.zero_grad()

loss_c.backward()

a = nn.utils.clip_grad_norm_(critic_c.parameters(), arglist.max_grad_norm)

opt_c.step()

model_out = actor_c(obs_n_o[:, obs_size[agent_idx][0]:obs_size[agent_idx][1]])

policy_c_new = model_out

# update the aciton of this agent

action_cur_o[:, action_size[agent_idx][0]:action_size[agent_idx][1]] = policy_c_new

if np.random.rand() > 0.5:

loss_a = torch.mul(-1, torch.mean(critic_c(obs_n_o, action_cur_o, agent_idx, activated_agents_n)))

opt_a.zero_grad()

(loss_a).backward()

nn.utils.clip_grad_norm_(actor_c.parameters(), arglist.max_grad_norm)

opt_a.step()

writer.add_scalar('Loss/A', loss_a, update_cnt)

torch.cuda.empty_cache()

# save the model to the path_dir ---cnt by update number

if update_cnt > arglist.start_save_model and update_cnt % arglist.fre4save_model == 0:

time_now = time.strftime('%y%m_%d%H%M')

print('=time:{} step:{} save'.format(time_now, game_step))

model_file_dir = os.path.join(arglist.save_dir, '{}_{}_{}_{}'.format( \

arglist.scenario_name, project_name, time_now, game_step))

if not os.path.exists(model_file_dir): # make the path

os.mkdir(model_file_dir)

for agent_idx, (a_c, a_t, c_c, c_t) in \

enumerate(zip(actors_cur, actors_tar, critics_cur, critics_tar)):

c_c = critics_cur[0]

torch.save(a_c, os.path.join(model_file_dir, 'a_c_{}.pt'.format(agent_idx)))

torch.save(a_t, os.path.join(model_file_dir, 'a_t_{}.pt'.format(agent_idx)))

torch.save(c_c, os.path.join(model_file_dir, 'c_c_{}.pt'.format(agent_idx)))

torch.save(c_t, os.path.join(model_file_dir, 'c_t_{}.pt'.format(agent_idx)))

# update the tar par

if np.random.rand()>0.5:

actors_tar = update_trainers(actors_cur, actors_tar, arglist.tao)

critics_tar = update_trainers(critics_cur, critics_tar, arglist.tao)

return update_cnt, actors_cur, actors_tar, critics_cur, critics_tar

def train(arglist):

"""

init the env, agent and train the agents

"""

"""step1: create the environment """

env = local_make_env(arglist)

print('=============================')

print('=1 Env {} is right ...'.format(arglist.scenario_name))

print('=============================')

"""step2: create agents"""

nagents = len(env.agents)

obs_shape_n = [env.observation_space[i].shape[0] for i in range(nagents)]

action_shape_n = [env.action_space[i].n for i in range(nagents)]

actors_cur, critics_cur, actors_tar, critics_tar, optimizers_a, optimizers_c = get_trainers(env, obs_shape_n, action_shape_n, arglist)

memory = ReplayBuffer(arglist.memory_size)

print('=2 The {} agents are inited ...'.format(nagents))

print('=============================')

"""step3: init the pars """

obs_size = []

action_size = []

game_step = 0

cur_episode = 0

update_cnt = 0

episode_cnt = 0

agent_info = [[[]]] # placeholder for benchmarking info

episode_rewards = [0.0] # sum of rewards for all agents

agent_rewards = [[0.0] for _ in range(nagents)] # individual agent reward

head_o, head_a, end_o, end_a = 0, 0, 0, 0

# 这里是对agent的观测空间不一致做的补偿

for obs_shape, action_shape in zip(obs_shape_n, action_shape_n):

end_o = end_o + obs_shape

end_a = end_a + action_shape

range_o = (head_o, end_o)

range_a = (head_a, end_a)

obs_size.append(range_o)

action_size.append(range_a)

head_o = end_o

head_a = end_a

print('=3 starting iterations ...')

print('=============================')

obs_n = env.reset()

for episode_gone in range(cur_episode, arglist.max_episode):

# cal the reward print the debug data

if game_step > 1 and game_step % arglist.per_episode_max_len == 0:

mean_ep_r = round(np.mean(episode_rewards[-200:-1]), 3)

for agent_idx in range(nagents):

mean_ep_ag_re = round(np.mean(agent_rewards[agent_idx][-200:-1]), 3)

writer.add_scalar('Reward/agent {}'.format(agent_idx), mean_ep_ag_re, episode_gone)

writer.add_scalar('Episode/reward', mean_ep_r, episode_gone)

print(" "*43 + 'episode reward:{}'.format(mean_ep_r), end='\r')

if game_step % 10000 == 0:

print('')

print()

print('=Training: steps:{} episode:{}'.format(game_step, episode_gone), end='\r')

for episode_cnt in range(arglist.per_episode_max_len):

# get action

action_n = [agent(torch.from_numpy(obs).to(arglist.device, dtype = torch.float)).detach().cpu().numpy() for agent, obs in zip(actors_cur, obs_n)]

if episode_gone < 20:

action_n = (np.random.rand(nagents, env.action_space[0].n) - 0.5) * 2

# interact with env

# 每个agent同时采取action

new_obs_n, rew_n, done_n, info_n = env.step(action_n)

activated_agent_n = len(env.agents)

# save the experience

memory.add(obs_n, np.concatenate(action_n), rew_n , new_obs_n, done_n, activated_agent_n)

episode_rewards[-1] += np.sum(rew_n)

for i, rew in enumerate(rew_n):

agent_rewards[i][-1] += rew

# train our agents

update_cnt, actors_cur, actors_tar, critics_cur, critics_tar = agents_train(\

arglist, game_step, update_cnt, memory, obs_size, action_size, \

actors_cur, actors_tar, critics_cur, critics_tar, optimizers_a, optimizers_c, activated_agent_n, env)

# update the obs_n

game_step += 1

obs_n = new_obs_n

done = np.any(done_n) # 所有done

terminal = (episode_cnt >= arglist.per_episode_max_len-1)

if done or terminal:

obs_n = env.reset()

agent_info.append([[]])

episode_rewards.append(0)

for a_r in agent_rewards:

a_r.append(0)

continue

writer.close()

writer.flush()

if __name__ == '__main__':

arglist = parse_args()

env = train(arglist)

吐槽

现在在写一篇应用MADRL的double auction的论文……真的是跑到崩溃,无数个模型都不好使,environment改了又改,妈个鸡……测试一下,maddpg,masac,qmix的代码应该都没问题,那只能再想想办法了……希望争取在5.15前写出来