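An earlier post covered crawling Baidu image search results with Scrapy. This post shows how to crawl POI data through the API that Baidu Maps provides and save it to a PostgreSQL database. A data crawler is handled somewhat differently from an image crawler.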

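The Baidu Maps Open Platform (百度地图开放平台) documents the Place search (地点检索) API and describes how to use it in detail. Searches are made mainly by keyword and by POI category. Different keywords can return overlapping records, so deduplicate them by uid.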
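The sketch below shows deduplication by uid. It assumes each search response has already been decoded into a list of result dicts that carry a `uid` key. `merge_by_uid` and the sample records are hypothetical and exist only for illustration.

```python
def merge_by_uid(*result_lists):
    """Merge POI lists from several keyword searches, keeping the first record per uid."""
    seen = set()
    merged = []
    for results in result_lists:
        for poi in results:
            uid = poi.get("uid")
            # Skip records without a uid, or whose uid was already seen
            if uid is None or uid in seen:
                continue
            seen.add(uid)
            merged.append(poi)
    return merged


# Hypothetical results of two keyword searches that overlap on uid "b2"
farms = [{"uid": "a1", "name": "X牧业"}, {"uid": "b2", "name": "Y牧业"}]
ranches = [{"uid": "b2", "name": "Y牧业"}, {"uid": "c3", "name": "Z养殖场"}]
print([p["uid"] for p in merge_by_uid(farms, ranches)])  # ['a1', 'b2', 'c3']
```

In the crawler itself, the database does the same job at insert time through the unique uid column.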

The steps for creating the project were covered in an earlier post, so only the core code is shown here.

Request code, farm.py:

```python
import json

import scrapy

from catchFarm.items import CatchfarmItem


class FarmSpider(scrapy.Spider):
    name = 'farm'
    allowed_domains = ['api.map.baidu.com']

    # query=牧业 (animal husbandry), tag=公司企业 (companies/enterprises);
    # replace YOUR_AK with the ak (access key) you applied for.
    # Note (check the docs): coord_type describes *input* coordinates; results are
    # returned as BD-09 (bd09ll) unless ret_coordtype=gcj02ll is added.
    url = ('https://api.map.baidu.com/place/v2/search?query=牧业&tag=公司企业'
           '&region={}&output=json&ak=YOUR_AK&coord_type=1')

    # One request per city; the cities and their Baidu city codes
    areas = [
        {"name": '郑州市', "code": '268'},
        {"name": '驻马店市', "code": '269'},
        {"name": '安阳市', "code": '267'},
        {"name": '新乡市', "code": '152'},
        {"name": '洛阳市', "code": '153'},
        {"name": '商丘市', "code": '154'},
        {"name": '许昌市', "code": '155'},
        {"name": '濮阳市', "code": '209'},
        {"name": '开封市', "code": '210'},
        {"name": '焦作市', "code": '211'},
        {"name": '三门峡市', "code": '212'},
        {"name": '平顶山市', "code": '213'},
        {"name": '信阳市', "code": '214'},
        {"name": '鹤壁市', "code": '215'},
        {"name": '周口市', "code": '308'},
        {"name": '南阳市', "code": '309'},
        {"name": '漯河市', "code": '344'},
    ]

    index = 0
    start_urls = [url.format(areas[0]['code'])]

    def parse(self, response):
        self.logger.info("Request #%d (%s)", self.index, self.areas[self.index]['name'])
        data = json.loads(response.text)
        # A non-zero status (e.g. an invalid ak) comes back without "results"
        if data.get('status') != 0:
            self.logger.warning("API error: %s", data.get('message'))

        for result in data.get('results', []):
            # Skip malformed records that have no coordinates
            if 'location' not in result:
                continue
            item = CatchfarmItem()
            item['name'] = result['name']
            item['lat'] = result['location']['lat']
            item['lng'] = result['location']['lng']
            item['address'] = result.get('address', '')
            item['province'] = result.get('province', '')
            item['city'] = result.get('city', '')
            # 'area' is the district/county; 'detail' only flags whether detail info exists
            item['area'] = result.get('area', '')
            item['detail'] = result.get('detail')
            item['uid'] = result['uid']
            yield item

        # Move on to the next city; stop after the last one
        self.index += 1
        if self.index >= len(self.areas):
            return
        yield scrapy.Request(self.update_url(), callback=self.parse)

    def update_url(self):
        return self.url.format(self.areas[self.index]['code'])
```
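The spider above requests only the first page of results for each city. The Place API pages its results with the `page_size` parameter (at most 20 records per page) and the `page_num` parameter (0-based). As far as I know, the JSON response also carries a `total` count. The sketch below builds paged request URLs with `urllib.parse.urlencode`, which also percent-encodes the Chinese query values. `build_search_url` and `page_count` are hypothetical helpers, and the page limits should be checked against the current documentation.

```python
import math
from urllib.parse import urlencode

BASE_URL = "https://api.map.baidu.com/place/v2/search"


def build_search_url(query, tag, region, ak, page_num=0, page_size=20):
    """Build a Place API search URL for one page of results (hypothetical helper)."""
    params = {
        "query": query,
        "tag": tag,
        "region": region,
        "output": "json",
        "ak": ak,
        # Assumption from the docs: page_size is capped at 20, page_num starts at 0
        "page_size": page_size,
        "page_num": page_num,
    }
    return BASE_URL + "?" + urlencode(params)


def page_count(total, page_size=20):
    """Number of pages needed to cover `total` records."""
    return math.ceil(total / page_size)


# Usage: URLs for the first two pages of Zhengzhou (city code 268)
for page in range(2):
    print(build_search_url("牧业", "公司企业", "268", "YOUR_AK", page_num=page))
```

In a Scrapy spider, one option is to read `total` from the first page of a city and then yield one request per remaining page, instead of moving straight to the next city.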

item.py

```python
import scrapy


class CatchfarmItem(scrapy.Item):
    # Fields of one POI record
    name = scrapy.Field()
    lat = scrapy.Field()
    lng = scrapy.Field()
    address = scrapy.Field()
    province = scrapy.Field()
    city = scrapy.Field()
    area = scrapy.Field()
    detail = scrapy.Field()
    uid = scrapy.Field()
```

pipelines.py

```python
import psycopg2


class CatchfarmPipeline(object):

    def __init__(self):
        self.conn = None
        self.cursor = None

    def open_spider(self, spider):
        # Open the database connection when the spider starts
        self.conn = psycopg2.connect(database="postgres",
                                     user="postgres",
                                     password="postgres",
                                     host="127.0.0.1",
                                     port="5432")
        self.cursor = self.conn.cursor()

    def process_item(self, item, spider):
        # Skip the row if the uid already exists (needs a unique constraint on uid).
        # Values are passed as query parameters instead of str.format, so quotes in
        # names or addresses cannot break the SQL or inject into it.
        # ST_SetSRID(ST_MakePoint(...)) also works on PostGIS versions older than 3.2,
        # which lack the 3-argument ST_Point.
        sql = ("INSERT INTO postgres.farm_baidu(name, lat, lng, address, province, city, area, uid, geom) "
               "VALUES (%s, %s, %s, %s, %s, %s, %s, %s, ST_SetSRID(ST_MakePoint(%s, %s), 4326)) "
               "ON CONFLICT (uid) DO NOTHING")
        self.cursor.execute(sql, (item['name'], item['lat'], item['lng'], item['address'],
                                  item['province'], item['city'], item['area'], item['uid'],
                                  item['lng'], item['lat']))
        # Commit per item so a crash does not lose everything inserted so far
        self.conn.commit()
        return item

    def close_spider(self, spider):
        self.cursor.close()
        # Close the database connection
        self.conn.close()
```
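Two points matter for the insert step. First, values should be passed as query parameters rather than formatted into the SQL string, because a name containing a single quote otherwise breaks the statement. Second, `ON CONFLICT (uid) DO NOTHING` drops rows whose uid is already stored. Both can be tried without a PostgreSQL server. The sketch below uses Python's built-in `sqlite3` as a stand-in. SQLite uses `?` placeholders where psycopg2 uses `%s`, and it has no PostGIS geometry. The simplified table and the sample records are made up for illustration.

```python
import sqlite3


def save_pois(conn, pois):
    """Insert POIs, silently skipping any uid that is already stored."""
    sql = ("INSERT INTO farm_baidu(name, lat, lng, uid) VALUES (?, ?, ?, ?) "
           "ON CONFLICT (uid) DO NOTHING")
    for poi in pois:
        # Values travel as parameters, so a quote in a name cannot break the SQL
        conn.execute(sql, (poi["name"], poi["lat"], poi["lng"], poi["uid"]))
    conn.commit()


conn = sqlite3.connect(":memory:")
# Simplified stand-in for postgres.farm_baidu; uid must be UNIQUE for ON CONFLICT to apply
conn.execute("CREATE TABLE farm_baidu(name TEXT, lat REAL, lng REAL, uid TEXT UNIQUE)")
save_pois(conn, [
    {"name": "O'Neil Ranch", "lat": 34.75, "lng": 113.62, "uid": "u1"},
    {"name": "O'Neil Ranch", "lat": 34.75, "lng": 113.62, "uid": "u1"},  # duplicate uid
    {"name": "Sample Farm", "lat": 32.98, "lng": 114.02, "uid": "u2"},
])
print(conn.execute("SELECT COUNT(*) FROM farm_baidu").fetchone()[0])  # 2
```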

settings.py

```python
BOT_NAME = 'catchFarm'

SPIDER_MODULES = ['catchFarm.spiders']
NEWSPIDER_MODULE = 'catchFarm.spiders'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
CONCURRENT_REQUESTS = 1

# Delay between requests, in seconds
DOWNLOAD_DELAY = 3

ITEM_PIPELINES = {
    'catchFarm.pipelines.CatchfarmPipeline': 300,
}
```

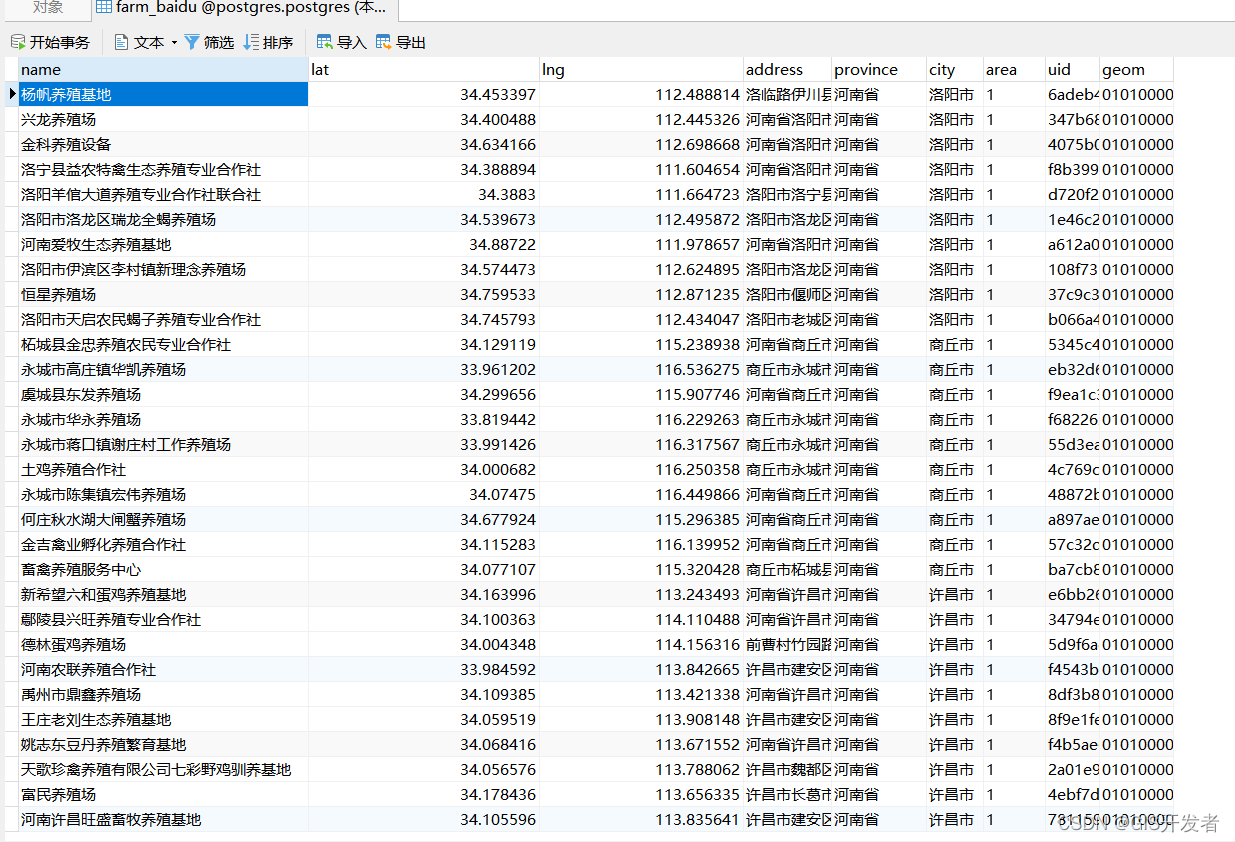

Crawl results