MindSpore 1.6.1, CPU version

Error message:

```text
ValueError: For 'MatMul', the input dimensions must be equal, but got 'x1_col': 256 and 'x2_row': 400. And 'x' shape [8, 256](transpose_a=False), 'y' shape [120, 400](transpose_b=True).
```

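This error means that the tensors passed to the MatMul operator break the rule for matrix multiplication in linear algebra: the number of columns of the first tensor does not equal the number of rows of the second tensor.

At first glance the shapes in the message may seem to contradict this. Look at the attributes transpose_a=False and transpose_b=True. They indicate whether each input is transposed before the multiplication. x ([8, 256]) is used as is, so its column count is 256. y ([120, 400]) is transposed to [400, 120], so its row count is 400. Because 256 ≠ 400, the product is undefined.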
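The rule can be sketched in a few lines of plain Python. `matmul_output_shape` is a hypothetical helper written for illustration only. It is not a MindSpore API and handles only 2-D inputs. It applies the transpose flags first and then compares the inner dimensions, which reproduces the 256 vs 400 mismatch from the error above.

```python
def matmul_output_shape(x_shape, y_shape, transpose_a=False, transpose_b=False):
    """Return the output shape of a 2-D MatMul, or raise ValueError on mismatch.

    Hypothetical illustration: the transpose flags are applied first, then
    x's column count must equal y's row count.
    """
    x_rows, x_cols = x_shape
    if transpose_a:
        x_rows, x_cols = x_cols, x_rows
    y_rows, y_cols = y_shape
    if transpose_b:
        y_rows, y_cols = y_cols, y_rows
    if x_cols != y_rows:
        raise ValueError(f"x1_col {x_cols} != x2_row {y_rows}")
    return (x_rows, y_cols)


# The failing call from the error message: y is used as [400, 120].
try:
    matmul_output_shape((8, 256), (120, 400), transpose_b=True)
except ValueError as err:
    print(err)  # x1_col 256 != x2_row 400

# With a (120, 256) weight, the product is well defined.
print(matmul_output_shape((8, 256), (120, 256), transpose_b=True))  # (8, 120)
```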

Analysis:

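1. Is the image size in the dataset the cause?

This experiment uses the MNIST dataset, loaded with mindspore.dataset.MnistDataset.

The images are 28*28, and neither 256 nor 400 shows up in the error as an image dimension, so the image size looked unrelated at first. The images themselves are fine, but their size does explain the 256. Each valid 5×5 convolution and each 2×2 pooling shrinks a 28×28 image, taking it to 24, 12, 8, and finally 4. The flattened feature vector therefore has 16×4×4 = 256 entries. The value 400 = 16×5×5 is what the same layers produce for the classic 32×32 LeNet-5 input.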
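The arithmetic can be checked with a short sketch. `conv_out` and `lenet_flatten_size` are hypothetical helpers written for illustration. They assume stride-1 "valid" 5×5 convolutions and 2×2 stride-2 pooling with no padding, which matches the layers in the LeNet5 class in the full source code.

```python
def conv_out(size, kernel, stride=1):
    """Output side length of a 'valid' (unpadded) convolution or pooling window."""
    return (size - kernel) // stride + 1


def lenet_flatten_size(image_size, conv2_channels=16):
    """Number of features that reach fc1 for a square input of side image_size.

    Layer order: conv 5x5 -> pool 2x2/2 -> conv 5x5 -> pool 2x2/2 -> flatten.
    """
    size = conv_out(image_size, 5)      # conv1
    size = conv_out(size, 2, stride=2)  # max_pool2d
    size = conv_out(size, 5)            # conv2
    size = conv_out(size, 2, stride=2)  # max_pool2d
    return conv2_channels * size * size


print(lenet_flatten_size(28))  # 256: MNIST images as loaded here
print(lenet_flatten_size(32))  # 400: the classic 32x32 LeNet-5 input
```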

2. On inspection, the number 400 is tied to the fully connected layer in the network.

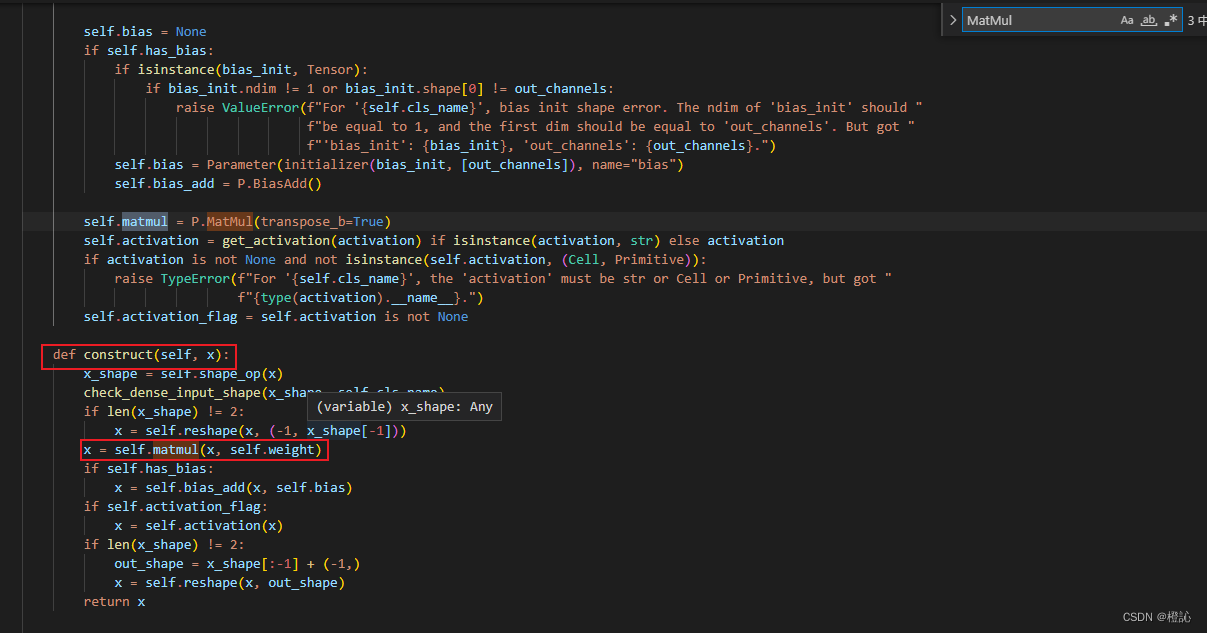

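The figure above shows part of the source code of the fully connected layer (nn.Dense). Its construct method contains this matmul operation:

```python
x = self.matmul(x, self.weight)
```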
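nn.Dense stores its weight with shape (out_channels, in_channels). This is why its matmul is built with transpose_b=True, and why fc1.weight prints as (120, 256) in the output at the end of this post. The pure-Python sketch below shows that layout. `dense_forward` is a hypothetical helper for illustration, not MindSpore's implementation, and it ignores activations and other options.

```python
def dense_forward(x, weight, bias):
    """y = x @ weight.T + bias for a batch of row vectors.

    x:      batch_size lists of in_channels floats
    weight: out_channels lists of in_channels floats (Dense's layout)
    bias:   out_channels floats
    """
    in_channels = len(weight[0])
    for row in x:
        if len(row) != in_channels:
            raise ValueError(
                f"input has {len(row)} features but weight expects {in_channels}"
            )
    # Output unit j is the dot product of the input row with weight[j],
    # which is the same as multiplying by the transposed weight matrix.
    return [
        [sum(a * w for a, w in zip(row, w_j)) + b_j for w_j, b_j in zip(weight, bias)]
        for row in x
    ]


x = [[1.0, 2.0, 3.0]]                        # batch of 1, 3 input features
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # like Dense(3, 2): shape (2, 3)
bias = [0.5, -1.0]
print(dense_forward(x, weight, bias))        # [[1.5, 4.0]]
```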

3. Checking the cause of the error:

```text
ValueError: For 'MatMul', the input dimensions must be equal, but got 'x1_col': 256 and 'x2_row': 400. And 'x' shape [8, 256](transpose_a=False), 'y' shape [120, 400](transpose_b=True).
```

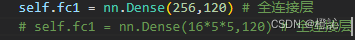

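The size of the first fully connected layer is wrong. The original code defines fc1 as nn.Dense(16*5*5, 120), which gives a weight of shape [120, 400]. The flattened input only has 256 features, so change the 400 in the original code and make fc1 (256, 120), i.e. nn.Dense(256, 120).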

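If you want to reproduce this, read on.

(I ran this in a Jupyter notebook inside VS Code. The output may differ if you run it another way.)

Download the dataset: open a shell tool (e.g. MobaXterm) in the directory where you will run the code, then run the following commands: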

```shell
mkdir -p ./datasets/MNIST_Data/train ./datasets/MNIST_Data/test
wget -NP ./datasets/MNIST_Data/train https://mindspore-website.obs.myhuaweicloud.com/notebook/datasets/mnist/train-labels-idx1-ubyte
wget -NP ./datasets/MNIST_Data/train https://mindspore-website.obs.myhuaweicloud.com/notebook/datasets/mnist/train-images-idx3-ubyte
wget -NP ./datasets/MNIST_Data/test https://mindspore-website.obs.myhuaweicloud.com/notebook/datasets/mnist/t10k-labels-idx1-ubyte
wget -NP ./datasets/MNIST_Data/test https://mindspore-website.obs.myhuaweicloud.com/notebook/datasets/mnist/t10k-images-idx3-ubyte
tree ./datasets/MNIST_Data
```
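If `tree` is not available, a small stdlib-only check can confirm that all four files are where the training script's DATA_DIR expects them. `missing_mnist_files` and `EXPECTED_FILES` are hypothetical names used for illustration. The layout is the one created by the mkdir/wget commands above.

```python
import os

# Layout created by the mkdir/wget commands above.
EXPECTED_FILES = {
    "train": ["train-images-idx3-ubyte", "train-labels-idx1-ubyte"],
    "test": ["t10k-images-idx3-ubyte", "t10k-labels-idx1-ubyte"],
}


def missing_mnist_files(root="./datasets/MNIST_Data"):
    """Return the paths of expected MNIST files that do not exist under root."""
    missing = []
    for split, names in EXPECTED_FILES.items():
        for name in names:
            path = os.path.join(root, split, name)
            if not os.path.isfile(path):
                missing.append(path)
    return missing


if __name__ == "__main__":
    problems = missing_mnist_files()
    print("all files present" if not problems else f"missing: {problems}")
```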

Full source code:

```python
import os
import argparse

import numpy as np
import mindspore
import mindspore.nn as nn
from mindspore import Tensor, Model
from mindspore import dtype as mstype
from mindspore.train.callback import LossMonitor
import mindspore.dataset.transforms.c_transforms as C
import mindspore.dataset.vision.c_transforms as CV
import mindspore.dataset as ds

parser = argparse.ArgumentParser(description='MindSpore LeNet Example')
parser.add_argument('--device_target', type=str, default="CPU", choices=['Ascend', 'GPU', 'CPU'])

DATA_DIR = "./datasets/MNIST_Data/train"
# DATA_DIR = "./datasets/cifar-10-batches-bin/train"
if not os.path.exists(DATA_DIR):
    os.makedirs(DATA_DIR)

# Sampler
sampler = ds.SequentialSampler(num_samples=5)
# dataset = ds.Cifar100Dataset(DATA_DIR, sampler=sampler)
dataset = ds.MnistDataset(DATA_DIR, sampler=sampler)  # MNIST dataset
# dataset = ds.Cifar10Dataset(DATA_DIR, sampler=sampler)  # CIFAR-10 dataset


class LeNet5(nn.Cell):
    def __init__(self, num_class=10, num_channel=1):
        # Initialize the network
        super(LeNet5, self).__init__()
        # Define the operations the network needs
        self.conv1 = nn.Conv2d(num_channel, 6, 5, pad_mode='valid')  # convolution
        self.conv2 = nn.Conv2d(6, 16, 5, pad_mode='valid')
        self.fc1 = nn.Dense(256, 120)  # fully connected layer: 16*4*4 = 256 for 28x28 input
        # self.fc1 = nn.Dense(16*5*5, 120)  # original: 400 inputs, matches 32x32 input only
        self.fc2 = nn.Dense(120, 84)
        self.fc3 = nn.Dense(84, num_class)
        self.max_pool2d = nn.MaxPool2d(kernel_size=2, stride=2)  # max pooling (downsampling)
        self.relu = nn.ReLU()  # activation function
        # Flatten keeps the batch dimension and merges the rest into one:
        # (N, C, H, W) -> (N, C*H*W)
        self.flatten = nn.Flatten()

    # Define the forward pass
    def construct(self, x):
        x = self.conv1(x)
        x = self.relu(x)
        x = self.max_pool2d(x)
        x = self.conv2(x)
        x = self.relu(x)
        x = self.max_pool2d(x)
        x = self.flatten(x)
        # Debug: print(x.shape) would show (batch, 256) for 28x28 input.
        # The original also printed self.shape and self.weight, but LeNet5
        # defines neither attribute, so those debug lines are removed.
        x = self.fc1(x)
        x = self.relu(x)
        x = self.fc2(x)
        x = self.relu(x)
        x = self.fc3(x)
        return x


# Initialize the network
net = LeNet5()
for m in net.parameters_and_names():
    print(m)

# Hyperparameters
epoch = 10
batch_size = 64
learning_rate = 0.1

# Build the dataset
sampler = ds.SequentialSampler(num_samples=128)
dataset = ds.MnistDataset(DATA_DIR, sampler=sampler)

# Data type conversion
type_cast_op_image = C.TypeCast(mstype.float32)
type_cast_op_label = C.TypeCast(mstype.int32)

# Layout conversion: MNIST images are HWC (28, 28, 1); Conv2d expects CHW
HWC2CHW = CV.HWC2CHW()

# Apply the transforms and batch the data
dataset = dataset.map(operations=[type_cast_op_image, HWC2CHW], input_columns="image")
dataset = dataset.map(operations=type_cast_op_label, input_columns="label")
dataset = dataset.batch(batch_size)

print("\n")

# Print the trainable parameters
for p in net.trainable_params():
    print(p)

# Pass in the hyperparameters defined above
optim = nn.SGD(params=net.trainable_params(), learning_rate=learning_rate)  # optimizer: SGD updates the weights from the gradients
loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True, reduction='mean')  # cross-entropy loss

# Start training
model = Model(net, loss_fn=loss, optimizer=optim)
model.train(epoch=epoch, train_dataset=dataset, callbacks=LossMonitor())
```

Output:

```text
[WARNING] ME(14204:8992,MainProcess):2022-04-29-13:57:28.537.104 [mindspore\train\model.py:536] The CPU cannot support dataset sink mode currently.So the training process will be performed with dataset not sink.
('conv1.weight', Parameter (name=conv1.weight, shape=(6, 1, 5, 5), dtype=Float32, requires_grad=True))
('conv2.weight', Parameter (name=conv2.weight, shape=(16, 6, 5, 5), dtype=Float32, requires_grad=True))
('fc1.weight', Parameter (name=fc1.weight, shape=(120, 256), dtype=Float32, requires_grad=True))
('fc1.bias', Parameter (name=fc1.bias, shape=(120,), dtype=Float32, requires_grad=True))
('fc2.weight', Parameter (name=fc2.weight, shape=(84, 120), dtype=Float32, requires_grad=True))
('fc2.bias', Parameter (name=fc2.bias, shape=(84,), dtype=Float32, requires_grad=True))
('fc3.weight', Parameter (name=fc3.weight, shape=(10, 84), dtype=Float32, requires_grad=True))
('fc3.bias', Parameter (name=fc3.bias, shape=(10,), dtype=Float32, requires_grad=True))
Parameter (name=conv1.weight, shape=(6, 1, 5, 5), dtype=Float32, requires_grad=True)
Parameter (name=conv2.weight, shape=(16, 6, 5, 5), dtype=Float32, requires_grad=True)
Parameter (name=fc1.weight, shape=(120, 256), dtype=Float32, requires_grad=True)
Parameter (name=fc1.bias, shape=(120,), dtype=Float32, requires_grad=True)
Parameter (name=fc2.weight, shape=(84, 120), dtype=Float32, requires_grad=True)
Parameter (name=fc2.bias, shape=(84,), dtype=Float32, requires_grad=True)
Parameter (name=fc3.weight, shape=(10, 84), dtype=Float32, requires_grad=True)
Parameter (name=fc3.bias, shape=(10,), dtype=Float32, requires_grad=True)
epoch: 1 step: 1, loss is 2.302509069442749
epoch: 1 step: 2, loss is 2.302182197570801
epoch: 2 step: 1, loss is 2.3014421463012695
epoch: 2 step: 2, loss is 2.299675941467285
epoch: 3 step: 1, loss is 2.3004305362701416
epoch: 3 step: 2, loss is 2.2972540855407715
epoch: 4 step: 1, loss is 2.2994704246520996
...
epoch: 9 step: 1, loss is 2.2953572273254395
epoch: 9 step: 2, loss is 2.284273386001587
epoch: 10 step: 1, loss is 2.294651746749878
epoch: 10 step: 2, loss is 2.282334089279175
```