I. Concepts: tensors and operators

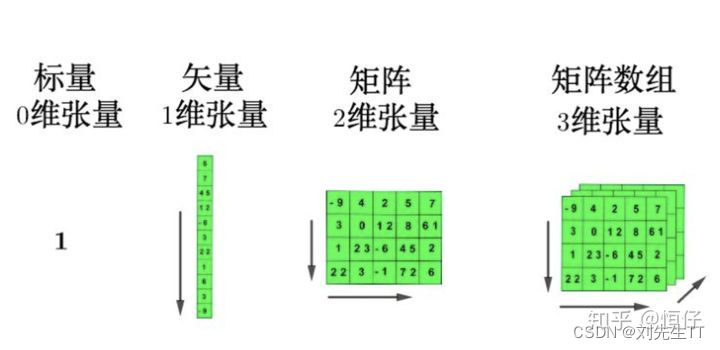

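Tensor: like a NumPy ndarray, a tensor is a multi-dimensional array.

The dimensions of a tensor mean the same thing as the dimensions of an array, as illustrated in the figure above.

Example: an RGB image is a three-dimensional tensor. Its three dimensions are height, width, and color channel, so each pixel holds three values (R, G, B).

A tensor has three attributes:

rank: the number of dimensions

shape: the size along each dimension (for a 2-D tensor, the number of rows and columns)

type: the data type of the tensor's elements

How NumPy arrays and tensors are related: the two can be converted into each other. A CPU tensor created with torch.from_numpy, or an array obtained with Tensor.numpy(), shares memory with its source; torch.tensor copies the data instead.

Differences:

1. NumPy defaults to float64 for floating-point data and to the platform integer type for integers (int32 on the Windows setup used for the outputs below, int64 on most other platforms); tensors default to float32 and int64.

2. NumPy arrays run only on the CPU, while tensors can run on either the CPU or a GPU.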

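Operators

In deep learning, an operator is a function that defines a specific computation on tensors, such as addition or matrix multiplication. Put simply, an operator is an algorithm packaged as a callable function.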

II. Tensors

1.2 Tensors

1.2.1 Creating tensors

Build the data as a NumPy array, then convert it to the corresponding tensor.

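1.2.1.1 Creating a tensor from given data

Put the data into a NumPy array, then convert the array to a tensor.

import torch
import numpy as np

# create an array num_1
num_1 = np.array([2.3, 3.3, 4.0])
# convert num_1 to a tensor; the array's float64 dtype is kept
num_1_to_tensor = torch.tensor(num_1)
print(num_1_to_tensor)

'''
2.3, 3.3 and 4.0 are the input values
tensor([2.3000, 3.3000, 4.0000], dtype=torch.float64)
'''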

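1.2.1.2 Creating a tensor with a given shape

Create a tensor of the requested shape directly, here with torch.ones (no NumPy array is needed).

# create a tensor with three rows and four columns
num_2 = torch.ones([3, 4])
print(num_2)

'''
tensor([[1., 1., 1., 1.],
        [1., 1., 1., 1.],
        [1., 1., 1., 1.]])
'''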

1.2.1.3 Creating a tensor over a given interval

Create a tensor whose values cover a given interval, using torch.arange or torch.linspace.

import torch
import numpy as np

# create tensors over an interval
# arange: starts at 1, stops before 5 (5 is excluded)
arange_tensor = torch.arange(start=1, end=5, step=1)
# linspace: starts at 1, ends at 5 (5 is included); steps is the number of evenly spaced values
linspace_tensor = torch.linspace(start=1, end=5, steps=5)
print('arange Tensor:', arange_tensor)
print('linspace Tensor:', linspace_tensor)

'''
arange Tensor: tensor([1, 2, 3, 4])
With steps=5:
linspace Tensor: tensor([1., 2., 3., 4., 5.])
With steps=6:
linspace Tensor: tensor([1.0000, 1.8000, 2.6000, 3.4000, 4.2000, 5.0000])
'''

1.2.2 Tensor attributes

1.2.2.1 Tensor shape

import torch
import numpy as np

# create a tensor of shape 2 x 3 x 4 x 5
ndim_4_Tensor = torch.ones([2, 3, 4, 5])
# number of dimensions of the tensor
print("Number of dimensions:", ndim_4_Tensor.ndim)
# shape of the tensor
print("Shape of Tensor:", ndim_4_Tensor.shape)
# size of dimension 0
print("Elements number along axis 0 of Tensor:", ndim_4_Tensor.shape[0])
# size of the last dimension
print("Elements number along the last axis of Tensor:", ndim_4_Tensor.shape[-1])
# total number of elements; in PyTorch, size is a method that returns the shape,
# so the element count comes from numel()
print('Number of elements in Tensor:', ndim_4_Tensor.numel())

'''
Number of dimensions: 4
Shape of Tensor: torch.Size([2, 3, 4, 5])
Elements number along axis 0 of Tensor: 2
Elements number along the last axis of Tensor: 5
Number of elements in Tensor: 120
'''

1.2.2.2 Changing the shape

# build the array
num3_array = np.array([[[1, 2, 3, 4, 5],
                        [6, 7, 8, 9, 10]],
                       [[11, 12, 13, 14, 15],
                        [16, 17, 18, 19, 20]],
                       [[21, 22, 23, 24, 25],
                        [26, 27, 28, 29, 30]]])
num3_tensor = torch.tensor(num3_array)

# change the shape of the tensor; the total number of elements must stay the same
# example: 3*2*5 == 2*3*5
num3_tensor_reshape = torch.reshape(num3_tensor, [2, 3, 5])
print('Before reshape:', num3_tensor.shape)
print(num3_tensor)
print('After reshape:', num3_tensor_reshape.shape)
print(num3_tensor_reshape)

'''
Before reshape: torch.Size([3, 2, 5])
tensor([[[ 1,  2,  3,  4,  5],
         [ 6,  7,  8,  9, 10]],

        [[11, 12, 13, 14, 15],
         [16, 17, 18, 19, 20]],

        [[21, 22, 23, 24, 25],
         [26, 27, 28, 29, 30]]], dtype=torch.int32)
After reshape: torch.Size([2, 3, 5])
tensor([[[ 1,  2,  3,  4,  5],
         [ 6,  7,  8,  9, 10],
         [11, 12, 13, 14, 15]],

        [[16, 17, 18, 19, 20],
         [21, 22, 23, 24, 25],
         [26, 27, 28, 29, 30]]], dtype=torch.int32)
'''

# the tensor can also be reshaped to 2 dimensions
# 2*15 == 3*2*5 == 2*3*5
num3_tensor_reshape_to_2dim = torch.reshape(num3_tensor, [2, 15])
print('After reshape to 2 dim:', num3_tensor_reshape_to_2dim.shape)
print(num3_tensor_reshape_to_2dim)

'''
After reshape to 2 dim: torch.Size([2, 15])
tensor([[ 1,  2,  3,  4,  5,  6,  7,  8,  9, 10, 11, 12, 13, 14, 15],
        [16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30]],
       dtype=torch.int32)
'''

My own way of thinking about reshape: the tensor is first flattened into a 1-D array in row-major order (the last dimension varies fastest), and the flat sequence is then refilled into the new shape in the same order.
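The flatten-then-refill view of reshape can be checked directly. The sketch below is illustrative only: it builds its own 3 x 2 x 5 tensor holding 1 to 30 with torch.arange (an assumption standing in for num3_tensor), flattens it, refills it into the new shape, and compares the result with torch.reshape.

```python
import torch

# A 3 x 2 x 5 tensor holding 1..30, standing in for num3_tensor.
x = torch.arange(1, 31).reshape(3, 2, 5)

# Step 1: flatten in row-major order (the last dimension varies fastest).
flat = x.flatten()

# Step 2: refill the flat sequence into the new shape.
refilled = flat.view(2, 3, 5)

# torch.reshape does both steps at once.
direct = torch.reshape(x, [2, 3, 5])

print(torch.equal(refilled, direct))  # True
print(direct[0, 2])                   # the third run of five values: 11..15
```

Because only the grouping changes, the element order of the flattened tensor is identical before and after the reshape.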

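1.2.2.3 Tensor data types

If no data type is specified when a tensor is created from Python values, integers become int64 and floating-point numbers become float32.

print("int type", torch.tensor(1).dtype)
print("float type", torch.tensor(1.0).dtype)

'''
int type torch.int64
float type torch.float32
'''

# The PyTorch counterpart of Paddle's cast is Tensor.to(dtype) (with shortcuts such as Tensor.float()).
# The dtype can also be fixed when the tensor is created, as here:
print("int to float", torch.ones([2, 2], dtype=torch.float32))

'''
int to float tensor([[1., 1.],
        [1., 1.]])
'''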
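Converting the dtype of an existing tensor is also possible. The following is a minimal sketch using Tensor.to, Tensor.float, and Tensor.type; the variable names are made up for illustration.

```python
import torch

# An integer tensor to start from (int64 is requested explicitly).
ints = torch.ones([2, 2], dtype=torch.int64)

# General form: pass the target dtype to Tensor.to.
as_float = ints.to(torch.float32)

# Shortcut method for float32.
as_float_short = ints.float()

# Tensor.type also accepts a dtype.
as_double = ints.type(torch.float64)

print(ints.dtype, as_float.dtype, as_float_short.dtype, as_double.dtype)
```

Each call returns a new tensor; the original ints tensor keeps its int64 dtype.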

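1.2.2.4 Tensor device placement

My machine does not have the GPU build of PyTorch installed. I recommend running this part on Kaggle, which offers a free GPU quota and is much faster.

import numpy as np
import torch

# build the array
num1 = np.array([[1, 2, 3], [4, 5, 6]])
# convert to a tensor stored on the CPU
num1_cpu = torch.tensor(num1, device=torch.device("cpu"))
print("The position of num1_tensor(CPU):", num1_cpu.device)
# convert to a tensor stored on the GPU (needs a CUDA build of PyTorch and a GPU)
num1_gpu = torch.tensor(num1, device=torch.device("cuda"))
print("The position of num1_tensor(GPU):", num1_gpu.device)

'''
The position of num1_tensor(CPU): cpu
The position of num1_tensor(GPU): cuda:0
'''

The AI Studio tutorial also covers pinned memory. Pinned memory is not a torch.device option; in PyTorch a CPU tensor is copied into pinned (page-locked) memory with Tensor.pin_memory(). This example therefore only creates tensors on the CPU and on the GPU, and the GPU part was run on Kaggle.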
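Requesting the "cuda" device raises an error on a machine without a usable GPU. A common pattern, sketched here with illustrative variable names, is to pick the device at run time with torch.cuda.is_available() so the same script runs on both kinds of machine.

```python
import numpy as np
import torch

# Use the GPU when one is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

num1 = np.array([[1, 2, 3], [4, 5, 6]])
num1_tensor = torch.tensor(num1, device=device)
print("device in use:", num1_tensor.device)

# Tensor.to moves (copies) a tensor to another device.
on_cpu = num1_tensor.to("cpu")
print("after .to('cpu'):", on_cpu.device)

# Pinned memory only makes sense with CUDA, so guard the call.
if torch.cuda.is_available():
    pinned = on_cpu.pin_memory()
    print("pinned:", pinned.is_pinned())
```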

1.2.3 Converting between tensors and NumPy arrays (conversion works in both directions)

# convert a NumPy array to a tensor
num_numpy = np.array([[1, 2, 3], [4, 5, 6]])
num_numpy_to_tensor = torch.tensor(num_numpy)
print('numpy convert to tensor (constructor):', num_numpy_to_tensor)
# the function torch.from_numpy also works
print('numpy convert to tensor (function):', torch.from_numpy(num_numpy))
# convert the tensor back to a NumPy array with Tensor.numpy()
print('tensor convert to numpy:', num_numpy_to_tensor.numpy())

'''
numpy convert to tensor (constructor): tensor([[1, 2, 3],
        [4, 5, 6]], dtype=torch.int32)
numpy convert to tensor (function): tensor([[1, 2, 3],
        [4, 5, 6]], dtype=torch.int32)
tensor convert to numpy: [[1 2 3]
 [4 5 6]]
'''
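The two conversion routes differ in whether memory is shared. The sketch below, with illustrative variable names, modifies the NumPy array after conversion to show that torch.from_numpy shares memory with the array while torch.tensor makes a copy.

```python
import numpy as np
import torch

arr = np.array([[1, 2, 3], [4, 5, 6]])

shared = torch.from_numpy(arr)  # shares memory with arr
copied = torch.tensor(arr)      # independent copy of the data

# Change the array after the conversion.
arr[0, 0] = 100
print(shared[0, 0].item())  # 100: the shared tensor sees the change
print(copied[0, 0].item())  # 1: the copy does not

# Tensor.numpy() on a CPU tensor shares memory in the other direction.
back = shared.numpy()
back[1, 2] = -1
print(arr[1, 2])  # -1
```

Use torch.tensor (or .clone()) when later changes to the array must not leak into the tensor.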

1.2.4 Accessing tensors

1.2.4.1 Indexing and slicing

# create the tensor
num_numpy = np.array([[1, 2, 3], [4, 5, 6]])
num_numpy_to_tensor = torch.tensor(num_numpy)

# indexing works as for ordinary arrays: use the subscript of the element
for i in range(0, len(num_numpy_to_tensor)):
    print(num_numpy_to_tensor[i])

# slicing also works as for ordinary arrays: start:stop:step
print(num_numpy_to_tensor[0:1:])

'''
tensor([1, 2, 3], dtype=torch.int32)
tensor([4, 5, 6], dtype=torch.int32)
tensor([[1, 2, 3]], dtype=torch.int32)
'''

1.2.4.2 Accessing elements

# access parts of the tensor (indices start at 0)
num_numpy = np.array([[1, 2, 3], [4, 5, 6]])
num_numpy_to_tensor = torch.tensor(num_numpy)
print("Row 0:", num_numpy_to_tensor[0])
print("Row 1, column 1:", num_numpy_to_tensor[1, 1])
print("All rows from index 1 onward:", num_numpy_to_tensor[1:])

'''
Row 0: tensor([1, 2, 3], dtype=torch.int32)
Row 1, column 1: tensor(5, dtype=torch.int32)
All rows from index 1 onward: tensor([[4, 5, 6]], dtype=torch.int32)
'''

1.2.4.3 Modifying a tensor

# change one specific element of the tensor
print('before change', num_numpy_to_tensor)
# set the element at row 1, column 1 to 2
num_numpy_to_tensor[1, 1] = 2
print('after change', num_numpy_to_tensor)

'''
before change tensor([[1, 2, 3],
        [4, 5, 6]], dtype=torch.int32)
after change tensor([[1, 2, 3],
        [4, 2, 6]], dtype=torch.int32)
'''

1.2.5 Tensor operations

1.2.5.1 Math operations

num1 = np.array([[1, -1, 2], [2, -3, 4.1]])
num1_tensor = torch.tensor(num1)

# every call below is applied element by element
print('absolute value (abs):', num1_tensor.abs())
# floor() rounds each element down, not up
print('round down (floor):', num1_tensor.floor())
# round() rounds to the nearest integer (ties go to the nearest even integer)
print('round to nearest:', num1_tensor.round())
print('exponential with base e:', num1_tensor.exp())
# log() and sqrt() return nan for the negative elements
print('natural logarithm:', num1_tensor.log())
print('reciprocal:', num1_tensor.reciprocal())
print('square:', num1_tensor.square())
print('square root:', num1_tensor.sqrt())
print('sine:', num1_tensor.sin())
print('cosine:', num1_tensor.cos())
print('add 1:', num1_tensor.add(1))
print('subtract 1:', num1_tensor.subtract(1))
print('multiply by 2:', num1_tensor.multiply(2))
print('divide by 2:', num1_tensor.divide(2))
print('raise to the power 2:', num1_tensor.pow(2))

'''
absolute value (abs): tensor([[1.0000, 1.0000, 2.0000],
        [2.0000, 3.0000, 4.1000]], dtype=torch.float64)
round down (floor): tensor([[ 1., -1.,  2.],
        [ 2., -3.,  4.]], dtype=torch.float64)
round to nearest: tensor([[ 1., -1.,  2.],
        [ 2., -3.,  4.]], dtype=torch.float64)
exponential with base e: tensor([[2.7183e+00, 3.6788e-01, 7.3891e+00],
        [7.3891e+00, 4.9787e-02, 6.0340e+01]], dtype=torch.float64)
natural logarithm: tensor([[0.0000,    nan, 0.6931],
        [0.6931,    nan, 1.4110]], dtype=torch.float64)
reciprocal: tensor([[ 1.0000, -1.0000,  0.5000],
        [ 0.5000, -0.3333,  0.2439]], dtype=torch.float64)
square: tensor([[ 1.0000,  1.0000,  4.0000],
        [ 4.0000,  9.0000, 16.8100]], dtype=torch.float64)
square root: tensor([[1.0000,    nan, 1.4142],
        [1.4142,    nan, 2.0248]], dtype=torch.float64)
sine: tensor([[ 0.8415, -0.8415,  0.9093],
        [ 0.9093, -0.1411, -0.8183]], dtype=torch.float64)
cosine: tensor([[ 0.5403,  0.5403, -0.4161],
        [-0.4161, -0.9900, -0.5748]], dtype=torch.float64)
add 1: tensor([[ 2.0000,  0.0000,  3.0000],
        [ 3.0000, -2.0000,  5.1000]], dtype=torch.float64)
subtract 1: tensor([[ 0.0000, -2.0000,  1.0000],
        [ 1.0000, -4.0000,  3.1000]], dtype=torch.float64)
multiply by 2: tensor([[ 2.0000, -2.0000,  4.0000],
        [ 4.0000, -6.0000,  8.2000]], dtype=torch.float64)
divide by 2: tensor([[ 0.5000, -0.5000,  1.0000],
        [ 1.0000, -1.5000,  2.0500]], dtype=torch.float64)
raise to the power 2: tensor([[ 1.0000,  1.0000,  4.0000],
        [ 4.0000,  9.0000, 16.8100]], dtype=torch.float64)
'''

1.2.5.2 Logical operations

num_numpy = np.array([[1, 2, 3], [4, 5, 6]])
num_numpy_to_tensor = torch.tensor(num_numpy)

print('is finite:', num_numpy_to_tensor.isfinite())
# equal() compares two whole tensors and returns a single Python bool;
# the comparisons after it are element-wise and return a boolean tensor of the same shape
print("the two tensors are equal:", num_numpy_to_tensor.equal(num_numpy_to_tensor))
print("elements of x not equal to elements of y:", num_numpy_to_tensor.not_equal(num_numpy_to_tensor))
print("elements of x less than elements of y:", num_numpy_to_tensor.less(num_numpy_to_tensor))
print("elements of x less than or equal to elements of y:", num_numpy_to_tensor.less_equal(num_numpy_to_tensor))
print("elements of x greater than elements of y:", num_numpy_to_tensor.greater(num_numpy_to_tensor))
print("elements of x greater than or equal to elements of y:", num_numpy_to_tensor.greater_equal(num_numpy_to_tensor))
# allclose() also returns a single bool: True when all elements are within tolerance
print("all elements are close:", num_numpy_to_tensor.allclose(num_numpy_to_tensor))
# Paddle's x.less_than(y) corresponds to x.less(y) above

'''
is finite: tensor([[True, True, True],
        [True, True, True]])
the two tensors are equal: True
elements of x not equal to elements of y: tensor([[False, False, False],
        [False, False, False]])
elements of x less than elements of y: tensor([[False, False, False],
        [False, False, False]])
elements of x less than or equal to elements of y: tensor([[True, True, True],
        [True, True, True]])
elements of x greater than elements of y: tensor([[False, False, False],
        [False, False, False]])
elements of x greater than or equal to elements of y: tensor([[True, True, True],
        [True, True, True]])
all elements are close: True
'''
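The difference between a whole-tensor comparison and an element-wise comparison is easier to see when the two tensors actually differ. A small sketch with made-up values:

```python
import torch

a = torch.tensor([[1, 2, 3], [4, 5, 6]])
b = torch.tensor([[1, 2, 0], [4, 5, 6]])  # differs from a in one position

# torch.equal: one Python bool for the whole pair of tensors.
whole = torch.equal(a, b)

# torch.eq: a boolean tensor with one entry per element.
elementwise = torch.eq(a, b)

print(whole)
print(elementwise)

# Reducing the element-wise result with all() gives the same answer as torch.equal.
print(elementwise.all().item())
```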

1.2.5.3 Matrix operations

num_numpy = np.array([[1, 2.0, 3], [4, 5, 6]])
num_numpy_to_tensor = torch.tensor(num_numpy)

# transpose of the matrix
print(num_numpy_to_tensor.t())
# swap dimension 0 and dimension 1
print(torch.transpose(num_numpy_to_tensor, 1, 0))
# Frobenius norm of the matrix
print(torch.norm(num_numpy_to_tensor, p="fro"))
# 2-norm of (x - y); here y is x itself, so the result is 0
print(num_numpy_to_tensor.dist(num_numpy_to_tensor, p=2))
# matrix multiplication
print(num_numpy_to_tensor.matmul(num_numpy_to_tensor.t()))

'''
tensor([[1., 4.],
        [2., 5.],
        [3., 6.]], dtype=torch.float64)
tensor([[1., 4.],
        [2., 5.],
        [3., 6.]], dtype=torch.float64)
tensor(9.5394, dtype=torch.float64)
tensor(0., dtype=torch.float64)
tensor([[14., 32.],
        [32., 77.]], dtype=torch.float64)
'''
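The Frobenius norm printed above (9.5394) is the square root of the sum of squared elements, here sqrt(1 + 4 + 9 + 16 + 25 + 36) = sqrt(91). The sketch below recomputes it by hand and compares it with torch.linalg.norm, which is used here as an alternative to the torch.norm call in the original code.

```python
import torch

m = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], dtype=torch.float64)

# By hand: square every element, sum, take the square root.
manual = m.square().sum().sqrt()

# Library call with the Frobenius norm requested explicitly.
builtin = torch.linalg.norm(m, ord="fro")

print(manual.item(), builtin.item())
print(torch.allclose(manual, builtin))
```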

1.2.5.4 Broadcasting

x_numpy = np.ones((2, 3, 1, 5))
y_numpy = np.ones((3, 4, 1))
x_tensor = torch.tensor(x_numpy)
y_tensor = torch.tensor(y_numpy)
z = x_tensor + y_tensor
print('broadcasting with two different shape tensor:', z.shape)

'''
broadcasting with two different shape tensor: torch.Size([2, 3, 4, 5])
'''

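The output shows that x and y can be broadcast: comparing the two shapes from the last dimension backwards, every pair of sizes is either equal or contains a 1 (a missing dimension counts as 1). If some pair had two different sizes and neither were 1, for example 4 and 6, the tensors could not be broadcast.

Rules for computing the broadcast result

For two tensors that can be broadcast, the shape of the result is computed as follows:

1) If the two shapes have different lengths, prepend 1s to the shorter shape until both shapes have the same length.

2) Once the lengths match, the size of the result in each dimension is the larger of the two sizes in that dimension.

Take x with shape [2, 3, 1, 5] and y with shape [3, 4, 1] as an example. y has the shorter shape, so it is padded to [1, 3, 4, 1], and the two shapes are then compared dimension by dimension. In the first dimension x has size 2 and y has size 1, so the result has size 2 there. Continuing in the same way for every dimension gives the result shape [2, 3, 4, 5].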
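The two rules can be written down as a few lines of plain Python. This is a minimal sketch; broadcast_shape is a hypothetical helper written for illustration, not a PyTorch function.

```python
def broadcast_shape(shape_x, shape_y):
    """Return the broadcast result shape, or raise ValueError if incompatible."""
    ndim = max(len(shape_x), len(shape_y))

    # Rule 1: prepend 1s to the shorter shape until the lengths match.
    padded_x = [1] * (ndim - len(shape_x)) + list(shape_x)
    padded_y = [1] * (ndim - len(shape_y)) + list(shape_y)

    result = []
    for dx, dy in zip(padded_x, padded_y):
        # Sizes must be equal, or one of them must be 1.
        if dx != dy and dx != 1 and dy != 1:
            raise ValueError(f"cannot broadcast sizes {dx} and {dy}")
        # Rule 2: a size of 1 stretches to match the other size.
        result.append(dy if dx == 1 else dx)
    return result


print(broadcast_shape([2, 3, 1, 5], [3, 4, 1]))  # [2, 3, 4, 5]
```

Calling the helper with shapes whose last sizes are 4 and 6 raises ValueError, which mirrors the case where broadcasting is not allowed.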

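The matrix multiplication function torch.matmul is used so often in deep learning that its broadcasting rules deserve a separate note:

1) If both tensors are 1-D, the result is their dot product.

2) If both tensors are 2-D, the result is the matrix-matrix product.

3) If x is 1-D and y is 2-D, the shape of x is converted to [1, D], x is multiplied with y as a matrix, and the prepended dimension is then removed.

4) If x is 2-D and y is 1-D, the result is the matrix-vector product.

5) If both tensors are N-D (N > 2), the non-matrix dimensions (all dimensions except the last two) are broadcast according to the broadcasting rules. For example, if x has shape [j, 1, n, m] and y has shape [k, m, p], the output has shape [j, k, n, p].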
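The five cases can be checked by looking at the output shapes of torch.matmul. The sketch below uses tensors of ones with arbitrarily chosen sizes (j=2, k=4, n=5, m=3, p=6 in the last case).

```python
import torch

v = torch.ones(3)      # 1-D
w = torch.ones(3)      # 1-D
A = torch.ones(2, 3)   # 2-D
B = torch.ones(3, 4)   # 2-D

shapes = {
    "1-D @ 1-D": tuple(torch.matmul(v, w).shape),  # dot product, a scalar
    "2-D @ 2-D": tuple(torch.matmul(A, B).shape),  # matrix-matrix product
    "1-D @ 2-D": tuple(torch.matmul(v, B).shape),  # prepended dimension removed
    "2-D @ 1-D": tuple(torch.matmul(A, v).shape),  # matrix-vector product
}

# Batched case: [j, 1, n, m] @ [k, m, p] -> [j, k, n, p]
x = torch.ones(2, 1, 5, 3)
y = torch.ones(4, 3, 6)
shapes["N-D @ N-D"] = tuple(torch.matmul(x, y).shape)

for case, shape in shapes.items():
    print(case, shape)
```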

III. Data preprocessing with PyTorch

1. Read the datasets house_tiny.csv, boston_house_prices.csv, and Iris.csv

import pandas as pd
import torch

# read the datasets
data_iris = pd.read_csv('Iris.csv')
data_house_tiny = pd.read_csv('house_tiny.csv')
data_boston_house_prices = pd.read_csv('boston_house_prices.csv')

# print the datasets
print(data_iris)
print(data_house_tiny)
print(data_boston_house_prices)

'''

Id SepalLengthCm ... PetalWidthCm Species

0 1 5.1 ... 0.2 Iris-setosa

1 2 4.9 ... 0.2 Iris-setosa

2 3 4.7 ... 0.2 Iris-setosa

3 4 4.6 ... 0.2 Iris-setosa

4 5 5.0 ... 0.2 Iris-setosa

.. ... ... ... ... ...

145 146 6.7 ... 2.3 Iris-virginica

146 147 6.3 ... 1.9 Iris-virginica

147 148 6.5 ... 2.0 Iris-virginica

148 149 6.2 ... 2.3 Iris-virginica

149 150 5.9 ... 1.8 Iris-virginica

[150 rows x 6 columns]

NumRooms Alley Price

0 NaN Pave 127500

1 2.0 NaN 106000

2 4.0 NaN 178100

3 NaN NaN 140000

CRIM ZN INDUS CHAS NOX ... RAD TAX PTRATIO LSTAT MEDV

0 0.00632 18.0 2.31 0 0.538 ... 1 296 15.3 4.98 24.0

1 0.02731 0.0 7.07 0 0.469 ... 2 242 17.8 9.14 21.6

2 0.02729 0.0 7.07 0 0.469 ... 2 242 17.8 4.03 34.7

3 0.03237 0.0 2.18 0 0.458 ... 3 222 18.7 2.94 33.4

4 0.06905 0.0 2.18 0 0.458 ... 3 222 18.7 5.33 36.2

.. ... ... ... ... ... ... ... ... ... ... ...

501 0.06263 0.0 11.93 0 0.573 ... 1 273 21.0 9.67 22.4

502 0.04527 0.0 11.93 0 0.573 ... 1 273 21.0 9.08 20.6

503 0.06076 0.0 11.93 0 0.573 ... 1 273 21.0 5.64 23.9

504 0.10959 0.0 11.93 0 0.573 ... 1 273 21.0 6.48 22.0

505 0.04741 0.0 11.93 0 0.573 ... 1 273 21.0 7.88 11.9

[506 rows x 13 columns]


'''

- Handle missing values

import numpy as np
import pandas as pd
import torch

# read the datasets
data_iris = pd.read_csv('Iris.csv')
data_house_tiny = pd.read_csv('house_tiny.csv')
data_boston_house_prices = pd.read_csv('boston_house_prices.csv')

# print the datasets
print(data_iris)
print(data_house_tiny)
print(data_boston_house_prices)

# Iris: the first 5 columns (including Id) are the inputs, Species is the output
iris_inputs, iris_outputs = data_iris.iloc[:, 0:5], data_iris.iloc[:, 5]
# fill missing values with the column mean
iris_inputs = iris_inputs.fillna(iris_inputs.mean())
print(iris_inputs)
iris_inputs = np.array(iris_inputs)
# one-hot encode the discrete labels; dummy_na=True adds a column for missing labels
iris_outputs = pd.get_dummies(iris_outputs, dummy_na=True, dtype=int)
iris_outputs = np.array(iris_outputs)
print(iris_inputs, iris_outputs)

# house_tiny: split into inputs and outputs
input_house_tiny, output_house_tiny = data_house_tiny.iloc[:, 0:2], data_house_tiny.iloc[:, 2]
# fill missing numeric values with the column mean (Alley is text, so it is skipped)
input_house_tiny = input_house_tiny.fillna(input_house_tiny.mean(numeric_only=True))
# one-hot encode the discrete Alley column
input_house_tiny = pd.get_dummies(input_house_tiny, dummy_na=True, dtype=int)
input_house_tiny, output_house_tiny = np.array(input_house_tiny), np.array(output_house_tiny)
print(input_house_tiny, output_house_tiny)

# Boston house prices: split into inputs and outputs
input_boston_house_prices = data_boston_house_prices.iloc[:, 0:12]
output_boston_house_prices = data_boston_house_prices.iloc[:, 12]
# fill missing values with the column mean
input_boston_house_prices = input_boston_house_prices.fillna(input_boston_house_prices.mean())
output_boston_house_prices = output_boston_house_prices.fillna(output_boston_house_prices.mean())
print(input_boston_house_prices, output_boston_house_prices)

'''

[[1.00e+00 5.10e+00 3.50e+00 1.40e+00 2.00e-01]

[2.00e+00 4.90e+00 3.00e+00 1.40e+00 2.00e-01]

[3.00e+00 4.70e+00 3.20e+00 1.30e+00 2.00e-01]

[4.00e+00 4.60e+00 3.10e+00 1.50e+00 2.00e-01]

[5.00e+00 5.00e+00 3.60e+00 1.40e+00 2.00e-01]

[6.00e+00 5.40e+00 3.90e+00 1.70e+00 4.00e-01]

[7.00e+00 4.60e+00 3.40e+00 1.40e+00 3.00e-01]

[8.00e+00 5.00e+00 3.40e+00 1.50e+00 2.00e-01]

[9.00e+00 4.40e+00 2.90e+00 1.40e+00 2.00e-01]

[1.00e+01 4.90e+00 3.10e+00 1.50e+00 1.00e-01]

[1.10e+01 5.40e+00 3.70e+00 1.50e+00 2.00e-01]

[1.20e+01 4.80e+00 3.40e+00 1.60e+00 2.00e-01]

[1.30e+01 4.80e+00 3.00e+00 1.40e+00 1.00e-01]

[1.40e+01 4.30e+00 3.00e+00 1.10e+00 1.00e-01]

[1.50e+01 5.80e+00 4.00e+00 1.20e+00 2.00e-01]

[1.60e+01 5.70e+00 4.40e+00 1.50e+00 4.00e-01]

[1.70e+01 5.40e+00 3.90e+00 1.30e+00 4.00e-01]

[1.80e+01 5.10e+00 3.50e+00 1.40e+00 3.00e-01]

[1.90e+01 5.70e+00 3.80e+00 1.70e+00 3.00e-01]

[2.00e+01 5.10e+00 3.80e+00 1.50e+00 3.00e-01]

[2.10e+01 5.40e+00 3.40e+00 1.70e+00 2.00e-01]

[2.20e+01 5.10e+00 3.70e+00 1.50e+00 4.00e-01]

[2.30e+01 4.60e+00 3.60e+00 1.00e+00 2.00e-01]

[2.40e+01 5.10e+00 3.30e+00 1.70e+00 5.00e-01]

[2.50e+01 4.80e+00 3.40e+00 1.90e+00 2.00e-01]

[2.60e+01 5.00e+00 3.00e+00 1.60e+00 2.00e-01]

[2.70e+01 5.00e+00 3.40e+00 1.60e+00 4.00e-01]

[2.80e+01 5.20e+00 3.50e+00 1.50e+00 2.00e-01]

[2.90e+01 5.20e+00 3.40e+00 1.40e+00 2.00e-01]

[3.00e+01 4.70e+00 3.20e+00 1.60e+00 2.00e-01]

[3.10e+01 4.80e+00 3.10e+00 1.60e+00 2.00e-01]

[3.20e+01 5.40e+00 3.40e+00 1.50e+00 4.00e-01]

[3.30e+01 5.20e+00 4.10e+00 1.50e+00 1.00e-01]

[3.40e+01 5.50e+00 4.20e+00 1.40e+00 2.00e-01]

[3.50e+01 4.90e+00 3.10e+00 1.50e+00 1.00e-01]

[3.60e+01 5.00e+00 3.20e+00 1.20e+00 2.00e-01]

[3.70e+01 5.50e+00 3.50e+00 1.30e+00 2.00e-01]

[3.80e+01 4.90e+00 3.10e+00 1.50e+00 1.00e-01]

[3.90e+01 4.40e+00 3.00e+00 1.30e+00 2.00e-01]

[4.00e+01 5.10e+00 3.40e+00 1.50e+00 2.00e-01]

[4.10e+01 5.00e+00 3.50e+00 1.30e+00 3.00e-01]

[4.20e+01 4.50e+00 2.30e+00 1.30e+00 3.00e-01]

[4.30e+01 4.40e+00 3.20e+00 1.30e+00 2.00e-01]

[4.40e+01 5.00e+00 3.50e+00 1.60e+00 6.00e-01]

[4.50e+01 5.10e+00 3.80e+00 1.90e+00 4.00e-01]

[4.60e+01 4.80e+00 3.00e+00 1.40e+00 3.00e-01]

[4.70e+01 5.10e+00 3.80e+00 1.60e+00 2.00e-01]

[4.80e+01 4.60e+00 3.20e+00 1.40e+00 2.00e-01]

[4.90e+01 5.30e+00 3.70e+00 1.50e+00 2.00e-01]

[5.00e+01 5.00e+00 3.30e+00 1.40e+00 2.00e-01]

[5.10e+01 7.00e+00 3.20e+00 4.70e+00 1.40e+00]

[5.20e+01 6.40e+00 3.20e+00 4.50e+00 1.50e+00]

[5.30e+01 6.90e+00 3.10e+00 4.90e+00 1.50e+00]

[5.40e+01 5.50e+00 2.30e+00 4.00e+00 1.30e+00]

[5.50e+01 6.50e+00 2.80e+00 4.60e+00 1.50e+00]

[5.60e+01 5.70e+00 2.80e+00 4.50e+00 1.30e+00]

[5.70e+01 6.30e+00 3.30e+00 4.70e+00 1.60e+00]

[5.80e+01 4.90e+00 2.40e+00 3.30e+00 1.00e+00]

[5.90e+01 6.60e+00 2.90e+00 4.60e+00 1.30e+00]

[6.00e+01 5.20e+00 2.70e+00 3.90e+00 1.40e+00]

[6.10e+01 5.00e+00 2.00e+00 3.50e+00 1.00e+00]

[6.20e+01 5.90e+00 3.00e+00 4.20e+00 1.50e+00]

[6.30e+01 6.00e+00 2.20e+00 4.00e+00 1.00e+00]

[6.40e+01 6.10e+00 2.90e+00 4.70e+00 1.40e+00]

[6.50e+01 5.60e+00 2.90e+00 3.60e+00 1.30e+00]

[6.60e+01 6.70e+00 3.10e+00 4.40e+00 1.40e+00]

[6.70e+01 5.60e+00 3.00e+00 4.50e+00 1.50e+00]

[6.80e+01 5.80e+00 2.70e+00 4.10e+00 1.00e+00]

[6.90e+01 6.20e+00 2.20e+00 4.50e+00 1.50e+00]

[7.00e+01 5.60e+00 2.50e+00 3.90e+00 1.10e+00]

[7.10e+01 5.90e+00 3.20e+00 4.80e+00 1.80e+00]

[7.20e+01 6.10e+00 2.80e+00 4.00e+00 1.30e+00]

[7.30e+01 6.30e+00 2.50e+00 4.90e+00 1.50e+00]

[7.40e+01 6.10e+00 2.80e+00 4.70e+00 1.20e+00]

[7.50e+01 6.40e+00 2.90e+00 4.30e+00 1.30e+00]

[7.60e+01 6.60e+00 3.00e+00 4.40e+00 1.40e+00]

[7.70e+01 6.80e+00 2.80e+00 4.80e+00 1.40e+00]

[7.80e+01 6.70e+00 3.00e+00 5.00e+00 1.70e+00]

[7.90e+01 6.00e+00 2.90e+00 4.50e+00 1.50e+00]

[8.00e+01 5.70e+00 2.60e+00 3.50e+00 1.00e+00]

[8.10e+01 5.50e+00 2.40e+00 3.80e+00 1.10e+00]

[8.20e+01 5.50e+00 2.40e+00 3.70e+00 1.00e+00]

[8.30e+01 5.80e+00 2.70e+00 3.90e+00 1.20e+00]

[8.40e+01 6.00e+00 2.70e+00 5.10e+00 1.60e+00]

[8.50e+01 5.40e+00 3.00e+00 4.50e+00 1.50e+00]

[8.60e+01 6.00e+00 3.40e+00 4.50e+00 1.60e+00]

[8.70e+01 6.70e+00 3.10e+00 4.70e+00 1.50e+00]

[8.80e+01 6.30e+00 2.30e+00 4.40e+00 1.30e+00]

[8.90e+01 5.60e+00 3.00e+00 4.10e+00 1.30e+00]

[9.00e+01 5.50e+00 2.50e+00 4.00e+00 1.30e+00]

[9.10e+01 5.50e+00 2.60e+00 4.40e+00 1.20e+00]

[9.20e+01 6.10e+00 3.00e+00 4.60e+00 1.40e+00]

[9.30e+01 5.80e+00 2.60e+00 4.00e+00 1.20e+00]

[9.40e+01 5.00e+00 2.30e+00 3.30e+00 1.00e+00]

[9.50e+01 5.60e+00 2.70e+00 4.20e+00 1.30e+00]

[9.60e+01 5.70e+00 3.00e+00 4.20e+00 1.20e+00]

[9.70e+01 5.70e+00 2.90e+00 4.20e+00 1.30e+00]

[9.80e+01 6.20e+00 2.90e+00 4.30e+00 1.30e+00]

[9.90e+01 5.10e+00 2.50e+00 3.00e+00 1.10e+00]

[1.00e+02 5.70e+00 2.80e+00 4.10e+00 1.30e+00]

[1.01e+02 6.30e+00 3.30e+00 6.00e+00 2.50e+00]

[1.02e+02 5.80e+00 2.70e+00 5.10e+00 1.90e+00]

[1.03e+02 7.10e+00 3.00e+00 5.90e+00 2.10e+00]

[1.04e+02 6.30e+00 2.90e+00 5.60e+00 1.80e+00]

[1.05e+02 6.50e+00 3.00e+00 5.80e+00 2.20e+00]

[1.06e+02 7.60e+00 3.00e+00 6.60e+00 2.10e+00]

[1.07e+02 4.90e+00 2.50e+00 4.50e+00 1.70e+00]

[1.08e+02 7.30e+00 2.90e+00 6.30e+00 1.80e+00]

[1.09e+02 6.70e+00 2.50e+00 5.80e+00 1.80e+00]

[1.10e+02 7.20e+00 3.60e+00 6.10e+00 2.50e+00]

[1.11e+02 6.50e+00 3.20e+00 5.10e+00 2.00e+00]

[1.12e+02 6.40e+00 2.70e+00 5.30e+00 1.90e+00]

[1.13e+02 6.80e+00 3.00e+00 5.50e+00 2.10e+00]

[1.14e+02 5.70e+00 2.50e+00 5.00e+00 2.00e+00]

[1.15e+02 5.80e+00 2.80e+00 5.10e+00 2.40e+00]

[1.16e+02 6.40e+00 3.20e+00 5.30e+00 2.30e+00]

[1.17e+02 6.50e+00 3.00e+00 5.50e+00 1.80e+00]

[1.18e+02 7.70e+00 3.80e+00 6.70e+00 2.20e+00]

[1.19e+02 7.70e+00 2.60e+00 6.90e+00 2.30e+00]

[1.20e+02 6.00e+00 2.20e+00 5.00e+00 1.50e+00]

[1.21e+02 6.90e+00 3.20e+00 5.70e+00 2.30e+00]

[1.22e+02 5.60e+00 2.80e+00 4.90e+00 2.00e+00]

[1.23e+02 7.70e+00 2.80e+00 6.70e+00 2.00e+00]

[1.24e+02 6.30e+00 2.70e+00 4.90e+00 1.80e+00]

[1.25e+02 6.70e+00 3.30e+00 5.70e+00 2.10e+00]

[1.26e+02 7.20e+00 3.20e+00 6.00e+00 1.80e+00]

[1.27e+02 6.20e+00 2.80e+00 4.80e+00 1.80e+00]

[1.28e+02 6.10e+00 3.00e+00 4.90e+00 1.80e+00]

[1.29e+02 6.40e+00 2.80e+00 5.60e+00 2.10e+00]

[1.30e+02 7.20e+00 3.00e+00 5.80e+00 1.60e+00]

[1.31e+02 7.40e+00 2.80e+00 6.10e+00 1.90e+00]

[1.32e+02 7.90e+00 3.80e+00 6.40e+00 2.00e+00]

[1.33e+02 6.40e+00 2.80e+00 5.60e+00 2.20e+00]

[1.34e+02 6.30e+00 2.80e+00 5.10e+00 1.50e+00]

[1.35e+02 6.10e+00 2.60e+00 5.60e+00 1.40e+00]

[1.36e+02 7.70e+00 3.00e+00 6.10e+00 2.30e+00]

[1.37e+02 6.30e+00 3.40e+00 5.60e+00 2.40e+00]

[1.38e+02 6.40e+00 3.10e+00 5.50e+00 1.80e+00]

[1.39e+02 6.00e+00 3.00e+00 4.80e+00 1.80e+00]

[1.40e+02 6.90e+00 3.10e+00 5.40e+00 2.10e+00]

[1.41e+02 6.70e+00 3.10e+00 5.60e+00 2.40e+00]

[1.42e+02 6.90e+00 3.10e+00 5.10e+00 2.30e+00]

[1.43e+02 5.80e+00 2.70e+00 5.10e+00 1.90e+00]

[1.44e+02 6.80e+00 3.20e+00 5.90e+00 2.30e+00]

[1.45e+02 6.70e+00 3.30e+00 5.70e+00 2.50e+00]

[1.46e+02 6.70e+00 3.00e+00 5.20e+00 2.30e+00]

[1.47e+02 6.30e+00 2.50e+00 5.00e+00 1.90e+00]

[1.48e+02 6.50e+00 3.00e+00 5.20e+00 2.00e+00]

[1.49e+02 6.20e+00 3.40e+00 5.40e+00 2.30e+00]

[1.50e+02 5.90e+00 3.00e+00 5.10e+00 1.80e+00]] [[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[1 0 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 1 0 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]

[0 0 1 0]]

[[3. 1. 0.]

[2. 0. 1.]

[4. 0. 1.]

[3. 0. 1.]] [127500 106000 178100 140000]

CRIM ZN INDUS CHAS NOX ... DIS RAD TAX PTRATIO LSTAT

0 0.00632 18.0 2.31 0 0.538 ... 4.0900 1 296 15.3 4.98

1 0.02731 0.0 7.07 0 0.469 ... 4.9671 2 242 17.8 9.14

2 0.02729 0.0 7.07 0 0.469 ... 4.9671 2 242 17.8 4.03

3 0.03237 0.0 2.18 0 0.458 ... 6.0622 3 222 18.7 2.94

4 0.06905 0.0 2.18 0 0.458 ... 6.0622 3 222 18.7 5.33

.. ... ... ... ... ... ... ... ... ... ... ...

501 0.06263 0.0 11.93 0 0.573 ... 2.4786 1 273 21.0 9.67

502 0.04527 0.0 11.93 0 0.573 ... 2.2875 1 273 21.0 9.08

503 0.06076 0.0 11.93 0 0.573 ... 2.1675 1 273 21.0 5.64

504 0.10959 0.0 11.93 0 0.573 ... 2.3889 1 273 21.0 6.48

505 0.04741 0.0 11.93 0 0.573 ... 2.5050 1 273 21.0 7.88

[506 rows x 12 columns] 0 24.0

1 21.6

2 34.7

3 33.4

4 36.2

...

501 22.4

502 20.6

503 23.9

504 22.0

505 11.9

Name: MEDV, Length: 506, dtype: float64


'''
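The mean-fill and one-hot steps can be tried without the CSV files. The sketch below rebuilds the four rows of house_tiny from the printed table earlier in this section as an in-memory DataFrame (an assumption made for illustration; the real script reads house_tiny.csv) and applies the same two steps.

```python
import numpy as np
import pandas as pd

# In-memory stand-in for house_tiny.csv, copied from the printed table.
data = pd.DataFrame({
    "NumRooms": [np.nan, 2.0, 4.0, np.nan],
    "Alley": ["Pave", np.nan, np.nan, np.nan],
    "Price": [127500, 106000, 178100, 140000],
})

inputs, outputs = data.iloc[:, 0:2], data.iloc[:, 2]

# Step 1: fill missing numeric values with the column mean (NumRooms mean is 3.0).
inputs = inputs.fillna(inputs.mean(numeric_only=True))

# Step 2: one-hot encode the text column; dummy_na=True keeps a column for NaN.
inputs = pd.get_dummies(inputs, dummy_na=True, dtype=int)
print(inputs)

# A plain float array is what torch.tensor would receive in the next step.
features = inputs.to_numpy(dtype=float)
print(features)
```

The resulting array has three columns: NumRooms, an indicator for Alley being "Pave", and an indicator for Alley being missing.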

- Convert to tensors

import numpy as np
import pandas as pd
import torch

# read the datasets
data_iris = pd.read_csv('Iris.csv')
data_house_tiny = pd.read_csv('house_tiny.csv')
data_boston_house_prices = pd.read_csv('boston_house_prices.csv')

# Iris: the first 5 columns (including Id) are the inputs, Species is the output
iris_inputs, iris_outputs = data_iris.iloc[:, 0:5], data_iris.iloc[:, 5]
# fill missing values with the column mean
iris_inputs = iris_inputs.fillna(iris_inputs.mean())
iris_inputs = np.array(iris_inputs)
# one-hot encode the discrete labels
iris_outputs = pd.get_dummies(iris_outputs, dummy_na=True, dtype=int)
iris_outputs = np.array(iris_outputs)
# convert to tensors
input_tensor, output_tensor = torch.tensor(iris_inputs), torch.tensor(iris_outputs)
print(input_tensor, output_tensor)

# house_tiny: split into inputs and outputs
input_house_tiny, output_house_tiny = data_house_tiny.iloc[:, 0:2], data_house_tiny.iloc[:, 2]
# fill missing numeric values with the column mean (Alley is text, so it is skipped)
input_house_tiny = input_house_tiny.fillna(input_house_tiny.mean(numeric_only=True))
# one-hot encode the discrete Alley column
input_house_tiny = pd.get_dummies(input_house_tiny, dummy_na=True, dtype=int)
input_house_tiny, output_house_tiny = np.array(input_house_tiny), np.array(output_house_tiny)
# convert to tensors
input_house_tiny_tensor = torch.tensor(input_house_tiny)
output_house_tiny_tensor = torch.tensor(output_house_tiny)
print(input_house_tiny_tensor, output_house_tiny_tensor)

# Boston house prices: split into inputs and outputs
input_boston_house_prices = data_boston_house_prices.iloc[:, 0:12]
output_boston_house_prices = data_boston_house_prices.iloc[:, 12]
# fill missing values with the column mean
input_boston_house_prices = input_boston_house_prices.fillna(input_boston_house_prices.mean())
output_boston_house_prices = output_boston_house_prices.fillna(output_boston_house_prices.mean())
# convert to NumPy arrays, then to tensors
input_boston_house_prices_numpy = np.array(input_boston_house_prices)
output_boston_house_prices_numpy = np.array(output_boston_house_prices)
input_boston_house_prices_tensor = torch.tensor(input_boston_house_prices_numpy)
output_boston_house_prices_tensor = torch.tensor(output_boston_house_prices_numpy)
print(input_boston_house_prices_tensor, output_boston_house_prices_tensor)

'''

tensor([[1.0000e+00, 5.1000e+00, 3.5000e+00, 1.4000e+00, 2.0000e-01],

[2.0000e+00, 4.9000e+00, 3.0000e+00, 1.4000e+00, 2.0000e-01],

[3.0000e+00, 4.7000e+00, 3.2000e+00, 1.3000e+00, 2.0000e-01],

[4.0000e+00, 4.6000e+00, 3.1000e+00, 1.5000e+00, 2.0000e-01],

[5.0000e+00, 5.0000e+00, 3.6000e+00, 1.4000e+00, 2.0000e-01],

[6.0000e+00, 5.4000e+00, 3.9000e+00, 1.7000e+00, 4.0000e-01],

[7.0000e+00, 4.6000e+00, 3.4000e+00, 1.4000e+00, 3.0000e-01],

[8.0000e+00, 5.0000e+00, 3.4000e+00, 1.5000e+00, 2.0000e-01],

[9.0000e+00, 4.4000e+00, 2.9000e+00, 1.4000e+00, 2.0000e-01],

[1.0000e+01, 4.9000e+00, 3.1000e+00, 1.5000e+00, 1.0000e-01],

[1.1000e+01, 5.4000e+00, 3.7000e+00, 1.5000e+00, 2.0000e-01],

[1.2000e+01, 4.8000e+00, 3.4000e+00, 1.6000e+00, 2.0000e-01],

[1.3000e+01, 4.8000e+00, 3.0000e+00, 1.4000e+00, 1.0000e-01],

[1.4000e+01, 4.3000e+00, 3.0000e+00, 1.1000e+00, 1.0000e-01],

[1.5000e+01, 5.8000e+00, 4.0000e+00, 1.2000e+00, 2.0000e-01],

[1.6000e+01, 5.7000e+00, 4.4000e+00, 1.5000e+00, 4.0000e-01],

[1.7000e+01, 5.4000e+00, 3.9000e+00, 1.3000e+00, 4.0000e-01],

[1.8000e+01, 5.1000e+00, 3.5000e+00, 1.4000e+00, 3.0000e-01],

[1.9000e+01, 5.7000e+00, 3.8000e+00, 1.7000e+00, 3.0000e-01],

[2.0000e+01, 5.1000e+00, 3.8000e+00, 1.5000e+00, 3.0000e-01],

[2.1000e+01, 5.4000e+00, 3.4000e+00, 1.7000e+00, 2.0000e-01],

[2.2000e+01, 5.1000e+00, 3.7000e+00, 1.5000e+00, 4.0000e-01],

[2.3000e+01, 4.6000e+00, 3.6000e+00, 1.0000e+00, 2.0000e-01],

[2.4000e+01, 5.1000e+00, 3.3000e+00, 1.7000e+00, 5.0000e-01],

[2.5000e+01, 4.8000e+00, 3.4000e+00, 1.9000e+00, 2.0000e-01],

[2.6000e+01, 5.0000e+00, 3.0000e+00, 1.6000e+00, 2.0000e-01],

[2.7000e+01, 5.0000e+00, 3.4000e+00, 1.6000e+00, 4.0000e-01],

[2.8000e+01, 5.2000e+00, 3.5000e+00, 1.5000e+00, 2.0000e-01],

[2.9000e+01, 5.2000e+00, 3.4000e+00, 1.4000e+00, 2.0000e-01],

[3.0000e+01, 4.7000e+00, 3.2000e+00, 1.6000e+00, 2.0000e-01],

[3.1000e+01, 4.8000e+00, 3.1000e+00, 1.6000e+00, 2.0000e-01],

[3.2000e+01, 5.4000e+00, 3.4000e+00, 1.5000e+00, 4.0000e-01],

[3.3000e+01, 5.2000e+00, 4.1000e+00, 1.5000e+00, 1.0000e-01],

[3.4000e+01, 5.5000e+00, 4.2000e+00, 1.4000e+00, 2.0000e-01],

[3.5000e+01, 4.9000e+00, 3.1000e+00, 1.5000e+00, 1.0000e-01],

[3.6000e+01, 5.0000e+00, 3.2000e+00, 1.2000e+00, 2.0000e-01],

[3.7000e+01, 5.5000e+00, 3.5000e+00, 1.3000e+00, 2.0000e-01],

[3.8000e+01, 4.9000e+00, 3.1000e+00, 1.5000e+00, 1.0000e-01],

[3.9000e+01, 4.4000e+00, 3.0000e+00, 1.3000e+00, 2.0000e-01],

[4.0000e+01, 5.1000e+00, 3.4000e+00, 1.5000e+00, 2.0000e-01],

[4.1000e+01, 5.0000e+00, 3.5000e+00, 1.3000e+00, 3.0000e-01],

[4.2000e+01, 4.5000e+00, 2.3000e+00, 1.3000e+00, 3.0000e-01],

[4.3000e+01, 4.4000e+00, 3.2000e+00, 1.3000e+00, 2.0000e-01],

[4.4000e+01, 5.0000e+00, 3.5000e+00, 1.6000e+00, 6.0000e-01],

[4.5000e+01, 5.1000e+00, 3.8000e+00, 1.9000e+00, 4.0000e-01],

[4.6000e+01, 4.8000e+00, 3.0000e+00, 1.4000e+00, 3.0000e-01],

[4.7000e+01, 5.1000e+00, 3.8000e+00, 1.6000e+00, 2.0000e-01],

[4.8000e+01, 4.6000e+00, 3.2000e+00, 1.4000e+00, 2.0000e-01],

[4.9000e+01, 5.3000e+00, 3.7000e+00, 1.5000e+00, 2.0000e-01],

[5.0000e+01, 5.0000e+00, 3.3000e+00, 1.4000e+00, 2.0000e-01],

[5.1000e+01, 7.0000e+00, 3.2000e+00, 4.7000e+00, 1.4000e+00],

[5.2000e+01, 6.4000e+00, 3.2000e+00, 4.5000e+00, 1.5000e+00],

[5.3000e+01, 6.9000e+00, 3.1000e+00, 4.9000e+00, 1.5000e+00],

[5.4000e+01, 5.5000e+00, 2.3000e+00, 4.0000e+00, 1.3000e+00],

[5.5000e+01, 6.5000e+00, 2.8000e+00, 4.6000e+00, 1.5000e+00],

[5.6000e+01, 5.7000e+00, 2.8000e+00, 4.5000e+00, 1.3000e+00],

[5.7000e+01, 6.3000e+00, 3.3000e+00, 4.7000e+00, 1.6000e+00],

[5.8000e+01, 4.9000e+00, 2.4000e+00, 3.3000e+00, 1.0000e+00],

[5.9000e+01, 6.6000e+00, 2.9000e+00, 4.6000e+00, 1.3000e+00],

[6.0000e+01, 5.2000e+00, 2.7000e+00, 3.9000e+00, 1.4000e+00],

[6.1000e+01, 5.0000e+00, 2.0000e+00, 3.5000e+00, 1.0000e+00],

[6.2000e+01, 5.9000e+00, 3.0000e+00, 4.2000e+00, 1.5000e+00],

[6.3000e+01, 6.0000e+00, 2.2000e+00, 4.0000e+00, 1.0000e+00],

[6.4000e+01, 6.1000e+00, 2.9000e+00, 4.7000e+00, 1.4000e+00],

[6.5000e+01, 5.6000e+00, 2.9000e+00, 3.6000e+00, 1.3000e+00],

[6.6000e+01, 6.7000e+00, 3.1000e+00, 4.4000e+00, 1.4000e+00],

[6.7000e+01, 5.6000e+00, 3.0000e+00, 4.5000e+00, 1.5000e+00],

[6.8000e+01, 5.8000e+00, 2.7000e+00, 4.1000e+00, 1.0000e+00],

[6.9000e+01, 6.2000e+00, 2.2000e+00, 4.5000e+00, 1.5000e+00],

[7.0000e+01, 5.6000e+00, 2.5000e+00, 3.9000e+00, 1.1000e+00],

[7.1000e+01, 5.9000e+00, 3.2000e+00, 4.8000e+00, 1.8000e+00],

[7.2000e+01, 6.1000e+00, 2.8000e+00, 4.0000e+00, 1.3000e+00],

[7.3000e+01, 6.3000e+00, 2.5000e+00, 4.9000e+00, 1.5000e+00],

[7.4000e+01, 6.1000e+00, 2.8000e+00, 4.7000e+00, 1.2000e+00],

[7.5000e+01, 6.4000e+00, 2.9000e+00, 4.3000e+00, 1.3000e+00],

[7.6000e+01, 6.6000e+00, 3.0000e+00, 4.4000e+00, 1.4000e+00],

[7.7000e+01, 6.8000e+00, 2.8000e+00, 4.8000e+00, 1.4000e+00],

[7.8000e+01, 6.7000e+00, 3.0000e+00, 5.0000e+00, 1.7000e+00],

[7.9000e+01, 6.0000e+00, 2.9000e+00, 4.5000e+00, 1.5000e+00],

[8.0000e+01, 5.7000e+00, 2.6000e+00, 3.5000e+00, 1.0000e+00],

[8.1000e+01, 5.5000e+00, 2.4000e+00, 3.8000e+00, 1.1000e+00],

[8.2000e+01, 5.5000e+00, 2.4000e+00, 3.7000e+00, 1.0000e+00],

[8.3000e+01, 5.8000e+00, 2.7000e+00, 3.9000e+00, 1.2000e+00],

[8.4000e+01, 6.0000e+00, 2.7000e+00, 5.1000e+00, 1.6000e+00],

[8.5000e+01, 5.4000e+00, 3.0000e+00, 4.5000e+00, 1.5000e+00],

[8.6000e+01, 6.0000e+00, 3.4000e+00, 4.5000e+00, 1.6000e+00],

[8.7000e+01, 6.7000e+00, 3.1000e+00, 4.7000e+00, 1.5000e+00],

[8.8000e+01, 6.3000e+00, 2.3000e+00, 4.4000e+00, 1.3000e+00],

[8.9000e+01, 5.6000e+00, 3.0000e+00, 4.1000e+00, 1.3000e+00],

[9.0000e+01, 5.5000e+00, 2.5000e+00, 4.0000e+00, 1.3000e+00],

[9.1000e+01, 5.5000e+00, 2.6000e+00, 4.4000e+00, 1.2000e+00],

[9.2000e+01, 6.1000e+00, 3.0000e+00, 4.6000e+00, 1.4000e+00],

[9.3000e+01, 5.8000e+00, 2.6000e+00, 4.0000e+00, 1.2000e+00],

[9.4000e+01, 5.0000e+00, 2.3000e+00, 3.3000e+00, 1.0000e+00],

[9.5000e+01, 5.6000e+00, 2.7000e+00, 4.2000e+00, 1.3000e+00],

[9.6000e+01, 5.7000e+00, 3.0000e+00, 4.2000e+00, 1.2000e+00],

[9.7000e+01, 5.7000e+00, 2.9000e+00, 4.2000e+00, 1.3000e+00],

[9.8000e+01, 6.2000e+00, 2.9000e+00, 4.3000e+00, 1.3000e+00],

[9.9000e+01, 5.1000e+00, 2.5000e+00, 3.0000e+00, 1.1000e+00],

[1.0000e+02, 5.7000e+00, 2.8000e+00, 4.1000e+00, 1.3000e+00],

[1.0100e+02, 6.3000e+00, 3.3000e+00, 6.0000e+00, 2.5000e+00],

[1.0200e+02, 5.8000e+00, 2.7000e+00, 5.1000e+00, 1.9000e+00],

[1.0300e+02, 7.1000e+00, 3.0000e+00, 5.9000e+00, 2.1000e+00],

[1.0400e+02, 6.3000e+00, 2.9000e+00, 5.6000e+00, 1.8000e+00],

[1.0500e+02, 6.5000e+00, 3.0000e+00, 5.8000e+00, 2.2000e+00],

[1.0600e+02, 7.6000e+00, 3.0000e+00, 6.6000e+00, 2.1000e+00],

[1.0700e+02, 4.9000e+00, 2.5000e+00, 4.5000e+00, 1.7000e+00],

[1.0800e+02, 7.3000e+00, 2.9000e+00, 6.3000e+00, 1.8000e+00],

[1.0900e+02, 6.7000e+00, 2.5000e+00, 5.8000e+00, 1.8000e+00],

[1.1000e+02, 7.2000e+00, 3.6000e+00, 6.1000e+00, 2.5000e+00],

[1.1100e+02, 6.5000e+00, 3.2000e+00, 5.1000e+00, 2.0000e+00],

[1.1200e+02, 6.4000e+00, 2.7000e+00, 5.3000e+00, 1.9000e+00],

[1.1300e+02, 6.8000e+00, 3.0000e+00, 5.5000e+00, 2.1000e+00],

[1.1400e+02, 5.7000e+00, 2.5000e+00, 5.0000e+00, 2.0000e+00],

[1.1500e+02, 5.8000e+00, 2.8000e+00, 5.1000e+00, 2.4000e+00],

[1.1600e+02, 6.4000e+00, 3.2000e+00, 5.3000e+00, 2.3000e+00],

[1.1700e+02, 6.5000e+00, 3.0000e+00, 5.5000e+00, 1.8000e+00],

[1.1800e+02, 7.7000e+00, 3.8000e+00, 6.7000e+00, 2.2000e+00],

[1.1900e+02, 7.7000e+00, 2.6000e+00, 6.9000e+00, 2.3000e+00],

[1.2000e+02, 6.0000e+00, 2.2000e+00, 5.0000e+00, 1.5000e+00],

[1.2100e+02, 6.9000e+00, 3.2000e+00, 5.7000e+00, 2.3000e+00],

[1.2200e+02, 5.6000e+00, 2.8000e+00, 4.9000e+00, 2.0000e+00],

[1.2300e+02, 7.7000e+00, 2.8000e+00, 6.7000e+00, 2.0000e+00],

[1.2400e+02, 6.3000e+00, 2.7000e+00, 4.9000e+00, 1.8000e+00],

[1.2500e+02, 6.7000e+00, 3.3000e+00, 5.7000e+00, 2.1000e+00],

[1.2600e+02, 7.2000e+00, 3.2000e+00, 6.0000e+00, 1.8000e+00],

[1.2700e+02, 6.2000e+00, 2.8000e+00, 4.8000e+00, 1.8000e+00],

[1.2800e+02, 6.1000e+00, 3.0000e+00, 4.9000e+00, 1.8000e+00],

[1.2900e+02, 6.4000e+00, 2.8000e+00, 5.6000e+00, 2.1000e+00],

[1.3000e+02, 7.2000e+00, 3.0000e+00, 5.8000e+00, 1.6000e+00],

[1.3100e+02, 7.4000e+00, 2.8000e+00, 6.1000e+00, 1.9000e+00],

[1.3200e+02, 7.9000e+00, 3.8000e+00, 6.4000e+00, 2.0000e+00],

[1.3300e+02, 6.4000e+00, 2.8000e+00, 5.6000e+00, 2.2000e+00],

[1.3400e+02, 6.3000e+00, 2.8000e+00, 5.1000e+00, 1.5000e+00],

[1.3500e+02, 6.1000e+00, 2.6000e+00, 5.6000e+00, 1.4000e+00],

[1.3600e+02, 7.7000e+00, 3.0000e+00, 6.1000e+00, 2.3000e+00],

[1.3700e+02, 6.3000e+00, 3.4000e+00, 5.6000e+00, 2.4000e+00],

[1.3800e+02, 6.4000e+00, 3.1000e+00, 5.5000e+00, 1.8000e+00],

[1.3900e+02, 6.0000e+00, 3.0000e+00, 4.8000e+00, 1.8000e+00],

[1.4000e+02, 6.9000e+00, 3.1000e+00, 5.4000e+00, 2.1000e+00],

[1.4100e+02, 6.7000e+00, 3.1000e+00, 5.6000e+00, 2.4000e+00],

[1.4200e+02, 6.9000e+00, 3.1000e+00, 5.1000e+00, 2.3000e+00],

[1.4300e+02, 5.8000e+00, 2.7000e+00, 5.1000e+00, 1.9000e+00],

[1.4400e+02, 6.8000e+00, 3.2000e+00, 5.9000e+00, 2.3000e+00],

[1.4500e+02, 6.7000e+00, 3.3000e+00, 5.7000e+00, 2.5000e+00],

[1.4600e+02, 6.7000e+00, 3.0000e+00, 5.2000e+00, 2.3000e+00],

[1.4700e+02, 6.3000e+00, 2.5000e+00, 5.0000e+00, 1.9000e+00],

[1.4800e+02, 6.5000e+00, 3.0000e+00, 5.2000e+00, 2.0000e+00],

[1.4900e+02, 6.2000e+00, 3.4000e+00, 5.4000e+00, 2.3000e+00],

[1.5000e+02, 5.9000e+00, 3.0000e+00, 5.1000e+00, 1.8000e+00]],

dtype=torch.float64) tensor([[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[1, 0, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 1, 0, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0],

[0, 0, 1, 0]], dtype=torch.uint8)

tensor([[3., 1., 0.],

[2., 0., 1.],

[4., 0., 1.],

[3., 0., 1.]], dtype=torch.float64) tensor([127500, 106000, 178100, 140000])

tensor([[6.3200e-03, 1.8000e+01, 2.3100e+00, ..., 2.9600e+02, 1.5300e+01,

4.9800e+00],

[2.7310e-02, 0.0000e+00, 7.0700e+00, ..., 2.4200e+02, 1.7800e+01,

9.1400e+00],

[2.7290e-02, 0.0000e+00, 7.0700e+00, ..., 2.4200e+02, 1.7800e+01,

4.0300e+00],

...,

[6.0760e-02, 0.0000e+00, 1.1930e+01, ..., 2.7300e+02, 2.1000e+01,

5.6400e+00],

[1.0959e-01, 0.0000e+00, 1.1930e+01, ..., 2.7300e+02, 2.1000e+01,

6.4800e+00],

[4.7410e-02, 0.0000e+00, 1.1930e+01, ..., 2.7300e+02, 2.1000e+01,

7.8800e+00]], dtype=torch.float64) tensor([24.0000, 21.6000, 34.7000, 33.4000, 36.2000, 28.7000, 22.9000, 27.1000,

16.5000, 18.9000, 15.0000, 18.9000, 21.7000, 20.4000, 18.2000, 19.9000,

23.1000, 17.5000, 20.2000, 18.2000, 13.6000, 19.6000, 15.2000, 14.5000,

15.6000, 13.9000, 16.6000, 14.8000, 18.4000, 21.0000, 12.7000, 14.5000,

13.2000, 13.1000, 13.5000, 18.9000, 20.0000, 21.0000, 24.7000, 30.8000,

34.9000, 26.6000, 25.3000, 24.7000, 21.2000, 19.3000, 20.0000, 16.6000,

14.4000, 19.4000, 19.7000, 20.5000, 25.0000, 23.4000, 18.9000, 35.4000,

24.7000, 31.6000, 23.3000, 19.6000, 18.7000, 16.0000, 22.2000, 25.0000,

33.0000, 23.5000, 19.4000, 22.0000, 17.4000, 20.9000, 24.2000, 21.7000,

22.8000, 23.4000, 24.1000, 21.4000, 20.0000, 20.8000, 21.2000, 20.3000,

28.0000, 23.9000, 24.8000, 22.9000, 23.9000, 26.6000, 22.5000, 22.2000,

23.6000, 28.7000, 22.6000, 22.0000, 22.9000, 25.0000, 20.6000, 28.4000,

21.4000, 38.7000, 43.8000, 33.2000, 27.5000, 26.5000, 18.6000, 19.3000,

20.1000, 19.5000, 19.5000, 20.4000, 19.8000, 19.4000, 21.7000, 22.8000,

18.8000, 18.7000, 18.5000, 18.3000, 21.2000, 19.2000, 20.4000, 19.3000,

22.0000, 20.3000, 20.5000, 17.3000, 18.8000, 21.4000, 15.7000, 16.2000,

18.0000, 14.3000, 19.2000, 19.6000, 23.0000, 18.4000, 15.6000, 18.1000,

17.4000, 17.1000, 13.3000, 17.8000, 14.0000, 14.4000, 13.4000, 15.6000,

11.8000, 13.8000, 15.6000, 14.6000, 17.8000, 15.4000, 21.5000, 19.6000,

15.3000, 19.4000, 17.0000, 15.6000, 13.1000, 41.3000, 24.3000, 23.3000,

27.0000, 50.0000, 50.0000, 50.0000, 22.7000, 25.0000, 50.0000, 23.8000,

23.8000, 22.3000, 17.4000, 19.1000, 23.1000, 23.6000, 22.6000, 29.4000,

23.2000, 24.6000, 29.9000, 37.2000, 39.8000, 36.2000, 37.9000, 32.5000,

26.4000, 29.6000, 50.0000, 32.0000, 29.8000, 34.9000, 37.0000, 30.5000,

36.4000, 31.1000, 29.1000, 50.0000, 33.3000, 30.3000, 34.6000, 34.9000,

32.9000, 24.1000, 42.3000, 48.5000, 50.0000, 22.6000, 24.4000, 22.5000,

24.4000, 20.0000, 21.7000, 19.3000, 22.4000, 28.1000, 23.7000, 25.0000,

23.3000, 28.7000, 21.5000, 23.0000, 26.7000, 21.7000, 27.5000, 30.1000,

44.8000, 50.0000, 37.6000, 31.6000, 46.7000, 31.5000, 24.3000, 31.7000,

41.7000, 48.3000, 29.0000, 24.0000, 25.1000, 31.5000, 23.7000, 23.3000,

22.0000, 20.1000, 22.2000, 23.7000, 17.6000, 18.5000, 24.3000, 20.5000,

24.5000, 26.2000, 24.4000, 24.8000, 29.6000, 42.8000, 21.9000, 20.9000,

44.0000, 50.0000, 36.0000, 30.1000, 33.8000, 43.1000, 48.8000, 31.0000,

36.5000, 22.8000, 30.7000, 50.0000, 43.5000, 20.7000, 21.1000, 25.2000,

24.4000, 35.2000, 32.4000, 32.0000, 33.2000, 33.1000, 29.1000, 35.1000,

45.4000, 35.4000, 46.0000, 50.0000, 32.2000, 22.0000, 20.1000, 23.2000,

22.3000, 24.8000, 28.5000, 37.3000, 27.9000, 23.9000, 21.7000, 28.6000,

27.1000, 20.3000, 22.5000, 29.0000, 24.8000, 22.0000, 26.4000, 33.1000,

36.1000, 28.4000, 33.4000, 28.2000, 22.8000, 20.3000, 16.1000, 22.1000,

19.4000, 21.6000, 23.8000, 16.2000, 17.8000, 19.8000, 23.1000, 21.0000,

23.8000, 23.1000, 20.4000, 18.5000, 25.0000, 24.6000, 23.0000, 22.2000,

19.3000, 22.6000, 19.8000, 17.1000, 19.4000, 22.2000, 20.7000, 21.1000,

19.5000, 18.5000, 20.6000, 19.0000, 18.7000, 32.7000, 16.5000, 23.9000,

31.2000, 17.5000, 17.2000, 23.1000, 24.5000, 26.6000, 22.9000, 24.1000,

18.6000, 30.1000, 18.2000, 20.6000, 17.8000, 21.7000, 22.7000, 22.6000,

25.0000, 19.9000, 20.8000, 16.8000, 21.9000, 27.5000, 21.9000, 23.1000,

50.0000, 50.0000, 50.0000, 50.0000, 50.0000, 13.8000, 13.8000, 15.0000,

13.9000, 13.3000, 13.1000, 10.2000, 10.4000, 10.9000, 11.3000, 12.3000,

8.8000, 7.2000, 10.5000, 7.4000, 10.2000, 11.5000, 15.1000, 23.2000,

9.7000, 13.8000, 12.7000, 13.1000, 12.5000, 8.5000, 5.0000, 6.3000,

5.6000, 7.2000, 12.1000, 8.3000, 8.5000, 5.0000, 11.9000, 27.9000,

17.2000, 27.5000, 15.0000, 17.2000, 17.9000, 16.3000, 7.0000, 7.2000,

7.5000, 10.4000, 8.8000, 8.4000, 16.7000, 14.2000, 20.8000, 13.4000,

11.7000, 8.3000, 10.2000, 10.9000, 11.0000, 9.5000, 14.5000, 14.1000,

16.1000, 14.3000, 11.7000, 13.4000, 9.6000, 8.7000, 8.4000, 12.8000,

10.5000, 17.1000, 18.4000, 15.4000, 10.8000, 11.8000, 14.9000, 12.6000,

14.1000, 13.0000, 13.4000, 15.2000, 16.1000, 17.8000, 14.9000, 14.1000,

12.7000, 13.5000, 14.9000, 20.0000, 16.4000, 17.7000, 19.5000, 20.2000,

21.4000, 19.9000, 19.0000, 19.1000, 19.1000, 20.1000, 19.9000, 19.6000,

23.2000, 29.8000, 13.8000, 13.3000, 16.7000, 12.0000, 14.6000, 21.4000,

23.0000, 23.7000, 25.0000, 21.8000, 20.6000, 21.2000, 19.1000, 20.6000,

15.2000, 7.0000, 8.1000, 13.6000, 20.1000, 21.8000, 24.5000, 23.1000,

19.7000, 18.3000, 21.2000, 17.5000, 16.8000, 22.4000, 20.6000, 23.9000,

22.0000, 11.9000], dtype=torch.float64)

Process finished with exit code 0

'''

Reflections: This assignment was slightly harder than the previous one.

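Part one: reviewed the concepts of tensors and operators, along with the differences between tensors and NumPy arrays and their respective strengths and weaknesses.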

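Part two: reviewed the basic tensor functions for processing data. There are so many of them that it gets tedious, but there is no free lunch, and working through them did reinforce my memory.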

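Part three: this part was entirely new. The datasets my machine learning teacher provided before were already cleaned, so I had never done any data preprocessing, and this part gave me a first look at it. There are two ways to handle missing values: imputation and deletion. Imputation can fill a gap with either the mean or the median; deletion simply drops the affected entries. Values that carry meaning but are not numbers, such as rose and broccoli, can be converted into a vector whose length equals the number of categories and written in a form like [1,0]. For example, [1,0] stands for rose and [0,1] stands for broccoli.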
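The preprocessing steps described above can be sketched with pandas as follows. This is only a minimal illustration on made-up data: the DataFrame, the column names `height` and `plant`, and the values are all assumptions for the example, not the dataset used in the exercise.

```python
import pandas as pd

# Hypothetical toy data; the column names and values are illustrative only.
df = pd.DataFrame({
    "height": [10.0, None, 30.0, 80.0],
    "plant": ["rose", "broccoli", "rose", "broccoli"],
})

# Option 1: imputation. Fill the gap with the column mean or the column median.
mean_filled = df["height"].fillna(df["height"].mean())
median_filled = df["height"].fillna(df["height"].median())

# Option 2: deletion. Drop every row that contains a missing value.
dropped = df.dropna()

# One-hot encoding: one 0/1 column per category.
# Columns are reordered so that rose maps to [1, 0] and broccoli to [0, 1].
one_hot = pd.get_dummies(df["plant"], dtype=int)[["rose", "broccoli"]]

print(mean_filled.tolist())
print(median_filled.tolist())
print(len(dropped))
print(one_hot.values.tolist())
```

Whether the mean or the median is the better fill value depends on the data: the median is less sensitive to outliers, which is why the two fills differ in this toy column.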

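When converting to a tensor, passing the data read from the CSV file directly raises an error. It has to be converted to a NumPy array first and then to a tensor, so NumPy acts as a middleman that charges no fee.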
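The CSV to NumPy to tensor route mentioned above might look like the sketch below. The in-memory CSV text and its column names `a` and `b` are assumptions made so that the example runs without a file on disk; a real script would pass a file path to `pd.read_csv` instead.

```python
import io

import pandas as pd
import torch

# Hypothetical CSV content, kept in memory so the example is self-contained.
csv_text = "a,b\n1.0,3.0\n2.0,4.0\n"
df = pd.read_csv(io.StringIO(csv_text))

# Step 1: DataFrame -> NumPy array (the "middleman").
array = df.to_numpy()

# Step 2: NumPy array -> tensor. The float64 dtype of the array is preserved.
tensor = torch.tensor(array)

print(tensor)
print(tensor.dtype)
```

`torch.tensor` copies the data. `torch.from_numpy(array)` is an alternative that shares memory with the NumPy array instead of copying it.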

Link: the best teacher at Henan University (河大最牛老师)