Python网络爬虫基本流程

网络爬虫基本流程:

① 访问站点

② 定位所需的信息

③ 得到并处理信息

1 访问站点

模拟浏览器,打开目标网站。

① requests库中的get()方法

② urllib库中的requests请求模块

1.1 requests库中的get()方法

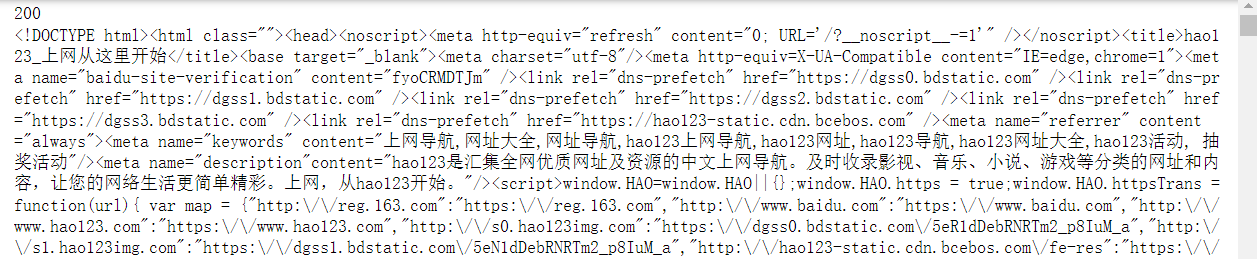

import requests

url = r'https://www.hao123.com/'

res = requests.get(url)

# 查看响应码

print(res.status_code)

# 查看响应内容,res.text 返回的是Unicode格式的数据

print (res.text)

# 查看响应内容,res.content返回的是服务器响应数据的原始二进制字节流,可以用来保存图片等二进制文件。

print (res.content)

# 查看响应头部字符编码

print (res.encoding)

1.2 urllib库中的requests请求模块

import urllib.request # 导入urllib的请求模块request

url = "https://www.hao123.com/"

# 调用urllib.request库的urlopen()方法打开网址

res = urllib.request.urlopen(url)

# 使用read()方法读取爬到的内容,并以utf-8方式编码

html = res.read().decode("utf-8")

# 打印响应的状态码

print(res.status)

print(html)

2 定位所需的信息

获取数据。打开网站之后,就可以自动化的获取我们所需要的网站数据。

规则

① 正则表达式(re模块)

② XPath

模块

③ BeautifulSoup

2.1 通过正则表达式获取数据

正则表达式的使用可参考:(15条消息) python——正则表达式(re模块)详解_nee~的博客-CSDN博客_python re 正则表达式

'''

<li class="item"><div class="border-wrap"><div class="cover shadow-cover "><a href="/ebook/21310705/?&dcs=provider-63694107-重庆大学出版社" class="pic" target="_self"><label class="cover-label vip-can-read-label"></label><img width="110px" height="165px" src="https://pic.arkread.com/cover/ebook/f/21310705.1653708337.jpg!cover_default.jpg" alt="房屋建筑学" itemprop="image" loading="lazy"></a></div><div class="info"><h4 class="title"><a href="/ebook/21310705/?&dcs=provider-63694107-重庆大学出版社" title="房屋建筑学">房屋建筑学</a></h4><div class="author"><span class="orig-author">王雪松 等</span></div><div class="rec-intro">《高等学校土木工程本科指导性专业规范配套系列教材:房屋建筑学》以文字为主,图文并茂,在内容上突出了新材料、新结构、新科技的运用,并从理论和原则上加以阐述。全书分…</div><div class="price-info "><span class="price-tag ">9.00元</span><a data-target-dialog="login" href="#" class="require-login btn-info btn-cart cart-icon-only"><i class="icon-cart"></i></a></div></div></div></li>

'''

import requests,re

url = r'https://read.douban.com/provider/63694107/'

header = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.134 Safari/537.36 Edg/103.0.1264.71'

}

res = requests.get(url,headers = header)

#print(res.text)

#book_names = re.findall(r'<li class="item">.*?<span class="orig-author">(.*?)</span>',res.text,re.S)

# 解析数据

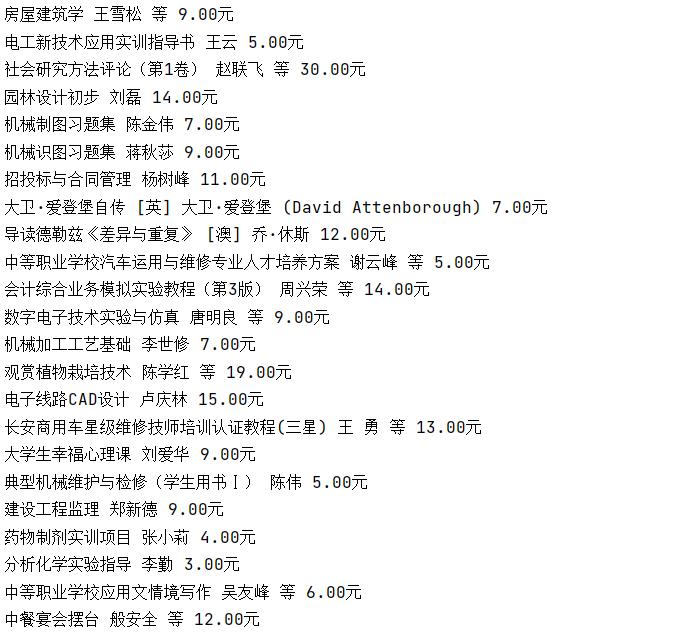

obj = re.compile(r'<li class="item">.*?<a href=".*?" title=".*?">(?P<book>.*?)</a>'

r'.*?<span class="orig-author">(?P<auther>.*?)</span>'

r'.*?<span class="price-tag ">(?P<price>.*?)</span>', re.S)

# 开始匹配

result = obj.finditer(res.text)

for it in result:

print(it.group("book"),it.group("auther"),it.group("price"))

2.2 通过XPath获取数据

xpath的使用可参考:(16条消息) python(爬虫篇)——Xpath提取网页数据_样子的木偶的博客-CSDN博客_python xpath 提取

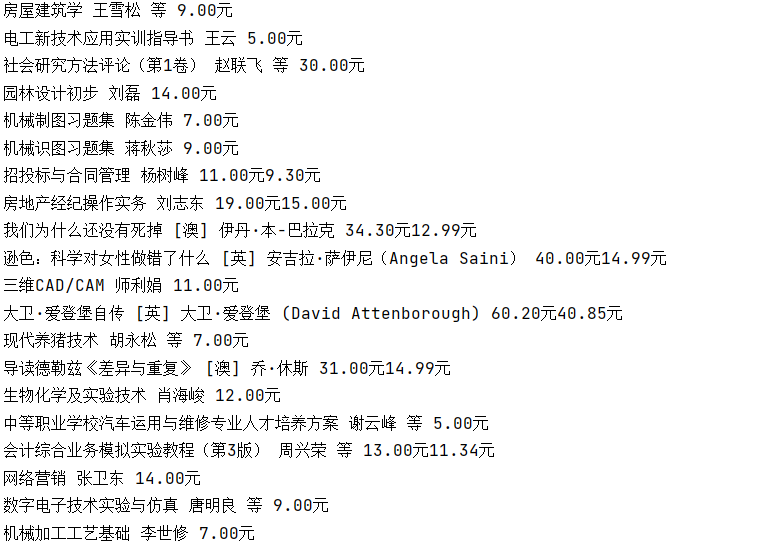

import requests

from lxml import etree

url = r'https://read.douban.com/provider/63694107/'

header = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.134 Safari/537.36 Edg/103.0.1264.71'

}

res = requests.get(url,headers = header)

#print(res.text)

html = etree.HTML(res.text)

lis = html.xpath('/html/body/div[1]/div[3]/section[2]/div[2]/ul/li')

for li in lis:

book = li.xpath('div/div[2]/h4/a/text()')

auther = li.xpath('div/div[2]/div[1]/span/text()')

price = li.xpath('div/div[2]/div[3]/span/text()')

if len(price) == 0:

price = li.xpath('div/div[2]/div[3]/span/span/text()')

print(book[0],auther[0].split()[0],price[0])

2.3 通过BeautifulSoup获取数据

import requests

from bs4 import BeautifulSoup

url = r'https://read.douban.com/provider/63694107/'

header = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.134 Safari/537.36 Edg/103.0.1264.71'

}

res = requests.get(url,headers = header)

#print(res.text)

soup = BeautifulSoup(res.text,'html.parser')

books = soup.findAll(class_="title")

authers = soup.findAll('span',class_="orig-author")

prices = soup.findAll(class_="price-tag")

for book,auther,price in zip(books,authers,prices):

print(book.text,auther.text,price.text)

3 保存数据

拿到数据之后,需要持久化到本地文件或者数据库等存储设备中。

3.1 保存到 csv 文件

with open('heros.xlsx', 'a', encoding='utf-8') as f:

f.write('{} {} {} {}\n'.format(hero['ename'][i], hero['cname'][i], hero['title'][i], hero['bg'][i]))

f.close()

3.2 保存到数据库

# 连接数据库

db = pymysql.connect(host='127.0.0.1', user='root', password='123456', database='hero')

conn = db.cursor() # 获取指针以操作数据库

sql = f'insert into cart values('学号',姓名,5)'

conn.execute(sql)

db.commit()