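After using the simple convolutional neural network LeNet-5 for MNIST digit recognition, we can move on to harder recognition tasks, for example a classification problem where the dataset is small but the images are difficult to classify. How can we get reasonably good results in that situation? This post introduces transfer learning.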

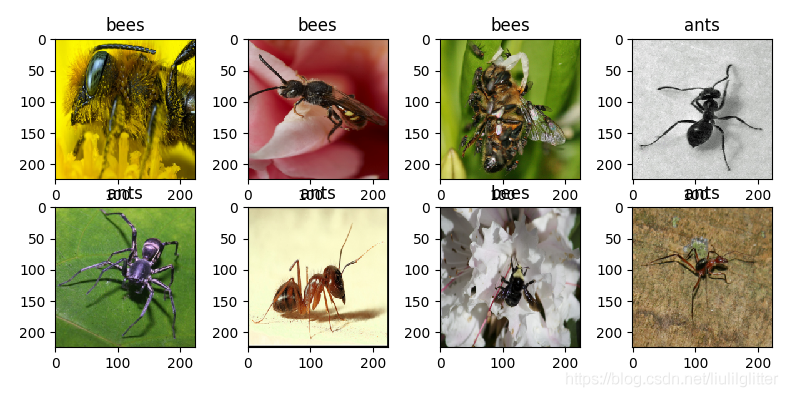

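The task here is binary classification: telling ants from bees. The dataset has only about 300 images, and they vary widely in content and style. For a task like this, with very little data but a relatively hard recognition problem, transfer learning is a natural choice. (The dataset can be downloaded from https://github.com/jaddoescad/ants_and_bees.)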

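We use VGG16. Specifically, the VGG16 network already pretrained in PyTorch (torchvision) serves as the base network: we leave the parameters of its feature-extraction layers unchanged and update only the parameters of the fully connected layers that follow.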
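The core mechanism, freezing one part of a network while the optimizer updates another, can be checked on a toy model before VGG16 enters the picture. This is a minimal sketch: `backbone` and `head` are hypothetical stand-ins for VGG16's `features` and `classifier`, and the sketch verifies that one Adam step leaves the frozen part untouched while the head changes.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy network: "backbone" stands in for VGG16's features,
# "head" stands in for its classifier.
backbone = nn.Sequential(nn.Linear(8, 16), nn.ReLU())
head = nn.Linear(16, 2)
model = nn.Sequential(backbone, head)

# Freeze the backbone: autograd will not compute gradients for these parameters.
for p in backbone.parameters():
    p.requires_grad = False

# Hand only the trainable (head) parameters to the optimizer.
optimizer = torch.optim.Adam(
    [p for p in model.parameters() if p.requires_grad], lr=1e-2
)

backbone_before = [p.detach().clone() for p in backbone.parameters()]
head_before = [p.detach().clone() for p in head.parameters()]

# One ordinary training step on random data.
x = torch.randn(4, 8)
y = torch.tensor([0, 1, 0, 1])
loss = nn.CrossEntropyLoss()(model(x), y)
optimizer.zero_grad()
loss.backward()
optimizer.step()

backbone_unchanged = all(
    torch.equal(a, b) for a, b in zip(backbone_before, backbone.parameters())
)
head_changed = any(
    not torch.equal(a, b) for a, b in zip(head_before, head.parameters())
)
print(backbone_unchanged, head_changed)  # True True
```

Frozen parameters never receive a `.grad`, so there is nothing for the optimizer to apply to them; this is the same effect the VGG16 version relies on.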

As before, the first step is loading and preprocessing the dataset:

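```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from torchvision import transforms, datasets, models

transform = transforms.Compose([
    transforms.Resize((224, 224)),  # a (h, w) tuple resizes every image to exactly 224x224
    transforms.ToTensor(),          # PIL image -> float tensor in [0, 1], shape (3, H, W)
    # Map [0, 1] to [-1, 1] per channel. torchvision's ImageNet-pretrained models are
    # more commonly paired with mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225).
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])

training_datasets = datasets.ImageFolder('./data/ants_and_bees/train', transform=transform)
validation_datasets = datasets.ImageFolder('./data/ants_and_bees/val', transform=transform)

training_loader = torch.utils.data.DataLoader(dataset=training_datasets, batch_size=10, shuffle=True)
print(len(training_loader))  # number of batches per epoch

validation_loader = torch.utils.data.DataLoader(dataset=validation_datasets, batch_size=20, shuffle=False)
```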

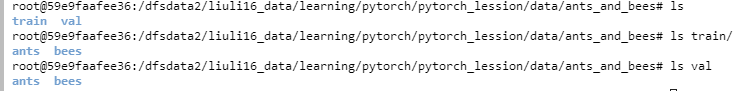

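`torchvision.datasets.ImageFolder` automatically packs images that are sorted into separate folders into a dataset and labels each image according to its folder. The `ants_and_bees` folder contains `train` and `val` subfolders, and each of them contains an `ants` folder and a `bees` folder.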

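Both `ants` and `bees` contain many images. `ImageFolder` loads all of them and assigns class 0 to ants and class 1 to bees, because class indices follow the alphabetical order of the folder names.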

Next we load the VGG network. We first download VGG16 together with its pretrained parameters and print its full structure:

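```python
# Download VGG16 together with its ImageNet-pretrained weights.
# (torchvision < 0.13 uses models.vgg16(pretrained=True) instead.)
model = models.vgg16(weights=models.VGG16_Weights.IMAGENET1K_V1)
print(model)
```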

The full structure of VGG16 is:

```
VGG(
  (features): Sequential(
    (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU(inplace=True)
    (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (3): ReLU(inplace=True)
    (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (6): ReLU(inplace=True)
    (7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (8): ReLU(inplace=True)
    (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (11): ReLU(inplace=True)
    (12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (13): ReLU(inplace=True)
    (14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (15): ReLU(inplace=True)
    (16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (18): ReLU(inplace=True)
    (19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (20): ReLU(inplace=True)
    (21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (22): ReLU(inplace=True)
    (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (25): ReLU(inplace=True)
    (26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (27): ReLU(inplace=True)
    (28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (29): ReLU(inplace=True)
    (30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (avgpool): AdaptiveAvgPool2d(output_size=(7, 7))
  (classifier): Sequential(
    (0): Linear(in_features=25088, out_features=4096, bias=True)
    (1): ReLU(inplace=True)
    (2): Dropout(p=0.5, inplace=False)
    (3): Linear(in_features=4096, out_features=4096, bias=True)
    (4): ReLU(inplace=True)
    (5): Dropout(p=0.5, inplace=False)
    (6): Linear(in_features=4096, out_features=1000, bias=True)
  )
)
```

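The model consists of three parts: the `features` block, followed by `avgpool`, and finally the `classifier`. Next we adapt VGG16 to our own task. Two changes are needed: 1. freeze the parameters of the `features` module by setting their `requires_grad` to `False`; 2. turn the output into a 2-class prediction.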

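```python
import torch
import torch.nn as nn
from torchvision import models


class VGG16(nn.Module):
    def __init__(self, feature_freeze=True, num_classes=2):
        super().__init__()  # required before assigning submodules
        # ImageNet-pretrained VGG16 (torchvision < 0.13: models.vgg16(pretrained=True))
        model = models.vgg16(weights=models.VGG16_Weights.IMAGENET1K_V1)
        # Reuse the pretrained convolutional feature extractor and pooling layer.
        self.features = model.features
        self.avgpool = model.avgpool
        # New, randomly initialized classifier head with num_classes outputs.
        self.classifier = nn.Sequential(
            nn.Linear(in_features=25088, out_features=4096, bias=True),
            nn.ReLU(),
            nn.Linear(in_features=4096, out_features=4096, bias=True),
            nn.ReLU(),
            nn.Linear(in_features=4096, out_features=num_classes, bias=True),
        )
        self.set_parameters_freeze(self.features, feature_freeze)

    def forward(self, x):
        features_output = self.features(x)                   # (N, 512, 7, 7) for 224x224 input
        features_output = self.avgpool(features_output)
        features_output = torch.flatten(features_output, 1)  # (N, 25088)
        pred = self.classifier(features_output)
        return pred

    def set_parameters_freeze(self, model, flag):
        # With flag=True, stop gradient computation for every parameter of `model`.
        if flag:
            for params in model.parameters():
                params.requires_grad = False
```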

Now we can print the model to check the result of the changes:

```python
# torch.device, not a set literal: {...} would create a Python set
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
model = VGG16().to(device)
print(model)
```

Output:

```
VGG16(
  (features): Sequential(
    (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU(inplace=True)
    (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (3): ReLU(inplace=True)
    (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (6): ReLU(inplace=True)
    (7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (8): ReLU(inplace=True)
    (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (11): ReLU(inplace=True)
    (12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (13): ReLU(inplace=True)
    (14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (15): ReLU(inplace=True)
    (16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (18): ReLU(inplace=True)
    (19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (20): ReLU(inplace=True)
    (21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (22): ReLU(inplace=True)
    (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (25): ReLU(inplace=True)
    (26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (27): ReLU(inplace=True)
    (28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (29): ReLU(inplace=True)
    (30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (avgpool): AdaptiveAvgPool2d(output_size=(7, 7))
  (classifier): Sequential(
    (0): Linear(in_features=25088, out_features=4096, bias=True)
    (1): ReLU()
    (2): Linear(in_features=4096, out_features=4096, bias=True)
    (3): ReLU()
    (4): Linear(in_features=4096, out_features=2, bias=True)
  )
)
```

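As the output shows, the last layer now has 2 outputs. If you print `requires_grad` for the parameters in `features`, you will also find that they are all `False`.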

Finally, we train the model:

```python
import os

# model, device, the loaders and the datasets come from the code above.
criterion = nn.CrossEntropyLoss()
# Only the classifier parameters require gradients, so only they go to the optimizer.
optimizer = torch.optim.Adam(
    [p for p in model.parameters() if p.requires_grad], lr=0.0001
)

epochs = 10
losses = []
val_losses = []
val_accs = []

ckpt_dir = './convolution_checkpoint'
os.makedirs(ckpt_dir, exist_ok=True)

for i in range(epochs):
    # ---- training ----
    model.train()
    running_loss = 0.0
    running_correct = 0
    for inputs, labels in training_loader:
        inputs = inputs.to(device)
        labels = labels.to(device)

        y_pred = model(inputs)
        loss = criterion(y_pred, labels)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        _, preds = torch.max(y_pred, 1)
        running_correct += (preds == labels).sum().item()
        # loss.item() is the batch mean, so weight it by the batch size
        running_loss += loss.item() * inputs.size(0)

    epoch_loss = running_loss / len(training_datasets)
    epoch_acc = running_correct / len(training_datasets)
    losses.append(epoch_loss)

    # ---- validation ----
    model.eval()
    val_running_loss = 0.0
    val_running_correct = 0
    with torch.no_grad():
        for val_inputs, val_labels in validation_loader:
            val_inputs = val_inputs.to(device)
            val_labels = val_labels.to(device)

            val_pred = model(val_inputs)
            valloss = criterion(val_pred, val_labels)

            _, preds = torch.max(val_pred, 1)
            val_running_correct += (preds == val_labels).sum().item()
            val_running_loss += valloss.item() * val_inputs.size(0)

    val_loss = val_running_loss / len(validation_datasets)
    val_acc = val_running_correct / len(validation_datasets)
    val_losses.append(val_loss)
    val_accs.append(val_acc)

    print(i, 'training loss:', epoch_loss, 'training acc:', epoch_acc)
    print(i, 'validation loss:', val_loss, 'validation acc:', val_acc)

    # Redraw the loss curves after every epoch.
    plt.close()
    plt.plot(losses, label='training_loss')
    plt.plot(val_losses, label='validation_loss')
    plt.legend()
    plt.savefig('loss.png')

    # Save one checkpoint per epoch.
    PATH = os.path.join(ckpt_dir, 'model' + str(i) + '.pth')
    torch.save(model.state_dict(), PATH)
```
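The validation part of the loop can be factored into a reusable helper. The sketch below is one way to do it; `evaluate` is a hypothetical name, and the usage lines check it on a toy linear model with random data rather than on VGG16. Weighting each batch loss by its batch size makes the result equal to the loss over the whole dataset at once, which the last line verifies.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, loader, criterion, device):
    """Hypothetical helper: return (mean per-sample loss, accuracy) over loader."""
    model.eval()  # disables Dropout; BatchNorm (if any) uses running statistics
    total_loss, correct, count = 0.0, 0, 0
    with torch.no_grad():
        for inputs, labels in loader:
            inputs, labels = inputs.to(device), labels.to(device)
            outputs = model(inputs)
            # criterion returns the batch mean, so re-weight by batch size
            total_loss += criterion(outputs, labels).item() * inputs.size(0)
            correct += (outputs.argmax(dim=1) == labels).sum().item()
            count += inputs.size(0)
    return total_loss / count, correct / count


# Toy check with a hypothetical 2-class linear model on random data.
torch.manual_seed(0)
X = torch.randn(30, 4)
Y = torch.randint(0, 2, (30,))
toy_model = nn.Linear(4, 2)
loss, acc = evaluate(toy_model, DataLoader(TensorDataset(X, Y), batch_size=20),
                     nn.CrossEntropyLoss(), torch.device("cpu"))

with torch.no_grad():
    full_loss = nn.CrossEntropyLoss()(toy_model(X), Y).item()
print(0.0 <= acc <= 1.0, abs(loss - full_loss) < 1e-5)  # True True
```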

In the end, the validation accuracy reaches about 90%, an accuracy that is very hard to reach on this dataset without transfer learning.